Introduction

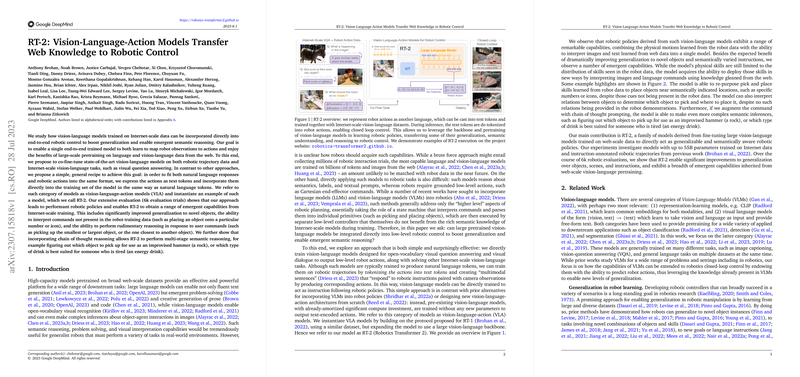

High-capacity models pretrained on vast web-scale datasets have exhibited effective and powerful downstream task performance. Incorporating such models directly into end-to-end robotic control has the potential to vastly improve generalization and enable emergent semantic reasoning. This paper probes the extent to which large-scale pretrained vision-LLMs (VLMs) can be integrated into robotic control, proposing a framework to train vision-language-action (VLA) models capable of interpreting and acting upon both linguistic and visual cues in the context of robotics.

Methodology

The core hypothesis tested is the practicality of co-fine-tuning state-of-the-art VLMs on robotic trajectory data alongside Internet-scale vision-language tasks. This entails a training paradigm where robotic actions are tokenized and added to the training set, treated comparably to natural language tokens. The VLA model can then output textual action sequences in response to prompts, which the robot interprets and enacts. The RT-2 model, an instantiation of such VLA architecture, is then rigorously evaluated across thousands of trials to validate its performance and emergent capabilities, such as improved generalization, command interpretation, and basic semantic reasoning.

Related Work and Findings

The investigation is situated within a landscape of recent works attempting to blend LLMs and VLMs into robotics. While these methods generally excel at high-level planning, they often fail to leverage the rich semantic knowledge during training for lower-level tasks. RT-2's approach is contrasted with those alternatives through its innovative real-time inference protocol and training strategy. Remarkably, RT-2 models demonstrate preferable generalization to novel objects, environments, and semantically varied instructions, significantly outperforming similar robotics-focused models.

Limitations and Future Work

Despite RT-2's promising abilities, the approach retains limitations such as its reliance on the range of physical skills present in the training robot data and the computational costs associated with running large models. Future research directions include potential solutions such as model quantization and distillation to improve efficiency. Moreover, greater availability of open-sourced VLMs and the ability to train on a broader array of data, including human demonstrations, could extend RT-2's generalizability and skillset further.

In conclusion, RT-2 suggests a powerful avenue through which robust pretraining on language and vision can enhance robotic control. By effectively transferring web-scale pretraining to robotic manipulation, RT-2 sets a benchmark for future vision-language-action models in the robotics domain.