- The paper introduces the CWMI framework that integrates a Causal Physics Module (CPM) to infuse causal understanding into LLMs.

- It employs dual loss functions, L_pred and L_causal, achieving 94.1% accuracy on PhysiCa-Bench and outperforming state-of-the-art models.

- Ablation studies confirm the necessity of both observational accuracy and causal reasoning for robust zero-shot physical reasoning.

Inducing Causal World Models in LLMs for Zero-Shot Physical Reasoning

Introduction

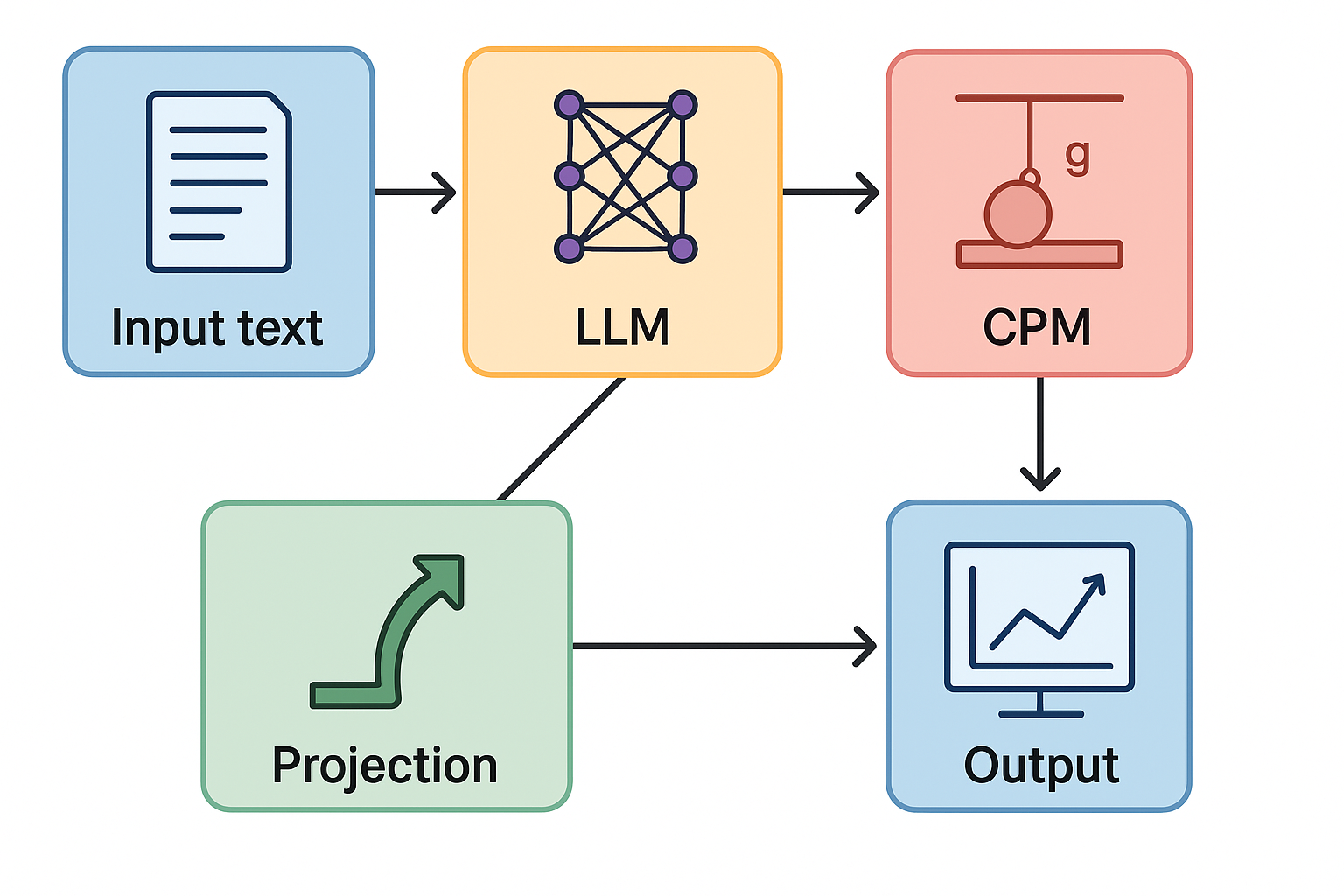

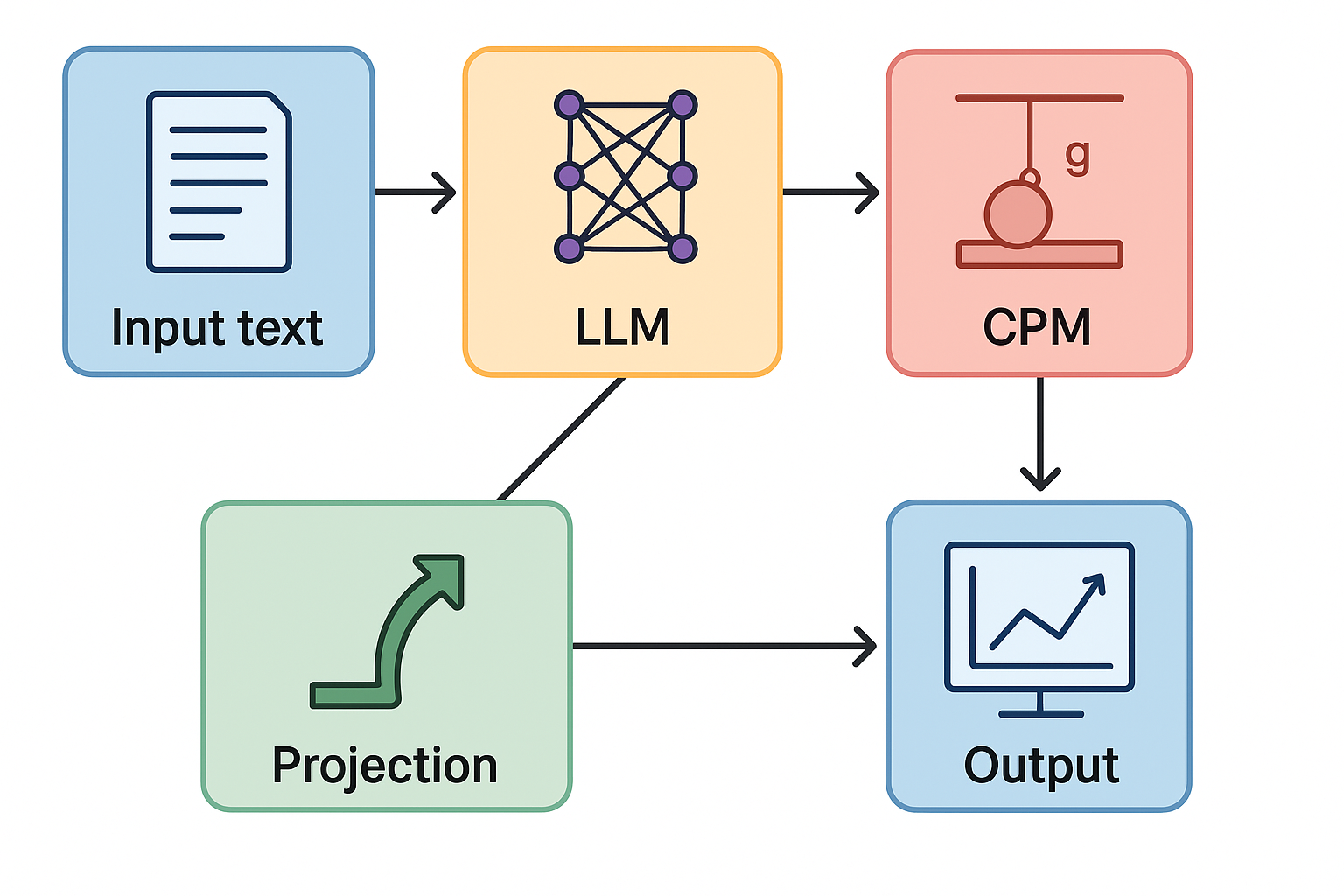

The paper "Inducing Causal World Models in LLMs for Zero-Shot Physical Reasoning" addresses the fundamental limitation of LLMs in understanding physical dynamics essential for causal reasoning. This work introduces the Causal World Model Induction (CWMI) framework, which embeds a specialized Causal Physics Module (CPM) into an LLM, thereby embedding explicit models of causal physics. The approach leverages a Causal Intervention Loss to enhance the learning of cause-and-effect relationships, enabling the model to predict hypothetical interventions, thereby attaining a robust internal representation of physical laws.

Figure 1: Rich, icon‑enhanced flowchart of the CWMI framework. The diagram illustrates the sequence of operations—from “Input Text” through “LLM Encoding,” “Projection Layer,” “Causal Physics Module,” to final “Output”—with color-coded modules.

Methodology

System Architecture

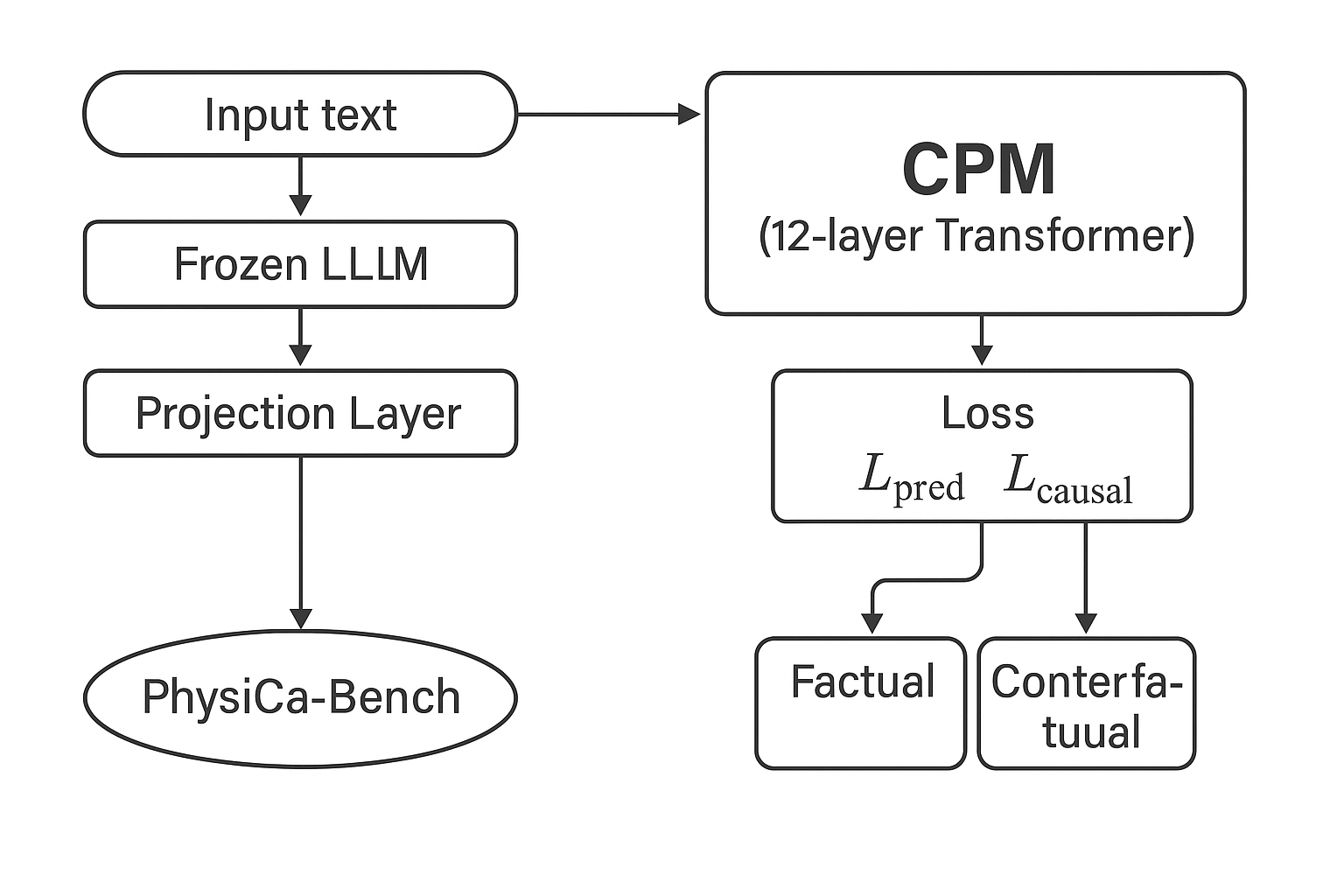

The CWMI framework consists of a frozen LLM and a dynamically trainable CPM. The LLM serves as a linguistic interface, converting text into semantic representations. These representations initialize the CPM, which then simulates physical interactions to predict future states.

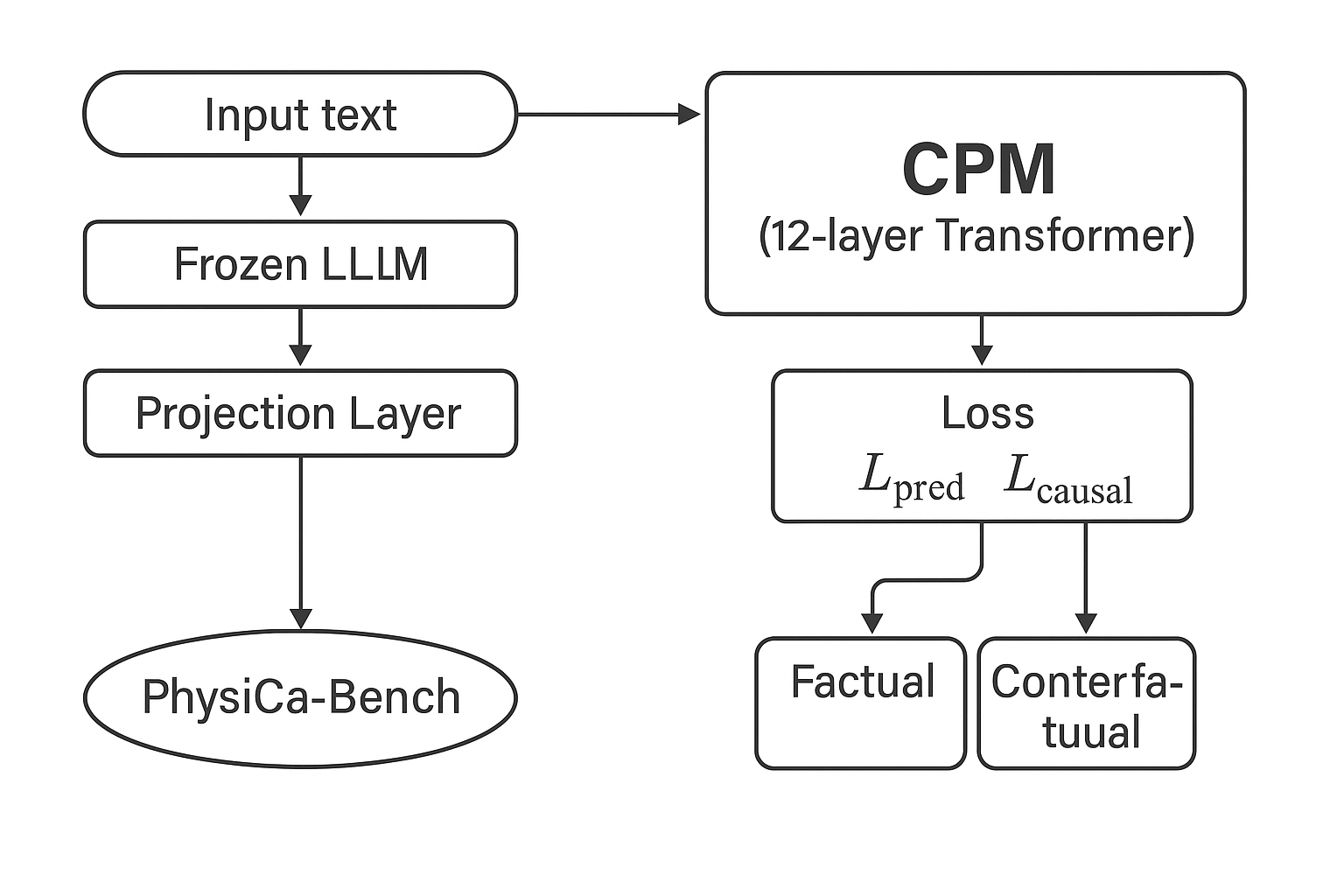

Figure 2: The overall architecture of the Causal World Model Induction (CWMI) framework.

The CPM, structured as a Transformer decoder, utilizes self-attention mechanisms to model interactions. It predicts final states by simulating temporal evolution under the governing causal laws. Training involves backpropagating through a composite loss combining predictive accuracy and causal inference.

Causal Induction and Loss

The CWMI utilizes a dual-function loss:

- State Prediction Loss (Lpred): Anchors model predictions to observed realities using an MSE between predicted and ground-truth states.

- Causal Intervention Loss (Lcausal): Focuses on learning causal mechanisms by observing the effects of interventions, using factual and counterfactual scenarios from the PhysiCa-Bench dataset.

Experimental Evaluation

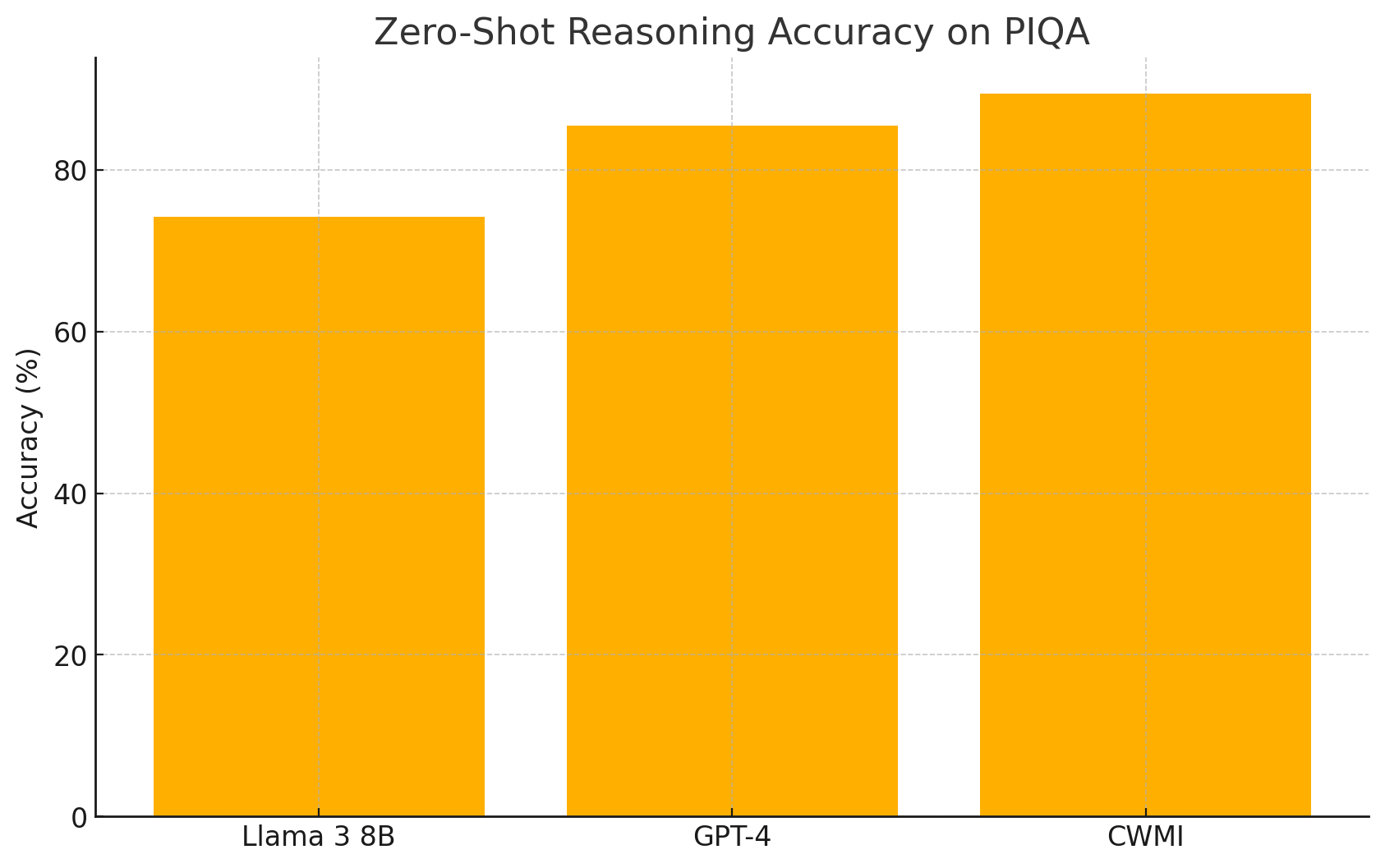

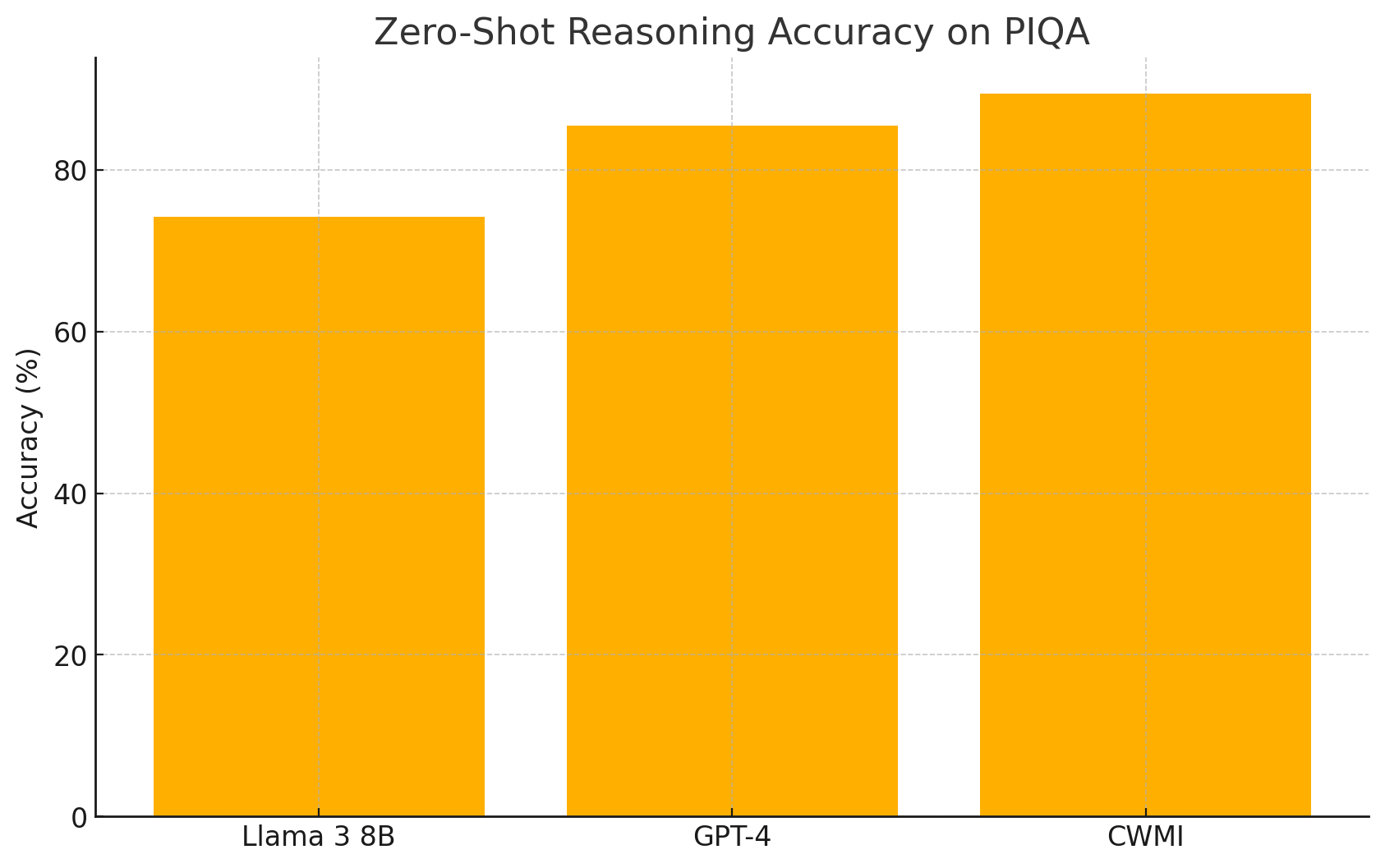

The CWMI framework excels in zero-shot settings, outperforming state-of-the-art models on both PIQA and PhysiCa-Bench benchmarks.

Figure 3: Zero-Shot Reasoning Accuracy on PIQA.

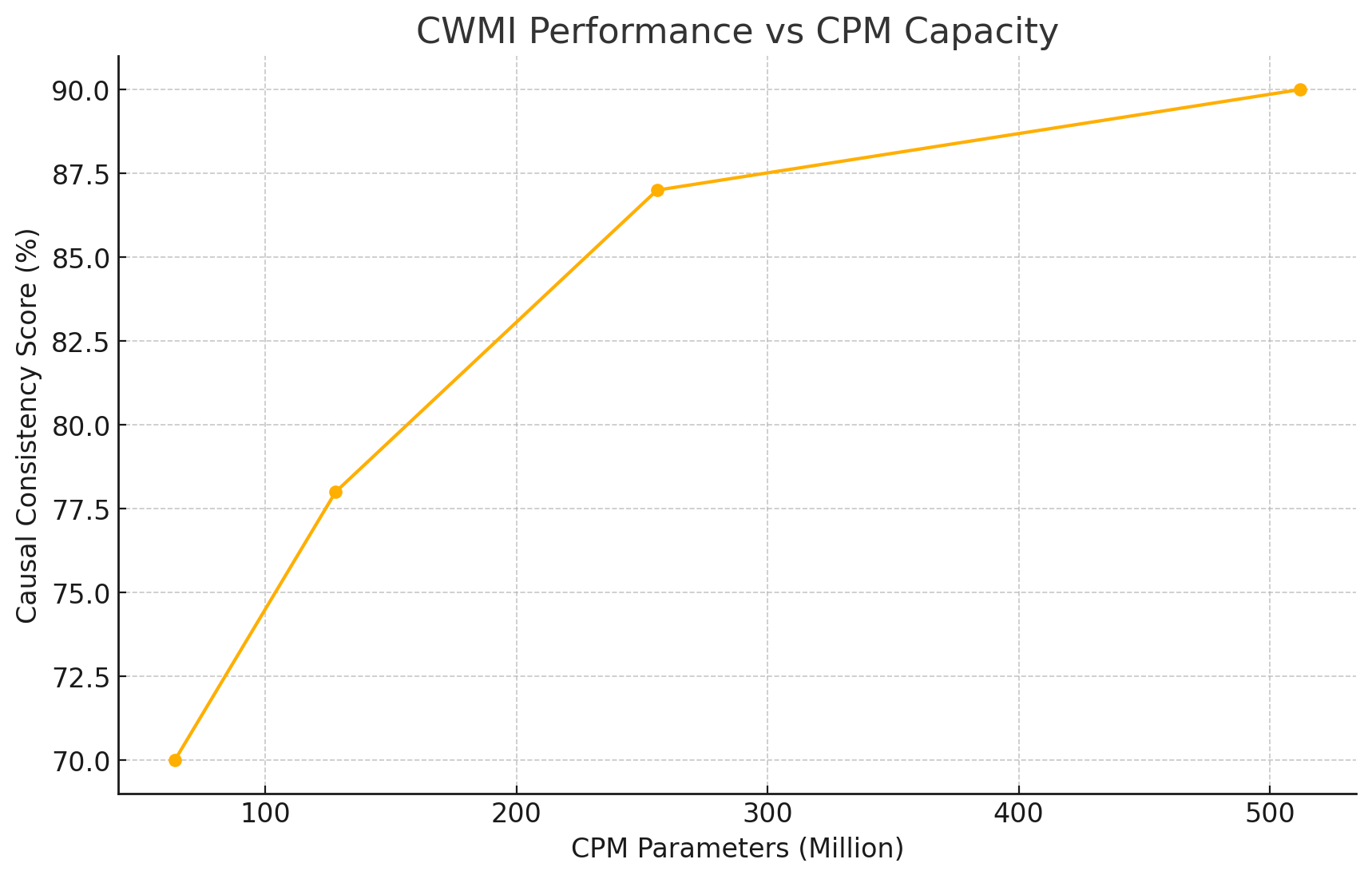

CWMI achieved a 94.1% accuracy on PhysiCa-Bench with an FSPA of 0.08 and a Causal Consistency Score (CCS) of 87.6%, significantly outpacing GPT-4, highlighting the framework's robust causal reasoning capabilities.

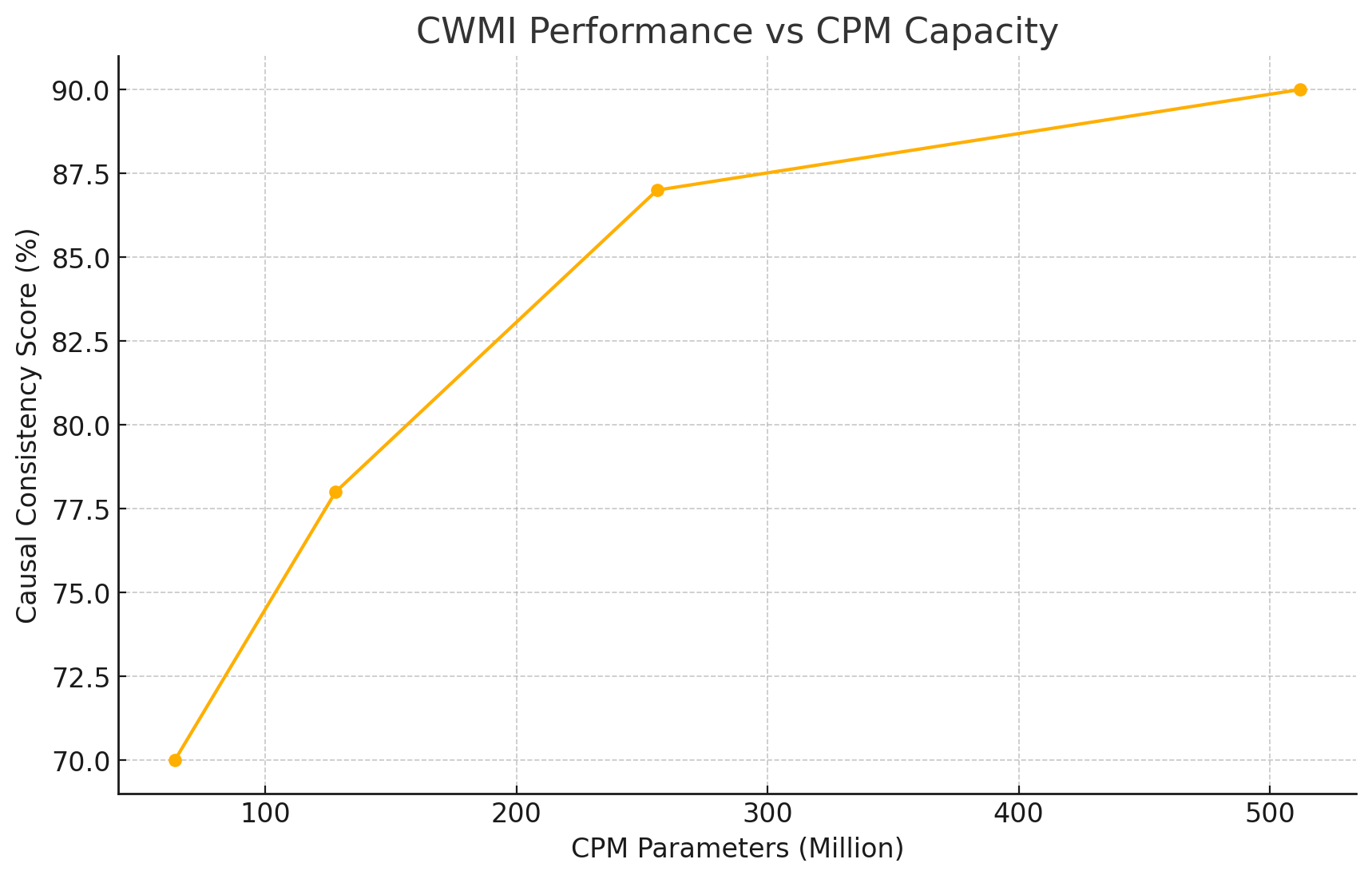

Figure 4: CWMI Performance vs CPM Capacity: Causal Consistency Score

Ablation Studies

Ablations confirm the necessity of each component. Without Lcausal, causal reasoning drops drastically, indicating its centrality. Conversely, omitting Lpred results in ungrounded predictions, emphasizing its role in ensuring observational accuracy. The architectural separation between the LLM and CPM is validated by the abysmal performance of a model lacking the CPM.

Implications and Future Directions

CWMI significantly advances AI towards achieving robust physical reasoning by instilling a causal understanding within LLMs. Future explorations could focus on expanding CPM to handle complex physics like fluid dynamics and improving multi-object interaction handling. This work signifies a pivotal step towards creating LLMs capable of not just understanding, but reasoning about the physical world effectively.

Conclusion

The introduction of CWMI demonstrates a method for overcoming LLM limitations in physical reasoning by employing a causal world model. This framework shows notable improvements in understanding and predicting physical interactions in a zero-shot context, setting a new standard in AI's capability to integrate language and causal reasoning effectively.