Causal Reasoning and LLMs: A Critical Analysis

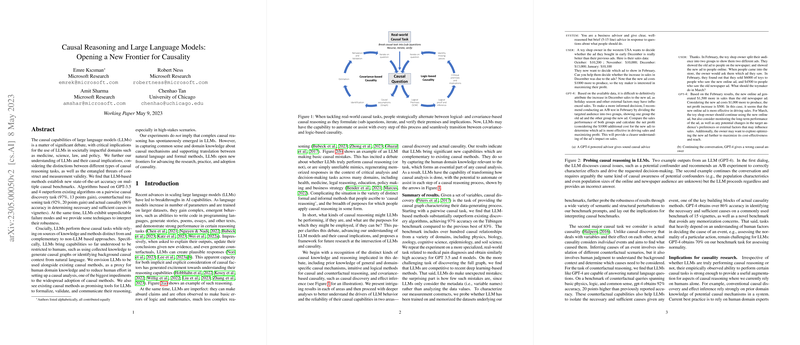

The paper "Causal Reasoning and Large Language Models: Opening a New Frontier for Causality" examines the causal capabilities of large language models (LLMs) in depth. The authors assess how well LLMs handle causal reasoning tasks, reporting results, challenges, and implications for both the practice and the theory of causality.

Summary of Findings

The paper presents experiments showing that LLM-based methods reach new best accuracies on several causal reasoning tasks, including pairwise causal discovery, counterfactual reasoning, and actual causality assessment. Specifically, algorithms built on GPT-3.5 and GPT-4 outperform existing methods by substantial margins:

- In pairwise causal discovery tasks, the models achieve 97% accuracy, a 13-point improvement over the previous best methods.

- For counterfactual reasoning, the models attain 92% accuracy, a 20-point gain.

- In determining necessary and sufficient causes in vignettes, the models reach 86% accuracy.
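To make the pairwise causal discovery metric concrete, here is a minimal sketch of how such an evaluation could be scored. It is an illustration under stated assumptions, not the authors' code: the prompt wording, the helper names (`build_prompt`, `parse_answer`, `pairwise_accuracy`), and the `ask` callable that stands in for a model call are all hypothetical.

```python
from typing import Callable, List, Tuple

# Hypothetical prompt wording; the paper's actual prompts may differ.
PROMPT = (
    "Which cause-and-effect relationship is more likely?\n"
    "A. {x} causes {y}.\n"
    "B. {y} causes {x}.\n"
    "Answer with a single letter, A or B."
)


def build_prompt(x: str, y: str) -> str:
    """Format one variable pair as a two-choice question."""
    return PROMPT.format(x=x, y=y)


def parse_answer(reply: str) -> str:
    """Return 'A' or 'B', or '' when the reply does not start with either."""
    text = reply.strip().upper()
    return text[0] if text[:1] in ("A", "B") else ""


def pairwise_accuracy(
    pairs: List[Tuple[str, str, str]],
    ask: Callable[[str], str],
) -> float:
    """Fraction of pairs where the parsed answer matches the label.

    Each pair is (x, y, truth), with truth 'A' if x causes y and 'B' otherwise.
    `ask` is an assumed stand-in for whatever sends a prompt to a model.
    """
    if not pairs:
        return 0.0
    correct = sum(
        parse_answer(ask(build_prompt(x, y))) == truth for x, y, truth in pairs
    )
    return correct / len(pairs)
```

Unparseable replies count as wrong here, which is one possible design choice; an evaluation could instead retry or report them separately.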

Implications and Insights

These results suggest that LLMs have strong capabilities for causal reasoning and that they draw on knowledge sources and methods complementary to traditional non-LLM approaches. These include abilities previously attributed exclusively to humans, such as generating causal graphs and identifying background causal context from natural language.

While the performance metrics demonstrate promise, the paper also identifies critical failure modes for LLMs, emphasizing the unpredictability of these models in certain causal tasks. The paper points out that although LLMs draw from human-like reasoning processes, their outputs cannot be wholly relied upon without further verification and refinement.

Practical Applications

The authors envision LLMs playing a significant role in domains such as medicine, science, law, and policy by integrating with existing causal methods. They propose that LLMs can augment human capabilities, acting as proxies for domain knowledge and reducing the effort required to set up causal analyses. Lowering this human-effort barrier could foster broader adoption of causal methods across diverse fields.

Furthermore, the paper suggests that LLMs may facilitate the formalization, validation, and communication of causal reasoning, making them viable tools for structured causal analysis, especially in high-stakes environments. The integration of LLMs into causal workflows could enhance efficiency and accuracy in deriving causal insights from complex datasets.
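One way to picture the validation step is a structural check on LLM-proposed relationships before they feed a downstream causal analysis. The sketch below is an assumption-laden illustration rather than a method described in the paper: it takes a list of (cause, effect) pairs, however they were obtained, and uses the Python standard library's `graphlib` to confirm that they form a directed acyclic graph. The function names `build_graph` and `causal_order` are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter
from typing import Dict, List, Optional, Set, Tuple


def build_graph(edges: List[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Map each variable to the set of its proposed direct causes."""
    parents: Dict[str, Set[str]] = {}
    for cause, effect in edges:
        parents.setdefault(cause, set())
        parents.setdefault(effect, set()).add(cause)
    return parents


def causal_order(edges: List[Tuple[str, str]]) -> Optional[List[str]]:
    """Return an ordering with causes before effects, or None on a cycle.

    A None result signals that the proposed edges contradict each other
    and need human review before any further analysis.
    """
    try:
        return list(TopologicalSorter(build_graph(edges)).static_order())
    except CycleError:
        return None
```

For example, `causal_order([("smoking", "tar"), ("tar", "cancer")])` yields an ordering that places each cause before its effect, while a contradictory pair such as `[("a", "b"), ("b", "a")]` returns `None`.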

Theoretical Implications

On a theoretical level, the research underscores that while LLMs exhibit advanced reasoning, these abilities do not necessarily imply the spontaneous emergence of complex causal reasoning. Rather, LLMs may be mimicking patterns in their training data, which is drawn from vast corpora of human text.

The authors encourage further investigation into the mechanisms behind LLMs' causal reasoning capabilities, asserting that future research should focus on improving the robustness and interpretability of LLMs when applied to causal reasoning. Such advancements could pave the way for more reliable and verifiable use of LLMs in both automated and human-assisted causal analyses.

Future Directions

Looking ahead, the paper speculates on diverse future research avenues, including enhancing collaboration between humans and LLMs in causal reasoning tasks, exploring LLMs' applications in more intricate causal inference scenarios, and systematically integrating LLM capabilities within existing causal frameworks. Additionally, refining LLMs' ability to handle nuances in causal queries, managing failure modes, and bolstering their reliability in real-world applications are highlighted as essential goals.

In conclusion, the paper presents compelling evidence that LLMs, particularly models akin to GPT-3.5 and GPT-4, are opening new frontiers in the study and application of causality. If these capabilities are better understood and leveraged, LLMs stand to significantly influence the landscape of causal analysis, offering novel tools that both enhance and complement traditional causal reasoning approaches.