- The paper introduces PhysicsArena, a novel multimodal benchmark that evaluates variable identification, process formulation, and solution derivation in physics reasoning.

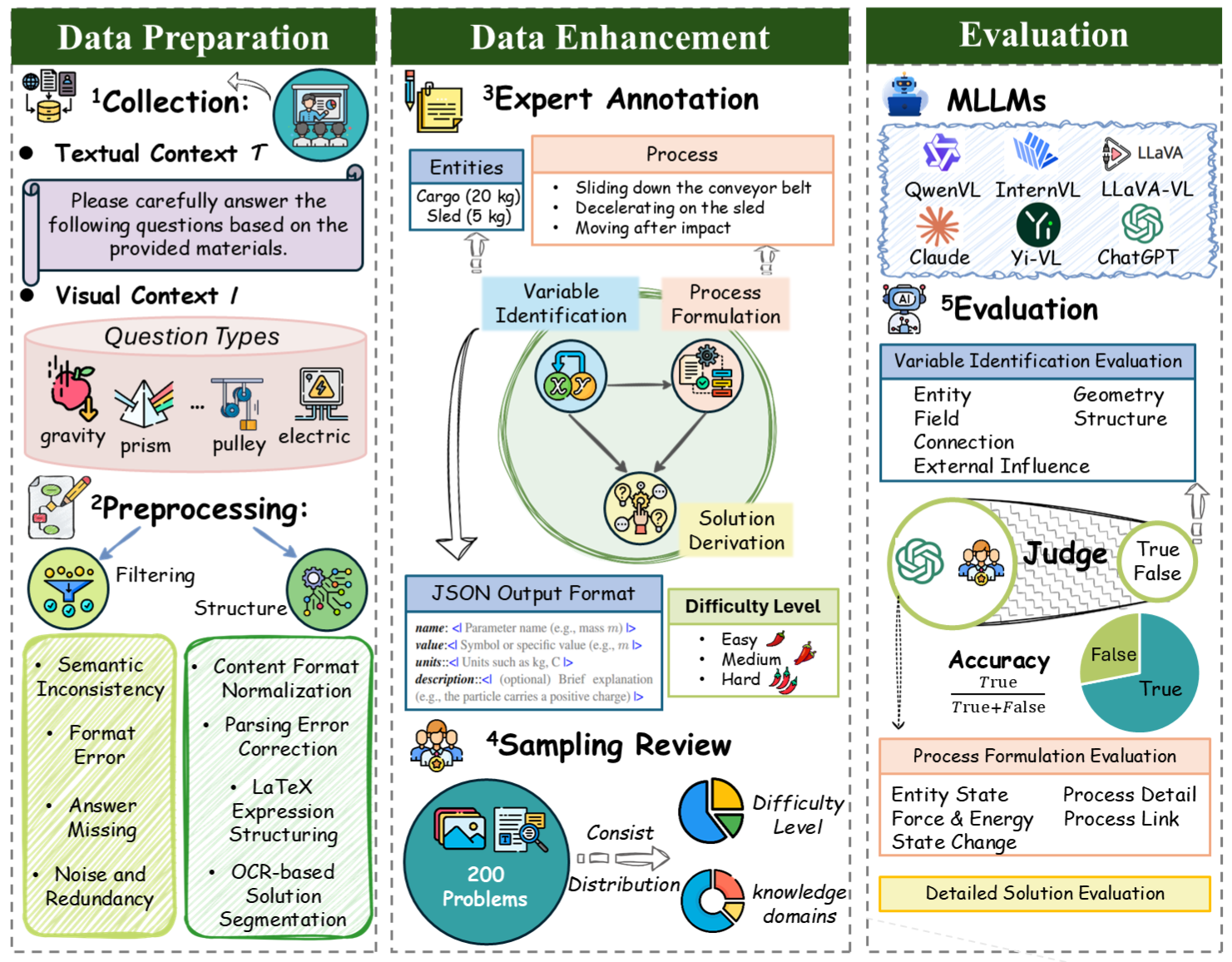

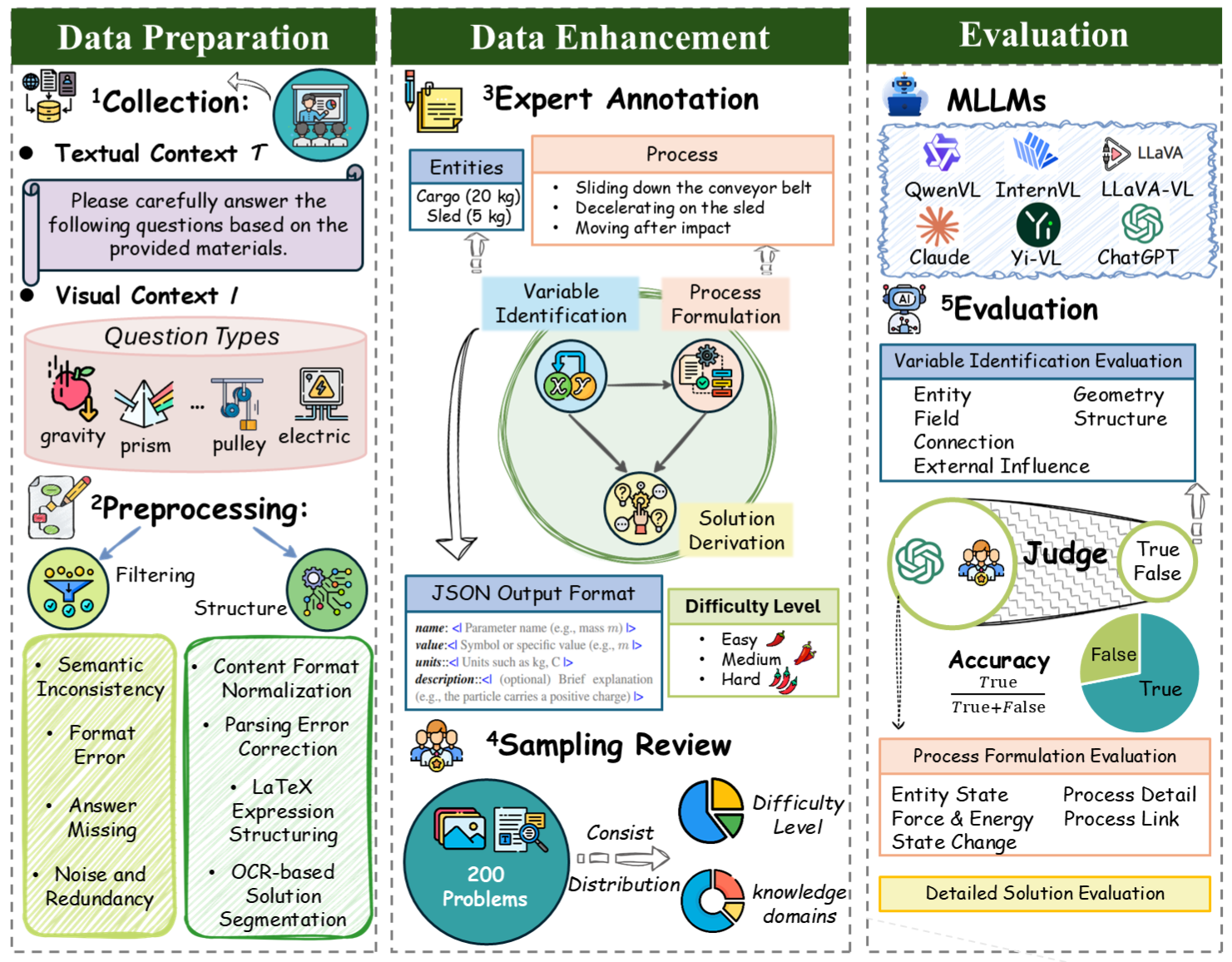

- It employs rigorous data collection, preprocessing, and AI-assisted annotation to integrate text and visual inputs, ensuring diverse and high-quality benchmark challenges.

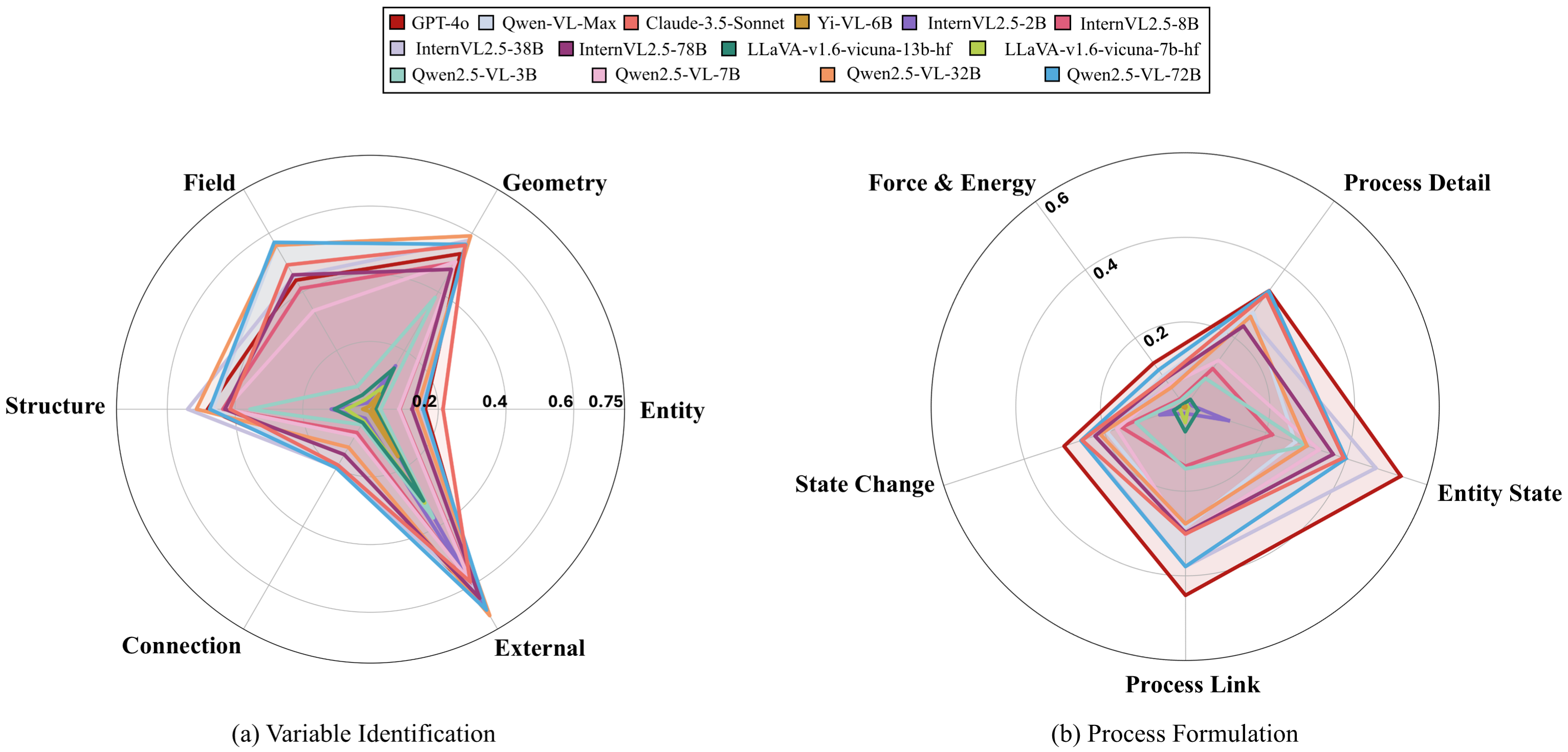

- The benchmark reveals that even state-of-the-art MLLMs struggle with complex physics tasks, highlighting the need for improved reasoning and model training approaches.

PhysicsArena: The First Multimodal Physics Reasoning Benchmark Exploring Variable, Process, and Solution Dimensions

Introduction

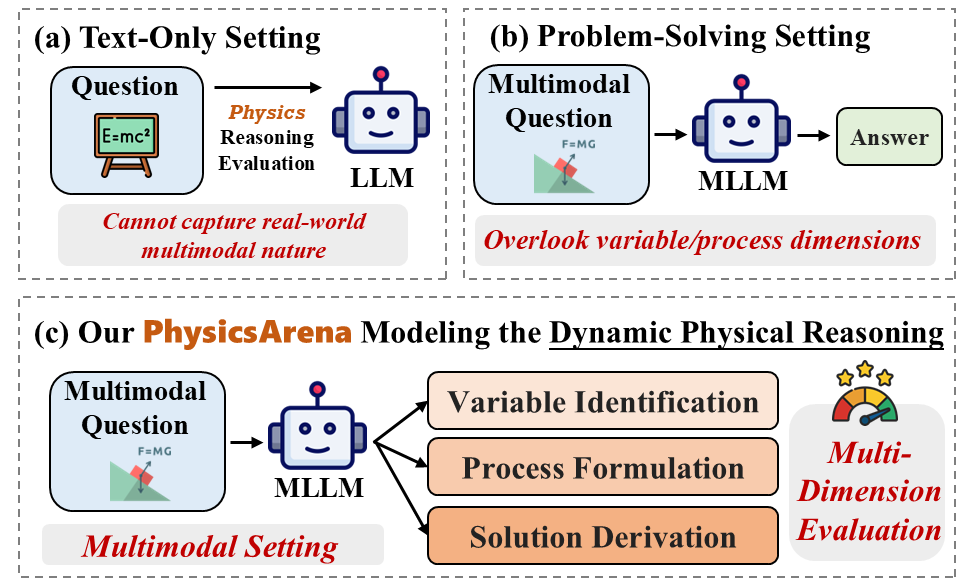

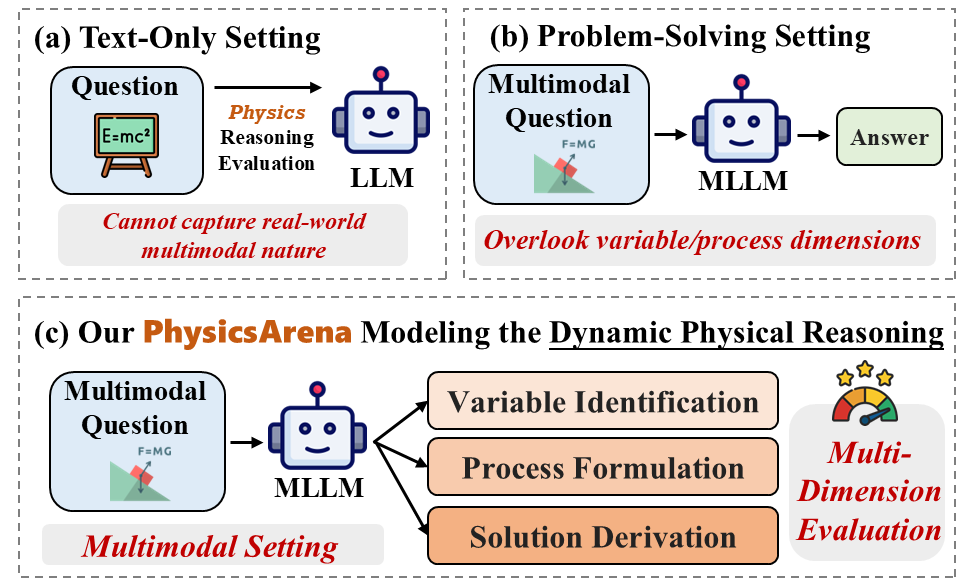

The PhysicsArena paper introduces a benchmark for evaluating, and ultimately improving, the physics reasoning of multimodal LLMs (MLLMs), a task that requires both logical reasoning and an understanding of physical conditions. PhysicsArena is distinctive in evaluating MLLMs across three dimensions (variable identification, process formulation, and solution derivation), addressing a limitation of previous benchmarks, which often overlooked critical intermediate reasoning steps. The benchmark aims to rigorously evaluate MLLMs' comprehension of complex physics scenarios.

Benchmark Design and Features

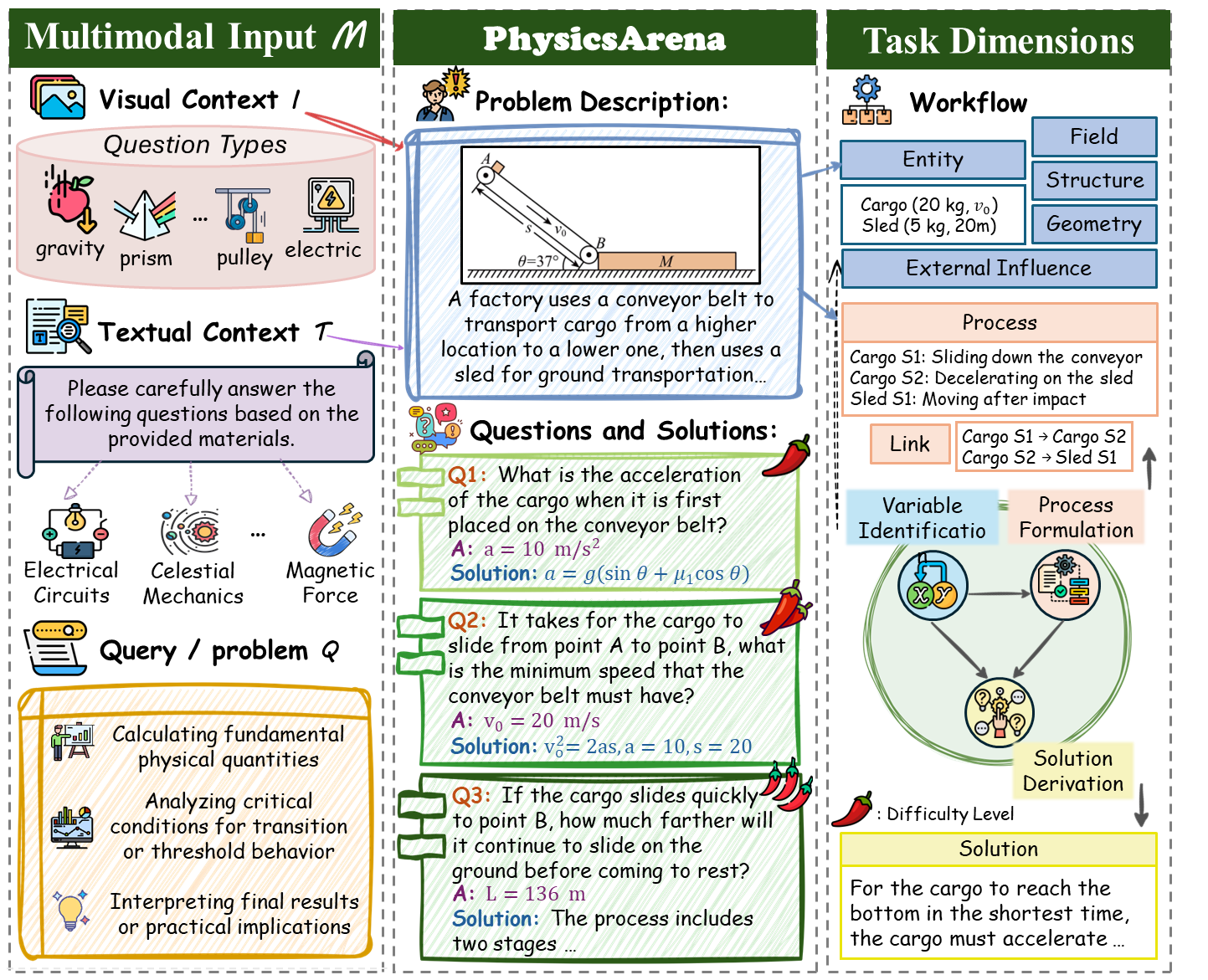

PhysicsArena distinguishes itself with its emphasis on multimodal inputs and reasoning, requiring models to interpret both textual descriptions and visual elements such as diagrams and experimental setups. Each problem instance is structured to demand reasoning across three distinct but interconnected tasks:

- Variable Identification: Models must discern and categorize entities and physical variables; the evaluation covers up to six categories, including geometry and connection.

- Process Formulation: This requires expressing the physical process in terms of dynamic interactions involving forces and states, potentially requiring models to draw upon conceptual understanding as opposed to mere computational skills.

- Solution Derivation: Models need to produce an answer through a coherent chain of reasoning, synthesizing insights from previous outputs.
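The three-task structure above can be sketched as a small data model. This is a minimal illustration, not the paper's actual schema: the class `PhysicsProblem`, its field names, the `TASKS` tuple, and the `build_prompt` helper are all hypothetical. The sketch only shows how the output of an earlier task could be fed into a later one, as the solution derivation step requires.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PhysicsProblem:
    """Hypothetical container for one benchmark instance (field names are assumptions)."""
    question: str                      # textual problem description
    image_path: Optional[str] = None   # diagram or experimental setup, if any
    variables: Dict[str, List[str]] = field(default_factory=dict)  # category -> annotated entries
    process: str = ""                  # reference process formulation
    solution: str = ""                 # reference final answer


# Task order matters: later tasks may build on earlier outputs.
TASKS = ("variable_identification", "process_formulation", "solution_derivation")


def build_prompt(problem: PhysicsProblem, task: str,
                 prior_outputs: Optional[Dict[str, str]] = None) -> str:
    """Assemble a text prompt for one task, including outputs of earlier tasks."""
    if task not in TASKS:
        raise ValueError(f"unknown task: {task}")
    parts = [f"Task: {task}", f"Problem: {problem.question}"]
    if problem.image_path:
        parts.append(f"Image: {problem.image_path}")
    # Only tasks that precede the current one are allowed to contribute context.
    for earlier in TASKS[:TASKS.index(task)]:
        if prior_outputs and earlier in prior_outputs:
            parts.append(f"{earlier} output: {prior_outputs[earlier]}")
    return "\n".join(parts)
```

For example, calling `build_prompt(problem, "solution_derivation", {"variable_identification": "..."})` would include the variable identification output in the prompt, while a call for `"variable_identification"` itself would include no prior outputs.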

Figure 1: Comparison between previous physics reasoning settings and our proposed PhysicsArena.

Data Collection and Preparation

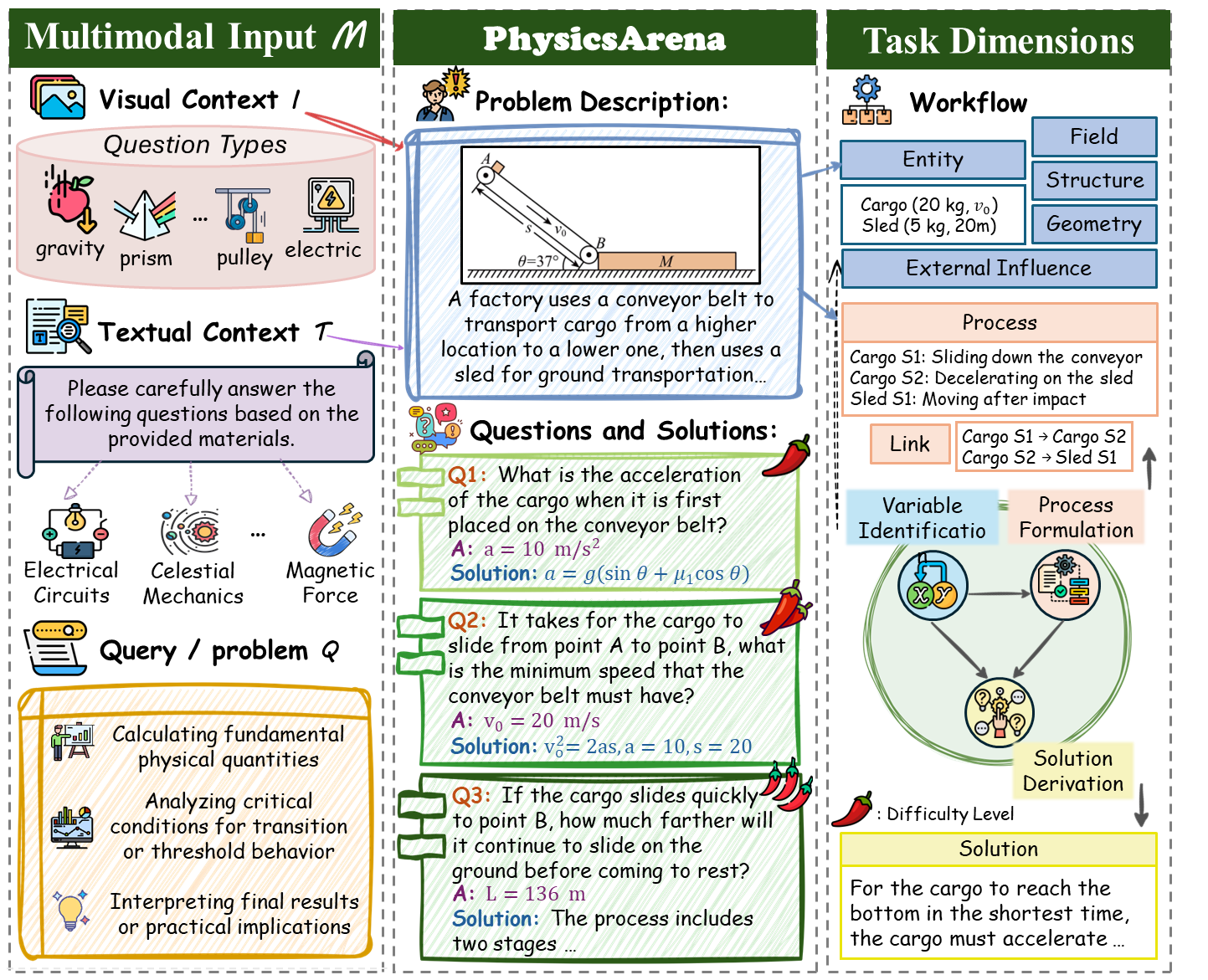

The dataset is curated through four phases: data collection, preprocessing, AI-assisted annotation, and human review. This pipeline is intended to ensure high-quality, diverse inputs covering a broad array of physics topics from high-school-level curricula. Multimodal examples typically combine text and visual indicators, underpinned by detailed annotations reflecting critical physics properties and relationships.
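The phases after collection can be pictured as a chain of small functions. The sketch below is only an illustration under stated assumptions: the record layout, the function names (`preprocess`, `ai_annotate`, `human_review`), and the filtering rule are hypothetical, and the paper's actual preprocessing and review criteria are not described here.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]  # hypothetical record layout, e.g. {"question": ..., "image": ...}


def preprocess(records: List[Record]) -> List[Record]:
    """Normalize whitespace and drop records without question text (assumed rule)."""
    cleaned = []
    for record in records:
        question = str(record.get("question", "")).strip()
        if question:
            cleaned.append({**record, "question": question})
    return cleaned


def ai_annotate(records: List[Record],
                annotator: Callable[[Record], str]) -> List[Record]:
    """Attach a draft annotation from a model; `annotator` is a stand-in callable."""
    return [{**r, "draft_annotation": annotator(r), "reviewed": False} for r in records]


def human_review(records: List[Record],
                 approve: Callable[[Record], bool]) -> List[Record]:
    """Keep only records a reviewer approves and mark them as reviewed."""
    return [{**r, "reviewed": True} for r in records if approve(r)]


def run_pipeline(collected: List[Record],
                 annotator: Callable[[Record], str],
                 approve: Callable[[Record], bool]) -> List[Record]:
    """Compose the stages in order: preprocessing, AI annotation, human review."""
    return human_review(ai_annotate(preprocess(collected), annotator), approve)
```

Keeping the annotator and the reviewer as injected callables makes the point that AI-produced annotations are drafts until a human check accepts them.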

Figure 2: The illustration of a representative example from our proposed PhysicsArena dataset.

Benchmark Evaluation and Experimental Analysis

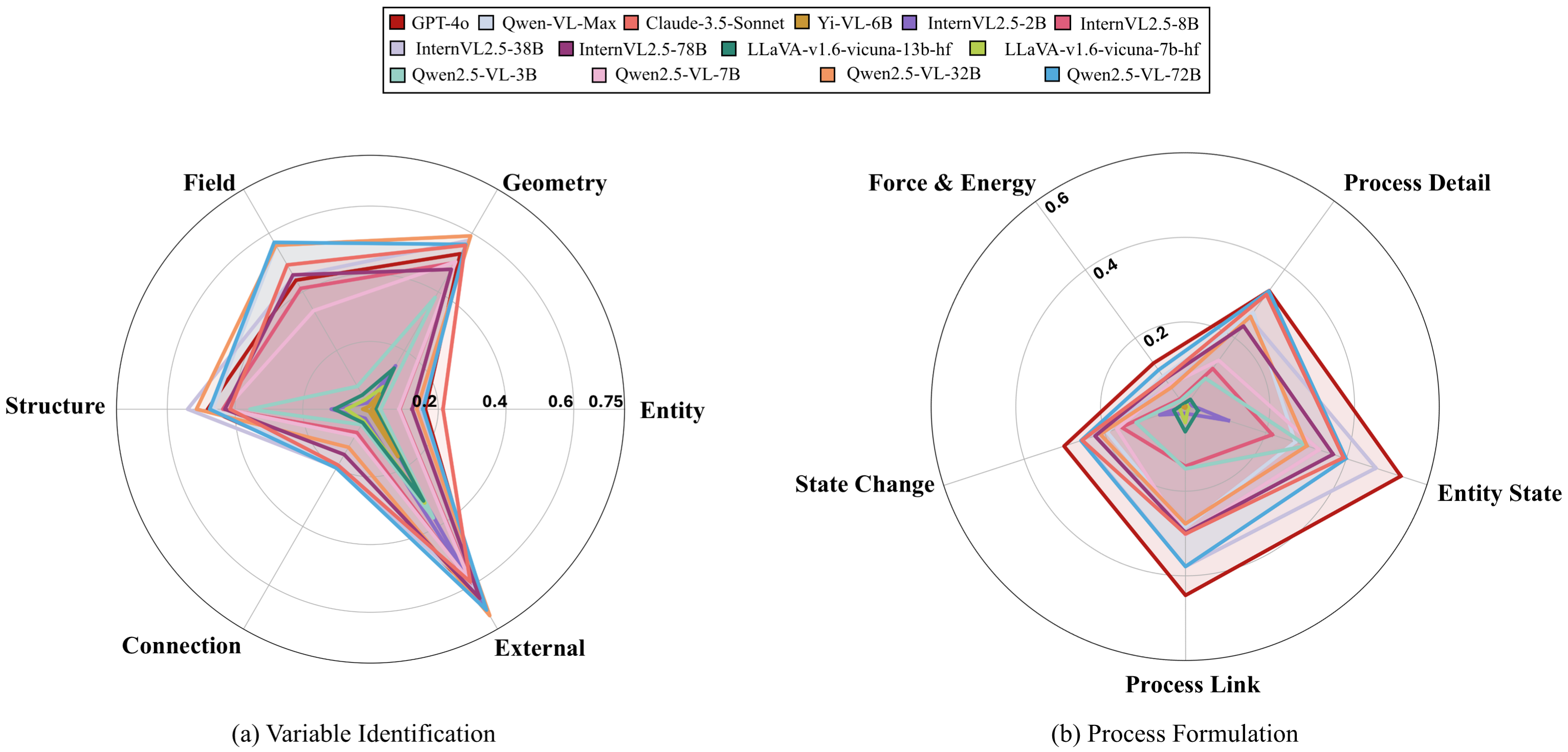

The evaluation covers a broad range of state-of-the-art models, both open-source and proprietary. Despite progress in MLLM technology, current models continue to struggle with the varied demands of physics reasoning, particularly process formulation and solution derivation.

Figure 3: Performance comparison for Variable Identification (a) and Process Formulation (b).

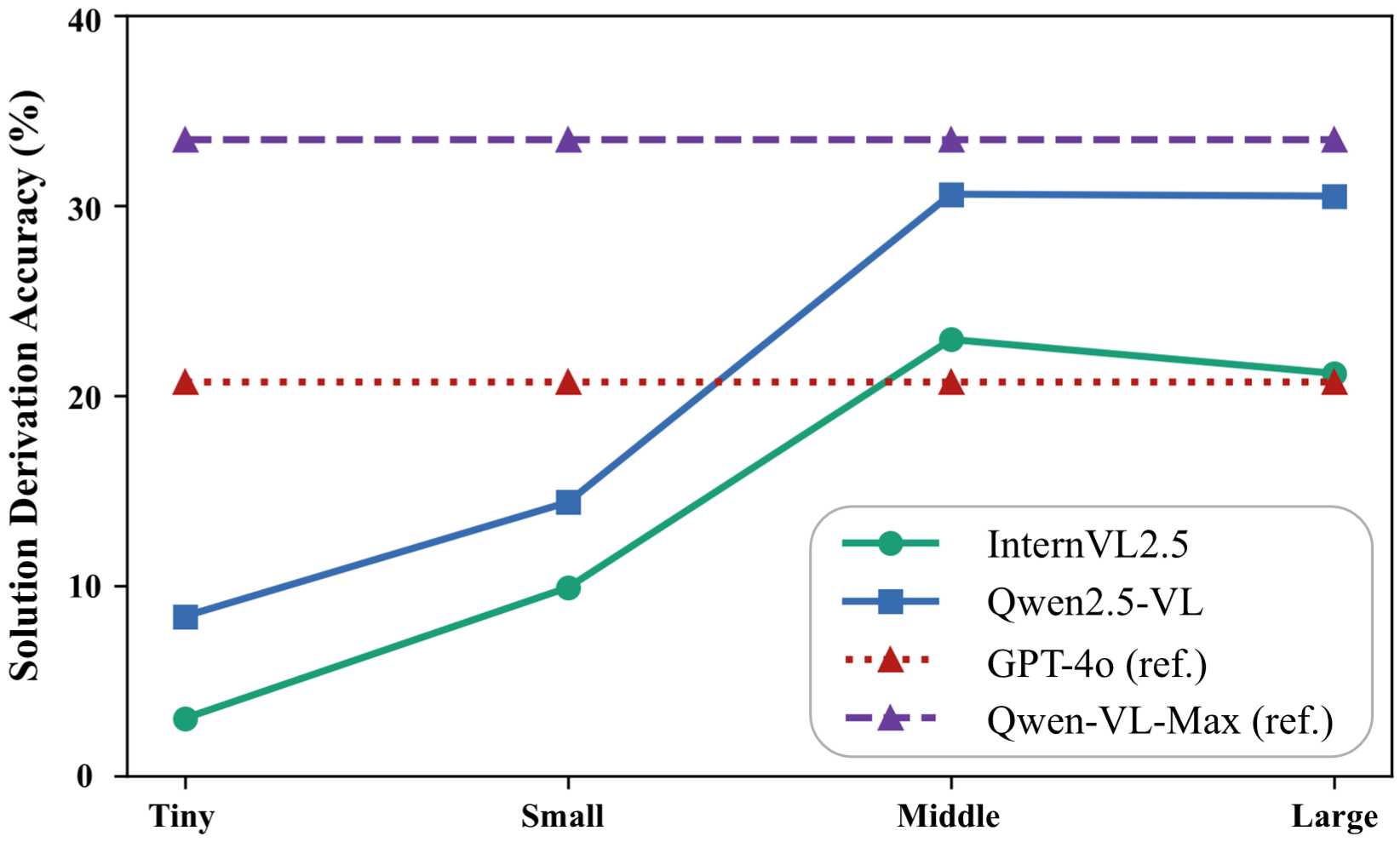

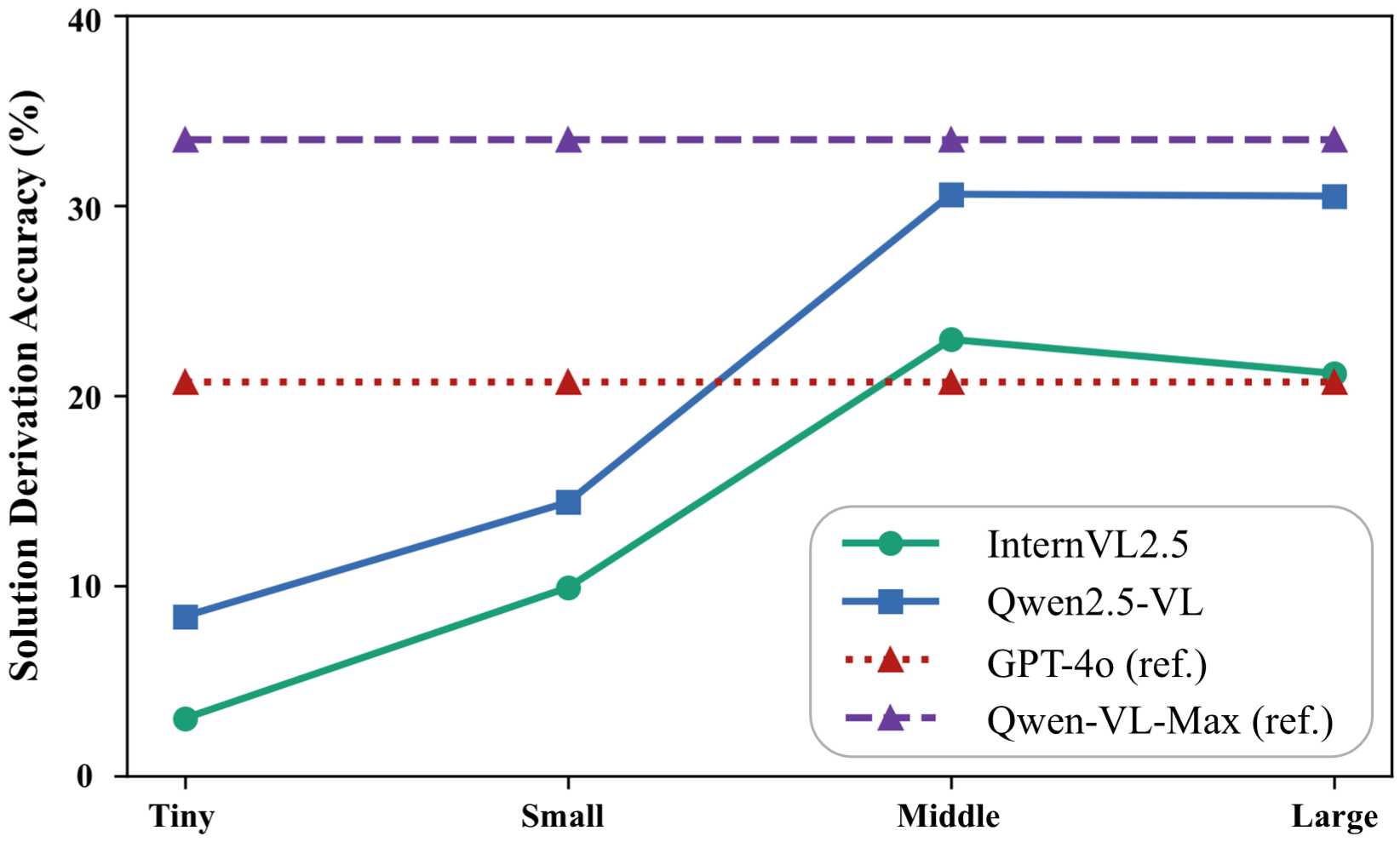

Larger models tend to outperform smaller ones, and accuracy varies slightly by model architecture and training paradigms, but results remain modest, indicating significant challenges yet to be overcome.

Figure 4: The accuracy of solution derivation of Qwen2.5VL and InternVL2.5. We denote Tiny, Small, Middle, Large as the 2B, 8B, 26B, 78B for InternVL2.5 and 3B, 7B, 32B, 72B for Qwen2.5VL, respectively.
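The size tiers named in the Figure 4 caption can be written as a lookup table, which makes it easier to compare the two model families tier by tier. The tier-to-size mapping below is taken from the caption; the helper names `tier_of` and `accuracy` are hypothetical, and no benchmark results are included.

```python
from typing import Iterable

# Tier labels and parameter counts as stated in the Figure 4 caption.
SIZE_TIERS = {
    "InternVL2.5": {"Tiny": "2B", "Small": "8B", "Middle": "26B", "Large": "78B"},
    "Qwen2.5VL": {"Tiny": "3B", "Small": "7B", "Middle": "32B", "Large": "72B"},
}


def tier_of(family: str, size: str) -> str:
    """Return the tier label (Tiny/Small/Middle/Large) for a model family and size."""
    for tier, tier_size in SIZE_TIERS[family].items():
        if tier_size == size:
            return tier
    raise KeyError(f"{family} has no {size} variant in the table")


def accuracy(correct_flags: Iterable[bool]) -> float:
    """Fraction of correctly solved problems; input is one boolean per problem."""
    flags = list(correct_flags)
    if not flags:
        raise ValueError("no results to score")
    return sum(flags) / len(flags)
```

With this table, `tier_of("Qwen2.5VL", "32B")` and `tier_of("InternVL2.5", "26B")` both resolve to the `Middle` tier, so per-tier accuracies for the two families can be placed side by side.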

Conclusion and Future Directions

The key implication of PhysicsArena is its ability to pinpoint specific areas of MLLM deficiency, particularly in advanced physics contexts, which underlines the need for more nuanced model training approaches. Future work could focus on integrating dynamic contextual inputs or exploring broader educational contexts to refine models' reasoning abilities. The findings highlight a persistent gap between current models and AGI-level scientific reasoning, leaving room for further work on AI-driven physics reasoning.

Conclusion

PhysicsArena sets a new standard for evaluating physics reasoning within AI frameworks, presenting a comprehensive evaluation across variable, process, and solution dimensions. Its structured design promises to guide future developments, emphasizing the need to address intermediate reasoning steps that are pivotal for realistic problem-solving.

Figure 5: Roadmap of PhysicsArena dataset preparation, enhancement, and evaluation.