- The paper introduces a dual-stage pipeline combining multi-view generation using a Diffusion Transformer and subsequent material refinement.

- It employs cross-frame global attention and a PBR-based diffusion loss to ensure physical accuracy and consistency across views.

- Experiments using FID and KID indicate improved realism over existing methods, which benefits 3D modeling applications.

MCMat: Multiview-Consistent and Physically Accurate PBR Material Generation

The paper "MCMat: Multiview-Consistent and Physically Accurate PBR Material Generation" presents a method for generating physically based rendering (PBR) materials with an emphasis on multi-view consistency. It targets two weaknesses of existing 2D- and 3D-based approaches, inconsistency across views and lighting baked into the generated maps, by combining multi-view generation and material refinement in a two-stage pipeline built on transformer-based diffusion models.

Methodology and Architecture

Two-Stage Approach

The approach is divided into two stages: Multi-View Generation and Material Refinement. In the Multi-View Generation stage, a Diffusion Transformer (DiT) named Multi-View Generation DiT (MG-DiT) generates PBR materials for multiple views of the object. The model is conditioned on the object's geometry, a reference image, and a text prompt, and uses these conditions to keep the generated views consistent with one another.
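
To make the geometry conditioning concrete, here is one plausible way to turn per-view latents into DiT tokens. It is a minimal sketch under stated assumptions, not MG-DiT's actual input layer: it assumes the normal maps are encoded into the same latent space as the noisy image latents and concatenated channel-wise before patchification, a common pattern for spatially aligned conditions. The names `patchify` and `build_view_tokens`, the patch size, and all shapes are hypothetical, and the reference-image and text conditions (handled by separate attention paths) are omitted.

```python
import numpy as np


def patchify(x, p):
    """Split a (C, H, W) latent into (H/p * W/p) tokens of length C*p*p."""
    c, h, w = x.shape
    assert h % p == 0 and w % p == 0, "spatial size must be divisible by p"
    x = x.reshape(c, h // p, p, w // p, p)
    x = x.transpose(1, 3, 0, 2, 4)  # (H/p, W/p, C, p, p)
    return x.reshape((h // p) * (w // p), c * p * p)


def build_view_tokens(noisy_latents, normal_latents, patch=2):
    """Hypothetical MG-DiT input builder (assumption, not the paper's code).

    noisy_latents, normal_latents: (V, C, H, W) arrays, one entry per view.
    Returns (V, N, 2*C*patch*patch) tokens, with N = (H/patch) * (W/patch).
    """
    assert noisy_latents.shape == normal_latents.shape
    tokens = []
    for z, n in zip(noisy_latents, normal_latents):
        # Geometry condition: append the normal latent as extra channels.
        x = np.concatenate([z, n], axis=0)
        tokens.append(patchify(x, patch))
    return np.stack(tokens)
```

With 4 views of 4-channel 8×8 latents and 2×2 patches, each view yields 16 tokens of width 32, and these per-view token sets are what the attention layers of the DiT operate on.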

Figure 1: Overview of the method's pipeline, showing the generation and refinement stages.

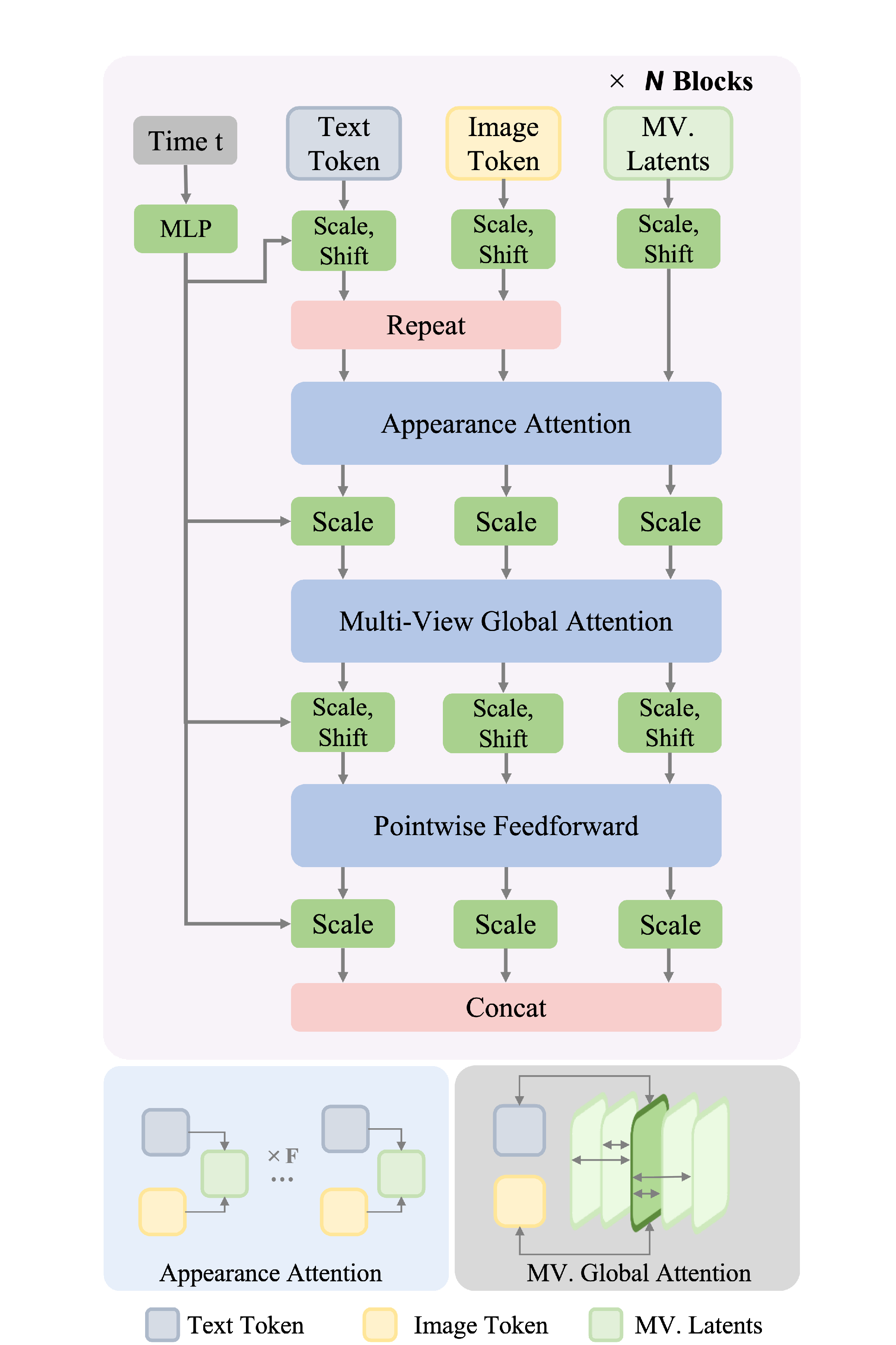

Multi-View Generation DiT (MG-DiT)

MG-DiT uses a cross-frame global attention mechanism to align features across views, directly addressing the view-to-view inconsistencies typical of 2D models. It takes surface normals as the geometric condition and injects the reference image through a reference-based DiT block. Together, these improve 3D consistency while avoiding the limitations of the serial and parallel multi-view pipelines used in prior work.
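
The difference between per-view and cross-frame global attention can be sketched in a few lines of NumPy. This is a minimal single-head illustration, not MG-DiT's actual block: the projection matrices `wq`, `wk`, `wv` are hypothetical, and multi-head splitting, normalization, and positional encodings are omitted. The only change from per-view attention is that the tokens of all views are flattened into one sequence before attention, so every token can attend to every other view.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def attention(q, k, v):
    """Single-head scaled dot-product attention; last two axes are (tokens, dim)."""
    scores = q @ np.swapaxes(k, -1, -2) / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v


def per_view_attention(tokens, wq, wk, wv):
    """tokens: (V, N, D). Each view attends only to its own N tokens."""
    return attention(tokens @ wq, tokens @ wk, tokens @ wv)


def cross_frame_global_attention(tokens, wq, wk, wv):
    """Flatten all V views into one sequence of V*N tokens so that every token
    attends to the tokens of every view, then restore the (V, N, D) layout."""
    n_views, n_tokens, dim = tokens.shape
    flat = tokens.reshape(1, n_views * n_tokens, dim)
    out = attention(flat @ wq, flat @ wk, flat @ wv)
    return out.reshape(n_views, n_tokens, -1)
```

Perturbing the tokens of one view changes the output for every other view under global attention but leaves them unchanged under per-view attention, which is the property that lets features be harmonized across views. The cost is attention over V·N tokens instead of N per view, roughly V times the compute of per-view attention.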

Figure 2: Structure of the Multi-View Generation DiT block.

PBR-Based Diffusion Loss

A key contribution of this work is a PBR-based diffusion loss, combined with a v-prediction parameterization, that encourages the generated maps to be physically plausible. During training, randomly sampled lighting configurations are used to suppress lighting artifacts that would otherwise be baked into the albedo maps.
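
The two ingredients can be illustrated separately. Under v-prediction, for a noisy sample x_t = αx₀ + σε with α² + σ² = 1, the network predicts v = αε − σx₀, from which the clean sample is recovered as x₀ = αx_t − σv. The recovered maps can then be rendered and compared with renders of the ground truth under randomly sampled lights. The sketch assumes pixel-space albedo, a diffuse-only (Lambertian) renderer, and a hypothetical weight `lam`; a full PBR loss would also render roughness and metallic maps with a microfacet BRDF, and the paper's exact formulation may differ.

```python
import numpy as np


def v_target(x0, eps, alpha, sigma):
    """v-prediction target for x_t = alpha*x0 + sigma*eps (alpha^2 + sigma^2 = 1)."""
    return alpha * eps - sigma * x0


def x0_from_v(x_t, v, alpha, sigma):
    """Recover the clean sample from a v prediction."""
    return alpha * x_t - sigma * v


def render_lambertian(albedo, normals, light_dir, light_rgb):
    """Diffuse-only shading: albedo * light_rgb * max(n . l, 0).
    albedo, normals: (H, W, 3); light_dir: unit (3,); light_rgb: (3,)."""
    ndotl = np.clip(normals @ light_dir, 0.0, None)[..., None]
    return albedo * light_rgb * ndotl


def random_light(rng):
    d = rng.standard_normal(3)
    d[2] = abs(d[2])  # keep the light in the +z hemisphere
    return d / np.linalg.norm(d), rng.uniform(0.5, 1.5, size=3)


def rendering_loss(pred_albedo, gt_albedo, normals, rng, n_lights=4):
    """MSE between renders of predicted and ground-truth albedo under the
    same randomly sampled lights (a simplified stand-in for a PBR loss)."""
    loss = 0.0
    for _ in range(n_lights):
        d, rgb = random_light(rng)
        diff = (render_lambertian(pred_albedo, normals, d, rgb)
                - render_lambertian(gt_albedo, normals, d, rgb))
        loss += np.mean(diff ** 2)
    return loss / n_lights


def training_loss(v_pred, x0, eps, alpha, sigma, normals, rng, lam=0.1):
    """Hypothetical combined loss: v-prediction MSE plus a rendering term."""
    x_t = alpha * x0 + sigma * eps
    diffusion = np.mean((v_pred - v_target(x0, eps, alpha, sigma)) ** 2)
    x0_hat = x0_from_v(x_t, v_pred, alpha, sigma)
    return diffusion + lam * rendering_loss(x0_hat, x0, normals, rng)
```

With a perfect v prediction the recovered albedo equals the ground truth and both terms vanish; an albedo with shading baked into it would render differently from the ground truth under each random light and be penalized.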

Material Refinement DiT (MR-DiT)

After the initial material generation, the Material Refinement DiT (MR-DiT) refines the back-projected UV maps and fills their gaps. Conditioned on the normal map in UV space, MR-DiT enhances texture detail and produces material maps at 2K resolution.
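
Back-projection, the step that produces the UV maps MR-DiT refines, can be sketched as a weighted average of view pixels into texels, with texels that no view reaches marked as holes. This sketch assumes the pixel-to-texel correspondences and per-pixel weights (for example, the cosine between surface normal and viewing direction) have already been computed by a rasterizer; `back_project` and its arguments are hypothetical names.

```python
import numpy as np


def back_project(view_colors, view_texels, view_weights, uv_size):
    """Accumulate per-view pixel colors into a square UV texture.

    view_colors:  list of (P_v, 3) arrays, colors of visible pixels in view v
    view_texels:  list of (P_v, 2) int arrays, (row, col) texel of each pixel
    view_weights: list of (P_v,) arrays, blending weight of each pixel
    Returns (texture of shape (S, S, 3), hole_mask of shape (S, S)).
    """
    acc = np.zeros((uv_size, uv_size, 3))
    wsum = np.zeros((uv_size, uv_size))
    for colors, texels, w in zip(view_colors, view_texels, view_weights):
        r, c = texels[:, 0], texels[:, 1]
        # np.add.at accumulates correctly when several pixels hit one texel.
        np.add.at(acc, (r, c), colors * w[:, None])
        np.add.at(wsum, (r, c), w)
    covered = wsum > 0
    texture = np.zeros_like(acc)
    texture[covered] = acc[covered] / wsum[covered][:, None]
    return texture, ~covered
```

The returned hole mask marks the texels that no view observed; these are the gaps the refinement stage has to fill.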

Experimental Evaluation

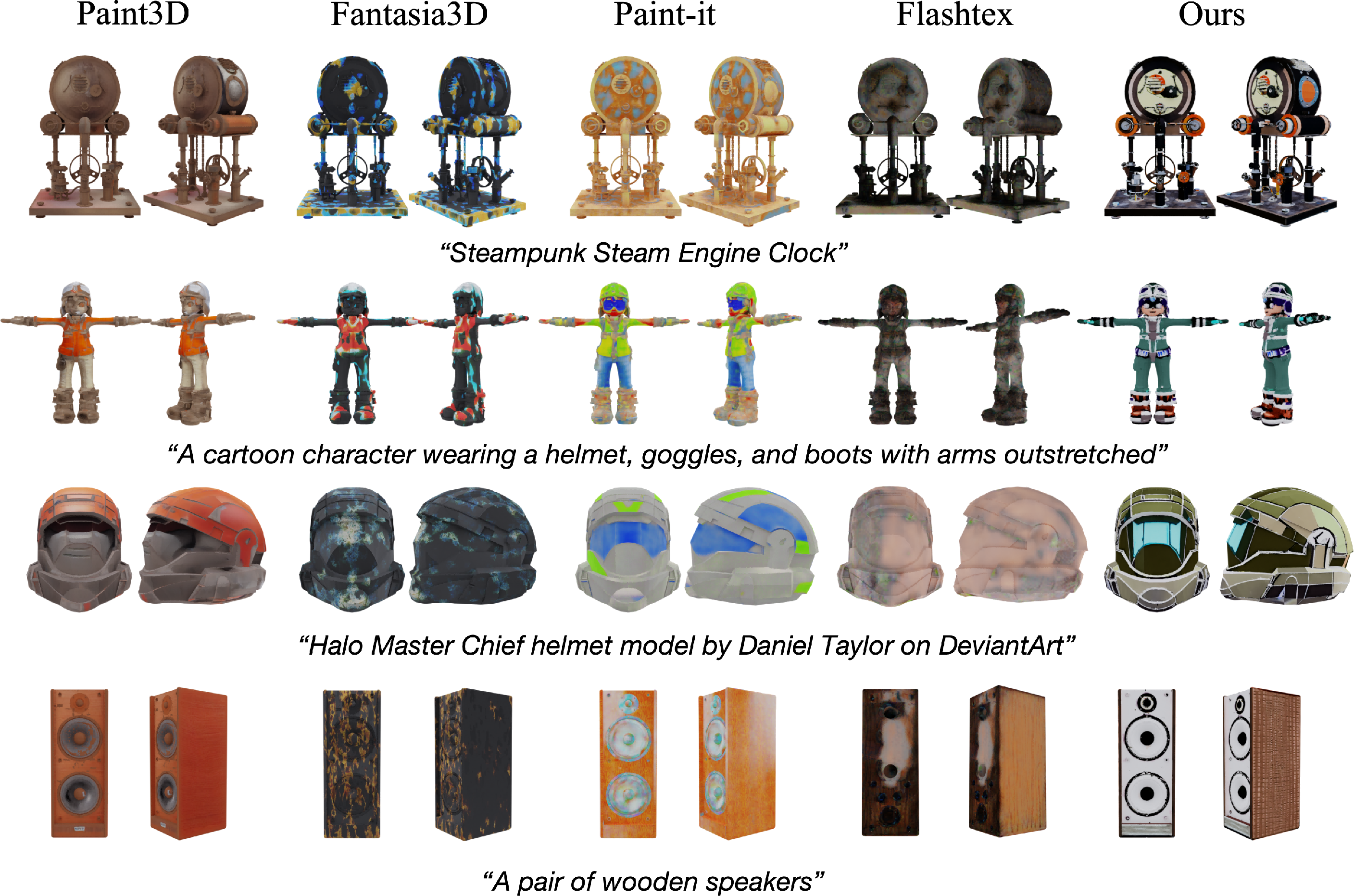

Experiments indicate that MCMat outperforms existing techniques in multi-view consistency, realism, and robustness to lighting variation. Quantitatively, its lower FID and KID scores (lower is better for both) reflect higher fidelity and fewer artifacts in the generated textures.
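
KID, one of the two reported metrics, is the squared maximum mean discrepancy between two feature sets under the cubic polynomial kernel k(x, y) = (xᵀy/d + 1)³. A minimal NumPy version of the unbiased estimator follows; it assumes features have already been extracted (in practice from an Inception network) and omits the averaging over random subsets that standard implementations perform.

```python
import numpy as np


def polynomial_kernel(x, y):
    """Cubic polynomial kernel used by KID: (x . y / d + 1)^3."""
    d = x.shape[1]
    return (x @ y.T / d + 1.0) ** 3


def kid(feats_real, feats_fake):
    """Unbiased MMD^2 estimate between (n, d) and (m, d) feature arrays."""
    n, m = len(feats_real), len(feats_fake)
    k_rr = polynomial_kernel(feats_real, feats_real)
    k_ff = polynomial_kernel(feats_fake, feats_fake)
    k_rf = polynomial_kernel(feats_real, feats_fake)
    # Drop the diagonal (self-similarity) terms for the unbiased estimate.
    term_rr = (k_rr.sum() - np.trace(k_rr)) / (n * (n - 1))
    term_ff = (k_ff.sum() - np.trace(k_ff)) / (m * (m - 1))
    return term_rr + term_ff - 2.0 * k_rf.mean()
```

Lower is better: samples drawn from the same distribution as the reference give a value near zero, while samples from a shifted distribution give a clearly positive value.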

Figure 3: Qualitative comparisons on PBR material generation conditioned on text prompt.

Implications for Real-World Applications

The work is relevant to industries that rely on 3D modeling, such as gaming and virtual reality. Because the generated materials are more realistic and consistent across views, assets can be rendered convincingly under varying lighting conditions.

Conclusion

In summary, the paper presents a framework for multiview-consistent and physically accurate PBR material generation. While the method reduces artifacts and improves realism, future work could improve its computational efficiency to make it more scalable and suitable for real-time systems. Overall, MCMat advances the state of the art in AI-driven 3D content generation by improving both the visual quality and the physical realism of digital assets.