- The paper introduces a view packing technique and adapts visual autoregressive models to synthesize high-resolution PBR textures on 3D models efficiently.

- It generates multiple views concurrently, conditioning on geometry maps rendered from several fixed viewpoints to keep the texture consistent across views.

- Experiments show improved fidelity (FID and CLIP scores) and faster inference than existing texturing methods.

PacTure: Efficient PBR Texture Generation on Packed Views with Visual Autoregressive Models

This essay provides an expert-level summary and discussion of the paper "PacTure: Efficient PBR Texture Generation on Packed Views with Visual Autoregressive Models" (2505.22394). PacTure is a framework that uses visual autoregressive models to generate high-quality Physically-Based Rendering (PBR) textures for 3D models efficiently.

Introduction to PBR Texture Generation

PBR texture generation is crucial in industries that need realistic rendering of 3D assets, such as gaming and virtual/augmented reality. Existing approaches built on 2D generative models struggle with three problems: textures that are inconsistent across views, long inference times, and limited resolution. The paper addresses these with a view packing technique and a visual autoregressive backbone, improving both the efficiency and the quality of texture generation.

Core Innovations in PacTure

PacTure introduces several innovations to overcome the limitations of previous approaches:

- View Packing Technique: The central contribution. Multi-view maps are arranged compactly on an atlas, which raises the effective resolution of each view without increasing inference cost. Background pixels contribute nothing to the texture, so removing most of them lets the model spend more of its generative capacity on the object itself.

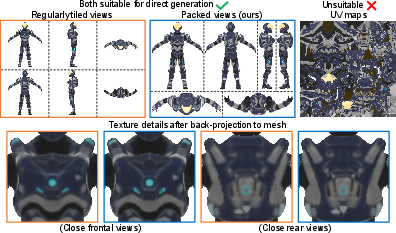

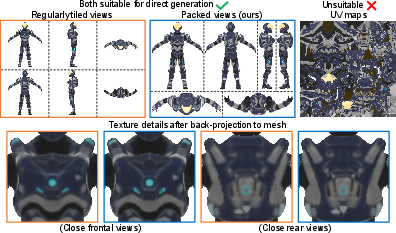

Figure 1: We propose view packing to compactly pack multi-view maps onto the atlas as the condition and target maps for image generative models used in texturing.
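The packing idea can be made concrete with a minimal sketch. It assumes a simple crop-then-shelf-pack strategy: each view is cropped to the bounding box of its foreground mask, and the crops are placed left to right on horizontal shelves. The function names (`crop_to_foreground`, `shelf_pack`, `pack_views`) are hypothetical. PacTure's actual packing algorithm and atlas layout may differ. For example, the real method also has to fit the crops into a fixed-size atlas (presumably by rescaling), and this sketch skips that step.

```python
import numpy as np

def crop_to_foreground(image, mask):
    """Crop a view to the bounding box of its (non-empty) foreground mask."""
    rows = np.where(np.any(mask, axis=1))[0]
    cols = np.where(np.any(mask, axis=0))[0]
    r0, r1, c0, c1 = rows[0], rows[-1], cols[0], cols[-1]
    return image[r0:r1 + 1, c0:c1 + 1], mask[r0:r1 + 1, c0:c1 + 1]

def shelf_pack(sizes, atlas_width):
    """Place (h, w) rectangles left to right on horizontal shelves.

    Returns one (row, col) offset per rectangle and the atlas height used.
    Rectangles are visited tallest-first, a common shelf-packing heuristic.
    """
    order = sorted(range(len(sizes)), key=lambda i: -sizes[i][0])
    offsets = [None] * len(sizes)
    shelf_top, shelf_height, cursor = 0, 0, 0
    for i in order:
        h, w = sizes[i]
        if w > atlas_width:
            raise ValueError("rectangle is wider than the atlas")
        if cursor + w > atlas_width:  # current shelf is full: open a new one
            shelf_top += shelf_height
            shelf_height, cursor = 0, 0
        offsets[i] = (shelf_top, cursor)
        cursor += w
        shelf_height = max(shelf_height, h)
    return offsets, shelf_top + shelf_height

def pack_views(images, masks, atlas_width):
    """Crop every view and copy its foreground pixels into one shared atlas."""
    crops = [crop_to_foreground(im, m) for im, m in zip(images, masks)]
    offsets, height = shelf_pack([c[1].shape for c in crops], atlas_width)
    atlas = np.zeros((height, atlas_width, images[0].shape[2]), dtype=images[0].dtype)
    atlas_mask = np.zeros((height, atlas_width), dtype=bool)
    for (crop, cmask), (r, c) in zip(crops, offsets):
        h, w = cmask.shape
        atlas[r:r + h, c:c + w][cmask] = crop[cmask]  # slice is a view, so this writes into atlas
        atlas_mask[r:r + h, c:c + w] |= cmask
    return atlas, atlas_mask, offsets

# Usage: six 64x64 views whose foreground is a centered 32x32 square.
views = [np.full((64, 64, 3), k, dtype=np.uint8) for k in range(6)]
masks = [np.zeros((64, 64), dtype=bool) for _ in range(6)]
for m in masks:
    m[16:48, 16:48] = True
atlas, atlas_mask, offsets = pack_views(views, masks, atlas_width=96)
print(sum(m.mean() for m in masks) / 6)  # foreground fraction before packing: 0.25
print(atlas.shape, atlas_mask.mean())    # (64, 96, 3) 1.0
```

In this toy case, a 2x3 grid of uncropped views would use 6 × 64 × 64 pixels, of which only a quarter are foreground. The packed atlas holds the same foreground in a quarter of the area. This is the kind of saving the paper turns into higher effective per-view resolution.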

- Multi-view PBR Texture Generation: Rather than texturing views one after another, PacTure generates all views concurrently. Its next-scale prediction autoregressive framework allows fine-grained control and efficiently supports multi-domain outputs. Conditioning on geometric maps rendered from multiple fixed viewpoints contributes to the model's robust performance.

- Autoregressive Model Adaptation: The paper adapts Infinity, a next-scale prediction model. Its modifications add fine-grained control and domain-specific outputs, align generation across views, and improve training efficiency.
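Next-scale prediction generates an image as a sequence of token maps of increasing resolution. Each map is conditioned on all coarser ones. The sketch below shows only the multi-scale residual structure behind this idea, using nearest-neighbour upsampling and no quantizer. Infinity itself uses bitwise tokens and learned encoders, decoders and transformers, and the helper names here are hypothetical.

```python
import numpy as np

def downsample(x, s):
    """Area-average a square (H, H) map down to (s, s); H must be a multiple of s."""
    h, w = x.shape
    return x.reshape(s, h // s, s, w // s).mean(axis=(1, 3))

def upsample(x, size):
    """Nearest-neighbour upsampling of a square (s, s) map to (size, size)."""
    f = size // x.shape[0]
    return np.kron(x, np.ones((f, f)))

def multiscale_encode(feature, scales):
    """Split a square feature map into per-scale residual maps, coarsest first.

    Each scale stores what the coarser scales have not yet explained, which
    mirrors VAR-style multi-scale residual tokenization without quantization.
    """
    residual = feature.astype(float).copy()
    maps = []
    for s in scales:
        r = downsample(residual, s)
        maps.append(r)
        residual -= upsample(r, feature.shape[0])
    return maps

def multiscale_decode(maps, size):
    """Sum the upsampled residual maps. A generator would instead predict
    maps[k] conditioned on maps[:k]; that is the 'next-scale' step."""
    out = np.zeros((size, size))
    for r in maps:
        out += upsample(r, size)
    return out

# Usage: an 8x8 feature map split into 1x1, 2x2, 4x4 and 8x8 residual maps.
rng = np.random.default_rng(0)
feature = rng.normal(size=(8, 8))
maps = multiscale_encode(feature, scales=[1, 2, 4, 8])
print([m.shape for m in maps])                           # [(1, 1), (2, 2), (4, 4), (8, 8)]
print(np.allclose(multiscale_decode(maps, 8), feature))  # True
```

Decoding only the first few maps gives a coarse approximation of the full map. This coarse-to-fine order is what lets a next-scale model produce all tokens of one scale in parallel, instead of generating them pixel by pixel.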

Practical Implementation Insights

Implementing PacTure requires understanding its pipeline:

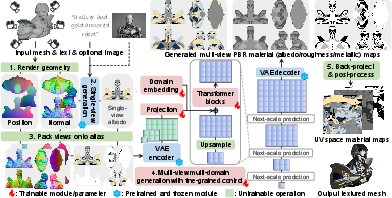

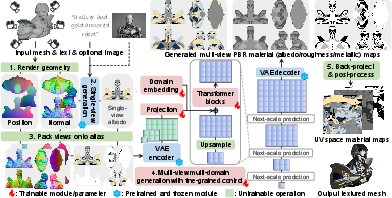

Figure 2: An overview of our pipeline comprising geometry condition and image prompt rendering, view packing on an atlas, and deploying a generative model for textures.

- Geometry Condition Maps: Geometry maps such as position and surface normal are rendered from the input mesh at six fixed views. Together with the prompts, they are crucial for guiding texture generation.

- Two-stage Generation Process: A single view is synthesized first, which enables high-quality albedo estimation. Multi-view generation then uses this view as a reference, which keeps the outputs consistent across views.
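Geometry condition maps can be illustrated with a minimal sketch. It makes four assumptions: orthographic cameras, six axis-aligned views, shapes normalized to [-1, 1]^3, and point splatting with a z-buffer in place of real mesh rasterization (a production pipeline would rasterize triangles). The viewpoints in `SIX_VIEWS`, the helper names and the [0, 1] color encodings are hypothetical, and PacTure's exact cameras may differ.

```python
import numpy as np

# Hypothetical axis-aligned viewpoints (camera directions from the object center).
SIX_VIEWS = [(0, 0, 1), (0, 0, -1), (1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0)]

def camera_basis(view_dir):
    """Right/up/forward axes of an orthographic camera placed along view_dir."""
    f = np.asarray(view_dir, dtype=float)
    f = f / np.linalg.norm(f)
    helper = np.array([0.0, 1.0, 0.0]) if abs(f[1]) < 0.9 else np.array([0.0, 0.0, 1.0])
    right = np.cross(helper, f)
    right /= np.linalg.norm(right)
    return right, np.cross(f, right), f

def render_condition_maps(points, normals, view_dir, res=64):
    """Splat surface samples into position, normal and mask maps with a z-buffer.

    Positions in [-1, 1]^3 and unit normals are both mapped to [0, 1] colors.
    """
    right, up, f = camera_basis(view_dir)
    u, v, depth = points @ right, points @ up, points @ f
    cols = np.clip(np.round((u + 1) / 2 * (res - 1)).astype(int), 0, res - 1)
    rows = np.clip(np.round((1 - v) / 2 * (res - 1)).astype(int), 0, res - 1)
    zbuf = np.full((res, res), -np.inf)
    pos_map = np.zeros((res, res, 3))
    nrm_map = np.zeros((res, res, 3))
    for i in range(len(points)):
        r, c = rows[i], cols[i]
        if depth[i] > zbuf[r, c]:  # larger depth means closer to this camera
            zbuf[r, c] = depth[i]
            pos_map[r, c] = (points[i] + 1) / 2
            nrm_map[r, c] = (normals[i] + 1) / 2
    return pos_map, nrm_map, np.isfinite(zbuf)

# Usage: a densely sampled unit sphere, where each normal equals its position.
rng = np.random.default_rng(0)
pts = rng.normal(size=(20000, 3))
pts /= np.linalg.norm(pts, axis=1, keepdims=True)
maps = [render_condition_maps(pts, pts, d, res=32) for d in SIX_VIEWS]
pos, nrm, mask = maps[0]
print(len(maps), pos.shape, mask.any())  # 6 (32, 32, 3) True
```

The per-view maps produced this way are the inputs that view packing would crop and arrange on the condition atlas.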

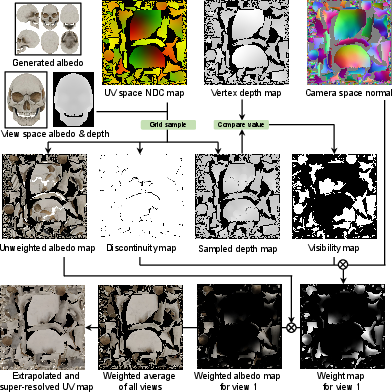

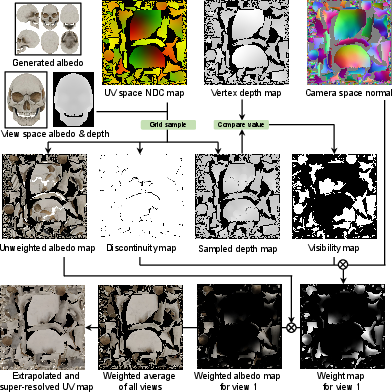

Figure 3: The overview of our back-projection process.
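Back-projection appears here only as Figure 3, so the following is a generic sketch of a common approach and not necessarily PacTure's. Each texel, with a known 3D position and normal, is projected into every generated view. A view contributes only where the texel is visible there, meaning its depth matches the view's depth map. The contribution is weighted by the cosine between the texel normal and the direction to the camera. The orthographic cameras, the `views` tuple layout, `depth_tol` and the function names are all assumptions.

```python
import numpy as np

def camera_basis(view_dir):
    """Right/up/forward axes of a hypothetical orthographic camera along view_dir."""
    f = np.asarray(view_dir, dtype=float)
    f = f / np.linalg.norm(f)
    helper = np.array([0.0, 1.0, 0.0]) if abs(f[1]) < 0.9 else np.array([0.0, 0.0, 1.0])
    right = np.cross(helper, f)
    right /= np.linalg.norm(right)
    return right, np.cross(f, right), f

def back_project(texel_pos, texel_nrm, views, res, depth_tol=1e-2):
    """Blend per-view colors into texels, weighted by visibility and facing angle.

    views: list of (view_dir, image (res, res, 3), depth (res, res)) tuples, where
    depth holds the closest projected depth per pixel (-inf for background).
    Returns (colors, weights); texels seen by no view keep weight 0.
    """
    colors = np.zeros((len(texel_pos), 3))
    weights = np.zeros(len(texel_pos))
    for view_dir, image, depth in views:
        right, up, f = camera_basis(view_dir)
        u, v, d = texel_pos @ right, texel_pos @ up, texel_pos @ f
        cols = np.clip(np.round((u + 1) / 2 * (res - 1)).astype(int), 0, res - 1)
        rows = np.clip(np.round((1 - v) / 2 * (res - 1)).astype(int), 0, res - 1)
        visible = np.abs(depth[rows, cols] - d) < depth_tol  # not occluded in this view
        w = np.clip(texel_nrm @ f, 0.0, None) * visible      # cosine toward the camera
        colors += w[:, None] * image[rows, cols]
        weights += w
    filled = weights > 0
    colors[filled] /= weights[filled][:, None]
    return colors, weights

# Usage: a front texel (red in the front view), a back texel (blue in the back
# view), and a side texel that neither view sees.
res = 5
front_depth = np.full((res, res), -np.inf); front_depth[2, 2] = 0.5
back_depth = np.full((res, res), -np.inf); back_depth[2, 2] = 0.5
front_img = np.zeros((res, res, 3)); front_img[2, 2] = [1.0, 0.0, 0.0]
back_img = np.zeros((res, res, 3)); back_img[2, 2] = [0.0, 0.0, 1.0]
texel_pos = np.array([[0.0, 0.0, 0.5], [0.0, 0.0, -0.5], [0.5, 0.0, 0.0]])
texel_nrm = np.array([[0.0, 0.0, 1.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]])
views = [((0, 0, 1), front_img, front_depth), ((0, 0, -1), back_img, back_depth)]
colors, weights = back_project(texel_pos, texel_nrm, views, res)
print(colors.tolist(), weights.tolist())
# [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 0.0, 0.0]] [1.0, 1.0, 0.0]
```

Texels that keep zero weight would still need inpainting or an extra view. That gap is one reason occlusion handling is a natural direction for future work.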

Experimental Results

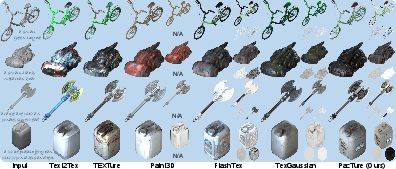

The paper's extensive experiments show PacTure outperforming existing methods.

Table 1 of the paper reports substantial gains in quality (FID and CLIP scores) and efficiency (inference time). PacTure therefore improves visual quality while also reducing computational cost.
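FID, one of the quality metrics in Table 1, compares the mean and covariance of image features extracted from generated renders and from reference images. Lower values are better. The sketch below computes it from two feature arrays using NumPy only. This summary does not state which feature extractor, renders or reference set the paper uses, so `fid_from_features` and its inputs are assumptions. Standard implementations use Inception-v3 pooling features.

```python
import numpy as np

def _sqrtm_psd(m):
    """Square root of a symmetric positive semi-definite matrix via eigh."""
    vals, vecs = np.linalg.eigh(m)
    return (vecs * np.sqrt(np.clip(vals, 0.0, None))) @ vecs.T

def frechet_distance(mu1, cov1, mu2, cov2):
    """Squared Frechet distance between Gaussians N(mu1, cov1) and N(mu2, cov2).

    d^2 = ||mu1 - mu2||^2 + Tr(cov1 + cov2 - 2 (cov1 cov2)^{1/2}).
    Tr((cov1 cov2)^{1/2}) equals Tr((s cov2 s)^{1/2}) with s = cov1^{1/2},
    because both matrices have the same eigenvalues; the latter is symmetric.
    """
    s = _sqrtm_psd(cov1)
    inner = s @ cov2 @ s
    tr_covmean = np.sqrt(np.clip(np.linalg.eigvalsh(inner), 0.0, None)).sum()
    diff = mu1 - mu2
    return float(diff @ diff + np.trace(cov1) + np.trace(cov2) - 2.0 * tr_covmean)

def fid_from_features(feats_a, feats_b):
    """FID from two (N, D) arrays of image features (e.g. Inception activations)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    return frechet_distance(mu_a, cov_a, mu_b, cov_b)

# Usage: N([1, 0], I) vs N([0, 0], 4I) gives 1 + (2 + 8 - 2 * 4) = 3.
print(frechet_distance(np.array([1.0, 0.0]), np.eye(2), np.zeros(2), 4 * np.eye(2)))
# 3.0 (up to floating-point rounding)
```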

Conclusion

PacTure represents a significant advancement in PBR texture generation because it addresses key challenges in resolution management and inference efficiency. Its view packing technique and its adaptation of visual autoregressive models show the potential of automated, high-fidelity texturing for applications such as games and VR. Future work could extend these techniques to handle complex occlusions seamlessly and integrate PacTure with other strong generative models to further improve detail and realism.