- The paper demonstrates that functional replication in computing systems does not guarantee phenomenological equivalence as defined by Integrated Information Theory (IIT).

- It employs cause-effect structure analysis using Transition Probability Matrices to quantitatively assess a system’s potential for consciousness.

- Findings reveal that typical modular and feedforward AI architectures inherently lack the necessary integration to support a unified conscious experience.

Dissociating Artificial Intelligence from Artificial Consciousness

The paper "Dissociating Artificial Intelligence from Artificial Consciousness" explores the distinction between AI and artificial consciousness through the lens of Integrated Information Theory (IIT). It argues against the notion that functional equivalence between computational systems implies phenomenological equivalence, challenging the thesis of computational functionalism. Here, we explore the technical facets and implications of this research.

Foundation and Postulates

IIT posits that consciousness depends on the intrinsic properties of a system's causal structure rather than on its external functions. It characterizes experience through five axioms (intrinsicality, information, integration, exclusion, and composition), which follow from a zeroth axiom of existence. It then translates each axiom into a postulate about physical cause-effect power. These postulates are the basis for evaluating whether a system can support consciousness. The evaluation examines the system's causal model, typically expressed as a Transition Probability Matrix (TPM).
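To make the notion of a TPM concrete, here is a minimal sketch for a deterministic binary system. It assumes Python and hypothetical update rules for four units P, Q, R, S; the paper's actual PQRS mechanisms are not reproduced here. Each row of the matrix is the probability distribution over next states given one current state. In a deterministic system, every row puts all of its probability on a single successor. The helpers `update`, `index_of`, `state_of`, and `state_by_state_tpm` are illustrative names, not part of any IIT library.

```python
# Hypothetical update rules for a 4-unit binary system (NOT the paper's PQRS
# mechanisms): a stand-in with recurrent connections among P, Q, R, S.
def update(state):
    p, q, r, s = state
    return (s, p ^ s, p & q, q | r)

def index_of(state):
    # Convention chosen here: unit 0 (P) is the lowest-order bit.
    return sum(bit << i for i, bit in enumerate(state))

def state_of(index, n):
    # Inverse of index_of for an n-unit binary system.
    return tuple((index >> i) & 1 for i in range(n))

def state_by_state_tpm(update_fn, n):
    """Return a 2**n x 2**n TPM where tpm[i][j] = Pr(next = j | current = i)."""
    size = 2 ** n
    tpm = [[0.0] * size for _ in range(size)]
    for i in range(size):
        successor = update_fn(state_of(i, n))
        tpm[i][index_of(successor)] = 1.0  # deterministic: one successor per row
    return tpm

tpm = state_by_state_tpm(update, 4)
# Every row is a probability distribution.
assert all(abs(sum(row) - 1.0) < 1e-12 for row in tpm)
```

The bit-ordering convention is arbitrary. Any consistent ordering works as long as rows and columns use the same one.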

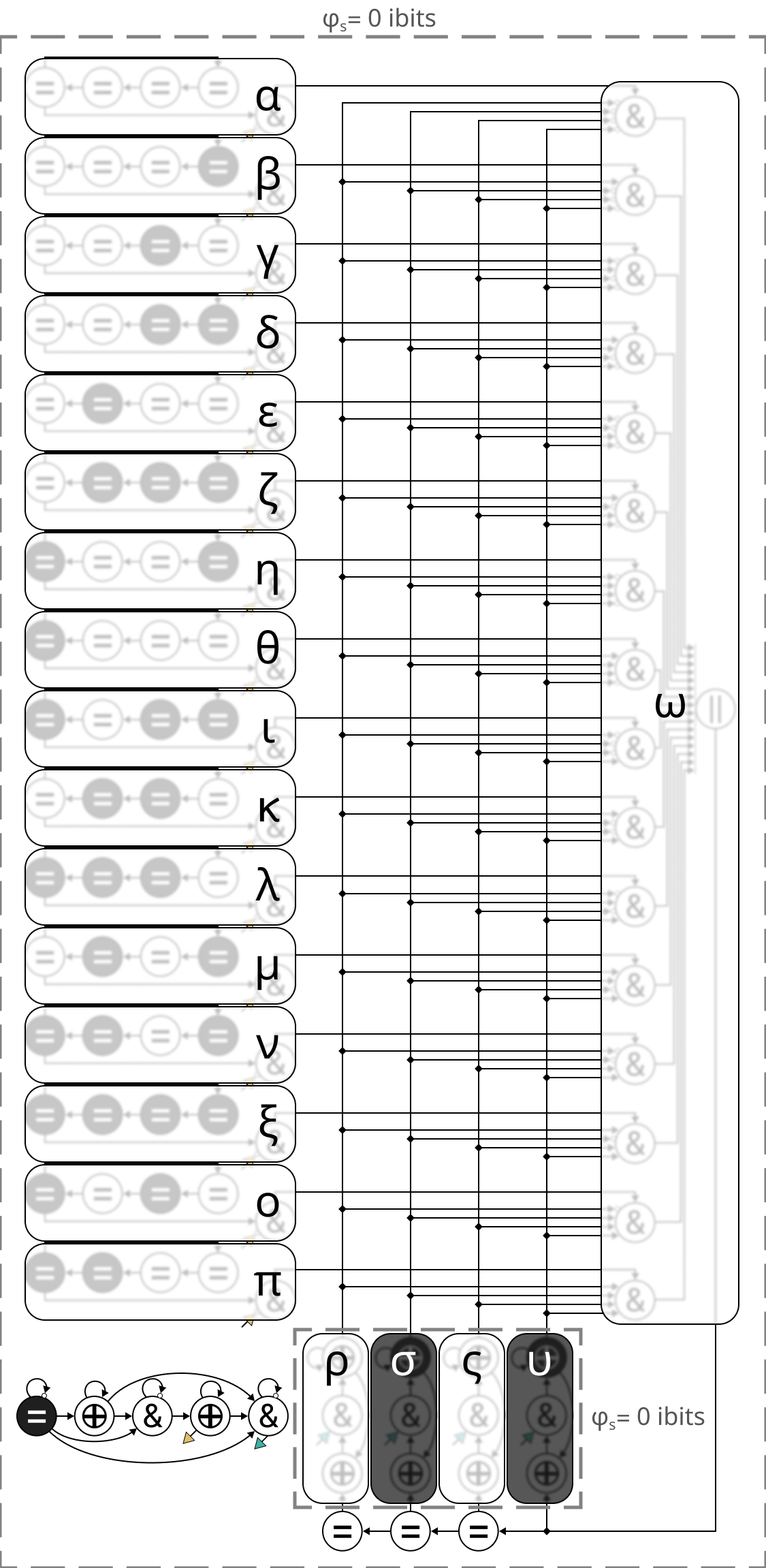

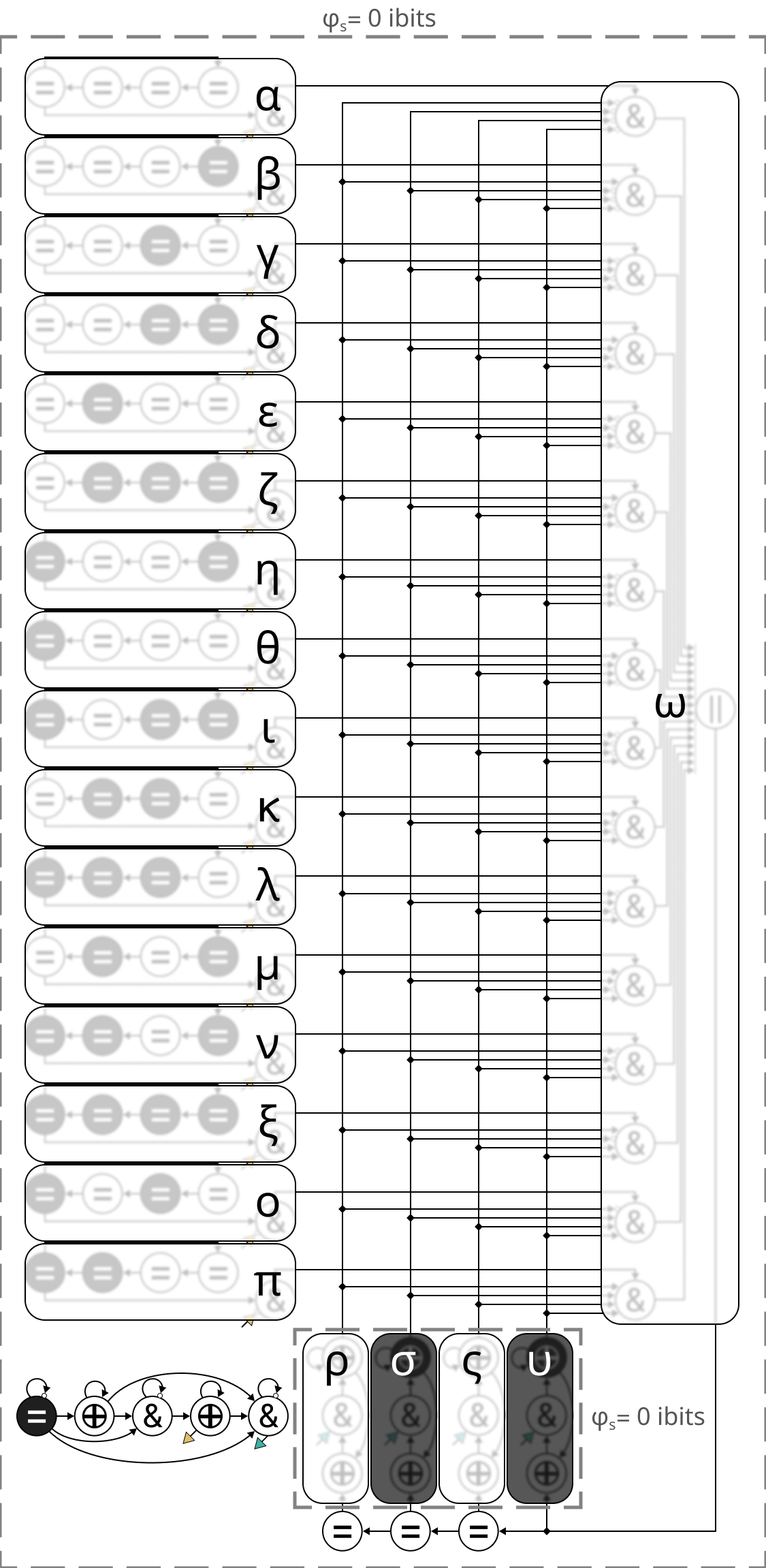

Figure 1: A target system for simulation: the system PQRS, with its deterministic state transitions and its maximally irreducible complex.

Complex Identification and Cause-Effect Structures

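Under IIT's postulates, a set of units constitutes a complex if its system integrated information (φs) is maximal compared with all overlapping candidate sets. The complex's cause-effect structure is then unfolded. First its distinctions (the irreducible causes and effects specified by subsets of its units) are identified; then the relations among those distinctions are evaluated. The irreducibility of this cause-effect structure, quantified as Φ, determines the system's capacity for consciousness.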
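Computing φs (system integrated information) requires the full IIT machinery, but the core idea of irreducibility can be illustrated with a much weaker test. The sketch below assumes deterministic binary units and hypothetical update rules. It searches for a directional cut that makes no difference: a subset of units whose next state never depends on the rest of the system. If such a cut exists, severing the connections from the rest to that subset leaves the TPM unchanged, so the candidate system is reducible. Finding no such cut does not by itself show that φs is positive. The names `coupled`, `split`, `depends_on`, and `find_null_cut` are hypothetical.

```python
from itertools import combinations, product

# Hypothetical stand-in rules (not the paper's PQRS): the wiring forms one
# recurrent loop, so every subset receives input from the rest.
def coupled(state):
    p, q, r, s = state
    return (s, p ^ s, p & q, q | r)

# Hypothetical reducible system: two independent pairs that swap values.
def split(state):
    a, b, c, d = state
    return (b, a, d, c)

def depends_on(update_fn, n, targets, sources):
    """True if flipping a single source unit, in some state, changes the next
    state of some target unit. Single flips suffice because any two source
    configurations are linked by a chain of single flips."""
    for state in product((0, 1), repeat=n):
        base = update_fn(state)
        for u in sources:
            flipped = list(state)
            flipped[u] ^= 1
            nxt = update_fn(tuple(flipped))
            if any(nxt[t] != base[t] for t in targets):
                return True
    return False

def find_null_cut(update_fn, n):
    """Return (sources, targets) for a directional cut that changes nothing,
    or None if every such cut severs a real dependency."""
    units = range(n)
    for k in range(1, n):
        for part in combinations(units, k):
            rest = tuple(u for u in units if u not in part)
            if not depends_on(update_fn, n, part, rest):
                return rest, part
    return None

print(find_null_cut(coupled, 4))  # None
print(find_null_cut(split, 4))    # ((2, 3), (0, 1))
```

The first call finds no free cut, because every subset of the coupled system is influenced by its complement. The second finds that cutting {2, 3} → {0, 1} changes nothing, so the split system is reducible.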

Figure 2: A simple computer simulating PQRS, illustrating the contrast between functional equivalence and cause-effect structure.

Functional Equivalence vs. Phenomenal Equivalence

Theoretical Exploration

The paper demonstrates that computational systems can be functionally equivalent to other systems without replicating their phenomenal experiences. By applying IIT to a basic stored-program computer simulating a deterministic system (PQRS), it shows that although the computer replicates the target system's input-output behavior, it fragments into multiple trivial complexes.
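Functional equivalence of this kind can be illustrated with a toy clocked machine. The sketch assumes Python and hypothetical update rules standing in for PQRS. The hypothetical `SequentialSimulator` evaluates one unit's rule per clock tick, reading from a frozen buffer, and commits the results every four ticks. Its macro-level trajectory therefore matches the target's for every initial state, even though its internal organization (buffer, register file, program counter) is entirely different. This is a drastic simplification of a stored-program computer, not the circuit analyzed in the paper.

```python
from itertools import product

def target_step(state):
    # Hypothetical stand-in for PQRS (not the paper's mechanisms).
    p, q, r, s = state
    return (s, p ^ s, p & q, q | r)

# The same mechanisms split per unit, so a sequential machine can run one per tick.
UNIT_RULES = (
    lambda x: x[3],
    lambda x: x[0] ^ x[3],
    lambda x: x[0] & x[1],
    lambda x: x[1] | x[2],
)

class SequentialSimulator:
    """Toy clocked machine: a frozen input buffer, an output register file,
    and a program counter selecting which unit rule runs on each tick."""

    def __init__(self, state):
        self.buffer = list(state)
        self.registers = list(state)
        self.pc = 0

    def tick(self):
        # Compute one unit's next value from the frozen buffer.
        self.registers[self.pc] = UNIT_RULES[self.pc](self.buffer)
        self.pc += 1
        if self.pc == len(UNIT_RULES):
            self.buffer = list(self.registers)  # commit one simulated time step
            self.pc = 0

    def simulated_state(self):
        return tuple(self.buffer)

def functionally_equivalent(n_steps=5):
    """Check that every len(UNIT_RULES) ticks reproduce one target step."""
    for init in product((0, 1), repeat=4):
        sim = SequentialSimulator(init)
        expected = init
        for _step in range(n_steps):
            for _tick in range(len(UNIT_RULES)):
                sim.tick()
            expected = target_step(expected)
            if sim.simulated_state() != expected:
                return False
    return True

print(functionally_equivalent())  # True
```

The equivalence holds only at the level of the committed buffer, sampled every four ticks. Between commits, the machine's physical state does not correspond to any PQRS state.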

Figure 3: The fragmentation of the computer's cause-effect structures when simulating PQRS.

Dissociation Mechanisms

The dissociation between function and phenomenology results from the modular and feedforward architecture common in computers. Such structures lack the integration IIT requires for a single large complex capable of supporting consciousness. Even when feedback connections are introduced, the system as a whole remains reducible.
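One consequence of IIT's directional cuts can be checked on a connectivity graph alone. If a candidate set of units is not strongly connected, some cut between its parts removes no actual influence, so its system integrated information (φs) is zero. Any complex must therefore lie within a single strongly connected component (SCC). The sketch below assumes Python and two hypothetical wiring diagrams: a feedforward register pipeline whose registers latch to themselves, and a recurrent four-unit system. It uses Kosaraju's algorithm to find SCCs. This gives only an upper bound on complex size, not φs itself, and it does not reproduce the paper's analysis.

```python
from collections import defaultdict

def strongly_connected_components(edges, nodes):
    """Kosaraju's algorithm (recursive; fine for small illustrative graphs)."""
    graph, reverse = defaultdict(list), defaultdict(list)
    for u, v in edges:
        graph[u].append(v)
        reverse[v].append(u)

    order, seen = [], set()
    def dfs_forward(u):
        seen.add(u)
        for v in graph[u]:
            if v not in seen:
                dfs_forward(v)
        order.append(u)  # post-order: finished nodes
    for u in nodes:
        if u not in seen:
            dfs_forward(u)

    components, assigned = [], set()
    def dfs_reverse(u, comp):
        assigned.add(u)
        comp.append(u)
        for v in reverse[u]:
            if v not in assigned:
                dfs_reverse(v, comp)
    for u in reversed(order):
        if u not in assigned:
            comp = []
            dfs_reverse(u, comp)
            components.append(sorted(comp))
    return components

def largest_candidate_size(edges, nodes):
    # Upper bound on the size of any complex under directional cuts.
    return max(len(c) for c in strongly_connected_components(edges, nodes))

# Hypothetical feedforward pipeline; self-loops model registers latching their value.
pipeline_nodes = ["in", "r1", "r2", "r3", "out"]
pipeline_edges = [("in", "r1"), ("r1", "r2"), ("r2", "r3"), ("r3", "out"),
                  ("r1", "r1"), ("r2", "r2"), ("r3", "r3")]

# Hypothetical recurrent target (same wiring as a P, Q, R, S stand-in).
recurrent_nodes = ["P", "Q", "R", "S"]
recurrent_edges = [("S", "P"), ("P", "Q"), ("S", "Q"), ("P", "R"),
                   ("Q", "R"), ("Q", "S"), ("R", "S")]

print(largest_candidate_size(pipeline_edges, pipeline_nodes))    # 1
print(largest_candidate_size(recurrent_edges, recurrent_nodes))  # 4
```

The pipeline's self-latches are feedback, yet they create only single-unit loops. In a very simplified way, this mirrors the point that local feedback does not by itself produce a large integrated whole.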

Implications and Extensions

Induction to General Architectures

The findings suggest a general principle that applies across computing architectures: replicating cognitive functions does not guarantee replicating consciousness. This challenges the computational-functionalist claim that simulating neuronal interactions, even at a microphysical level of detail, would be sufficient for consciousness.

Figure 4: Inductive extension to larger systems illustrating the linear growth in cause-effect structure complexity.
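The inductive step can be illustrated by scaling two hypothetical wiring diagrams. The sketch assumes a directed ring as the recurrent target and a self-latching pipeline as the simulator's datapath. For each size, it computes the largest set of mutually reachable units (a strongly connected component), which bounds the size of any possible complex under IIT's directional cuts. Components are found by brute-force reachability rather than a linear-time algorithm, for brevity. The functions `ring`, `pipeline`, and `largest_mutually_reachable_set` are illustrative names.

```python
def reachable(adj, start):
    # Iterative DFS returning every node reachable from start (including start).
    seen, stack = {start}, [start]
    while stack:
        u = stack.pop()
        for v in adj.get(u, ()):
            if v not in seen:
                seen.add(v)
                stack.append(v)
    return seen

def largest_mutually_reachable_set(adj):
    nodes = set(adj) | {v for vs in adj.values() for v in vs}
    reach = {u: reachable(adj, u) for u in nodes}
    # u's component = nodes reachable from u that can also reach u.
    return max(len({v for v in reach[u] if u in reach[v]}) for u in nodes)

def ring(n):
    # Hypothetical target: unit i drives unit (i + 1) mod n, closing one loop.
    return {i: [(i + 1) % n] for i in range(n)}

def pipeline(n):
    # Hypothetical simulator datapath: stage i feeds stage i + 1 and latches itself.
    return {i: ([i + 1] if i + 1 < n else []) + [i] for i in range(n)}

for n in (4, 8, 16):
    print(n, largest_mutually_reachable_set(ring(n)),
          largest_mutually_reachable_set(pipeline(n)))
# 4 4 1
# 8 8 1
# 16 16 1
```

As both systems grow, the ring's candidate set grows with it, while the pipeline's stays at a single unit.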

Potential for Conscious Artificial Systems

While standard architectures may be limited, the paper opens discussion of neuromorphic or quantum computational systems whose structural organization could support high integrated information. Such systems might bridge the gap between AI capabilities and consciousness, broadening the range of artificial systems that could, under IIT, be conscious.

Conclusion

"Dissociating Artificial Intelligence from Artificial Consciousness" presents a compelling IIT-based argument against the assumption that AI systems, by virtue of their computational capabilities, will come to possess consciousness. By dissociating function from being, the paper questions whether computational replication suffices for consciousness. It points future AI research toward the intrinsic causal structures that IIT identifies as essential for subjective experience.

Figure 5: Double dissociation demonstrating independence between consciousness and intelligence.