- The paper introduces a transformer-pointer network that efficiently predicts improving columns for parallel machine scheduling.

- It replaces the computationally heavy dynamic programming pricing step with a neural network, achieving up to an 80% improvement in objective value on large instances within a limited time budget.

- Empirical results demonstrate significant scalability and robustness across varied distributions, reducing computation time by 45% on medium-sized problems.

Introduction

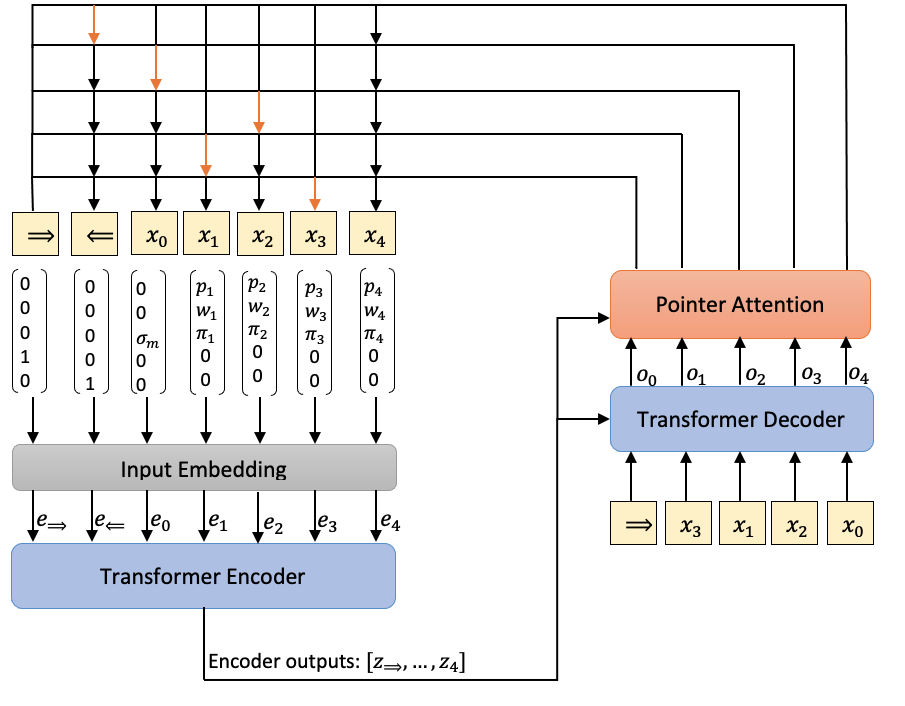

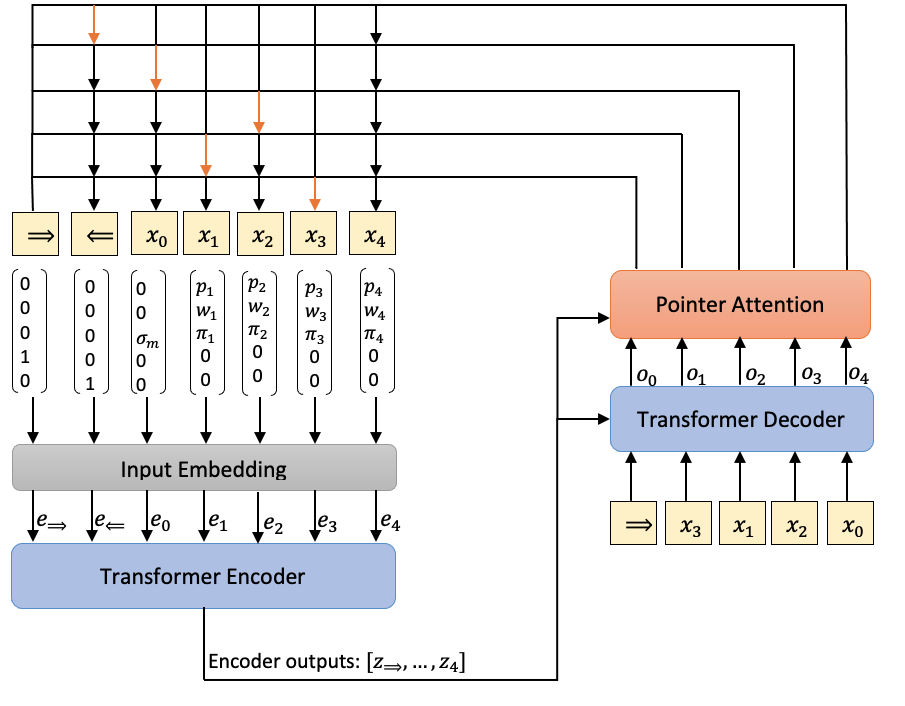

The paper "All You Need is an Improving Column: Enhancing Column Generation for Parallel Machine Scheduling via Transformers" (2410.15601) introduces a neural network approach for speeding up column generation in parallel machine scheduling. The core idea is to use a transformer-based encoder-decoder model with a pointer mechanism to predict job sequences with negative reduced cost, that is, columns that can improve the current solution of the master problem.

Column Generation and Machine Scheduling

Parallel machine scheduling poses significant challenges, particularly in managing job sequences to minimize total weighted completion time. Traditionally, solutions have rested on dynamic programming approaches, which, while exact, become computationally onerous with larger problem sizes due to their combinatorial nature.
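To make the objective concrete, the following minimal sketch computes the total weighted completion time of a given assignment of job sequences to machines. It assumes identical machines with no release dates or setup times, which the text above does not specify; the function name and the toy data are illustrative only.

```python
from typing import Dict, List


def total_weighted_completion_time(
    schedules: List[List[int]],
    processing: Dict[int, float],
    weight: Dict[int, float],
) -> float:
    """Sum of w_j * C_j over all jobs, given one job sequence per machine.

    Assumption: machines are identical and every job is available at time 0.
    """
    total = 0.0
    for sequence in schedules:
        clock = 0.0  # each machine starts at time 0
        for job in sequence:
            clock += processing[job]      # completion time C_j of this job
            total += weight[job] * clock  # contribution w_j * C_j
    return total


# Toy data (hypothetical): three jobs on two machines.
processing = {1: 2.0, 2: 1.0, 3: 3.0}
weight = {1: 1.0, 2: 2.0, 3: 1.0}

# Machine A runs job 2 then job 1; machine B runs job 3.
print(total_weighted_completion_time([[2, 1], [3]], processing, weight))  # 8.0
```

The number of ways to split and order jobs across machines grows combinatorially, which is why exact methods become expensive as instances grow.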

Column generation is a well-established method for such scheduling problems. It solves a linear relaxation iteratively, adding improving columns at each step. In every iteration, the pricing subproblem must either identify a column that can improve the objective function or prove that none exists, in which case the process terminates. Solving these pricing subproblems with dynamic programming, as is typical, introduces significant computational overhead.
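As an illustration of what "improving" means, the sketch below evaluates the reduced cost of a single-machine job sequence. It assumes a set-partitioning style master problem with one dual value per job and one dual value for the machine-count constraint; this formulation, the sign convention, and all names are assumptions for illustration rather than details taken from the paper.

```python
from typing import Dict, Sequence


def reduced_cost(
    sequence: Sequence[int],
    processing: Dict[int, float],
    weight: Dict[int, float],
    job_duals: Dict[int, float],
    machine_dual: float,
) -> float:
    """Reduced cost of a column (one machine's job sequence).

    Assumed form: cost of the sequence minus the duals of the jobs it covers,
    minus the dual of the machine-count constraint.
    """
    clock = 0.0
    cost = 0.0
    for job in sequence:
        clock += processing[job]
        cost += weight[job] * clock  # weighted completion time of the column
    return cost - sum(job_duals[j] for j in sequence) - machine_dual


# Toy data (hypothetical duals from a restricted master problem).
processing = {1: 2.0, 2: 1.0}
weight = {1: 1.0, 2: 2.0}
job_duals = {1: 4.0, 2: 3.0}
machine_dual = -1.0

rc = reduced_cost([2, 1], processing, weight, job_duals, machine_dual)
print(rc, "improving" if rc < 0 else "not improving")  # -1.0 improving
```

In a minimization master problem, a column with negative reduced cost can lower the objective when added, while the absence of any such column certifies that the current relaxation is optimal.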

Neural Network Integration

The core contribution is to replace the computationally expensive dynamic programming step with a transformer-pointer network. The network approximates the solution of the pricing subproblem and quickly proposes job sequences that are likely to have negative reduced cost, without giving up the optimality guarantee of the column generation framework.
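One way a learned pricer can coexist with an optimality guarantee is to fall back on exact pricing whenever the network fails to produce an improving column. The sketch below shows that control flow under this assumption; it is not the paper's implementation, and `solve_master`, `price_with_nn`, and `price_with_dp` are hypothetical callables supplied by the caller.

```python
from typing import Callable, List, Optional, Tuple

Column = Tuple[int, ...]  # a job sequence for one machine


def column_generation(
    solve_master: Callable[[List[Column]], object],
    price_with_nn: Callable[[object], Optional[Column]],
    price_with_dp: Callable[[object], Optional[Column]],
    initial_columns: List[Column],
    max_iters: int = 1000,
) -> List[Column]:
    """Grow the column pool until exact pricing finds no improving column.

    Assumption: each pricer returns a column with verified negative reduced
    cost, or None if it found none.
    """
    columns = list(initial_columns)
    for _ in range(max_iters):
        duals = solve_master(columns)      # solve the restricted master LP
        column = price_with_nn(duals)      # fast heuristic proposal
        if column is None:
            column = price_with_dp(duals)  # exact fallback
        if column is None:
            break                          # exact pricer certifies optimality
        columns.append(column)
    return columns


# Toy run with scripted pricers standing in for the real models.
nn_answers = iter([(3, 1, 2), None, None])
dp_answers = iter([(4,), None])
pool = column_generation(
    solve_master=lambda cols: None,  # the stubs below ignore the duals
    price_with_nn=lambda duals: next(nn_answers),
    price_with_dp=lambda duals: next(dp_answers),
    initial_columns=[(1,), (2,)],
)
print(pool)  # [(1,), (2,), (3, 1, 2), (4,)]
```

In this arrangement the loop ends only when the exact pricer returns nothing, so a heuristic miss costs time but never correctness.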

Transformers, with their encoder-decoder design and multi-head self-attention, are widely used for sequence modeling. In this work, they are adapted to process sequences of job and machine parameters. A notable adaptation is the addition of a pointer mechanism, which lets the decoder select elements of its own input and thereby output a job sequence, as pointer networks do for combinatorial problems such as the Traveling Salesman Problem (TSP).

Figure 1: Transformer-pointer network with input $X=\{x_0,x_1,x_2,x_3,x_4\}$ and output $\{\Rightarrow,3,1,2,0,\Leftarrow\}$, which yields the output schedule $[3,1,2]$. The elements $\Rightarrow$ and $\Leftarrow$ represent the beginning and end of the schedule, respectively, and $0$ is the machine element. Note that job $4$, represented by $x_4$, is not selected in the partial schedule.
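The decoding pattern in the figure can be sketched as a greedy pointer loop with masking: at each step the decoder scores every input element, already-selected jobs are masked out, and decoding stops once the machine element is chosen. The scores here come from a fixed table rather than a trained network, and the stopping rule and all names are assumptions made for illustration.

```python
import math
from typing import Callable, List, Sequence


def greedy_pointer_decode(
    step_scores: Callable[[List[int]], Sequence[float]],
    num_elements: int,
    machine_index: int = 0,
) -> List[int]:
    """Greedily point at the best unused element until the machine is chosen.

    Assumption: pointing at the machine element closes the schedule, and
    scores are finite, so the loop always terminates.
    """
    chosen: List[int] = []
    used = [False] * num_elements
    while True:
        scores = step_scores(chosen)
        # Mask jobs that are already scheduled so they cannot be picked again.
        masked = [-math.inf if used[i] else s for i, s in enumerate(scores)]
        pick = max(range(num_elements), key=masked.__getitem__)
        if pick == machine_index:
            return chosen
        chosen.append(pick)
        used[pick] = True


# Hypothetical per-step scores over the inputs x0..x4 (x0 is the machine).
table = [
    [0.1, 0.2, 0.3, 0.9, 0.4],  # step 0: job 3 scores highest
    [0.1, 0.8, 0.3, 0.9, 0.4],  # step 1: job 3 is masked, job 1 wins
    [0.1, 0.8, 0.7, 0.9, 0.4],  # step 2: jobs 3 and 1 are masked, job 2 wins
    [0.9, 0.8, 0.7, 0.9, 0.4],  # step 3: the machine element ends decoding
]
schedule = greedy_pointer_decode(lambda chosen: table[len(chosen)], 5)
print(schedule)  # [3, 1, 2]
```

Because the machine element is never masked, the model can end the sequence early, which is how job 4 is left out of the partial schedule in this toy run.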

Empirical Evaluation

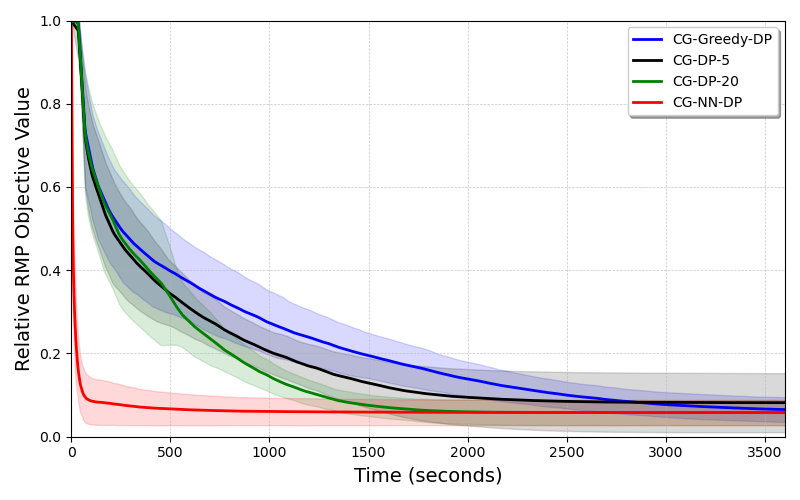

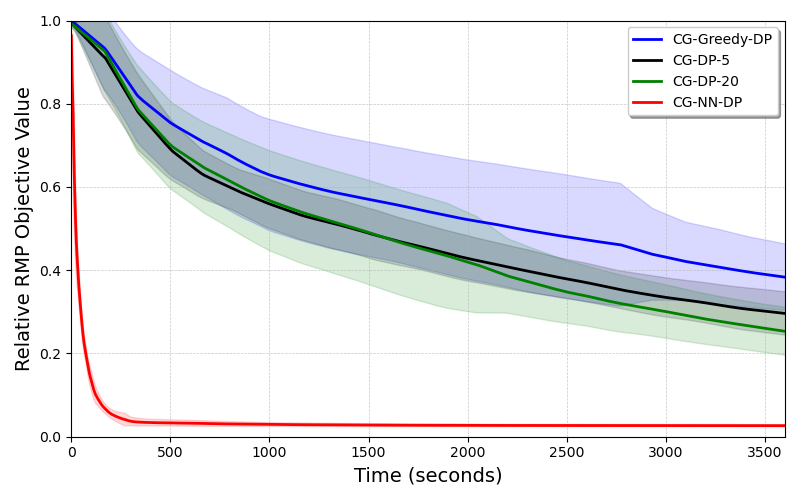

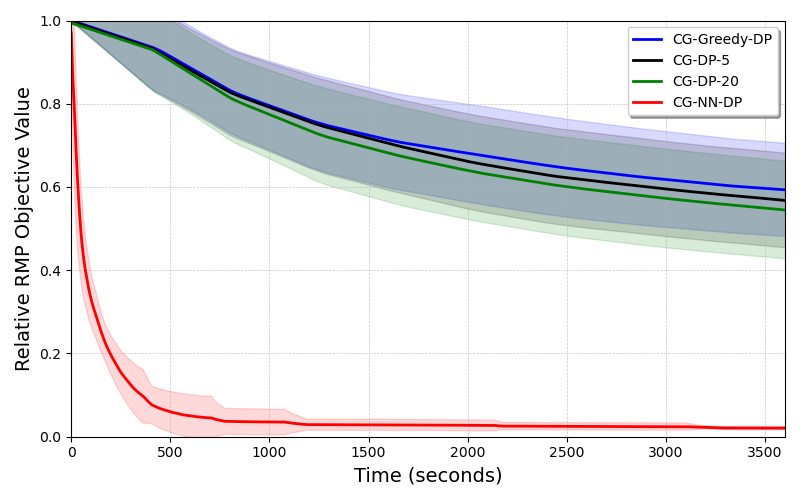

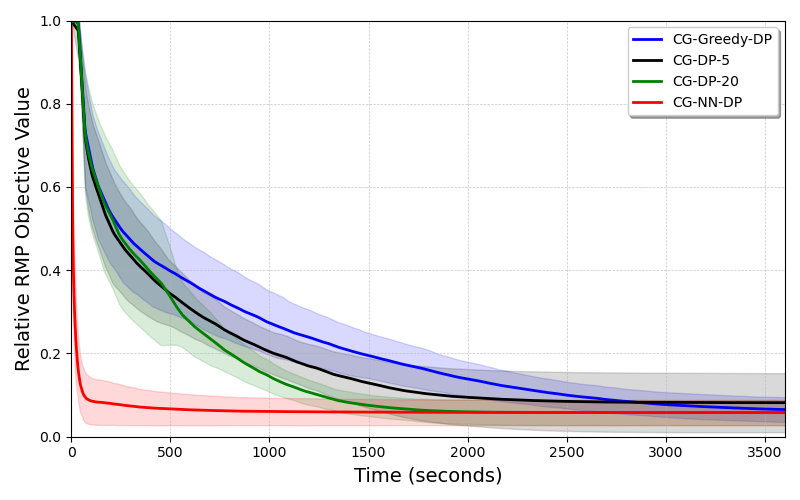

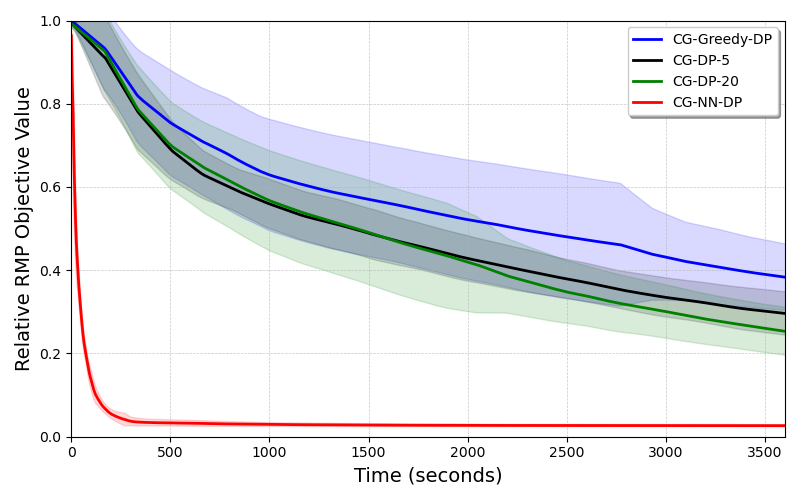

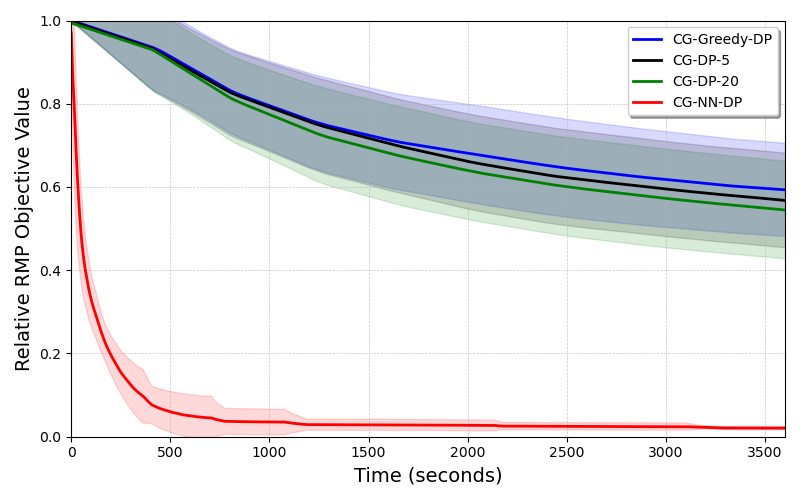

The proposed CG-NN-DP method, which combines the neural network with dynamic programming inside column generation, reduces computation time by 45% on average for medium-sized instances compared to the dynamic programming approach alone. It also maintains the accuracy and robustness expected of column generation methods, and it remains useful on instance distributions and larger instance sizes that were not seen during training.

On instances with more jobs and machines, the CG-NN-DP approach scales markedly better, achieving up to an 80% improvement in objective value within a limited time budget. The model also generalizes beyond its training distribution, handling instances generated from both uniform and Weibull distributions.

Figure 2: Convergence plots of different CG-DP approaches and CG-NN-DP for test instances 8M60N, 16M80N, and 20M100N generated from a uniform distribution. The solid curves show the mean of the relative objective values over 10 instances, and the shaded areas show ±1 standard deviation.

Conclusion

The integration of transformers, and specifically the pointer attention mechanism, presents a substantive advancement in solving parallel machine scheduling problems using column generation. This approach not only preserves optimality but also enhances efficiency and scalability, setting a baseline for future explorations into machine learning's role in combinatorial optimization. Future research avenues can explore transfer learning to broaden applicability across scheduling tasks or introduce reinforcement learning paradigms to mitigate exposure bias in the learning process.

In sum, this work shows how a modern machine learning architecture can accelerate a classical operations research method, improving computational efficiency on complex scheduling problems while keeping the guarantees of column generation.