- The paper introduces GOGH, a framework that uses neural networks for initial throughput estimation and dynamic GPU scheduling in heterogeneous clusters.

- It integrates an ILP-based optimizer with real-time performance feedback to minimize energy consumption while ensuring throughput guarantees.

- Experimental results show that pairing an RNN for initial estimation with a Feedforward model for refinement significantly improves scheduling accuracy and resource utilization.

GOGH: Efficient GPU Orchestration in Heterogeneous Clusters

Introduction

The paper "GOGH: Correlation-Guided Orchestration of GPUs in Heterogeneous Clusters" (2510.15652) proposes a framework named GOGH to address the challenge of efficiently managing computational workloads in heterogeneous clusters. It provides a solution for dynamic resource allocation by leveraging historical data to inform scheduling decisions and optimize both performance and energy efficiency.

Framework Overview

GOGH orchestrates machine learning jobs across heterogeneous GPU clusters using throughput predictions from neural networks. Its scheduling decisions account for how throughput correlates across jobs and GPU types.

Architectural Design

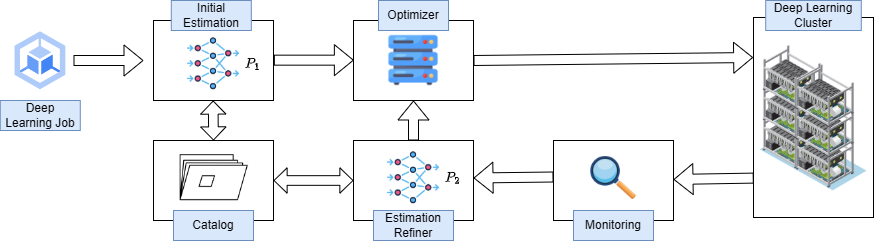

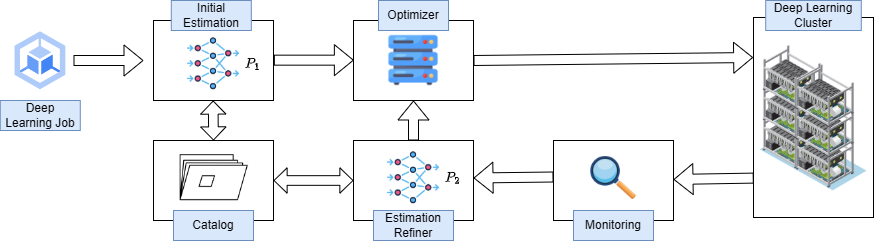

GOGH's architecture consists of three core modules: initial throughput estimation, optimization of job assignments, and refinement of throughput predictions.

Figure 1: The architecture of GOGH.

Initial Throughput Estimation: This module employs a neural network, referred to as P1, which predicts the throughput of a newly arrived job based on historical performance data of similar jobs. The estimates are refined iteratively using real-time measurements captured during the job's execution.

Optimizer: The optimization module formulates GPU allocation as an integer linear programming (ILP) problem. It assigns jobs to GPUs so that overall system energy consumption is minimized while each job's throughput guarantee is met.

Estimation Refinement: Real performance data gathered during job execution is utilized by another neural network, P2, to update and refine throughput estimates. This enables continuous improvement in scheduling accuracy.
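The estimate-then-refine loop described above can be illustrated with a minimal sketch. It is not the paper's method: a simple exponential moving average stands in for the P2 neural network, and the function name `refine_estimate`, the blending weight `alpha`, and all numbers are assumptions made for illustration.

```python
def refine_estimate(prior, measurements, alpha=0.5):
    """Blend a prior throughput estimate with observed samples, one at a time.

    An exponential moving average is used here as a stand-in for P2;
    the paper uses a neural network for this step.
    """
    estimate = prior
    history = [estimate]
    for observed in measurements:
        # Move the estimate a fraction alpha toward each new observation.
        estimate = (1 - alpha) * estimate + alpha * observed
        history.append(estimate)
    return history


# Hypothetical numbers: a P1-style prior of 100 samples/s for a job
# whose measured throughput turns out to be 80 samples/s.
trajectory = refine_estimate(prior=100.0, measurements=[80.0, 80.0, 80.0])
print(trajectory)
```

Each measurement pulls the estimate closer to the observed rate, which is the role the summary attributes to real-time feedback. A learned model such as P2 could in principle also use information from other jobs and GPU types, which this stand-in ignores.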

The paper introduces several essential notations to define the resource allocation problem formally. These include sets representing servers, GPU types, jobs, and job combinations. The objective is to minimize the total energy consumption while satisfying job-specific and system-wide constraints.

Problem 1 outlines the ILP formulation that guides resource allocation, considering throughput requirements and accelerator capacity constraints.
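To make the shape of such a formulation concrete, here is a toy sketch, assuming a much simpler setting than Problem 1: each job runs on exactly one GPU, servers and job combinations are ignored, and the optimum is found by exhaustive enumeration rather than an ILP solver. All names and numbers (`GPU_POWER`, `GPU_CAPACITY`, `EST_THROUGHPUT`, `MIN_THROUGHPUT`, `solve`) are hypothetical and not taken from the paper.

```python
from itertools import product

# Hypothetical inputs for illustration only.
GPU_POWER = {"A": 300.0, "B": 150.0}   # watts drawn per GPU of each type
GPU_CAPACITY = {"A": 1, "B": 2}        # number of GPUs available per type
EST_THROUGHPUT = {                     # estimated samples/s per (job, GPU type)
    ("j1", "A"): 120.0, ("j1", "B"): 60.0,
    ("j2", "A"): 90.0, ("j2", "B"): 70.0,
}
MIN_THROUGHPUT = {"j1": 100.0, "j2": 50.0}  # required samples/s per job


def solve(jobs, gpu_types):
    """Return (minimum total power, assignment) over all feasible assignments."""
    best_cost, best_assignment = float("inf"), None
    for choice in product(gpu_types, repeat=len(jobs)):
        # Capacity constraint: no GPU type is assigned more jobs than it has GPUs.
        if any(choice.count(g) > GPU_CAPACITY[g] for g in gpu_types):
            continue
        # Throughput constraint: every job meets its required rate.
        if any(EST_THROUGHPUT[(j, g)] < MIN_THROUGHPUT[j]
               for j, g in zip(jobs, choice)):
            continue
        # Objective: total power of the GPUs in use.
        cost = sum(GPU_POWER[g] for g in choice)
        if cost < best_cost:
            best_cost, best_assignment = cost, dict(zip(jobs, choice))
    return best_cost, best_assignment


best_cost, best_assignment = solve(["j1", "j2"], ["A", "B"])
print(best_cost, best_assignment)
```

In this instance job `j1` can only meet its requirement on type `A`, so the cheapest feasible plan places `j2` on the lower-power type `B`. Enumeration grows exponentially with the number of jobs, so it only serves to show the objective and constraints; a real deployment would hand the same model to an ILP solver.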

Evaluation

GOGH was evaluated on a dataset covering a variety of machine learning workloads on heterogeneous GPU configurations. The experiments assess both throughput prediction accuracy and job scheduling efficiency.

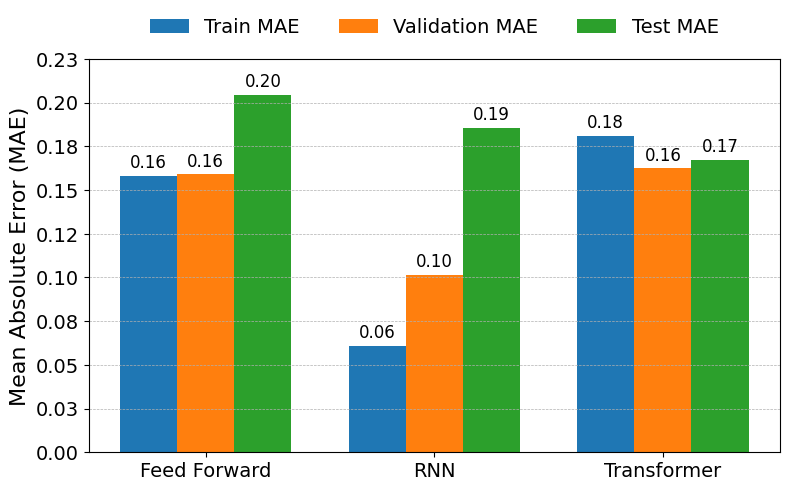

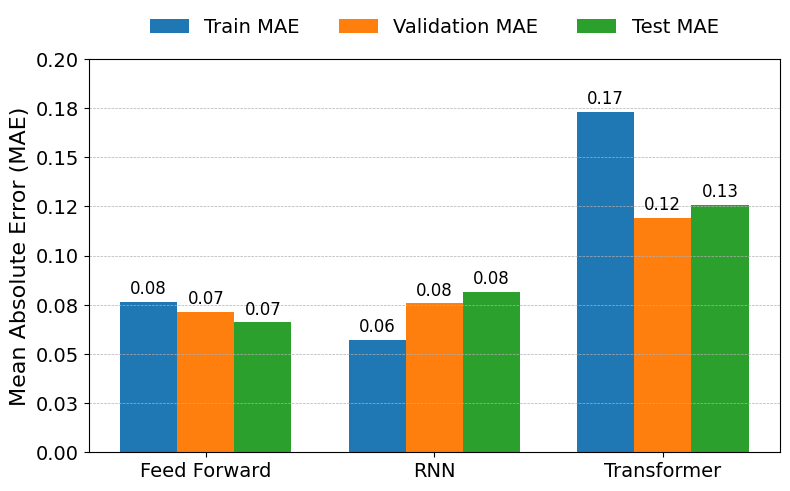

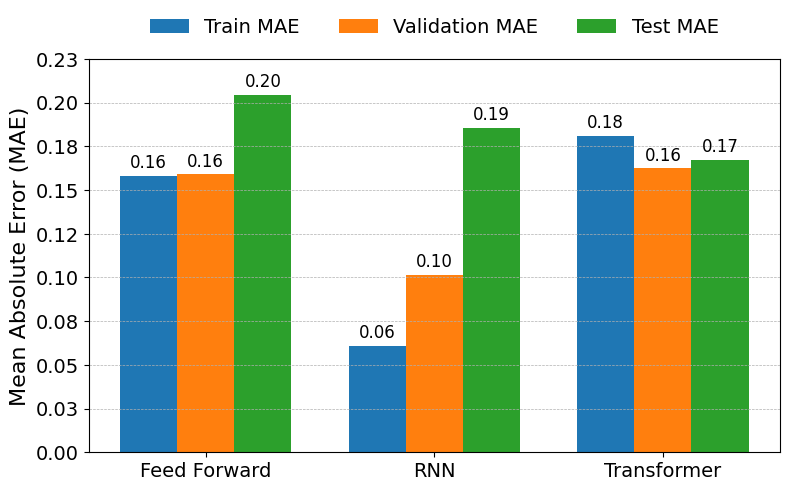

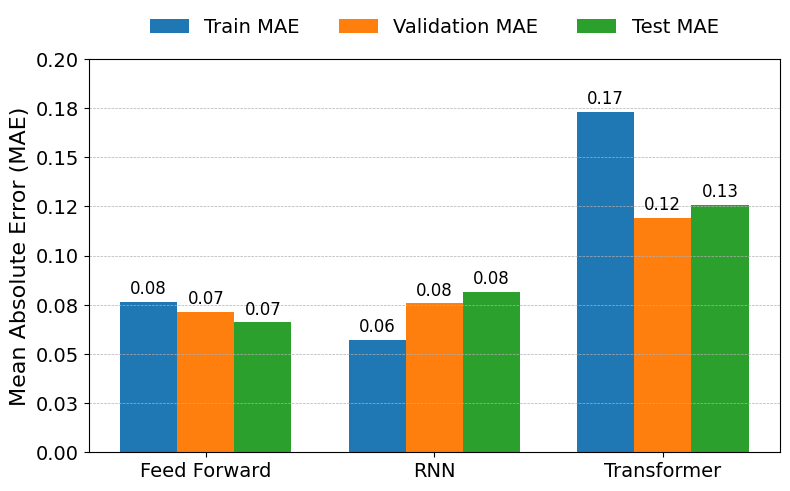

Neural Network Models: Three neural network architectures—Feedforward (FF), Recurrent Neural Network (RNN), and Transformer—were employed to analyze their impact on prediction accuracy.

Figure 2: Initial NN (P1).

Performance Results: In the initial estimation phase (P1), the RNN achieved a lower mean absolute error (MAE) than the Transformer and FF models. In the refinement phase (P2), the Feedforward model was consistently more accurate than the RNN and Transformer.
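For reference, MAE is the average absolute gap between predicted and measured throughput. The sketch below computes it with the standard definition; the helper name `mean_absolute_error` and the sample values are hypothetical and do not reproduce any result from the paper.

```python
def mean_absolute_error(predicted, actual):
    """Average of |prediction - measurement| over paired samples."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


# Hypothetical throughput values in samples/s.
predicted = [100.0, 80.0, 60.0]
actual = [90.0, 85.0, 60.0]
mae = mean_absolute_error(predicted, actual)
print(mae)
```

A lower value means the predictions sit closer to the measured throughput on average, which is the sense in which the models are compared here.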

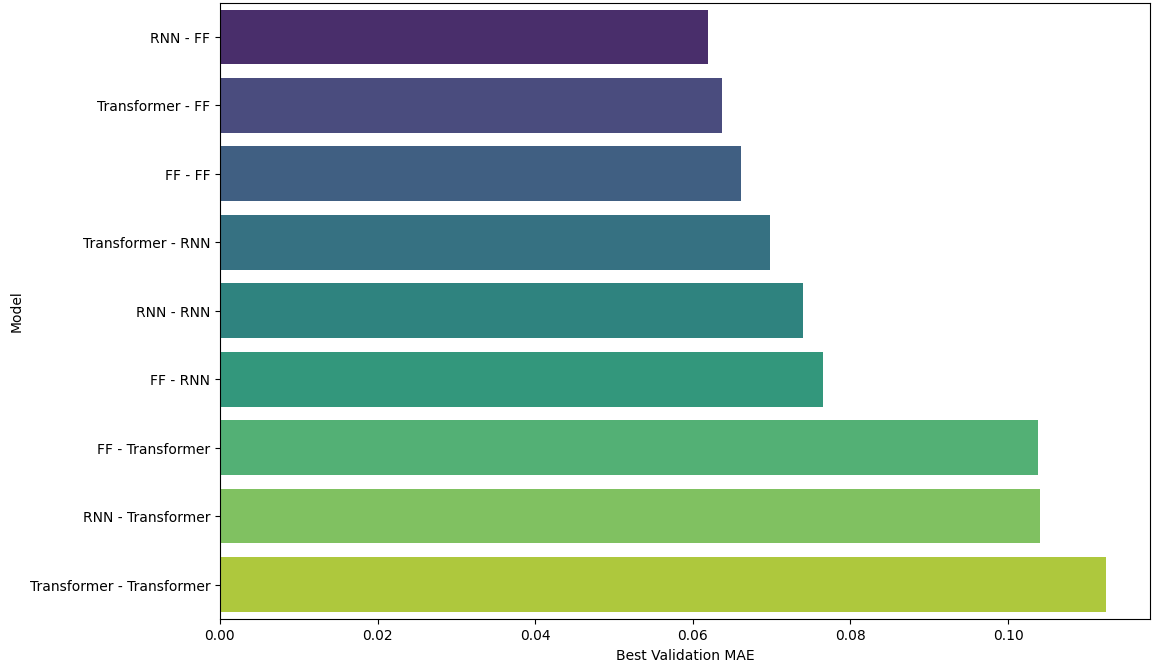

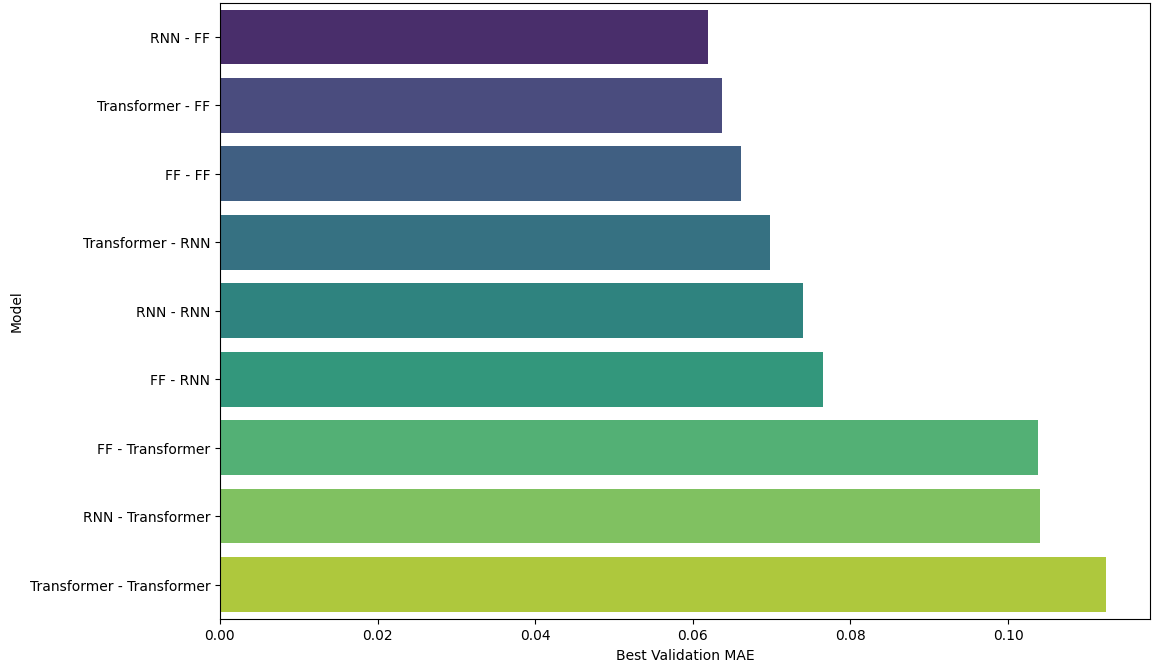

Figure 3: Combined validation results of P1-P2 model pairs.

End-to-End Scheduling: The combination of RNN for initial estimation and Feedforward for refinement resulted in the most accurate scheduling predictions, lowering energy consumption and enhancing resource utilization efficiency compared to alternative model pairings.

The paper situates GOGH within the broader context of recent literature on managing heterogeneous hardware. It reviews approaches for heterogeneity-aware scheduling, resource sharing, and elastic training platforms, among others.

Key contributions from works such as Gavel [narayanan2020heterogeneity], Pollux [qiao2021pollux], and ElasticFlow [gu2023elasticflow] are contrasted with GOGH's approach, particularly in terms of adaptive scheduling and dynamic resource management.

Conclusion

GOGH is a framework for GPU orchestration in heterogeneous clusters that uses neural networks to make data-driven scheduling decisions. It minimizes energy consumption while meeting throughput guarantees, addressing the challenges posed by mixed hardware generations. Future work may extend the ILP solution with approximation or reinforcement learning techniques to improve computational efficiency and scalability.

The insights and methodologies provided by GOGH hold substantial potential for deployment in real-world heterogeneous environments, facilitating cost-effective and sustainable machine learning operations.