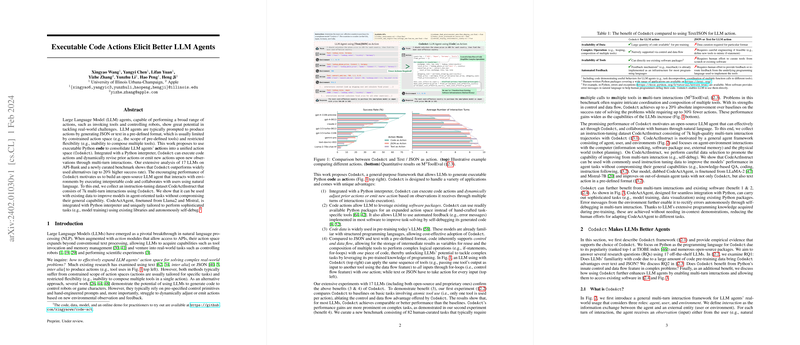

The paper "Executable Code Actions Elicit Better LLM Agents" (Wang et al., 1 Feb 2024) proposes CodeAct, a framework in which LLMs generate executable Python code as actions for interacting with environments. The approach aims to overcome a limitation of traditional methods, which generate actions as text or JSON in a constrained, predefined format and therefore restrict both the action space and flexibility. By integrating with a Python interpreter, CodeAct lets LLM agents execute the generated code, receive execution results or error messages as observations, and dynamically adjust their subsequent actions over multi-turn interactions.

The core idea behind CodeAct is to exploit LLMs' existing familiarity with programming languages, which comes from the large amount of code in their training corpora. Code gives LLMs a more natural and effective way to express complex action sequences. The framework consolidates various types of actions (tool invocation, complex logic, data handling) into a single, unified action space expressed in Python.

The paper highlights several practical advantages of using CodeAct:

- Dynamic Interaction: Multi-turn interaction with an interpreter lets agents respond to observations (such as execution results or error messages) and dynamically modify their plans or code.

- Leveraging Existing Software: Agents can directly utilize readily available Python packages (e.g., Pandas, Scikit-Learn, Matplotlib) for a vastly expanded action space, rather than being limited to a predefined set of tools.

- Automated Feedback and Self-Debugging: Standard programming language feedback mechanisms, like error tracebacks, can be directly used by the agent as observations to self-debug its generated code.

- Control and Data Flow: Python's native support for control flow (if-statements, for-loops) and data flow (variable assignment, passing outputs as inputs) allows agents to compose multiple operations into a single, coherent piece of code, enabling more sophisticated and efficient problem-solving.
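The control-flow and data-flow advantage can be illustrated with a minimal sketch contrasting the two action styles. Everything here is a hypothetical illustration rather than the paper's setup: the tools `lookup_price` and `apply_discount`, the price table, and the `run_code_action` helper are made up, and the in-process `exec` stands in for a real interpreter environment with no sandboxing.

```python
# Hypothetical tools exposed to the agent (illustrative only, not from the paper).
PRICES = {"laptop": 1200.0, "mouse": 25.0, "monitor": 300.0}


def lookup_price(item: str) -> float:
    """Hypothetical tool: return the unit price of an item."""
    return PRICES[item]


def apply_discount(amount: float, percent: float) -> float:
    """Hypothetical tool: reduce an amount by a percentage."""
    return amount * (1 - percent / 100)


# JSON-style actions: each turn carries exactly one tool call with literal
# arguments, so fetching three prices alone takes three round trips.
json_actions = [
    {"tool": "lookup_price", "args": {"item": "laptop"}},
    {"tool": "lookup_price", "args": {"item": "mouse"}},
    {"tool": "lookup_price", "args": {"item": "monitor"}},
]

# A CodeAct-style action: one block that loops over items (control flow),
# passes each tool output into the next step via variables (data flow),
# and aggregates the result in a single turn.
code_action = """
total = 0.0
for item in ["laptop", "mouse", "monitor"]:
    price = lookup_price(item)
    if price > 100:
        price = apply_discount(price, 10)
    total += price
print(f"total = {total:.2f}")
"""


def run_code_action(code: str, tools: dict) -> dict:
    """Execute one code action with the given tools in scope; return its namespace."""
    namespace = dict(tools)
    exec(code, namespace)  # no sandboxing: illustration only
    return namespace


ns = run_code_action(
    code_action, {"lookup_price": lookup_price, "apply_discount": apply_discount}
)  # prints "total = 1375.00"
```

The JSON list covers only the three lookups; the conditional discount and the final sum would need further turns, or tools built specifically for them, whereas the code action expresses the whole computation at once.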

To demonstrate the benefits of CodeAct empirically, the authors compared it against text and JSON action formats across 17 different LLMs. On API-Bank (Li et al., 2023), a benchmark for atomic tool use, CodeAct performed comparably or better, especially for open-source models, suggesting that LLMs are inherently proficient with code-like structures. To evaluate complex tasks that require multiple tool calls and intricate logic, the authors introduced a new benchmark, M³ToolEval. On M³ToolEval, CodeAct achieved significantly higher success rates (up to 20% absolute improvement for the best models) and required fewer interaction turns than the text and JSON formats, directly showcasing the advantage of control and data flow.

Motivated by these results, the paper introduces CodeActAgent, an open-source LLM agent fine-tuned specifically for the CodeAct framework. To train CodeActAgent, the authors curated a new instruction-tuning dataset, CodeActInstruct. It comprises 7,000 multi-turn interaction trajectories generated by stronger LLMs (GPT-3.5, Claude, GPT-4) across domains including information seeking (search APIs on HotpotQA; Yang et al., 2018), software package usage (math libraries on MATH and code generation on APPS, both Hendrycks et al., 2021), external memory (tabular reasoning with Pandas and SQLite on WikiTableQuestions; Pasupat and Liang, 2015), and robot planning (ALFWorld; Shridhar et al., 2020). A key step in collecting CodeActInstruct was filtering trajectories to promote the agent's ability to self-improve and self-debug based on environmental observations such as execution errors. CodeActAgent models were fine-tuned from Llama-2 7B (Touvron et al., 2023) and Mistral 7B (Jiang et al., 2023) on a mixture of CodeActInstruct and general conversation data.
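The filtering step can be sketched as a predicate over trajectories. This is a simplified reading, assuming the goal is to keep solved trajectories in which an earlier turn produced an error and a later turn recovered. The `Turn` and `Trajectory` classes, the `filter_trajectories` helper, and the traceback-based error check are all hypothetical and do not reproduce the authors' actual pipeline.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Turn:
    code: str          # the code action the model emitted
    observation: str   # what the interpreter returned


@dataclass
class Trajectory:
    turns: List[Turn] = field(default_factory=list)
    solved: bool = False  # whether the task was ultimately completed


def is_error(turn: Turn) -> bool:
    # Heuristic (assumption): a Python traceback in the observation marks a failed turn.
    return "Traceback (most recent call last)" in turn.observation


def shows_self_debugging(traj: Trajectory) -> bool:
    """True if the task was solved after at least one earlier turn had failed."""
    if not traj.solved or len(traj.turns) < 2:
        return False
    earlier_failure = any(is_error(t) for t in traj.turns[:-1])
    return earlier_failure and not is_error(traj.turns[-1])


def filter_trajectories(trajs: List[Trajectory]) -> List[Trajectory]:
    return [t for t in trajs if shows_self_debugging(t)]


# Toy data: one recovered trajectory, one solved on the first try, one abandoned.
TB = (
    "Traceback (most recent call last):\n"
    '  File "<string>", line 1, in <module>\n'
    "NameError: name 'pd' is not defined"
)
recovered = Trajectory(
    [Turn("pd.read_csv('t.csv')", TB), Turn("import pandas as pd\npd.read_csv('t.csv')", "ok")],
    solved=True,
)
first_try = Trajectory([Turn("print(1 + 1)", "2")], solved=True)
gave_up = Trajectory(
    [Turn("pd.read_csv('t.csv')", TB), Turn("print('give up')", "give up")],
    solved=False,
)

kept = filter_trajectories([recovered, first_try, gave_up])  # keeps only `recovered`
```

Trajectories solved on the first attempt are discarded by this predicate because they contain no error-then-fix pattern for the model to learn from.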

Evaluation showed that CodeActAgent significantly outperformed other open-source models on CodeAct tasks, covering both in-domain and out-of-domain tasks from MINT (Wang et al., 2023) as well as M³ToolEval. The Mistral-based CodeActAgent, in particular, achieved a 12.2% zero-shot success rate on M³ToolEval, outperforming other open-source models and approaching the performance of larger closed-source models. The models also generalized to tasks requiring text-based actions (MiniWoB++ (Kim et al., 2023), ScienceWorld (Wang et al., 2022)) and maintained or improved performance on general LLM benchmarks (MMLU (Hendrycks et al., 2020), HumanEval (Chen et al., 2021), GSM8K (Cobbe et al., 2021), MT-Bench (Zheng et al., 2023)), indicating that training for CodeAct capability does not necessarily degrade general abilities.

From an implementation perspective, CodeAct relies on an interactive Python environment (such as a Jupyter Notebook kernel, as suggested by the prompt examples in the paper's appendix) to execute the generated code. The LLM delimits code blocks with `<execute>` and `</execute>` tags. The environment captures stdout, stderr, and exceptions, which are fed back to the LLM as observations in the next turn. The prompt design allows an optional natural-language thought (`<thought>`) before each action, which aids interpretability and planning. The prompt example also shows that packages can be installed by running `!pip install` inside an execute block.
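The execute-and-observe loop can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: `PythonExecutor` and `step` are hypothetical names, an in-process `exec` with one persistent namespace stands in for the Jupyter kernel (so variables survive across turns), shell lines such as `!pip install` are not supported, and nothing is sandboxed.

```python
import contextlib
import io
import re
import traceback
from typing import Optional

# Non-greedy match so several <execute> blocks in one response are found separately.
EXECUTE_RE = re.compile(r"<execute>(.*?)</execute>", re.DOTALL)


class PythonExecutor:
    """Runs code in one namespace that persists across turns, like a notebook kernel."""

    def __init__(self) -> None:
        self.namespace: dict = {}

    def run(self, code: str) -> str:
        stdout, stderr = io.StringIO(), io.StringIO()
        try:
            with contextlib.redirect_stdout(stdout), contextlib.redirect_stderr(stderr):
                exec(code, self.namespace)
        except Exception:
            # Return the traceback as part of the observation so the model can self-debug.
            stderr.write(traceback.format_exc())
        return (stdout.getvalue() + stderr.getvalue()).strip()


def step(llm_output: str, executor: PythonExecutor) -> Optional[str]:
    """Execute every <execute> block in a model response and return the observation.

    Returns None when the response contains no code, i.e. the model replied in plain text.
    """
    blocks = EXECUTE_RE.findall(llm_output)
    if not blocks:
        return None
    return "\n".join(executor.run(block) for block in blocks)


executor = PythonExecutor()
obs1 = step("<thought>Double 21.</thought>\n<execute>\nx = 21\nprint(x * 2)\n</execute>", executor)
obs2 = step("<execute>\nprint(x + y)\n</execute>", executor)  # y is undefined, so a NameError traceback
obs3 = step("<execute>\nprint(x + 1)\n</execute>", executor)  # x persisted from the first turn
print(obs1)  # 42
print(obs3)  # 22
```

A driver loop would append each observation to the conversation and query the model again until `step` returns `None`. Keeping one namespace mirrors the kernel's statefulness, which is what lets a later turn fix an earlier error without recomputing everything.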

The paper acknowledges limitations, including the potential for hallucination and the need for further alignment. Future work includes iteratively bootstrapping agent capabilities through extensive interaction and developing robust safety mechanisms, as directly executing code introduces significant security concerns, necessitating strong sandboxing. The authors have open-sourced the code, data, and models associated with CodeAct and CodeActAgent.
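As a first, deliberately modest step in that direction, each code action can at least run in a separate interpreter process with a time limit, so that a crash or infinite loop cannot take down the agent itself. The sketch below uses only the standard library, and the `run_isolated` helper is a hypothetical name. It is not a security sandbox: the child process can still read files and open network connections, and it also drops the cross-turn state a persistent kernel provides. Stronger isolation requires OS-level mechanisms such as containers or virtual machines.

```python
import subprocess
import sys


def run_isolated(code: str, timeout_s: float = 5.0) -> str:
    """Run one code action in a fresh Python process and return its combined output.

    Provides process isolation and a time limit only; it does NOT restrict
    file-system or network access.
    """
    try:
        proc = subprocess.run(
            # -I (isolated mode): ignore PYTHON* environment variables and the user
            # site-packages directory, and keep the script/current directory off sys.path.
            [sys.executable, "-I", "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child process before re-raising TimeoutExpired.
        return f"TimeoutError: execution exceeded {timeout_s} seconds"
    return (proc.stdout + proc.stderr).strip()


print(run_isolated("print(sum(range(10)))"))           # 45
print(run_isolated("while True: pass", timeout_s=0.5))  # TimeoutError: execution exceeded 0.5 seconds
```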