Overview of "DynaSaur: Large Language Agents Beyond Predefined Actions"

The paper "DynaSaur: Large Language Agents Beyond Predefined Actions" tackles a common limitation of existing LLM agent systems: their reliance on a fixed set of predefined actions. The authors propose a framework that lets LLM agents create and accumulate actions dynamically, making them more flexible and capable on complex, real-world tasks.

Motivation and Problem Addressed

The prevailing way to deploy LLM agents is to have them select actions from a static set, which limits adaptability, especially in dynamic environments where the number of potential actions is large. The authors identify two main problems: (1) a limited action set significantly restricts what the agent can plan and execute, and (2) in complex environments it is impractical for humans to enumerate and implement every action that might be needed. As an alternative, they let LLM agents define and execute actions as programs written in a general-purpose programming language. This shifts the agent from depending on a fixed action set to creating actions on demand.

Methodology

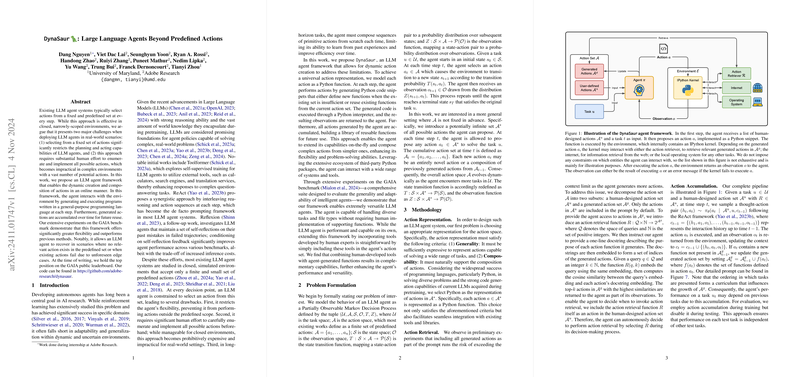

The DynaSaur framework models each action as a Python function, taking advantage of Python's expressiveness and its compatibility with a wide range of libraries and tools. At each decision step, the agent generates a Python code snippet that either defines new actions or reuses existing ones from its growing library of functions. Crucially, generated actions accumulate over time into an annotated function library that the agent can consult and compose in later steps. Because actions are ordinary Python, the agent can also call into the existing ecosystem of Python packages to interact with a wide variety of systems and tools.
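The define-or-reuse loop can be made concrete with a minimal Python sketch. This is not the authors' implementation: the `ActionLibrary` class, its `execute` and `describe` methods, and the convention that each snippet stores its answer in a variable named `result` are all hypothetical. Every generated snippet runs in one shared namespace, so functions defined in earlier steps remain callable later, and each new top-level function's docstring is recorded so the library can be described back to the model.

```python
import ast


class ActionLibrary:
    """Hypothetical store of agent-generated actions (plain Python functions)."""

    def __init__(self) -> None:
        # One shared namespace: functions defined in earlier steps remain
        # visible to code generated in later steps, enabling composition.
        self.namespace: dict = {}
        # Function name -> docstring, used to describe the library to the LLM.
        self.docs: dict[str, str] = {}

    def execute(self, code: str):
        """Run one generated snippet and register any new top-level functions.

        Assumes (hypothetically) that the snippet stores its answer in `result`.
        WARNING: plain exec() with no sandboxing; a real agent should isolate this.
        """
        tree = ast.parse(code)
        self.namespace.pop("result", None)  # avoid returning a stale result
        exec(compile(tree, "<action>", "exec"), self.namespace)
        for node in tree.body:
            if isinstance(node, ast.FunctionDef):
                self.docs[node.name] = ast.get_docstring(node) or ""
        return self.namespace.get("result")

    def describe(self) -> str:
        """One line per accumulated action, suitable for inclusion in a prompt."""
        return "\n".join(f"{name}: {doc}" for name, doc in sorted(self.docs.items()))


# Step 1: the "agent" defines a new action and uses it.
STEP_1 = '''
def word_count(text):
    """Count whitespace-separated words in text."""
    return len(text.split())

result = word_count("hello dynamic world")
'''

# Step 2: a later action reuses the earlier one instead of redefining it.
STEP_2 = '''
def average_words(texts):
    """Average word count across texts, reusing word_count."""
    return sum(word_count(t) for t in texts) / len(texts)

result = average_words(["a b", "c d e f"])
'''

lib = ActionLibrary()
first = lib.execute(STEP_1)   # 3
second = lib.execute(STEP_2)  # 3.0
print(lib.describe())
```

The shared namespace is the design choice that makes composition work: `average_words` can call `word_count` because both live in the same globals dictionary. A practical agent would also need error handling (returning a failing snippet's traceback as an observation) and real sandboxing, both omitted here.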

The framework is formulated as a Partially Observable Markov Decision Process (POMDP) in which the agent's action space can grow as it encounters new tasks. Representing actions as Python code satisfies two requirements the authors consider essential for robust LLM agents: generality (any computable action can be expressed) and composability (new actions can be built from existing ones).
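The growing action space can be written compactly as follows. The notation is illustrative and not taken from the paper: each action $a_t$ is a Python snippet, $\mathcal{L}_t$ is the set of functions accumulated before step $t$, $h_t = (o_1, a_1, \dots, o_t)$ is the history of observations and previous actions, $\pi$ is the LLM policy, and $\operatorname{defs}(a_t)$ is the set of functions that snippet $a_t$ defines.

$$
a_t \sim \pi\left(\cdot \mid h_t, \mathcal{L}_t\right), \qquad \mathcal{L}_{t+1} = \mathcal{L}_t \cup \operatorname{defs}(a_t)
$$

Partial observability enters through $h_t$: the agent conditions only on observations, never on the underlying environment state, while the set of reusable actions $\mathcal{L}_t$ grows monotonically as snippets define new functions.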

Experimental Setup and Results

The paper reports extensive experiments on the GAIA benchmark, a comprehensive suite designed to evaluate the generality and adaptability of intelligent agents. Beyond making LLM agents more versatile, the framework achieved strong results: at the time of evaluation, it held the top position on the GAIA public leaderboard.

The results show significant gains over baseline methods across diverse GAIA tasks, even when no predefined supporting functions are provided. Adding human-developed tools to the library of LLM-generated functions improves performance further, suggesting that DynaSaur can complement existing tool-based approaches rather than replace them.

Implications and Future Directions

DynaSaur represents a substantial step in the development of LLM agents, chiefly because it gives them much greater flexibility in selecting actions and planning. In practice, this could lead to more capable AI systems in domains that demand intricate interactions and multi-step decision-making, such as autonomous robotics, complex problem-solving in digital assistants, and adaptive learning systems.

Theoretically, the work adds to the growing body of research on autonomous agents built on LLMs. It raises open questions about how dynamically generated actions can be refined and shared across tasks and environments, and it points toward agents that adapt by extending their own action sets.

Future work could explore mechanisms for managing the growth of the action library, so that accumulation stays efficient and searching or composing actions remains computationally feasible as the library expands. Further research might also investigate curriculum strategies that order tasks to encourage a systematic, meaningful expansion of reusable actions.

In summary, the DynaSaur framework is a significant step toward more adaptable and robust LLM agent systems, with promising implications for deploying such agents across a wide range of real-world scenarios.