Discrete Distribution Networks (2401.00036v3)

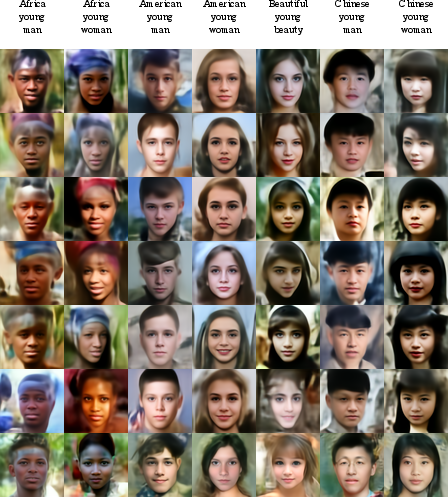

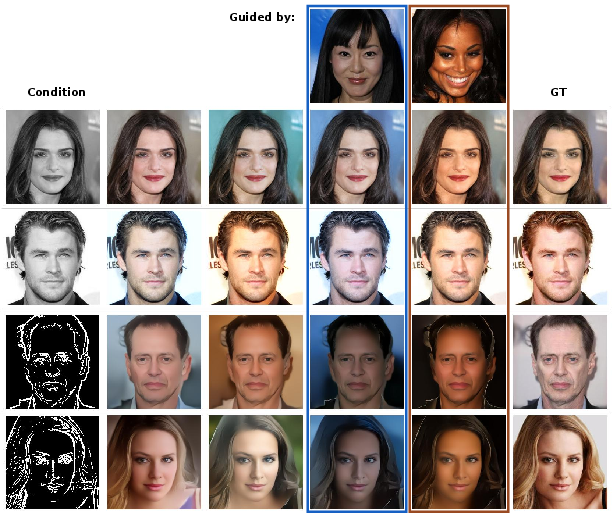

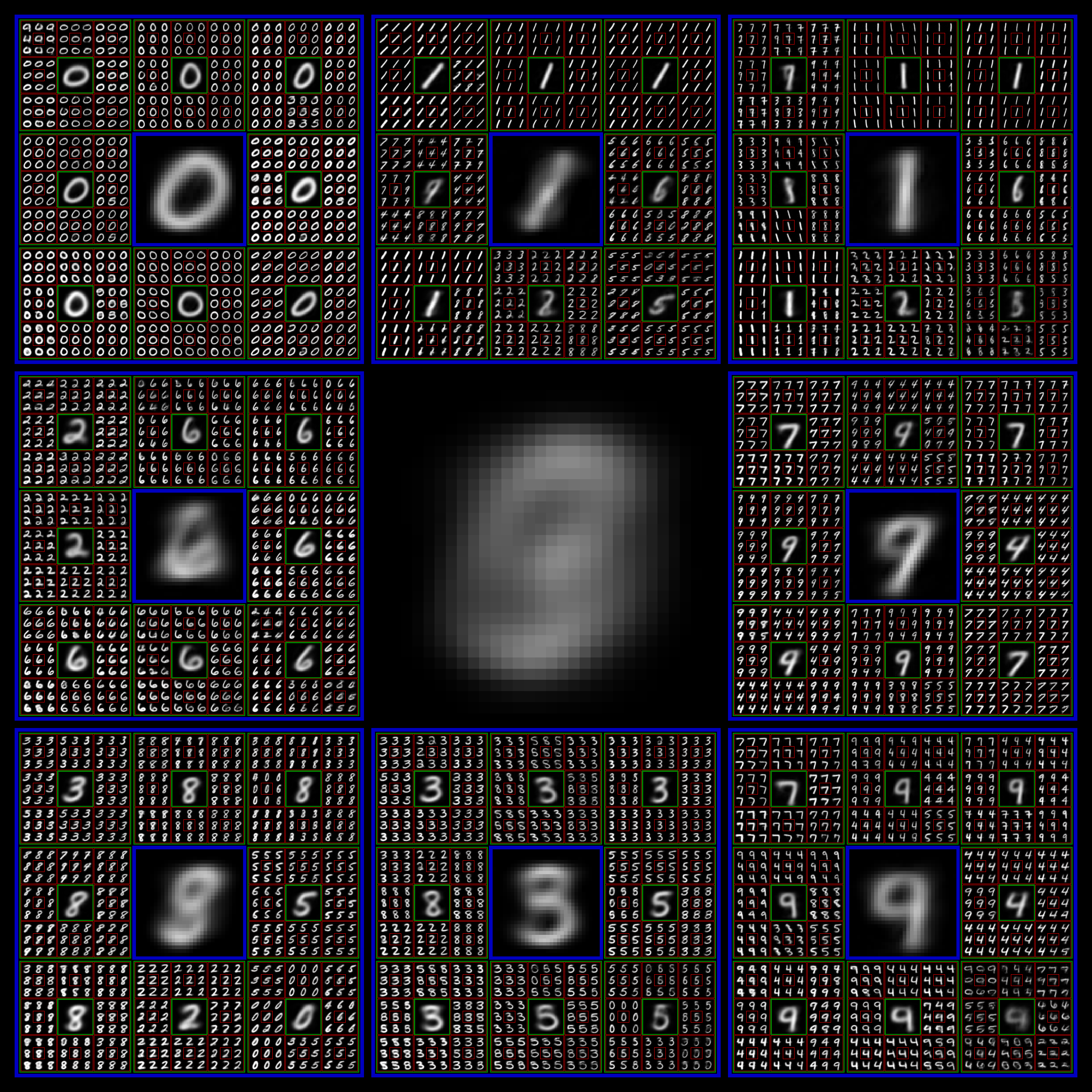

Abstract: We introduce Discrete Distribution Networks (DDN), a novel generative model that approximates a data distribution using hierarchical discrete distributions. We posit that, since the features within a network inherently capture distributional information, letting the network generate multiple samples simultaneously, rather than a single output, may offer an effective way to represent distributions. DDN therefore fits the target distribution, including continuous ones, by generating multiple discrete sample points. To capture finer details of the target data, DDN selects, from the coarse results generated in the first layer, the output that is closest to the Ground Truth (GT). This selected output is then fed back into the network as the condition for the second layer, which generates new outputs that are more similar to the GT. As the number of DDN layers increases, the representational space of the outputs expands exponentially and the generated samples become increasingly similar to the GT. This hierarchical output pattern of discrete distributions endows DDN with two unique properties: more general zero-shot conditional generation and a 1D latent representation. We demonstrate the efficacy of DDN and its intriguing properties through experiments on CIFAR-10 and FFHQ. The code is available at https://discrete-distribution-networks.github.io/
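The select-and-feed-back loop described in the abstract can be illustrated with a minimal toy sketch. This is not the authors' implementation: `toy_layer`, `encode`, and `decode` are hypothetical names, the data is a single scalar rather than an image, and each "layer" is a fixed rule standing in for a trained network. It only shows how picking the candidate closest to the GT at each layer, and conditioning the next layer on it, yields a sequence of indices and a space of K^L possible outputs.

```python
from typing import List, Tuple


def toy_layer(condition: float, depth: int, k: int) -> List[float]:
    """Hypothetical stand-in for one trained layer (assumption, not the paper's network).

    Returns k candidate outputs spread evenly around the condition (requires k >= 2).
    The spread halves with depth, so deeper layers make finer proposals.
    """
    spread = 1.0 / (2 ** depth)
    return [condition + spread * (2 * i / (k - 1) - 1) for i in range(k)]


def encode(target: float, num_layers: int, k: int) -> Tuple[List[int], float]:
    """Pick, at every layer, the candidate closest to the target (the GT)."""
    condition = 0.0  # initial condition fed to the first layer
    code: List[int] = []
    for depth in range(num_layers):
        candidates = toy_layer(condition, depth, k)
        # Squared distance plays the role of the "closest to GT" criterion.
        idx = min(range(k), key=lambda i: (candidates[i] - target) ** 2)
        code.append(idx)
        condition = candidates[idx]  # selected output conditions the next layer
    return code, condition


def decode(code: List[int], k: int) -> float:
    """Replay a sequence of indices to regenerate the corresponding output."""
    condition = 0.0
    for depth, idx in enumerate(code):
        condition = toy_layer(condition, depth, k)[idx]
    return condition


if __name__ == "__main__":
    K, L = 3, 6
    code, recon = encode(0.3, num_layers=L, k=K)
    print("index sequence:", code)
    print("reconstruction:", recon)
    print("number of distinct index sequences:", K ** L)
```

Under these assumptions, the list of chosen indices acts as a compact discrete code for the target, and replaying it with `decode` reproduces the same output. In a real DDN the candidates would come from a learned network and the distance would be computed on images.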
