- The paper presents a novel distributed, sampling-based optimization framework that improves the scalability of multi-agent model predictive control (MPC).

- It unifies stochastic search, variational optimization, and variational inference as sampling-based optimizers and combines them with consensus ADMM to decentralize multi-agent control.

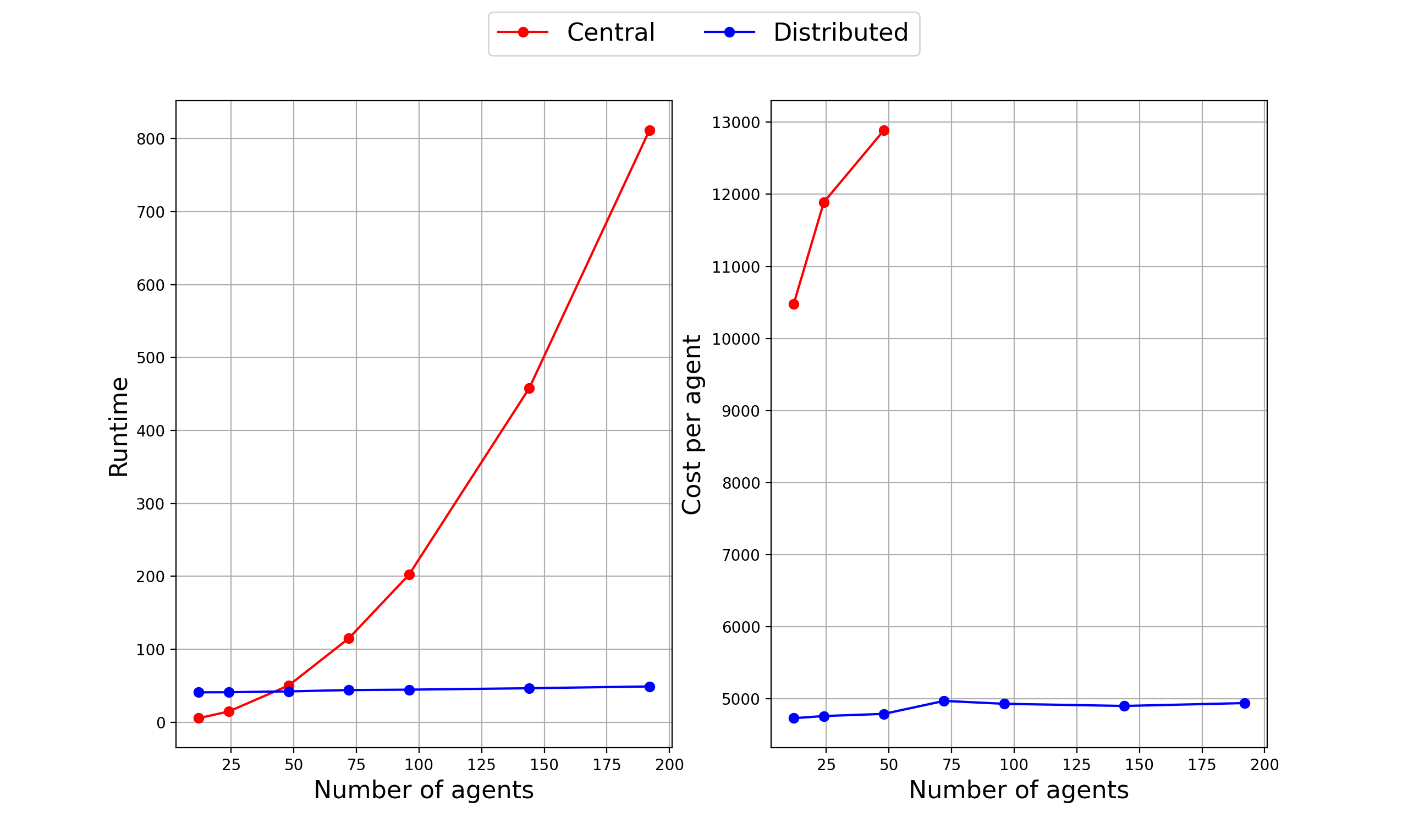

- Simulations using vehicular and quadcopter systems show improved runtime and cost metrics over traditional centralized approaches.

Sampling-Based Optimization for Multi-Agent Model Predictive Control

This paper presents a novel approach to sampling-based optimization for multi-agent model predictive control (MPC), with a focus on improving scalability and efficiency through a distributed framework.

Introduction

The research systematically reviews three primary approaches to sampling-based dynamic optimization: Variational Optimization (VO), Variational Inference (VI), and Stochastic Search (SS). These methods are examined in the context of multi-agent MPC, where computational complexity grows rapidly with the number of agents. Centralized control techniques become inefficient at scale because a single solver must optimize over the joint decision variables of all agents, which makes the study of distributed optimization both relevant and necessary.

Methodology

Sampling-Based Optimization Techniques

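The paper reviews and unifies sampling-based dynamic optimization methods from the perspectives of SS, VO, and VI. In each technique, candidate decision variables (for example, control sequences over the MPC horizon) are drawn from a parameterized sampling distribution, their costs are evaluated, and the distribution's parameters are updated iteratively so that probability mass shifts toward low-cost solutions.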
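The sample, evaluate, and update loop just described can be sketched in Python. This is a minimal illustration under stated assumptions, not the paper's implementation: the sampling distribution is assumed to be a diagonal Gaussian, candidates are weighted by an exponential (Gibbs-style) weight with a temperature `lam`, and the helper names `exp_weights` and `sampling_optimize` are hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence


def exp_weights(costs: Sequence[float], lam: float = 1.0) -> List[float]:
    """Hypothetical helper: normalized weights w_i proportional to exp(-J_i / lam)."""
    j_min = min(costs)  # shift by the minimum cost to avoid overflow/underflow
    raw = [math.exp(-(c - j_min) / lam) for c in costs]
    total = sum(raw)
    return [r / total for r in raw]


def sampling_optimize(
    cost: Callable[[List[float]], float],
    mean: List[float],
    std: List[float],
    n_samples: int = 200,
    n_iters: int = 30,
    lam: float = 1.0,
    seed: int = 0,
) -> List[float]:
    """Hypothetical sketch: iteratively refit a diagonal Gaussian toward low-cost samples."""
    rng = random.Random(seed)
    mean, std = list(mean), list(std)
    dim = len(mean)
    for _ in range(n_iters):
        # 1) Sample candidate decision vectors from the current distribution.
        samples = [[rng.gauss(mean[d], std[d]) for d in range(dim)] for _ in range(n_samples)]
        # 2) Evaluate each candidate's cost.
        costs = [cost(u) for u in samples]
        # 3) Weight candidates: lower cost gives a larger weight.
        w = exp_weights(costs, lam)
        # 4) Refit the distribution to the weighted samples (weighted mean and std).
        mean = [sum(wi * u[d] for wi, u in zip(w, samples)) for d in range(dim)]
        std = [
            max(math.sqrt(sum(wi * (u[d] - mean[d]) ** 2 for wi, u in zip(w, samples))), 1e-3)
            for d in range(dim)
        ]
    return mean


if __name__ == "__main__":
    # Toy quadratic cost with its minimum at (1, -2).
    result = sampling_optimize(lambda u: (u[0] - 1.0) ** 2 + (u[1] + 2.0) ** 2,
                               mean=[0.0, 0.0], std=[2.0, 2.0])
    print(result)  # expected to be near [1.0, -2.0]
```

In this sketch the weighting rule is the main design choice; the sample, evaluate, and refit structure is shared, so other weightings can be substituted for `exp_weights` without changing the loop.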

- Stochastic Search (SS): Applies a transform to the cost, estimates from samples the gradient of the expected transformed objective with respect to the sampling-distribution parameters, and updates those parameters by steepest ascent on the transformed objective.

- Variational Optimization (VO): Derives optimizers by minimizing the Kullback-Leibler (KL) divergence to a Gibbs distribution as the target, which connects the resulting updates to Hamilton-Jacobi-Bellman theory in stochastic optimal control (SOC).

- Variational Inference (VI): Recasts the control problem as Bayesian inference over an optimality likelihood, which can then be parameterized; Tsallis divergence is often used in place of KL divergence for additional flexibility.
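For reference, the three update rules described above can be written compactly. The notation is introduced here for illustration and is an assumption rather than the paper's own: U is a stacked control sequence, J(U) its cost, π_θ the sampling distribution, p a proposal distribution, λ > 0 a temperature, S a positive transform that decreases with cost, M the number of samples, and r the Tsallis index. The VO line assumes a Gaussian with fixed covariance Σ, for which the KL projection matches the mean of the Gibbs target; the VI line shows one common form of the Tsallis r-exponential, and the paper's exact parameterization may differ.

```latex
\begin{aligned}
\text{SS:}\quad & \nabla_\theta \log \mathbb{E}_{\pi_\theta}\!\left[S(J(U))\right]
  = \frac{\mathbb{E}_{\pi_\theta}\!\left[S(J(U))\,\nabla_\theta \log \pi_\theta(U)\right]}
         {\mathbb{E}_{\pi_\theta}\!\left[S(J(U))\right]} \\[4pt]
\text{VO:}\quad & q^*(U) \propto \exp\!\left(-J(U)/\lambda\right) p(U), \qquad
  \mu^* = \arg\min_{\mu} D_{\mathrm{KL}}\!\left(q^* \,\|\, \mathcal{N}(\mu, \Sigma)\right)
  = \mathbb{E}_{q^*}[U] \approx \sum_{i=1}^{M} w_i\, U^{(i)}, \\
& w_i = \frac{\exp\!\left(-J(U^{(i)})/\lambda\right)}{\sum_{k=1}^{M} \exp\!\left(-J(U^{(k)})/\lambda\right)},
  \qquad U^{(i)} \sim p \\[4pt]
\text{VI:}\quad & w_i \propto \exp_r\!\left(-J(U^{(i)})/\lambda\right), \qquad
  \exp_r(x) = \left[1 + (1 - r)\,x\right]_+^{1/(1 - r)}
\end{aligned}
```

As r → 1 the r-exponential reduces to the ordinary exponential and recovers the VO weights; for r < 1 and nonnegative costs, any sample with J ≥ λ/(1 − r) receives zero weight, which behaves like keeping only an elite subset of samples.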

Distributed MPC Framework

To address scalability, the paper proposes a distributed MPC framework based on the consensus-based Alternating Direction Method of Multipliers (ADMM). The framework decouples a large-scale optimization problem into smaller sub-problems at the neighborhood level that can be solved in parallel. Each agent performs local optimization while consistency constraints enforce agreement with its neighbors, so the scheme is fully decentralized.
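A minimal sketch of the consensus mechanism follows, assuming scalar variables and quadratic local costs so that each local sub-problem has a closed-form solution; the framework described here would instead solve each local sub-problem with a sampling-based optimizer. The sketch follows the standard generalized-consensus form of ADMM: agent i keeps a local copy of each variable in its neighborhood, and dual variables enforce that every copy equals a shared value z[j]. The function name `consensus_admm`, the `targets` cost model, and the penalty parameter `rho` are hypothetical.

```python
from typing import Dict, List, Tuple

Copy = Tuple[int, int]  # (agent i, variable j): agent i's local copy of variable j


def consensus_admm(
    neighbors: Dict[int, List[int]],
    targets: Dict[Copy, float],
    rho: float = 1.0,
    n_iters: int = 100,
) -> Dict[int, float]:
    """Hypothetical generalized-consensus ADMM with scalar variables.

    Agent i owns copies x[(i, j)] for every j in neighbors[i] (including i itself)
    and the local cost 0.5 * sum_j (x[(i, j)] - targets[(i, j)]) ** 2.
    The constraints x[(i, j)] == z[j] tie all copies of variable j together.
    """
    copies: List[Copy] = [(i, j) for i, nbrs in neighbors.items() for j in nbrs]
    holders: Dict[int, List[Copy]] = {}
    for c in copies:
        holders.setdefault(c[1], []).append(c)

    x = {c: 0.0 for c in copies}  # local copies
    y = {c: 0.0 for c in copies}  # dual variables (unscaled)
    z = {j: 0.0 for j in holders}  # shared consensus values

    for _ in range(n_iters):
        # Local step, parallel across agents: closed-form minimizer of
        # 0.5*(x - t)^2 + y*(x - z) + (rho/2)*(x - z)^2.
        for c in copies:
            x[c] = (targets[c] - y[c] + rho * z[c[1]]) / (1.0 + rho)
        # Consensus step: z[j] averages (x + y/rho) over all copies of variable j.
        # With symmetric neighborhoods, agent j can do this from its neighbors' messages.
        for j, cs in holders.items():
            z[j] = sum(x[c] + y[c] / rho for c in cs) / len(cs)
        # Dual step: accumulate the remaining disagreement between copies and z.
        for c in copies:
            y[c] += rho * (x[c] - z[c[1]])
    return z


if __name__ == "__main__":
    # Three agents on a line graph 0 - 1 - 2 (each neighborhood includes the agent itself).
    nbrs = {0: [0, 1], 1: [0, 1, 2], 2: [1, 2]}
    t = {(0, 0): 0.0, (0, 1): 2.0, (1, 0): 1.0, (1, 1): 1.0,
         (1, 2): 3.0, (2, 1): 0.0, (2, 2): 4.0}
    # Each z[j] approaches the mean of the targets held for variable j.
    print(consensus_admm(nbrs, t))
```

Because every local step touches only one agent's copies, the loop over `copies` in the local step could run in parallel across agents; only the consensus and dual steps require neighbor-to-neighbor communication.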

Simulation and Results

The proposed methods are validated in simulation on complex multi-agent tasks involving vehicular and quadcopter systems. In comparisons with centralized methods, the distributed approach scales to as many as 196 agents, with reduced computation times and improved task performance.

Conclusion

The paper contributes a robust, distributed approach to MPC that substantially improves scalability and efficiency for multi-agent systems. By incorporating ADMM into sampling-based optimization, the authors extend the practical applicability of MPC to complex, large-scale multi-agent problems. Future work may explore integration with real-world systems and further refinement of policy distribution techniques to improve decision quality across diverse scenarios.