- The paper reframes e-commerce product categorization as machine translation (MT) and reports higher accuracy using neural machine translation (NMT) architectures.

- It employs attentional Seq2Seq and Transformer models, which generate taxonomy paths token by token rather than selecting from a fixed set of categories, so they can also produce paths that are absent from the existing taxonomy.

- Experiments on the Rakuten Data Challenge (RDC) and Ichiba datasets show higher weighted F-scores than classification baselines. The models also generate novel taxonomy paths, suggesting a more flexible way to maintain e-commerce taxonomies.

Multi-Level E-Commerce Product Categorization Via Machine Translation

The paper presents a novel approach to e-commerce product categorization that reframes the problem from traditional classification to machine translation (MT). The approach improves predictive accuracy and makes product taxonomies structurally more adaptable. This essay explores the key concepts, methodology, and implications of this approach.

Introduction

E-commerce platforms must categorize millions of products into large taxonomy trees. Conventional methods treat this as ML classification: they map each product description to one of the pre-existing categories and leave the taxonomy structure unchanged. The paper instead uses MT to translate product descriptions directly into taxonomy paths. Because the output is generated rather than selected, it can be either an existing path or a novel one, and adding novel paths turns the taxonomy tree into a directed acyclic graph (DAG).
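A minimal sketch of how this reframing turns labeled data into a parallel corpus for an MT system. It assumes each record is a tab-separated product title followed by a `>`-delimited category path such as `3292>114>1231`; the record layout and the helper names `to_parallel` and `path_from_translation` are illustrative assumptions, not the paper's code. Each category ID becomes one target-side "word".

```python
def to_parallel(lines):
    """Split 'title<TAB>path' records into source and target sentences.

    Assumption: the path is '>'-delimited, e.g. '3292>114>1231'.
    """
    sources, targets = [], []
    for line in lines:
        title, path = line.rstrip("\n").rsplit("\t", 1)
        sources.append(title)
        # Each category ID becomes one token of the target "sentence".
        targets.append(" ".join(path.split(">")))
    return sources, targets


def path_from_translation(sentence):
    """Map a decoded target sentence back to a '>'-delimited taxonomy path."""
    return ">".join(sentence.split())


src, tgt = to_parallel(["Sony 55in LED TV\t3292>114>1231\n"])
print(src, tgt)
print(path_from_translation(tgt[0]))
```

Writing the source and target lists to two aligned files is the usual input format for NMT toolkits, so no classification-specific machinery is needed.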

The main advantage of MT systems is their inherent ability to handle language variability, their robustness to noise, and their adaptability to different linguistic contexts. Existing language models and MT components can also be reused to translate product descriptions into categories.

Traditional methods rely on ML classification algorithms, which fall into single-step and step-wise approaches. Single-step methods predict the category from one flat label set; step-wise methods descend the taxonomy one level at a time. The step-wise approach reduces data imbalance, but an error at an upper level propagates to every level below it, and a separate classifier is needed for each internal node. More recent work uses deep learning models such as RNNs and CNNs, which make different trade-offs in how they model text. All of these methods, however, can only output paths that already exist in the taxonomy.
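To make the step-wise trade-off concrete, the sketch below counts the classifiers a top-down scheme needs (one per internal node) and estimates full-path accuracy as the product of per-level accuracies. The helper names and example paths are hypothetical, and the accuracy estimate assumes that errors at each level are independent and never corrected further down.

```python
from math import prod


def count_stepwise_classifiers(paths):
    """Count internal nodes; a step-wise scheme trains one classifier per node.

    Nodes are identified by their full prefix, so the same ID at two
    different levels is treated as two distinct nodes.
    """
    children = {}
    for path in paths:
        labels = path.split(">")
        for depth in range(len(labels)):
            parent = tuple(labels[:depth])  # () stands for the root
            children.setdefault(parent, set()).add(labels[depth])
    return len(children)


def stepwise_path_accuracy(level_accuracies):
    """Full-path accuracy if every level must be right and errors never recover."""
    return prod(level_accuracies)


paths = ["1>2>3", "1>2>4", "1>5", "6>7"]
print(count_stepwise_classifiers(paths))  # step-wise: classifiers needed
print(len(set(paths)))                    # single-step: classes in one flat classifier
print(stepwise_path_accuracy([0.95, 0.9, 0.9]))
```

Even with 90% or better accuracy at every level, the compounded full-path accuracy drops noticeably, which is the error-propagation problem described above.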

Methodology

The core of the proposed method is a pair of neural machine translation (NMT) architectures: attentional Seq2Seq models and the Transformer. Both treat the product description as source-language input and generate the taxonomy path as target-language output.
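Generating a path as output means decoding it one category token at a time until an end-of-sequence token appears. The sketch below uses greedy decoding with a hypothetical scoring function standing in for a trained model; `score_fn`, the toy scores, and the category names are all illustrative, and real NMT systems typically use beam search instead. Nothing in the loop restricts the output to paths seen in training, which is what makes novel paths possible.

```python
EOS = "</s>"  # end-of-sequence marker (assumed convention)


def greedy_decode(score_fn, source, max_len=10):
    """Pick the highest-scoring next category until EOS or max_len."""
    prefix = []
    for _ in range(max_len):
        scores = score_fn(source, prefix)  # dict: next token -> score
        token = max(scores, key=scores.get)
        if token == EOS:
            break
        prefix.append(token)
    return ">".join(prefix)


# Hypothetical stand-in for a trained decoder: scores depend only on the prefix.
TOY_SCORES = {
    (): {"Electronics": 0.8, "Home": 0.2},
    ("Electronics",): {"Televisions": 0.7, EOS: 0.3},
    ("Electronics", "Televisions"): {EOS: 0.9, "Accessories": 0.1},
}


def toy_score_fn(source, prefix):
    return TOY_SCORES[tuple(prefix)]


print(greedy_decode(toy_score_fn, "Sony 55in LED TV"))  # Electronics>Televisions
```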

Neural Machine Translation Systems

Unlike phrase-based statistical MT systems, NMT systems use deep learning to represent words as continuous vectors. These dense representations capture complex relationships between words and reduce data sparsity.

- Seq2Seq models: An RNN encoder reads the source sentence sequentially and a decoder generates the target sentence, while an attention mechanism aligns each target step with the relevant source positions.

- Transformer models: Replace recurrence with self-attention, which allows parallel computation; they perform well on long sentences and learn rich representations.
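Both architectures rest on attention. The pure-Python sketch below implements single-head scaled dot-product attention (the Transformer's scoring function) and, as a deliberate simplification, omits learned projection matrices. Called with a decoder state as the query and encoder states as keys and values, it plays the alignment role described for attentional Seq2Seq models, which may use other scoring functions such as additive scores. Called with the same sequence for all three arguments, it is self-attention.

```python
import math


def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attention(query, keys, values):
    """Scaled dot-product attention for one query; returns (context, weights)."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    context = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return context, weights


def self_attention(xs):
    """Each position attends over all positions (Q = K = V = xs, no projections)."""
    return [attention(x, xs, xs)[0] for x in xs]


encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
decoder_state = [2.0, 0.0]
context, weights = attention(decoder_state, encoder_states, encoder_states)
print([round(w, 3) for w in weights])
print(self_attention(encoder_states))
```

The weights show which source positions the decoder state aligns with; in a real model the query, keys, and values would first pass through trained linear layers.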

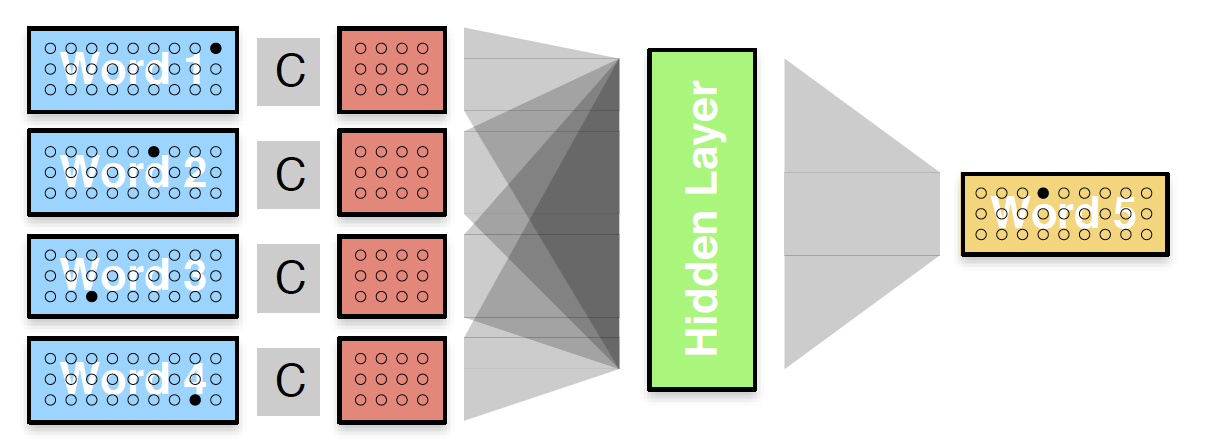

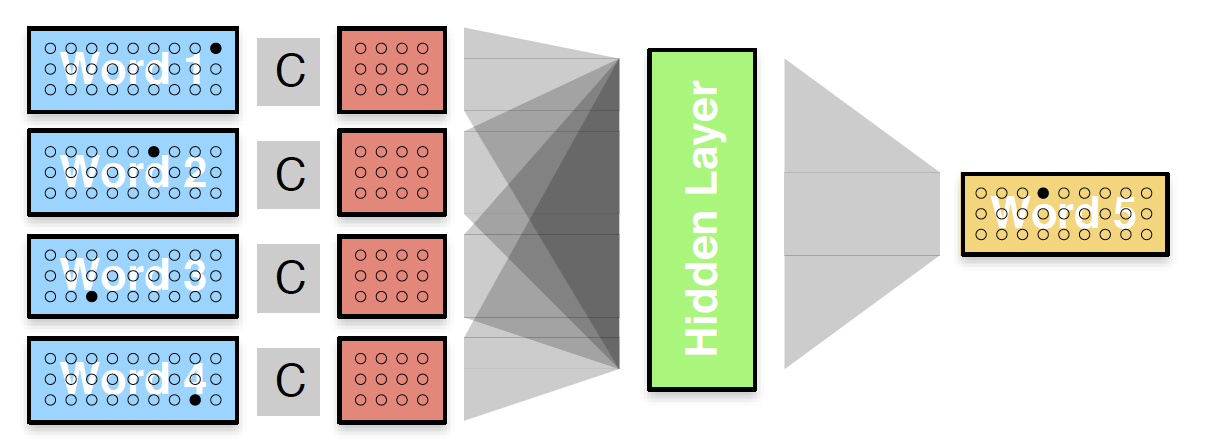

Figure 1: A language model encoded by a feed-forward neural network.

Figure 2: A language model encoded as a recurrent neural network.

Figure 3: The encoder-decoder model, also known as the sequence-to-sequence model (Seq2Seq).

Experiments and Results

Experiments on the Rakuten Data Challenge (RDC) and Ichiba datasets showed that the NMT approach outperformed state-of-the-art classification systems, achieving higher weighted F-scores on unseen test data. The Transformer model in particular outperformed the comparison systems, such as CUDeep.
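A weighted F-score averages per-category F1 scores, weighting each category by its number of gold-standard test items. The sketch below assumes that support-weighted definition (the one scikit-learn uses for `average="weighted"`); the paper's exact evaluation script may differ. Paths are compared as exact strings, so a partially correct or novel path counts as a miss.

```python
from collections import Counter


def weighted_f1(gold, pred):
    """Per-class F1 averaged with weights proportional to gold-class support.

    Predicted paths that never occur in `gold` (e.g. novel paths) add no
    class of their own, but still count as misses for that item's gold
    class, lowering its recall.
    """
    support = Counter(gold)
    predicted_counts = Counter(pred)
    true_pos = Counter(g for g, p in zip(gold, pred) if g == p)
    total = len(gold)
    score = 0.0
    for label, n in support.items():
        tp = true_pos[label]
        if tp == 0:
            continue  # F1 is 0 for this class
        precision = tp / predicted_counts[label]
        recall = tp / n
        f1 = 2 * precision * recall / (precision + recall)
        score += (n / total) * f1
    return score


gold = ["a", "a", "b", "c"]
pred = ["a", "b", "b", "c"]
print(weighted_f1(gold, pred))
```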

(Table 1 summarizes the results.)

Table 1: Results of our NMT Systems vs CUDeep Classification Systems.

Analysis

The novel paths generated by the NMT models point to a different way for e-commerce platforms to structure their taxonomies. Because the models do not rely on a pre-defined set of outputs, they can propose paths that reflect other semantic groupings and accommodate newly introduced products.

In one example, a songbook was categorized under the broader Music category rather than under Musical Instruments, highlighting an opportunity to refine the taxonomy.
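A sketch of the DAG transformation, assuming category nodes are identified by their label, so that a node reached under a second parent gains an additional parent edge. The category names are hypothetical and only loosely modeled on the songbook example; `novel_paths`, `build_dag`, and `has_cycle` are illustrative helpers, not the paper's implementation. Because a generated path could in principle reverse an existing parent-child order, the sketch also checks that the merged graph is still acyclic.

```python
def novel_paths(generated, known):
    """Return generated paths that are not already in the taxonomy."""
    known_set = set(known)
    return [p for p in generated if p not in known_set]


def build_dag(paths):
    """Map each category label to the set of its parent labels.

    Nodes are identified by label, so a label reached through two
    different parents ends up with two parent edges.
    """
    parents = {}
    for path in paths:
        parent = "ROOT"
        for label in path.split(">"):
            parents.setdefault(label, set()).add(parent)
            parent = label
    return parents


def has_cycle(parents):
    """Depth-first search over parent links; True if any cycle exists."""
    visiting, done = set(), set()

    def visit(node):
        if node in done:
            return False
        if node in visiting:
            return True
        visiting.add(node)
        if any(visit(p) for p in parents.get(node, ())):
            return True
        visiting.discard(node)
        done.add(node)
        return False

    return any(visit(node) for node in list(parents))


# Hypothetical taxonomy and model output.
known = ["Musical Instruments>Songbooks", "Music>CDs"]
generated = ["Music>Songbooks", "Music>CDs"]
dag = build_dag(known + novel_paths(generated, known))
print(sorted(dag["Songbooks"]))  # ['Music', 'Musical Instruments']
print(has_cycle(dag))  # False
```

Keeping both parent edges, rather than moving the node, lets shoppers reach the same category from either branch.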

Conclusion

The MT-based categorization system substantially improves both the accuracy and the flexibility of product categorization. Because it reuses existing MT capabilities, the approach reduces technical debt and fits the multilingual operations typical of large e-commerce companies.

The DAG transformation allows taxonomies to capture the more complex ways consumers conceptualize products, which can improve navigation and product discovery. Future work could evaluate the proposed paths with users and combine MT with classification models to optimize taxonomy structures. The shift from classification to MT is a promising direction for organizing e-commerce catalogs.