Speedrunning ImageNet Diffusion (2512.12386v1)

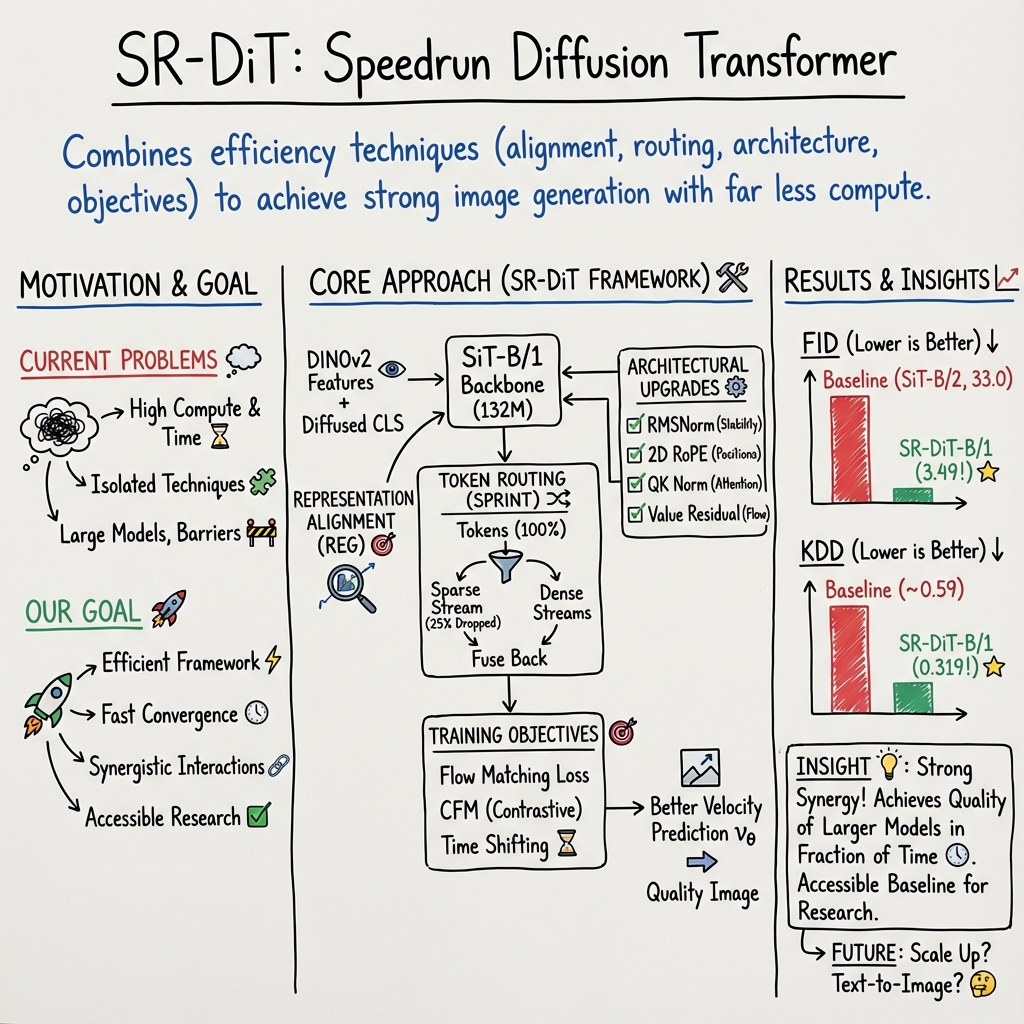

Abstract: Recent advances have significantly improved the training efficiency of diffusion transformers. However, these techniques have largely been studied in isolation, leaving unexplored the potential synergies from combining multiple approaches. We present SR-DiT (Speedrun Diffusion Transformer), a framework that systematically integrates token routing, architectural improvements, and training modifications on top of representation alignment. Our approach achieves FID 3.49 and KDD 0.319 on ImageNet-256 using only a 140M parameter model at 400K iterations without classifier-free guidance, comparable to results from 685M parameter models trained significantly longer. To our knowledge, this is a state-of-the-art result at this model size. Through extensive ablation studies, we identify which technique combinations are most effective and document both synergies and incompatibilities. We release our framework as a computationally accessible baseline for future research.

Explain it Like I'm 14

Overview

This paper is about making image-generating AI models train much faster while still making high‑quality pictures. The authors built a system called SR‑DiT (Speedrun Diffusion Transformer) that cleverly combines several ideas so a smaller model can learn quickly and perform like much bigger, more expensive models. They show this on ImageNet, a huge dataset of pictures used to test how well models understand and create images.

Goals and Questions

The paper asks:

- Can we speed up training for image diffusion models by combining many recent tricks instead of using them one by one?

- Which techniques work best together, and which ones don’t?

- How good can a small model get if we integrate these techniques carefully?

How They Did It (Methods, explained simply)

Think of training a model like teaching a team to draw from noisy scribbles into clear pictures. The authors made this “drawing class” faster and smarter by mixing several strategies:

- Representation alignment: Imagine the model learning alongside a top art student (a strong vision model called DINOv2). The model’s internal “features” are encouraged to look like the top student’s features. This gives the model clearer guidance about what matters in images.

- Better image codes (semantic VAE): Before drawing, pictures get turned into compact codes. A “semantic VAE” makes these codes more meaningful, like giving the team clean outlines instead of messy sketches, so learning is easier.

- Token routing (SPRINT/TREAD): When processing images in the transformer, not every piece needs the same amount of work. Token routing lets the model skip a lot of repeated work in the middle, only carrying forward the most important parts. It’s like only sending the most crucial puzzle pieces through a long series of steps to save time.

- Architecture upgrades: The model uses modern transformer parts that help it stay stable and learn better:

- RMSNorm: a simpler way to keep values in a good range.

- RoPE: a smart way to tell the model where things are in the image grid.

- QK Normalization: keeps attention calculations steady.

- Value Residual Learning: gives the model a shortcut to reuse useful information, improving how details flow through layers.
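To make two of these upgrades concrete, here is a minimal sketch of RMSNorm and QK Normalization in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the helper names `rms_norm` and `qk_norm_logit` are hypothetical, and using RMSNorm as the normalizer for queries and keys is an assumption (other normalizers are possible).

```python
import math

def rms_norm(x, gain=None, eps=1e-6):
    """Scale a vector by its root-mean-square; no mean-centering is applied."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    gain = gain or [1.0] * len(x)
    return [g * v / rms for g, v in zip(gain, x)]

def qk_norm_logit(q, k, scale=1.0):
    """Attention logit from normalized query and key vectors.

    Assumption: RMSNorm is the normalizer applied to q and k.
    """
    qn, kn = rms_norm(q), rms_norm(k)
    return scale * sum(a * b for a, b in zip(qn, kn)) / math.sqrt(len(q))

# Two queries pointing the same way but with very different magnitudes.
q_large = [300.0, -400.0, 0.0, 0.0]
q_small = [3.0, -4.0, 0.0, 0.0]
key = [3.0, -4.0, 0.0, 0.0]

logit_large = qk_norm_logit(q_large, key)
logit_small = qk_norm_logit(q_small, key)
print(logit_large, logit_small)
```

Both logits come out nearly identical even though one query is 100 times larger, which is the sense in which normalizing queries and keys keeps attention calculations steady.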

- Training tweaks:

- Flow matching: Instead of jumping straight from noise to a final image, the model learns the “velocity” of how to move step‑by‑step from noise toward the real image. Think of it like learning a smooth path instead of guessing the end directly.

- Contrastive Flow Matching (CFM): The model is nudged away from confusing other images’ targets, so it doesn’t mix them up—like being told, “Don’t mistake this dog’s fur pattern for that cat’s!”

- Time shifting: The training pays more attention to harder, noisier steps (which are often neglected), making the model more robust.

- Balanced label sampling: When judging the model, they make sure all classes are equally represented so the scores are fair.
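The flow matching and contrastive ideas above can be sketched in a few lines. This is a toy illustration, assuming a linear path between data and noise (with t=0 as data and t=1 as noise) and a contrastive term that subtracts a small multiple of the error against another sample's target. The function names are hypothetical, and the weight 0.05 is the CFM weight reported for the paper; the exact loss form used by the authors may differ.

```python
import random

def interpolate(x, eps, t):
    """Linear path from data (t=0) to noise (t=1): x_t = (1 - t) * x + t * eps."""
    return [(1 - t) * xi + t * ei for xi, ei in zip(x, eps)]

def velocity_target(x, eps):
    """Time derivative of the linear path above: d x_t / dt = eps - x."""
    return [ei - xi for xi, ei in zip(x, eps)]

def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)

def contrastive_fm_loss(v_pred, target, other_target, lam=0.05):
    """Pull the prediction toward its own target and away from another sample's."""
    return mse(v_pred, target) - lam * mse(v_pred, other_target)

rng = random.Random(0)
dim = 4
x = [rng.gauss(0, 1) for _ in range(dim)]          # stand-in for a clean latent
x_other = [rng.gauss(0, 1) for _ in range(dim)]    # a different sample in the batch
eps = [rng.gauss(0, 1) for _ in range(dim)]        # noise paired with x
eps_other = [rng.gauss(0, 1) for _ in range(dim)]  # noise paired with x_other

x_t = interpolate(x, eps, 0.7)                     # noisy input the model would see
target = velocity_target(x, eps)
other_target = velocity_target(x_other, eps_other)

v_pred = list(target)                              # a perfect prediction, for illustration
loss = contrastive_fm_loss(v_pred, target, other_target)
print(loss)
```

With a perfect prediction the first term is zero, so the loss is never positive here; in training, the second term only acts as a small push away from mismatched targets.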

- Evaluation metrics:

- FID and sFID: Traditional scores for image quality and realism (lower is better).

- KDD (Kernel DINO Distance): A newer metric that aligns better with what humans think looks good (lower is better). It compares features from DINOv2 to check how close generated images are to real ones.
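For intuition on how a kernel-based distance between feature sets works, the following sketch computes an unbiased squared maximum mean discrepancy (MMD) with a cubic polynomial kernel. It is only an analogy under stated assumptions: the kernel choice and the estimator are assumptions, not details confirmed by this summary, and the random toy vectors stand in for DINOv2 features of real and generated images.

```python
import random

def poly_kernel(a, b, degree=3):
    """Polynomial kernel (a.b / d + 1) ** degree; the kernel choice is an assumption."""
    d = len(a)
    return (sum(x * y for x, y in zip(a, b)) / d + 1.0) ** degree

def mmd2_unbiased(real, fake, kernel=poly_kernel):
    """Unbiased estimate of squared MMD between two sets of feature vectors."""
    m, n = len(real), len(fake)
    k_rr = sum(kernel(real[i], real[j]) for i in range(m) for j in range(m) if i != j)
    k_ff = sum(kernel(fake[i], fake[j]) for i in range(n) for j in range(n) if i != j)
    k_rf = sum(kernel(r, f) for r in real for f in fake)
    return k_rr / (m * (m - 1)) + k_ff / (n * (n - 1)) - 2.0 * k_rf / (m * n)

rng = random.Random(0)

def sample(mean, n=64, d=8):
    """Toy stand-in for encoder features: n vectors of dimension d."""
    return [[rng.gauss(mean, 1.0) for _ in range(d)] for _ in range(n)]

real = sample(0.0)
close = sample(0.0)   # same distribution as `real`
far = sample(2.0)     # shifted distribution

score_close = mmd2_unbiased(real, close)
score_far = mmd2_unbiased(real, far)
print(score_close, score_far)
```

The shifted set scores much higher than the matched set, which is the "lower is better" behavior the metric relies on.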

Main Findings and Why They Matter

- Big quality with a small model, fast:

- On ImageNet‑256, SR‑DiT (140 million parameters) reaches FID 3.49 and KDD 0.319 after 400,000 training steps, without extra guidance tricks.

- This rivals or beats results from much larger models (about 685 million parameters) that need far more training.

- On ImageNet‑512, SR‑DiT also performs strongly (FID 4.23, KDD 0.306), outperforming reported baselines at the same training length.

- Key ingredients that drove the gains:

- Representation alignment (REPA/REG), token routing (SPRINT), and using a semantic VAE (INVAE) provided the biggest boosts.

- The architecture upgrades (RMSNorm, RoPE, QK Norm, Value Residual) each help a bit, and together they add up.

- CFM and time shifting further improved speed and stability.

- Not everything helped:

- Some activation function changes (like SwiGLU or certain ReLU variants) didn’t give consistent improvements and sometimes slowed training.

- Other extra losses (like SARA’s structural loss) didn’t beat the baseline setup here.

- The paper carefully documents these “negative” results so others don’t waste time.

- Fair, human‑aligned evaluation:

- They highlight KDD as a more reliable, human‑aligned metric and use balanced sampling to make evaluations fair across classes.

What This Means (Implications and Impact)

- Lower barriers for researchers: Training powerful image models doesn’t have to demand huge computers and massive models. SR‑DiT shows you can get top‑tier results faster and cheaper, which is great for students, small labs, and open‑source communities.

- A strong baseline to build on: Because the authors release code, checkpoints, and experiment logs, others can reproduce the results and try new ideas on top of a solid, efficient foundation.

- Smarter combinations beat isolated tricks: The main lesson is that combining techniques that help in different ways—better guidance, skipping redundant work, stable architectures, and improved training objectives—can produce big wins that individual tricks alone don’t achieve.

- Better evaluation habits: Using KDD and balanced sampling encourages more trustworthy comparisons between models, which helps the whole field progress.

In short, the paper shows how to “speedrun” training: use the right mix of guidance, efficient processing, and stable components to teach a smaller model to draw great pictures quickly—and share the recipe so everyone can improve it.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored in the paper, phrased to enable follow-up research.

- Generalization beyond ImageNet: The framework is only evaluated on ImageNet-256 and ImageNet-512; robustness and performance on diverse datasets (e.g., CIFAR-10/100, LSUN, FFHQ, COCO, ADE20K) and domains (e.g., medical, satellite, sketches) are untested.

- Human evaluation and metric bias: KDD relies on DINOv2 features, while training uses DINO-based representation alignment; this coupling may bias evaluation in favor of alignment-based methods. The paper lacks human preference studies and metrics independent of DINOv2 to validate that gains reflect true perceptual quality.

- Apples-to-apples baselines: Comparisons mix different tokenizers (SD-VAE vs INVAE), patch sizes (SiT-B/2 vs SiT-B/1), and training settings. A controlled, matched-compute comparison against recent strong baselines under identical tokenizers, patch sizes, NFE, label sampling, and guidance settings is missing.

- Statistical robustness: Metrics are reported for single runs and single seeds without confidence intervals or variability across seeds; statistical significance and training variance are not quantified.

- Precision–recall trade-offs: Several improvements increase precision while decreasing recall (e.g., final recall drops from 0.563 to 0.546 on ImageNet-256); the paper does not analyze whether mode dropping or reduced diversity underlies these changes, nor propose remedies.

- Low-NFE regime and speed–quality curves: All metrics are reported at NFE=250; performance, stability, and efficiency at low-step sampling (e.g., NFE≤50) and under distillation or consistency training are not explored.

- Guidance behavior: CFG is not used for quantitative results and PDG is only used for visuals; how SR-DiT interacts with CFG/PDG across guidance scales, timesteps, and classes (including potential over-saturation or artifacts) is unknown.

- Token-drop ratio and schedules: SPRINT uses a fixed 75% drop; there is no sweep over drop ratios, routing schedules across layers/timesteps, or dynamic/adaptive routing strategies conditioned on content or noise level.

- Learned vs heuristic routing: Tokens are routed via a fixed scheme; the paper does not evaluate learned routing/gating (e.g., content-aware selection, top-k importance learned end-to-end) or routing conditioned on timestep t.

- Interpolant and noise schedule sensitivity: Flow-matching interpolants (e.g., cosine vs linear) and noise schedules are not ablated; sensitivity to schedule choice during training and sampling remains unquantified.

- Time shifting design: The shift factor s is tied to latent dimensionality using a fixed reference (4096), applied at both training and sampling; sensitivity analyses of s, dataset-specific tuning, and resolution-specific effects (e.g., 256 vs 512) are missing.

- RoPE choices: The CLS token is excluded from RoPE rotations without empirical justification; ablations testing inclusion/exclusion, different 2D RoPE parameterizations, and high-resolution stability are lacking.

- Hyperparameter sweeps for alignment losses: Fixed weights are used for REPA (0.5), CLS (0.03), and CFM (0.05), with limited exploration (only TCFM at λ=0.10). Systematic sweeps and joint tuning of these weights to optimize KDD vs FID vs recall are absent.

- VAE/tokenizer design space: INVAE (16× compression) is adopted, but the paper does not systematically compare tokenizers (INVAE vs LightningDiT tokenizer vs SD-VAE), compression levels (8× vs 16×), reconstruction vs semantic objectives, or end-to-end co-training with the diffusion transformer.

- Patch size and latent resolution: SiT-B/1 is selected due to INVAE’s 16× compression, but the impact of patch size (1 vs 2) and latent resolution on training dynamics, routing effectiveness, and final metrics is not mapped.

- Interaction mechanisms: The paper documents incompatibilities (e.g., ReLU² with Value Residual) but does not analyze why these arise or provide guidance on when to prefer specific activations/architectural components; mechanistic or theoretical explanations are missing.

- Multi-resolution training and scaling laws: There is no scaling-law analysis across parameter counts (B/L/XL), dataset sizes, or training steps; the transferability of gains to larger (SiT-L/XL) or smaller models is untested.

- Compute accessibility: Although total GPU-hours are modest, results require 8× H200s; throughput, memory footprint, and wall-clock performance on widely available GPUs (e.g., A100, 3090/4090) and single-GPU training feasibility are not reported.

- Balanced label sampling in evaluation: Balanced label sampling is used for metric calculation, which may artificially improve scores compared to random sampling used in other works; the magnitude of this effect and fairness in cross-paper comparisons are not analyzed.

- Robustness to label imbalance and class difficulty: Training uses standard sampling; effects of balanced label sampling during training, class-conditional performance (hard vs easy classes), and long-tail behavior are unexplored.

- Failure modes and robustness: Robustness to distribution shifts (e.g., corruptions, augmentations), adversarial perturbations, and out-of-distribution classes, as well as stability under extreme guidance or sampling noise, is not assessed.

- PDG quantification: PDG is introduced but not quantitatively evaluated; its impact on KDD/FID, artifacts, and tuning guidelines across guidance scales remain open.

- Optimizer sensitivity: The appendix truncates alternative optimizer experiments; comprehensive comparisons (AdamW vs Prodigy vs Muon, momentum/decay schedules, learning-rate warmup/cosine) and their interactions with alignment/routing are missing.

- Data preprocessing and augmentation: The paper does not detail augmentations, cropping/resizing strategies, or normalization; their impact on representation alignment, token routing efficacy, and final metrics is unknown.

- Interpretability of routing: It is unclear which tokens are retained/dropped and whether routing preserves semantically critical regions; analyses of token importance, spatial patterns, and content-dependent behavior are absent.

- CLS entanglement dynamics: REG diffuses the CLS embedding with a fixed loss weight; how CLS dynamics contribute to sample quality, class fidelity, and cross-class confusion, and whether alternative designs (e.g., multi-CLS, layer-wise CLS coupling) help, remain untested.

- Trade-offs across metrics: Time shifting improves FID but worsens KDD; the paper does not offer principled guidance for navigating such trade-offs or for selecting configurations that optimize for KDD (its stated primary metric).

- Distillation and consistency training: The paper reports no experiments on sampling-speedup methods (e.g., progressive distillation, consistency models) or on their compatibility with SR-DiT’s architecture and alignment objectives.

- Text-to-image extension: The framework is class-conditional only; extending to text conditioning (tokenizer choice, alignment targets, routing under text prompts, CFG behavior) is listed as future work but remains entirely untested.
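Several of the gaps above concern token routing with a fixed 75% drop. A toy sketch of that mechanic follows, to show what a sweep over drop ratios would vary. It rests on loud assumptions: tokens are selected at random, dropped tokens bypass the middle blocks unchanged, and routed tokens are written back in place. The function `route_tokens` is hypothetical and does not reproduce SPRINT's actual selection or fusion scheme.

```python
import random

def route_tokens(tokens, mid_blocks, drop_ratio=0.75, rng=None):
    """Send only a subset of tokens through the middle blocks, then merge back.

    Assumption: the kept subset is chosen uniformly at random.
    """
    rng = rng or random.Random()
    n = len(tokens)
    n_keep = max(1, round(n * (1 - drop_ratio)))
    keep_idx = sorted(rng.sample(range(n), n_keep))
    kept = [tokens[i] for i in keep_idx]
    for block in mid_blocks:
        kept = block(kept)           # middle blocks see only the kept tokens
    out = list(tokens)               # dropped tokens bypass the middle blocks
    for i, tok in zip(keep_idx, kept):
        out[i] = tok                 # reintroduce the processed tokens
    return out, keep_idx

def add_one(toks):
    """Toy stand-in for a transformer block."""
    return [t + 1.0 for t in toks]

tokens = [0.0] * 16
out, keep_idx = route_tokens(tokens, [add_one, add_one], rng=random.Random(0))
print(keep_idx, out)
```

With 16 tokens and a 75% drop, each middle block processes 4 tokens instead of 16, which is where the compute saving comes from.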

Glossary

- Balanced label sampling: Ensuring each class is equally represented in generated samples to avoid metric bias. "we use balanced label sampling during generation for metric calculation, ensuring each class is equally represented in the generated samples."

- CLS embedding: A special “class” token embedding from a vision encoder used as a global representation during training. "REG extends this setup by also diffusing the DINO CLS embedding alongside the latents."

- Classifier-free guidance (CFG): A sampling technique that steers generation by mixing conditional and unconditional predictions. "All results are reported without CFG or PDG."

- Contrastive Flow Matching (CFM): An auxiliary loss that contrasts model outputs with random targets to speed convergence. "Contrastive Flow Matching (CFM)~\cite{cfm} introduces an additional training objective that improves convergence speed."

- Denoising diffusion probabilistic models (DDPM): A class of generative models that iteratively remove noise to synthesize data. "Denoising diffusion probabilistic models~\cite{ddpm,sohl2015deep} and score-based generative models~\cite{song2019generative,song2020score} have become the foundation for state-of-the-art image generation."

- DINOv2: A self-supervised vision encoder whose features are used for evaluation and representation targets. "KDD computes distances between generated and real image distributions using DINOv2~\cite{dinov2} features in a kernel-based framework."

- Diffusion Transformer (DiT): A transformer-based architecture for diffusion models that rivals U-Nets. "The Diffusion Transformer (DiT)~\cite{dit} demonstrated that transformer architectures can match or exceed U-Net performance"

- Flow matching: A training formulation that aligns model trajectories with target flows, often simpler than standard diffusion objectives. "Flow matching~\cite{lipman2022flow,liu2022flow} provides an alternative formulation with simpler training objectives."

- Fréchet Inception Distance (FID): A metric comparing distributions of generated and real images via Inception features; lower is better. "Lower FID/sFID/KDD and higher IS/Precision/Recall are better."

- High-SNR: Timesteps with high signal-to-noise ratio that are easier denoising steps during training. "reducing over-emphasis on high-SNR (easy) denoising steps."

- Inception Score (IS): A metric evaluating image quality and diversity using an Inception classifier; higher is better. "Lower FID/sFID/KDD and higher IS/Precision/Recall are better."

- INVAE: A VAE with improved semantic properties (from REPA-E) providing 16× latent compression. "INVAE has 16× spatial compression compared to SD-VAE~\cite{ldm}'s 8× compression."

- Kernel DINO Distance (KDD): A kernel-based distance between distributions using DINOv2 features that correlates with human judgment; lower is better. "Kernel DINO Distance (KDD) correlates most strongly with human perceptual judgments."

- Number of Function Evaluations (NFE): The number of solver steps used during sampling; fewer typically means faster generation. "All methods evaluated at NFE=250 without CFG or PDG."

- Path-drop guidance (PDG): A CFG-style heuristic that weakens the unconditional path by skipping routed mid blocks during sampling. "We also use path-drop guidance (PDG), a CFG-style heuristic in which the unconditional prediction is intentionally weakened by skipping the routed mid blocks entirely (i.e., dropping the sparse path)."

- QK Normalization: Normalizing query and key vectors before attention to stabilize training. "QK Normalization~\cite{qknorm}: Normalizing query and key vectors before computing attention scores stabilizes training dynamics."

- REG (Representation Entanglement for Generation): An extension of REPA adding generative objectives to accelerate convergence. "REG~\cite{reg} extended this with generative objectives, claiming 63× convergence speedup over vanilla SiT."

- REPA (Representation Alignment for Generation): Aligns model hidden states to pretrained vision features to provide strong learning signals. "REPA~\cite{repa} introduced the idea of aligning diffusion model hidden states with pretrained vision encoder features, achieving significant training speedups."

- Representation alignment: Guiding model representations toward semantically meaningful encoder features to accelerate learning. "representation alignment provides strong learning signals, token routing reduces computational redundancy and improves information flow"

- RMSNorm: A normalization layer that scales by root-mean-square to improve stability and reduce computation. "RMSNorm~\cite{rmsnorm} is a normalization layer that scales activations by their root-mean-square (without mean-centering), which reduces computation and can improve stability."

- RoPE (Rotary Position Embeddings): A positional encoding that rotates query/key vectors to encode coordinates, extended here to 2D images. "Rotary position embeddings (RoPE)~\cite{rope} encode positional information by rotating query and key vectors in multi-head attention."

- SD-VAE: The standard VAE used in latent diffusion (Stable Diffusion), optimized for reconstruction rather than generation. "Standard SD-VAE~\cite{ldm} encodes images into latent spaces optimized for reconstruction, but not necessarily for generation."

- Semantic latent spaces: Latent representations trained with semantic objectives to make diffusion learning easier. "LightningDiT~\cite{lightningdit} introduced a VAE trained with semantic objectives, producing latent spaces that are more ``diffusable''---easier for diffusion models to learn."

- SiT-B/1: A specific SiT architecture variant with patch size 1 used as the efficient baseline in this work. "starting from the SiT-B/1 architecture (130M parameters)"

- sFID (spatial FID): A variant of FID that accounts for spatial aspects of image distributions; lower is better. "We report both KDD and traditional metrics (FID, sFID, IS, Precision, Recall) for comprehensive evaluation."

- SPRINT: A routing and fusion modification enabling higher token drop rates for efficiency. "SPRINT~\cite{sprint} introduces architectural modifications that allow increasing the token drop rate to 75\%, achieving greater efficiency gains."

- SwiGLU: A gated activation variant used in modern transformers, sometimes improving optimization. "LightningDiT incorporates SwiGLU activations~\cite{swiglu}, RMSNorm~\cite{rmsnorm}, and RoPE~\cite{rope}."

- Time Shifting: Reweighting the timestep distribution to emphasize noisier inputs during training and sampling. "We use time shifting~\cite{logitnormal} to reweight which timesteps the model sees, reducing over-emphasis on high-SNR (easy) denoising steps."

- Token routing: Dropping and later reintroducing a subset of tokens across transformer blocks to cut compute and improve convergence. "TREAD~\cite{tread} demonstrated that routing 50\% of tokens to skip intermediate transformer layers both reduces computation and improves convergence"

- U-DiT: A U-shaped diffusion transformer architecture used as a baseline at higher resolution. "We compare against the DiT-XL/2 and U-DiT-B baselines reported in U-DiTs~\cite{tian2024udits}"

- Value Residual Learning: Adding a residual across the attention value stream to improve information flow and expressiveness. "Value Residual Learning~\cite{valueresidual} modifies attention by injecting a residual connection across the value stream."

- VAE (Variational Autoencoder): A generative encoder-decoder model that maps images to a latent space used by diffusion. "Standard SD-VAE~\cite{ldm} encodes images into latent spaces optimized for reconstruction, but not necessarily for generation."

- Velocity prediction: Predicting the velocity of the interpolation path as the main training target in flow matching. "We follow SiT~\cite{sit} and train the model with a flow-matching objective using velocity prediction."

- Velocity target: The analytic target for velocity in the interpolant-based noising process. "The corresponding velocity target is"
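As a small worked example for the Time Shifting entry above, the sketch below remaps timesteps with a shift factor `s`. The specific map `s * t / (1 + (s - 1) * t)` is an assumption borrowed from common resolution-dependent shifting practice, with t=1 taken as pure noise. How the paper derives `s` from latent dimensionality is not reproduced here, so `s` is simply an input and the value 3.0 is arbitrary.

```python
def shift_time(t, s):
    """Remap a timestep in [0, 1]; s > 1 pushes mass toward t = 1 (noisier inputs).

    Assumption: this functional form is illustrative, not confirmed by the paper summary.
    """
    return s * t / (1.0 + (s - 1.0) * t)

grid = [i / 4 for i in range(5)]                 # uniform timesteps 0, 0.25, ..., 1
shifted = [shift_time(t, 3.0) for t in grid]     # same grid after shifting with s = 3
unchanged = [shift_time(t, 1.0) for t in grid]   # s = 1 leaves the grid as it was
print(shifted)
```

The endpoints stay fixed while interior timesteps move toward the noisy end, so a uniform sampler spends more of its draws on harder steps.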

Practical Applications

Immediate Applications

Below are actionable use cases that can be deployed now using the released code, checkpoints, and methods in the paper. Each includes likely sectors, potential tools/workflows, and feasibility notes.

- Compute-efficient training of class-conditional image generators for proprietary datasets

- Sectors: media/advertising, e-commerce, gaming, design, robotics (perception), software (R&D)

- Tools/workflows: fork SpeedrunDiT; swap ImageNet with your labeled dataset; keep INVAE tokenizer and REG alignment; enable SPRINT token routing (e.g., 75% drop); train to ~400K steps; evaluate with KDD + FID; use balanced label sampling for fair metrics

- Assumptions/dependencies: dataset has class labels; access to GPUs (e.g., 8× H100/H200/A100-class or scaled-down training on fewer consumer GPUs with longer wall time); rely on DINOv2 features for representation alignment (or swap to a domain-specific encoder if available); performance and speed are reported at NFE=250 without CFG—latency may still be non-trivial

- Rapid prototyping of small diffusion models for on-prem or private deployments

- Sectors: enterprise software, regulated industries (finance, government), telecom, security

- Tools/workflows: deploy 140M SR-DiT-B/1 checkpoints on internal inference servers; use token routing at inference to reduce compute; integrate class-conditional generation into asset pipelines

- Assumptions/dependencies: class-conditional use (text-to-image not included yet); NFE=250 by default—consider sampler tuning or later distillation to reduce steps; GPU recommended for real-time or batch throughput

- Low-cost synthetic data generation for downstream vision tasks

- Sectors: robotics, autonomous systems, retail (product classification), manufacturing (defect detection), agriculture (crop recognition)

- Tools/workflows: fine-tune SR-DiT on domain images; generate balanced class-conditional synthetic sets; train classifiers/detectors with mixed real + synthetic; validate via KDD to target semantic fidelity

- Assumptions/dependencies: label quality and domain shift must be managed; best results when a suitable vision encoder for alignment (e.g., DINOv2) is relevant to the domain; human validation advised to avoid synthetic bias

- Faster academic experimentation and teaching labs for diffusion

- Sectors: academia, R&D labs, education/bootcamps

- Tools/workflows: use the consolidated SR-DiT repo and W&B logs for reproducible labs; run ablations (RMSNorm, RoPE, QKNorm, Value Residual, CFM, time shifting) to demonstrate synergistic effects; adopt KDD for student evaluations

- Assumptions/dependencies: access to at least a single GPU node; familiarity with PyTorch and latent diffusion tokenizers; ImageNet-like labeled datasets for simple replication

- Fairer and more human-correlated evaluation via KDD

- Sectors: research groups, MLOps, benchmarking platforms, open-source communities

- Tools/workflows: add KDD computation (DINOv2 feature-based) to CI benchmarking; complement FID/sFID/IS with KDD for model selection; use balanced label sampling to avoid class imbalance bias in metrics

- Assumptions/dependencies: DINOv2 features are available and permitted under licensing; teams agree to incorporate new metrics alongside legacy ones for comparability

- Compute-aware hyperparameter sweeps and A/B testing

- Sectors: MLOps, applied research teams, startups

- Tools/workflows: leverage token routing (SPRINT) and small backbone to run broader sweeps on batch size, drop ratios, and loss weights (λ for REPA/REG/CFM) within fixed GPU budgets; standardize reporting at 50K-sample KDD/FID

- Assumptions/dependencies: careful logging and reproducibility; some interactions (e.g., ReLU² with Value Residual) are known incompatibilities—follow documented ablations

- Internal creative tooling for fast class-conditional asset generation

- Sectors: design teams, marketing, game studios, content platforms

- Tools/workflows: set up SR-DiT inference for class-labeled style libraries (e.g., categories, assets); optionally apply Path-Drop Guidance (PDG) for previews (qualitative only)

- Assumptions/dependencies: works best when assets map cleanly to classes; PDG is for visualization—not used for metric reporting and may change aesthetics

- Carbon/energy footprint reductions for model training

- Sectors: sustainability offices, policy-compliance teams, responsible AI groups

- Tools/workflows: adopt SR-DiT to reach target quality with fewer GPU-hours; report metrics with compute budgets (GPU-hours, NFE) in compliance documentation

- Assumptions/dependencies: actual energy savings depend on datacenter PUE, GPU generation, and sampler configuration; ensure like-for-like comparisons

Long-Term Applications

These applications are promising but require additional research, scaling, or engineering beyond the current paper (e.g., extension to new modalities, further inference optimization, or safety validation).

- Text-to-image “speedrun” training with representation alignment and token routing

- Sectors: creative tools, advertising, e-commerce, social platforms

- Tools/products: small compute T2I trainer that mixes REG-style alignment with text encoders (e.g., CLIP/DeCLIP) and SPRINT; guidance methods (CFG/PDG variants) tuned for text prompts

- Assumptions/dependencies: adapting REG/REPA alignment to text features; prompt adherence metrics and safety filters; new ablations to confirm synergy with language conditioning

- Video diffusion and multi-modal generation with routed transformers

- Sectors: media production, VFX, education, simulation

- Tools/products: token routing tailored to spatiotemporal tokens; fused semantic tokenizers for video latents; KDD-style metrics extended to video encoders

- Assumptions/dependencies: temporal coherence objectives; efficient video tokenizers; significantly higher memory/compute management

- On-device or edge deployment via distillation and step-reduction

- Sectors: mobile apps, AR/VR, embedded robotics

- Tools/products: combine SR-DiT with consistency distillation or rectified-flow samplers to reduce NFE; prune/rank layers under token routing; 8-bit or 4-bit quantized inference

- Assumptions/dependencies: further research to maintain quality at low NFE (<20–50); hardware-aware kernels; acceptable latency and battery constraints

- Domain-specific medical or scientific image synthesis with aligned encoders

- Sectors: healthcare, pharma, scientific imaging (microscopy, remote sensing)

- Tools/products: swap DINOv2 with domain encoders (e.g., BiomedCLIP, RadImageNet); use SR-DiT for class-conditional or pathology-conditional augmentation; strict evaluation with domain experts

- Assumptions/dependencies: data governance and privacy; robust bias/variance assessment; regulatory compliance (e.g., FDA/EMA) before any clinical use; high-stakes validation beyond ImageNet-like benchmarks

- Federated or private speedrun training for sensitive data

- Sectors: healthcare, finance, public sector, defense

- Tools/products: SR-DiT variants integrated with federated averaging or secure aggregation; low-parameter, efficient backbones accelerate on-prem training across sites

- Assumptions/dependencies: communication-efficient training under token routing; privacy accounting; secure feature alignment (local encoders vs. shared encoders)

- Automated architecture selection using learned synergies

- Sectors: AutoML, platform ML teams

- Tools/products: AutoML controllers that pick among RMSNorm/RoPE/QKNorm/Value Residual/SPRINT/CFM/time shifting based on dataset/regime; KDD-driven early stopping

- Assumptions/dependencies: meta-learning of interactions; robust generalization beyond ImageNet; standardized pipelines for different latent tokenizers

- Synthetic dataset-as-a-service for startups and SMEs

- Sectors: B2B AI services, marketplaces, vertical AI providers

- Tools/products: hosted SR-DiT “spin-up in a day” for class-labeled datasets; deliver curated synthetic image packs with KDD/FID reports and compute disclosures

- Assumptions/dependencies: clear IP/licensing for training data and outputs; sufficient guardrails for content moderation; service-level agreements on quality

- Policy and standards for energy-efficient generative AI

- Sectors: government, NGOs, industry consortia

- Tools/products: guidelines that incorporate metrics like KDD alongside compute disclosures (GPU-hours, NFE); procurement checklists recommending token routing and alignment to reduce compute

- Assumptions/dependencies: consensus on evaluation protocols; mechanisms to audit claims; evolving regulatory landscapes

- Cross-modal foundation pipelines (images → 3D, audio-visual, multimodal agents)

- Sectors: XR, gaming, simulation, assistive tech

- Tools/products: extend representation alignment to cross-modal encoders; routed architectures for multi-stream tokens

- Assumptions/dependencies: suitable cross-modal tokenizers; complex training objectives; synchronization across modalities

Notes on feasibility across applications:

- The reported gains depend on integrating multiple techniques coherently (REG/REPA alignment, semantic tokenizers like INVAE, SPRINT token routing, RMSNorm/RoPE/QKNorm/Value Residual, CFM, and time shifting). Dropping components may reduce the speed/quality benefits.

- Performance metrics were collected without classifier-free guidance and at NFE=250; real-world latency or cost targets may require additional sampler/distillation work.

- Alignment effectiveness depends on the relevance of the frozen encoder (e.g., DINOv2) to the target domain; for niche domains, a suitable encoder may need to be trained or selected.

- Balanced label sampling affects metric comparability for class-conditional setups; ensure consistent evaluation protocols when benchmarking.
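To keep evaluation protocols consistent, balanced label sampling can be made explicit in code. The sketch below is one straightforward way to do it, assuming 50,000 generated samples over ImageNet's 1,000 classes; the helper `balanced_labels` is hypothetical and is not taken from the released code.

```python
import random
from collections import Counter

def balanced_labels(num_samples, num_classes, rng=None):
    """Return class labels for generation with every class equally represented.

    If num_samples is not divisible by num_classes, the leftover labels go to
    distinct randomly chosen classes, so counts differ by at most one.
    """
    rng = rng or random.Random()
    per_class, remainder = divmod(num_samples, num_classes)
    labels = [c for c in range(num_classes) for _ in range(per_class)]
    labels += rng.sample(range(num_classes), remainder)
    rng.shuffle(labels)  # avoid generating one class at a time
    return labels

labels = balanced_labels(50_000, 1000, random.Random(0))
counts = Counter(labels)
print(len(labels), min(counts.values()), max(counts.values()))
```

Drawing labels uniformly at random instead would leave some classes over- or under-represented, which is why scores from the two protocols are not directly comparable.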
