- The paper presents a modular architecture dividing agent functions into AX, UX, and DX to enhance large-scale codebase management.

- It demonstrates that hierarchical context management with adaptive context compression can boost Resolve@1 performance by up to 6.6 percentage points.

- The framework employs a Meta-agent for automated tool optimization and continuous system improvement, while long-term note-taking reduces token usage by over 10%.

Scalable Agent Scaffolding for Real-World Codebases with the Confucius Code Agent

Introduction

The Confucius Code Agent (CCA) introduces a modular, extensible framework for coding agents targeting industrial-scale software engineering workflows. Traditional research agents, while transparent, often lack the scalability and robustness needed for practical deployments. Proprietary systems, in contrast, show competitive performance but are closed, less controllable, and limit experimentation. CCA addresses these limitations through its foundation in the Confucius SDK, which is structured explicitly around three orthogonal perspectives: Agent Experience (AX), User Experience (UX), and Developer Experience (DX). Together these provide an agentic infrastructure capable of handling massive repositories, sustained long-horizon sessions, and sophisticated toolchains.

Confucius SDK Architecture and Design Principles

The Confucius SDK unifies the orchestration of reasoning, tool use, and long-term memory within a single system. It handles AX, UX, and DX explicitly and separately, avoiding the common conflation of agent-internal and user-facing representations that impedes interpretability and performance.

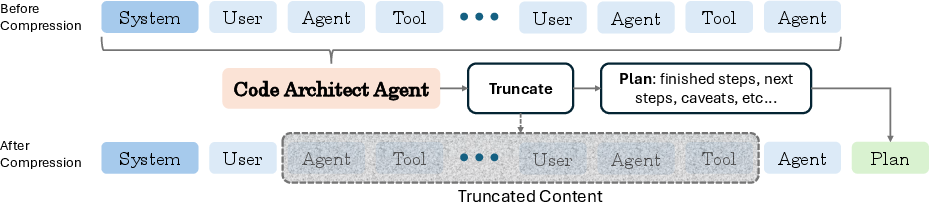

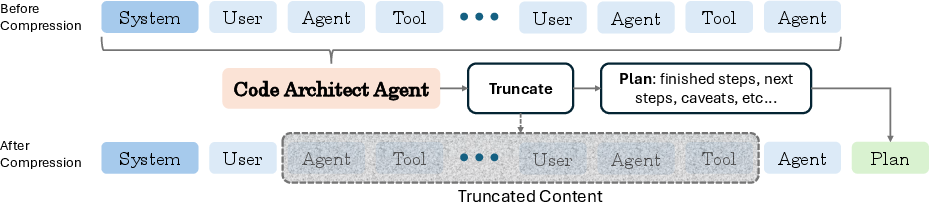

Figure 1: Confucius SDK architecture featuring orchestrator, hierarchical memory, long-term notes, and a modular extension system.

Agent Experience (AX)

AX structures the agent’s cognitive workspace. It intentionally eliminates noisy, verbose traces in favor of hierarchical working memory and adaptive context summarization. Rather than passing unfiltered interaction logs to the LLM, only distilled, task-relevant state is included, maintaining a stable and tractable reasoning context.

User and Developer Experience (UX/DX)

UX maximizes transparency for human developers through fine-grained, instrumented traces and artifact previews. DX allows for modularity and observability, supporting ablations, debugging, and rapid iteration of agent behavior. By designing these axes separately, the SDK enables rich developer tools and meaningful human-agent interaction while isolating each perspective’s requirements.

Hierarchical Context Management and Long-Term Memory

In long-horizon developer workflows, a critical bottleneck for LLM-based agents is bounded context windows. Simple flat history logs or fixed truncation strategies result in loss of salient reasoning steps, while naive retrieval can miss relevant context. CCA’s hierarchical working memory, with adaptive compression, sustains multi-step reasoning without exceeding context limits.

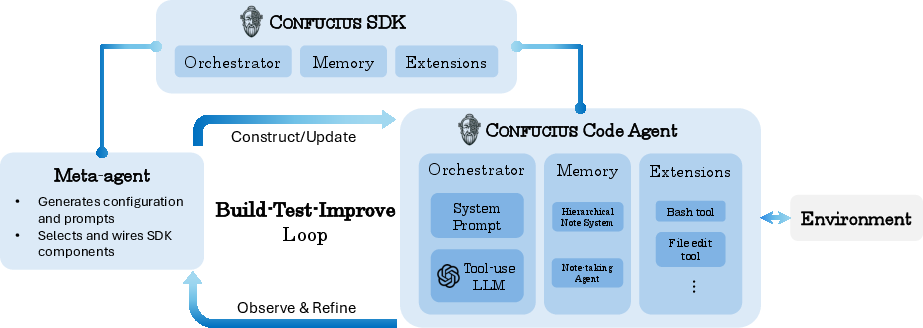

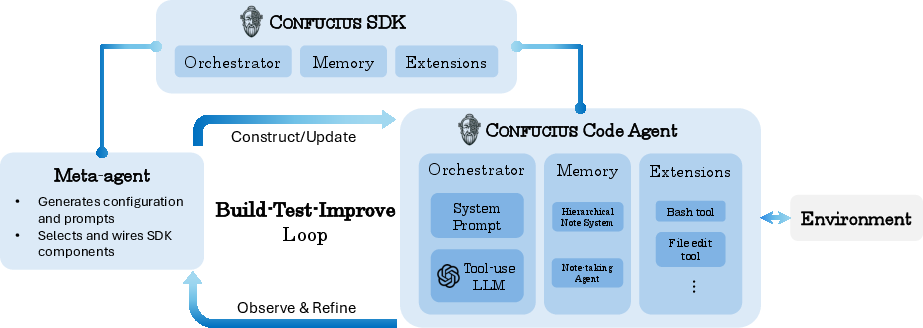

Figure 2: Context compression consolidates earlier turns into structured summaries of goals, decisions, errors, and TODOs, retaining deep reasoning over long horizons while keeping a working window of recent context.

When context-length thresholds are reached, the Architect agent summarizes earlier interactions into a structured plan that encodes explicit goals, critical decisions, and pending actions. This structured memory complements the window of recent messages, preserving continuity while retaining the key earlier reasoning.
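The compression step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the SDK's implementation: `StructuredSummary`, `estimate_tokens`, `summarize`, and `compress` are hypothetical names, the token estimate is a crude character count, and the prefix-based `summarize` stands in for the LLM-backed Architect agent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class StructuredSummary:
    """Hypothetical container mirroring the fields named in Figure 2."""
    goals: list[str] = field(default_factory=list)
    decisions: list[str] = field(default_factory=list)
    errors: list[str] = field(default_factory=list)
    todos: list[str] = field(default_factory=list)


def estimate_tokens(text: str) -> int:
    # Assumption: roughly four characters per token; a real system
    # would use the model's tokenizer instead.
    return max(1, len(text) // 4)


def summarize(turns: list[str], summary: StructuredSummary) -> StructuredSummary:
    # Stand-in for an LLM summarizer: routes tagged turns by prefix.
    # Untagged turns are simply dropped in this toy version.
    buckets = {
        "GOAL:": summary.goals,
        "DECISION:": summary.decisions,
        "ERROR:": summary.errors,
        "TODO:": summary.todos,
    }
    for turn in turns:
        for prefix, bucket in buckets.items():
            if turn.startswith(prefix):
                bucket.append(turn[len(prefix):].strip())
    return summary


def compress(history: list[str], summary: StructuredSummary,
             max_tokens: int, keep_recent: int):
    """Fold older turns into the summary once the history exceeds max_tokens."""
    total = sum(estimate_tokens(turn) for turn in history)
    if total <= max_tokens or len(history) <= keep_recent:
        return history, summary  # under threshold: nothing to compress
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return recent, summarize(older, summary)


history = [
    "GOAL: fix flaky test",
    "DECISION: patch retry logic",
    "ERROR: import failed",
    "TODO: rerun CI",
    "ran pytest",
    "edited utils.py",
]
recent, summary = compress(history, StructuredSummary(), max_tokens=5, keep_recent=2)
print(recent)
print(summary)
```

Keeping the last `keep_recent` turns verbatim while folding everything older into the summary is one simple way to combine a structured memory with a recent-message window; the actual thresholds and summary schema are not specified in the text.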

A separate note-taking agent, as part of the agent’s persistent memory, distills cross-session knowledge into hierarchical Markdown documents, tracking reusable patterns and failure modes. These notes serve as test-time memory for task resumption and self-improvement, augmenting the agent beyond transient context.
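As a rough sketch of what hierarchical Markdown notes might look like in code, the example below groups notes by topic and kind and renders them as nested headings. The `NoteStore` class, its methods, and the heading layout are assumptions made for illustration; the text does not describe the note schema, and a real store would persist to disk across sessions.

```python
from collections import defaultdict


class NoteStore:
    """Hypothetical in-memory note store (illustration only)."""

    def __init__(self):
        # topic -> kind (e.g. "Patterns", "Failure modes") -> list of notes
        self._notes = defaultdict(lambda: defaultdict(list))

    def add(self, topic, kind, text):
        self._notes[topic][kind].append(text)

    def to_markdown(self):
        # Render topics as level-2 headings and note kinds as level-3 headings.
        lines = ["# Agent notes"]
        for topic in sorted(self._notes):
            lines.append(f"\n## {topic}")
            for kind in sorted(self._notes[topic]):
                lines.append(f"\n### {kind}")
                lines.extend(f"- {note}" for note in self._notes[topic][kind])
        return "\n".join(lines) + "\n"


store = NoteStore()
store.add("build", "Failure modes", "Stale cache breaks incremental builds")
store.add("build", "Patterns", "Run the linter before the test suite")
print(store.to_markdown())
```

Rendering to plain Markdown keeps the notes readable by both the agent and a human reviewer, which matches the interpretability goal stated above.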

All tool-invocation and environment interactions in CCA are abstracted into modular extensions. Each extension implements structured callbacks for perception, reasoning, or action—allowing precise control, observability, and compositionality in agent capabilities. Instantiating the production CCA corresponds to selecting and configuring bundles of extensions (file-editing, CLI, code search, etc.), facilitating ablation studies and domain adaptation.
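A minimal sketch of the extension idea, assuming a callback interface with one hook each for perception, reasoning, and action. The class names (`Extension`, `TruncateObservation`, `ShellEcho`, `Orchestrator`) and hook names (`on_input`, `on_plan`, `on_action`) are hypothetical and are not taken from the Confucius SDK.

```python
from __future__ import annotations


class Extension:
    """Hypothetical base class; every hook defaults to a pass-through."""
    name = "base"

    def on_input(self, observation: str) -> str:      # perception hook
        return observation

    def on_plan(self, plan: str) -> str:              # reasoning hook
        return plan

    def on_action(self, action: str) -> str | None:   # action hook
        return None  # None means "this extension does not handle the action"


class TruncateObservation(Extension):
    """Perception-side extension: caps observation length."""
    name = "truncate"

    def __init__(self, limit: int):
        self.limit = limit

    def on_input(self, observation: str) -> str:
        return observation[: self.limit]


class ShellEcho(Extension):
    """Toy action-side extension standing in for a CLI tool."""
    name = "cli"

    def on_action(self, action: str) -> str | None:
        if action.startswith("echo "):
            return action[len("echo "):]
        return None


class Orchestrator:
    def __init__(self, extensions):
        self.extensions = list(extensions)
        self.trace = []  # (extension name, action) pairs for observability

    def perceive(self, observation: str) -> str:
        for ext in self.extensions:
            observation = ext.on_input(observation)
        return observation

    def act(self, action: str) -> str:
        # The first extension that returns a result handles the action.
        for ext in self.extensions:
            result = ext.on_action(action)
            if result is not None:
                self.trace.append((ext.name, action))
                return result
        raise LookupError(f"no extension handled action: {action!r}")


agent = Orchestrator([TruncateObservation(5), ShellEcho()])
print(agent.perceive("abcdefgh"))
print(agent.act("echo hi"))
print(agent.trace)
```

Under this design, an ablation amounts to constructing the orchestrator with a different list of extensions, which is the property the paragraph above emphasizes.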

Crucially, agent configuration and improvement are automated by a Meta-agent that operationalizes agent design as a build-test-improve loop.

Figure 3: Meta-agent autonomously synthesizes, evaluates, and iteratively improves agent configurations, tool policies, and prompts using feedback from observed failures.

Developers describe high-level requirements, and the Meta-agent generates configuration, wires orchestrator and extensions, and launches evaluation tasks. It then observes trajectories and modifies prompt or tool policies to maximize empirical performance, systematically reducing reliance on expert tuning.
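The build-test-improve loop can be illustrated with a toy hill-climbing sketch. Everything here is an assumption for illustration: `build_test_improve`, `evaluate`, and `propose` are hypothetical names, the configuration is a plain dictionary, and the scoring function is synthetic, whereas the Meta-agent described above evaluates on real tasks and revises prompts and tool policies from observed trajectories.

```python
def build_test_improve(config, evaluate, propose, rounds):
    """Keep the best-scoring configuration seen across a fixed number of rounds."""
    best_config, best_score = config, evaluate(config)
    history = [(best_config, best_score)]
    for _ in range(rounds):
        # "Improve": derive a candidate from the current best and its score.
        candidate = propose(best_config, best_score)
        # "Test": score the candidate on the evaluation tasks.
        score = evaluate(candidate)
        history.append((candidate, score))
        if score > best_score:
            best_config, best_score = candidate, score
    return best_config, best_score, history


def evaluate(config):
    # Synthetic score that peaks when max_tool_calls == 8 (toy assumption).
    return -(config["max_tool_calls"] - 8) ** 2


def propose(config, score):
    # Toy proposal rule: always try a slightly larger tool-call budget.
    return {**config, "max_tool_calls": config["max_tool_calls"] + 2}


best, score, history = build_test_improve(
    {"max_tool_calls": 2}, evaluate, propose, rounds=5
)
print(best, score, len(history))
```

Candidates that score worse than the current best are recorded in `history` but not adopted, so the loop never regresses; a real Meta-agent would presumably use richer feedback than a single scalar.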

Empirical Evaluation

CCA demonstrates notable quantitative improvements over prior scaffolds on the SWE-Bench-Pro benchmark:

Figure 4: CCA resolves more SWE-Bench-Pro tasks than both research agent baselines and Anthropic’s proprietary Claude Opus 4.5 agent when normalized for environment, model, and tool access.

With Claude 4.5 Opus, CCA achieves a Resolve@1 of 54.3%, exceeding the result reported for Anthropic’s Claude Opus 4.5 system (52.0%). With Claude 4.5 Sonnet, CCA attains 52.7%, outperforming both SWE-Agent and Live-SWE-Agent under identical backends. These improvements derive from scaffolding alone (context management and tool extensions) rather than from differences in the underlying LLMs.

Ablations further highlight the impact of CCA mechanisms:

- Context compression and hierarchical working memory yield a 6.6-point gain in Resolve@1 (48.6% vs. 42.0% with Claude 4 Sonnet).

- Ablating Meta-agent-optimized tool use causes a substantial performance drop, confirming that it contributes independently of context management.

- Long-term note-taking decreases iteration steps, reduces token usage by over 10%, and increases downstream solve rates by 1.4% when persistent notes are provided for subsequent tasks.
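The figures above mix percentage points and percentages, so it can help to make the arithmetic explicit. The short sketch below uses hypothetical helper names (`point_gain`, `relative_gain`) and only the Resolve@1 values quoted in the first bullet; the text does not say whether the 1.4% note-taking gain is absolute or relative, so it is not computed here.

```python
def point_gain(with_feature, without_feature):
    """Absolute difference in percentage points."""
    return with_feature - without_feature


def relative_gain(with_feature, without_feature):
    """Improvement relative to the baseline, in percent."""
    return (with_feature - without_feature) / without_feature * 100


# Resolve@1 with and without context management, as quoted above.
print(f"{point_gain(48.6, 42.0):.1f} points")       # 6.6 points
print(f"{relative_gain(48.6, 42.0):.1f}% relative")  # 15.7% relative
```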

Evaluation across buckets of file-edit volume confirms that CCA sustains robust performance even for multi-file refactorings, with only gradual degradation as task complexity increases.

Comparative Analysis and Traceability

On real-world debugging tasks (e.g., adversarial CUDA memory management failures in PyTorch), CCA intervenes precisely and often matches human-engineered solutions. Alternative multi-agent frameworks (e.g., Claude Code) can over-extend or drift off target because their context is decentralized and they are instructed to be thorough. CCA’s single-agent chain structure, with persistent hierarchical state, provides the continuity essential for large-scale debugging.

Implications and Future Directions

CCA’s modular architecture and empirical results establish agentic scaffolding, rather than model pretraining or parameter count, as a key determinant of code agent performance at scale. Separating AX, UX, and DX supports interpretability, extensibility, and reasoning stability at the same time. Automated configuration via the Meta-agent provides a trajectory-driven mechanism for continual agent improvement.

These design principles suggest a natural path toward integration with reinforcement learning frameworks, where agent traces and feedback signals (from tool outcomes, error corrections, and note-taking) can provide multi-granular rewards. The Confucius SDK’s trajectory infrastructure is well-suited for RL-driven policy refinement, curriculum learning, and long-term deployed agent evolution.

The persistent, interpretable memory mechanisms also enable natural extensions toward formal program verification, provenance tracking, and organization-scale agent deployment.

Conclusion

The Confucius Code Agent and SDK concretely advance scalable, extensible agent scaffolding for real-world codebases, showing that principled architectures, memory management, and automated extension refinement substantially boost agentic effectiveness beyond what is attainable with backbone model improvements alone. This framework lays a foundation for deployable, continuously improving developer agents and provides a platform for future research at the intersection of agent architecture, reinforcement learning, and practical software engineering.