From Task Executors to Research Partners: Evaluating AI Co-Pilots Through Workflow Integration in Biomedical Research (2512.04854v1)

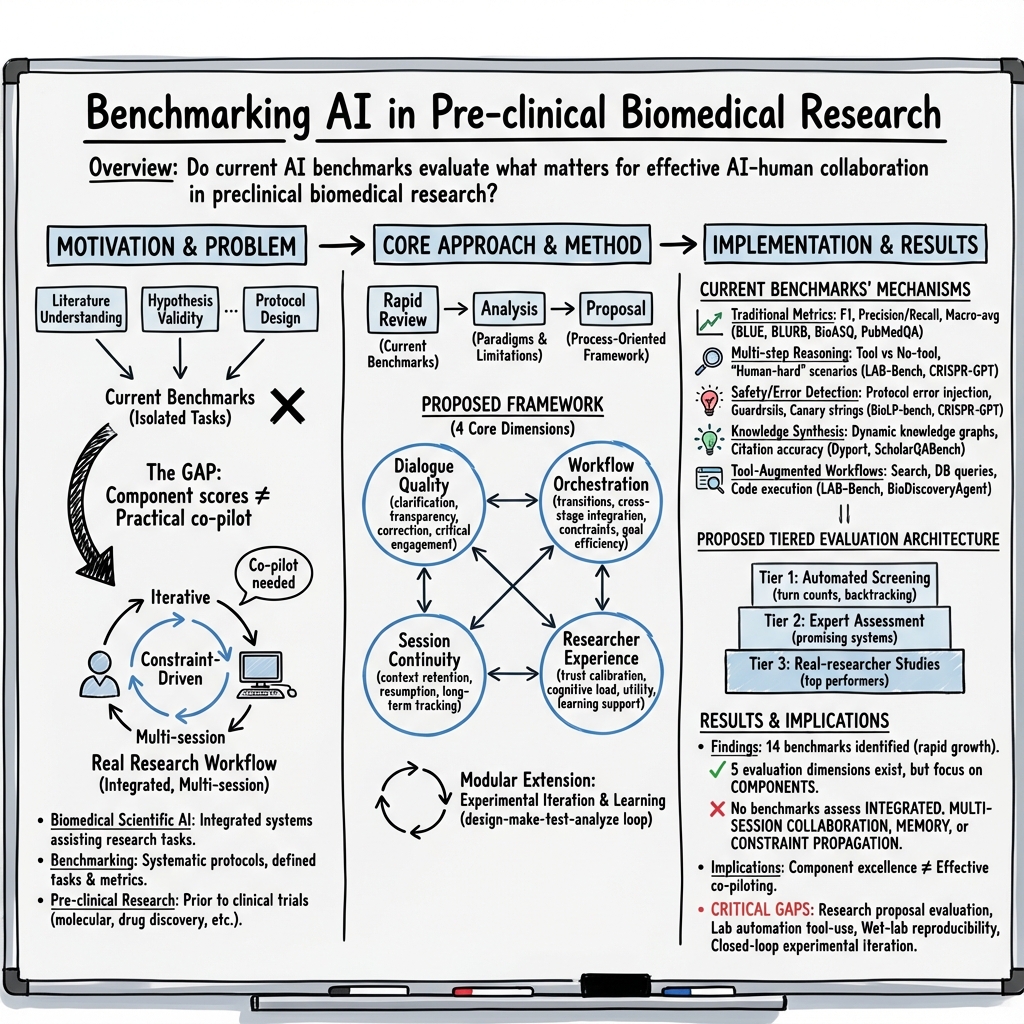

Abstract: Artificial intelligence systems are increasingly deployed in biomedical research. However, current evaluation frameworks may inadequately assess their effectiveness as research collaborators. This rapid review examines benchmarking practices for AI systems in preclinical biomedical research. Three major databases and two preprint servers were searched from January 1, 2018 to October 31, 2025, identifying 14 benchmarks that assess AI capabilities in literature understanding, experimental design, and hypothesis generation. The results revealed that all current benchmarks assess isolated component capabilities, including data analysis quality, hypothesis validity, and experimental protocol design. However, authentic research collaboration requires integrated workflows spanning multiple sessions, with contextual memory, adaptive dialogue, and constraint propagation. This gap implies that systems excelling on component benchmarks may fail as practical research co-pilots. A process-oriented evaluation framework is proposed that addresses four critical dimensions absent from current benchmarks: dialogue quality, workflow orchestration, session continuity, and researcher experience. These dimensions are essential for evaluating AI systems as research co-pilots rather than as isolated task executors.

Explain it Like I'm 14

From Task Executors to Research Partners — Explained for a 14-year-old

Overview: What is this paper about?

This paper looks at how we test (“benchmark”) AI systems that help scientists do biomedical research (the kind of lab science done before testing things in humans). The authors ask: Are we testing AI the right way if we want it to be a true research partner, not just a tool that solves single tasks?

They find that every existing test they identified checks isolated skills (like reading papers or designing a single experiment), but real science is a long, messy, back-and-forth process. So they suggest a new way to evaluate AI that focuses on the whole research workflow, including memory across days, helpful dialogue, and adapting to constraints (like budget or time).

Key objectives: What questions did the researchers ask?

The paper tries to answer three simple questions:

- What kinds of tests already exist for AI used in biomedical research, and what do they measure?

- Where do these tests fall short for judging AI as a true research partner?

- What would a better, process-focused testing system look like?

Methods: How did they study this?

Think of this as a quick but careful “review of the field.” The authors:

- Searched three major science databases and two preprint servers for papers published from January 2018 to October 2025 about how AI is evaluated in preclinical biomedical research.

- Picked studies that clearly described how they test AI, the tasks involved, and how performance is measured.

- Collected details (like what tasks were covered and what metrics were used) and compared the tests.

If “benchmarks” sound abstract, imagine them as standardized tests for AI:

- Like a driving test checks turning, braking, and parking, AI benchmarks check reading research papers, coming up with ideas, planning experiments, spotting errors, and using tools (like databases and coding).

Main findings: What did they discover and why is it important?

1) Current tests focus on single skills, not full research teamwork

They found 14 benchmarks, and each one tests a separate part of the research process:

- Understanding scientific papers

- Generating hypotheses (educated guesses)

- Designing experiments or protocols

- Running machine-learning analyses

These tests are useful, but they miss what real collaboration looks like. In real labs, projects unfold over weeks, with new data, budget changes, and lots of back-and-forth discussion. A good AI co-pilot should remember past conversations, ask smart clarifying questions, adapt plans, and carry constraints (like cost limits) forward.

2) Five common evaluation areas show progress—but also gaps

The paper groups existing tests into five areas:

- Traditional performance metrics: Accuracy on tasks like question answering, reading comprehension, or summarization.

- Multistep reasoning and planning: Can the AI plan complex lab steps and stitch them together?

- Safety and error detection: Can the AI spot dangerous mistakes in lab protocols and avoid harmful suggestions?

- Knowledge synthesis and discovery: Can it combine information from multiple papers and generate valuable new ideas?

- Tool-augmented workflows: Can it use external tools (databases, code, literature search) to get better results?

These areas are important, but the tests within them still measure isolated capabilities.

3) Clear examples of current limitations

- Citation hallucinations: Some models made up references to scientific papers more than 90% of the time when asked for sources. That’s like citing fake books for a school report.

- Poor error detection: AI often failed to catch critical mistakes in lab protocols that could cause experiments to fail or be unsafe.

- Underperforming on end-to-end tasks: When asked to build full machine-learning pipelines in biomedical domains, AI agents performed worse than human experts.

- Tool use helps, but isn’t enough: Giving AI access to tools improves performance on some tasks, but many tests don’t simulate real lab conditions (like messy data, equipment issues, or changing constraints).

4) The big gap: Collaboration over time

Real scientific work is not a single session. It looks more like a week-long group project:

- Monday: Analyze data

- Tuesday: Update the hypothesis

- Thursday: Change the plan because of budget talks

- Next week: Write a proposal

Current tests don’t check whether the AI can remember Tuesday’s changes, apply Thursday’s budget limits, or ask good questions before making a decision.
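For readers who code, here is a minimal Python sketch of the kind of check a session-continuity test could run on the Thursday example. It is only an illustration, not the paper's method: the names `Constraint`, `SessionLog`, `check_proposal`, and `continuity_score` are hypothetical, and it assumes earlier sessions have already been boiled down to simple numeric limits.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Constraint:
    """A numeric limit agreed in an earlier session (hypothetical format)."""
    name: str      # e.g. "budget_usd"
    limit: float   # maximum allowed value
    set_on: str    # session where it was introduced, e.g. "Thursday"


@dataclass
class SessionLog:
    """Hypothetical memory of constraints carried across sessions."""
    constraints: dict[str, Constraint] = field(default_factory=dict)

    def add_constraint(self, c: Constraint) -> None:
        # A newer constraint with the same name replaces the older one.
        self.constraints[c.name] = c


def check_proposal(log: SessionLog, proposal: dict[str, float]) -> list[str]:
    """List every remembered constraint that a new proposal breaks or ignores."""
    problems = []
    for name, c in log.constraints.items():
        if name not in proposal:
            problems.append(f"{name}: not addressed (set on {c.set_on})")
        elif proposal[name] > c.limit:
            problems.append(
                f"{name}: {proposal[name]} exceeds limit {c.limit} (set on {c.set_on})"
            )
    return problems


def continuity_score(log: SessionLog, proposal: dict[str, float]) -> float:
    """Share of remembered constraints the proposal respects (1.0 = all)."""
    if not log.constraints:
        return 1.0
    return 1 - len(check_proposal(log, proposal)) / len(log.constraints)


# Example: Thursday's budget limit should still apply to next week's proposal.
log = SessionLog()
log.add_constraint(Constraint("timeline_weeks", 6, "Monday"))
log.add_constraint(Constraint("budget_usd", 5000, "Thursday"))
proposal = {"timeline_weeks": 4, "budget_usd": 7200}
print(check_proposal(log, proposal))
# ['budget_usd: 7200 exceeds limit 5000 (set on Thursday)']
print(continuity_score(log, proposal))  # 0.5
```

Counting remembered constraints is just one possible design. A real benchmark would also have to check softer things, like whether the AI noticed Tuesday's updated hypothesis, which a numeric limit cannot capture.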

5) A proposed better framework: Test AI as a co-pilot, not just a task solver

The authors suggest evaluating AI on four missing dimensions:

- Dialogue quality: Does the AI ask helpful questions, listen to feedback, and explain its reasoning clearly?

- Workflow orchestration: Can it organize multi-step tasks and connect them smoothly across tools and stages?

- Session continuity: Does it remember context across days and carry forward constraints (like budget or time)?

- Researcher experience: Is it genuinely helpful and trustworthy to work with, reducing effort and improving confidence?

Why this matters: What is the impact?

If we keep testing AI only on isolated tasks, we might think it’s ready for the lab when it isn’t. That can:

- Waste time and money

- Miss important discoveries

- Introduce safety risks in experiments

Better, process-oriented evaluation can:

- Help scientists use AI more safely and effectively

- Speed up discovery by supporting true teamwork between humans and AI

- Encourage AI designs that are trustworthy, explainable, and aligned with real-world research needs

In short

This paper says: We’ve gotten good at testing whether AI can do specific science tasks. But if we want AI to be a real research partner, we must test how it works with people over time—remembering, adapting, and communicating well. The authors propose a new evaluation framework to make that possible, aiming for AI that’s not just smart, but also collaborative, safe, and genuinely useful in the lab.

Knowledge Gaps

Unresolved knowledge gaps, limitations, and open questions

Below is a single, concrete list of what remains missing, uncertain, or unexplored, framed so future researchers can act on it:

- Operationalization gap: The proposed process-oriented dimensions (dialogue quality, workflow orchestration, session continuity, researcher experience) are not translated into measurable constructs, rubrics, or task protocols.

- Metrics for dialogue quality: No concrete, validated metrics exist for assessing clarifying-question behavior, critique incorporation, uncertainty communication, calibration, or avoidance of defensive reasoning in scientific dialogue.

- Session continuity measurement: No standardized tasks or metrics test multi-day memory (recall, updating, forgetting, and privacy-preserving retention) or quantify constraint propagation across sessions.

- Workflow orchestration benchmarks: Absent are stress tests that require budget/time constraints, instrument availability, protocol dependencies, and replanning after failures, with verifiable scoring.

- Researcher experience evaluation: No user-study designs, instruments (e.g., NASA-TLX, trust-calibration scales), or longitudinal protocols are provided to measure workload, trust, reliance, and satisfaction in real labs.

- Prospective validation: The review does not present field trials or prospective studies linking benchmark performance on integrated workflows to real-world research outcomes (e.g., successful experiments, time-to-result, cost savings).

- Safety-in-interaction: There is no benchmark for detecting and mitigating automation bias, overtrust, or overreliance in human-AI teams, nor for effective escalation/abstention behaviors under uncertainty.

- Biosecurity and dual-use: A comprehensive, standardized safety taxonomy and red-teaming protocol for dual-use assessment across diverse wet-lab contexts is not specified.

- Robustness to tool failures: Benchmarks lack scenarios with flaky tools (e.g., API outages, version conflicts, instrument malfunctions) to assess resilience, recovery, and safe fallback strategies.

- Error recovery and critique uptake: No tasks measure whether agents revise plans after human critique or failed experiments, quantify quality of retractions/corrections, or evaluate graceful failure modes.

- Data leakage detection standards: Canary strings and unpublished sets are mentioned but not standardized; no guidance is given on cross-model leakage audits or reporting requirements for contamination risk.

- Model-graded evaluation reliability: The field lacks protocols for evaluator-model selection, cross-evaluator agreement, bias auditing, adversarial robustness, and guarding against Goodhart effects when systems are optimized to please an LLM judge.

- Statistical rigor: There is no guidance on power analyses, uncertainty estimates, inter-rater reliability, or significance testing for integrated-workflow evaluations.

- Benchmark governance: No plan exists for versioning, deprecation, auditing, or community stewardship to prevent benchmark gaming and maintain validity over time.

- Interoperability standards: The paper does not define schemas/APIs for tool use, memory stores, ELN/LIMS integration, or workflow languages (e.g., CWL/WDL) to make orchestration benchmarks reproducible.

- Multimodality coverage: There are no integrated tasks combining text, omics tables, imaging, sequence data, and instrument logs with end-to-end, verifiable outcomes.

- Noisy and incomplete data: Benchmarks do not assess robustness to missing metadata, batch effects, measurement noise, or conflicting literature.

- Domain and modality gaps: Underrepresentation persists for certain subfields (e.g., microbiology, metabolism), non-English literature, low-resource settings, and cost-constrained labs.

- Human baselines for integrated workflows: Absent are standardized human-team baselines (novice vs. expert; tool-augmented vs. not) for multi-session, end-to-end tasks.

- Economic realism: There are no benchmarks that incorporate compute budgets, reagent costs, time-to-result, or carbon footprint as explicit optimization constraints.

- Reproducibility across model updates: The paper does not address how to evaluate session continuity and decision consistency when foundation models change versions or sampling seeds vary.

- Privacy and IP: No evaluation protocols address handling confidential lab notebooks, proprietary data, or IP-sensitive hypotheses in memory and retrieval components.

- Security and prompt injection: Tool-augmented scenarios lack tests for injection, data exfiltration, and unsafe command execution in lab-control or code-execution tools.

- Measuring novelty and impact: There is no validated method to score the “scientific novelty” or prospective impact of AI-generated hypotheses beyond retrospective link-prediction proxies.

- Comparative predictiveness: It is unknown whether high scores on proposed integration dimensions predict meaningful lab outcomes better than current component benchmarks.

- Dataset creation for longitudinal workflows: The field lacks shared, privacy-compliant corpora of multi-week lab dialogues, decisions, and outcomes to train and evaluate continuity and collaboration.

- Ground-truthing integrated tasks: There is no guidance for constructing gold standards for multi-step plans with multiple valid solutions and evolving constraints.

- Multi-agent collaboration: Benchmarks do not test coordination among multiple AI agents and humans (role assignment, handoffs, conflict resolution) in research settings.

- Generalizability across labs: There is no methodology to assess transfer of integrated-workflow performance across institutions with different tools, SOPs, and resource constraints.

- Wet-lab execution loops: Aside from isolated examples, there is no standardized, safe, and scalable instrument-in-the-loop evaluation framework with physical execution and outcome verification.

- Fairness and bias: The review does not propose methods to detect and mitigate systematic biases (organisms, diseases, demographics, institutions) in literature synthesis, hypothesis generation, or protocol selection.

- Reporting standards: Absent are minimum reporting checklists for agent setup, tool stacks, memory configurations, and safety controls to ensure reproducibility of integrated-workflow studies.

- Incentive alignment: The paper does not address how to prevent benchmark optimization that harms real-world practices (e.g., overfitting dialogue metrics at the expense of lab safety).

- Hybrid human-AI pedagogy: No protocols evaluate how systems adapt to users’ expertise levels, teach best practices, or scaffold novices without inducing overreliance.

- Calibration and uncertainty: There are no mandated measures of probabilistic calibration, confidence intervals, or uncertainty propagation in hypotheses, analyses, and plans.

- Constraint representation: It remains unclear how to formally represent and test complex constraints (regulatory, biosafety, ethics committee approvals) within orchestration benchmarks.

- Lifecycle evaluation: No framework measures performance drift over multi-month projects, including memory evolution, knowledge updates, and cumulative error control.
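Several of the gaps above (model-graded evaluation reliability, statistical rigor, inter-rater reliability) need a concrete agreement statistic. As one illustration, not a protocol the paper specifies, the Python sketch below computes Cohen's kappa between an LLM judge and a human grader who labelled the same items; the pass/fail labels are hypothetical.

```python
from __future__ import annotations

from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Chance-corrected agreement between two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: fraction of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if each rater labelled at random with their own label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    chance_hits = sum(counts_a[label] * counts_b[label] for label in counts_a)
    if chance_hits == n * n:
        return 1.0  # both raters used one identical label for every item
    expected = chance_hits / (n * n)
    return (observed - expected) / (1 - expected)


human = ["pass", "pass", "fail", "pass", "fail", "pass"]
judge = ["pass", "fail", "fail", "pass", "fail", "pass"]  # hypothetical LLM-judge labels
print(round(cohens_kappa(human, judge), 2))  # 0.67
```

Kappa discounts the agreement two raters would reach by chance given their own label frequencies. In the example the raters agree on 5 of 6 items (about 0.83), but chance agreement is 0.5, so kappa is about 0.67. For more than two raters, Fleiss' kappa or Krippendorff's alpha are common alternatives.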

Glossary

- Agentic AI: AI systems designed to autonomously plan, decide, and act within workflows, often coordinating tools and multi-step tasks. "Recent advances in LLMs and agentic AI architectures promise to transform how scientists conduct research"

- AUROC: Area Under the Receiver Operating Characteristic curve; a metric summarizing binary classifier performance across decision thresholds. "graded by established metrics, such as AUROC and Spearman correlation"

- Bayesian optimization: A sequential model-based method for optimizing expensive-to-evaluate functions, often used for experiment or hyperparameter selection. "achieving a 21% improvement over the Bayesian optimization baselines"

- BERTScore: A text evaluation metric that uses contextual embeddings from BERT to measure similarity between generated and reference text. "BioLaySumm evaluates lay summarization quality using ROUGE scores, BERTScore, and readability metrics"

- Canary string: A unique marker inserted into benchmarks or data to detect training contamination or leakage. "The LAB-Bench includes a canary string for training contamination monitoring"

- Closed-loop discovery: An iterative process where hypotheses are generated, tested, and refined based on results to drive discovery. "and closed-loop discovery (Roohani et al., 2024)"

- CRISPR: A genome editing technology enabling targeted modifications in DNA using guide RNAs and Cas enzymes. "CRISPR-GPT provides an automated agent for CRISPR experimental design with 288 test cases"

- Data leakage: Unintended inclusion of test or future information in training or evaluation that artificially inflates performance. "addressing data leakage concerns that could artificially inflate the performance metrics"

- Dynamic knowledge graphs: Evolving graph structures that represent entities and relations over time for tasks like hypothesis generation. "using dynamic knowledge graphs with temporal cutoff dates and importance-based scoring"

- Ethical guardrails: Constraints and policies embedded in AI systems to prevent unsafe or unethical actions. "CRISPR-GPT incorporates ethical guardrails that prevent unethical applications, such as editing viruses or human embryos"

- Flesch-Kincaid Grade Level: A readability metric estimating the U.S. school grade level required to understand a text. "BioLaySumm evaluates lay summarization quality using ROUGE scores, BERTScore, and readability metrics, including the Flesch-Kincaid Grade Level"

- Genetic perturbation: Experimental alteration of gene function (e.g., knockdown, knockout) to study biological effects. "BioDiscoveryAgent, which designs genetic perturbation experiments through iterative closed-loop hypothesis testing"

- Hyperparameter tuning: The process of selecting optimal configuration parameters for machine learning models to improve performance. "including data preprocessing, model selection, hyperparameter tuning, and result submission"

- Levenshtein distance: An edit-distance metric quantifying how many insertions, deletions, or substitutions are needed to transform one string into another. "metrics including BLEU, SciBERTscore, Levenshtein distance, and ROC AUC"

- LLM-as-judge: An evaluation approach where an LLM scores or judges outputs instead of human annotators. "“LLM-as-judge,” “Model-graded evaluation,” “Safety evaluation,” “Protocol validation,” among others;"

- Macro-average scoring: Averaging metrics across multiple tasks or classes equally, without weighting by class size. "BLURB covering 13 datasets and six tasks with macro-average scoring"

- Model-agnostic leaderboards: Evaluation frameworks that compare systems independent of their architectures or training specifics. "establishing model-agnostic leaderboards to compare the system performance"

- Model-graded evaluation: Using an AI model to assess outputs, providing scalable but potentially biased alternatives to human grading. "The benchmark employs a model-graded evaluation requiring GPT-4o-level capabilities"

- Multi-agent systems: Architectures where multiple AI agents collaborate or coordinate to perform complex tasks. "multi-agent systems for collaborative biomedical research;"

- Parametric knowledge: Information encoded in a model’s parameters (as opposed to external tools or databases). "distinguish systems capable of leveraging external resources from those relying solely on parametric knowledge"

- Retrieval-augmented generation: Techniques that combine document retrieval with generation to ground outputs in external sources. "“Retrieval-augmented generation,” among others;"

- ROC AUC: Receiver Operating Characteristic Area Under Curve; a performance measure indicating the trade-off between true and false positive rates. "The evaluation uses ROC AUC curves for different semantic type pairs (gene-gene, drug-disease)"

- Semantic indexing: Assigning standardized semantic labels to documents to enable efficient retrieval and organization. "the 2025 thirteenth BioASQ challenge on large-scale biomedical semantic indexing and question answering"

- sgRNA: Single-guide RNA used in CRISPR systems to direct the Cas enzyme to a specific genomic target. "sgRNA design"

- Spearman correlation: A rank-based correlation coefficient measuring monotonic relationships between variables. "graded by established metrics, such as AUROC and Spearman correlation"

- Temporal cutoff dates: Fixed time boundaries used to prevent future knowledge from contaminating evaluations, enabling prospective testing. "using dynamic knowledge graphs with temporal cutoff dates"

- Temporal knowledge graphs: Knowledge graphs that incorporate time-stamped edges or nodes to reflect changes over time. "Dyport employs temporal knowledge graphs with impact-weighted metrics using ROC AUC"

- Tool augmentation: Enhancing AI performance by integrating external tools (e.g., databases, code execution, search). "tool augmentation improves the performance of database queries, sequence reasoning, literature queries, and supplementary material analysis"

- Training contamination monitoring: Procedures to detect whether evaluation data has leaked into model training, ensuring valid assessments. "includes a canary string for training contamination monitoring"
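Several of the metrics in this glossary are simple enough to compute directly. The dependency-free Python sketch below implements four of them for illustration only: Levenshtein distance, AUROC in its rank (Mann-Whitney) form, Spearman correlation with average ranks for ties, and macro-averaging. Published benchmarks typically rely on library implementations (for example scikit-learn or SciPy), whose edge-case conventions may differ; the example inputs are made up.

```python
from __future__ import annotations


def levenshtein(a: str, b: str) -> int:
    """Minimum insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def auroc(labels: list[int], scores: list[float]) -> float:
    """P(random positive scores above random negative); ties count as 0.5."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def _average_ranks(xs: list[float]) -> list[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(xs)), key=lambda k: xs[k])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman(x: list[float], y: list[float]) -> float:
    """Pearson correlation computed on the ranks of x and y."""
    rx, ry = _average_ranks(x), _average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("Spearman correlation is undefined for constant input")
    return cov / (vx * vy) ** 0.5


def macro_average(per_task: dict[str, float]) -> float:
    """Unweighted mean over tasks: each task counts equally, whatever its size."""
    return sum(per_task.values()) / len(per_task)


print(levenshtein("kitten", "sitting"))                    # 3
print(auroc([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))           # 0.75
print(spearman([1, 2, 3, 4], [10, 20, 30, 40]))            # 1.0
print(macro_average({"ner": 1.0, "qa": 0.5, "re": 0.75}))  # 0.75
```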

Practical Applications

Immediate Applications

The paper’s findings point to practical steps organizations can take now to make AI systems more useful and safer as research partners by complementing component benchmarks with process-oriented evaluation and workflow integration.

- Stronger vendor and model selection for pharma/biotech R&D: augment existing component benchmarks (e.g., LAB-Bench, BioML-bench) with four process dimensions—dialogue quality, workflow orchestration, session continuity, and researcher experience—to score AI co-pilots in multi-session, budget- and constraint-aware studies. Sector: pharmaceuticals, biotech. Potential tools/workflows: a lightweight evaluation harness that logs multi-session interactions, tracks constraint propagation, and surveys researcher experience; incorporate canary strings for contamination monitoring. Assumptions/dependencies: access to representative, non-sensitive project scenarios; willingness to collect researcher feedback; data governance for session memory.

- Protocol safety linting before wet-lab execution: adopt BioLP-bench’s error-injection paradigm to automatically test whether AI assistants can detect failure-causing protocol errors, making “protocol linting” a standard pre-execution step. Sector: lab automation vendors, CROs, academic core facilities. Tools/workflows: Inspect-style model-graded evaluation backed by selective human review; integration with ELN/LIMS. Assumptions/dependencies: availability of validated protocol libraries; reliable evaluator models; safety review for dual-use concerns.

- Ethical guardrails in gene-editing design platforms: integrate CRISPR-GPT-style constraints that refuse unethical or dual-use actions (e.g., virus or embryo editing) into CRISPR design tools used by bench scientists. Sector: genomics, gene therapy. Tools/workflows: guardrail policies mapped to institutional biosafety rules; audit logs of refusals. Assumptions/dependencies: up-to-date policy definitions; alignment with institutional biosafety/biosecurity committees.

- Citation hygiene in literature workflows: use ScholarQABench’s citation accuracy metrics to flag hallucinated references and enforce retrieval-grounded answers in literature reviews, grant writing, and manuscript drafting. Sector: academia, pharma medical writing; daily life: students and science communicators. Tools/workflows: RAG pipelines with citation F1 scoring; PubMed/PMC connectors; automated reference validation reports. Assumptions/dependencies: access to bibliographic databases; organizational tolerance for stricter QA cycles.

- Tool-augmented assistant harnesses for computational biology: standardize environments that enable safe tool use (database queries, code execution, data analysis) shown to improve performance in LAB-Bench/BioDiscoveryAgent. Sector: bioinformatics, computational biology groups. Tools/workflows: secure code sandboxes, dataset access brokers, API connectors (NCBI, UniProt); role-based access control. Assumptions/dependencies: hardened infrastructure; auditability of tool calls; privacy controls for proprietary data.

- Training data contamination monitoring: embed canary strings and private/unpublished test sets into internal evaluation to detect leakage and keep scores from being inflated by it, as demonstrated by LAB-Bench and BioDiscoveryAgent. Sector: model development teams, evaluation labs. Tools/workflows: dataset watermarking; leakage detection dashboards. Assumptions/dependencies: control over evaluation data; disciplined release pipelines.

- Human-in-the-loop closed-loop discovery pilots: replicate BioDiscoveryAgent-style iterative experiment design (e.g., genetic perturbations) under strict human oversight to test whether AI-guided design explores more effectively than Bayesian optimization baselines. Sector: biotech discovery teams with automation capacity. Tools/workflows: small-scale automated experiments, iterative design loops, interpretable rationale logging. Assumptions/dependencies: access to appropriate assay platforms; safety gates; generalization checks on unpublished datasets.

- End-to-end ML workflow readiness checks: apply BioML-bench internally to assess whether agents can build robust pipelines across protein engineering, single-cell omics, imaging, and drug discovery—using results to set deployment boundaries and training priorities. Sector: computational R&D groups. Tools/workflows: benchmark harnesses tied to existing leaderboards; AUROC/Spearman reporting; failure mode taxonomy. Assumptions/dependencies: compute resources; acceptance that agents currently underperform human baselines.

- Lay summarization QA in science communication: adopt BioLaySumm-style readability and alignment metrics to improve patient-facing or stakeholder summaries of preclinical findings. Sector: communications teams in academia and industry; daily life: patient advocacy organizations. Tools/workflows: automated readability scoring and alignment checks; terminology glossaries. Assumptions/dependencies: metrics correlate with comprehension across diverse audiences; editorial oversight remains in the loop.

- Constraint-aware research planning aids: prototype co-pilots that propagate budget, timeline, and infrastructure constraints across multi-day sessions, surfacing replanning steps and trade-offs as identified in the paper’s workflow gap. Sector: academic labs, biotech project management. Tools/workflows: memory-enabled assistants integrated with ELN/project trackers; constraint-aware proposal builders. Assumptions/dependencies: reliable context memory; structured encoding of constraints; user acceptance of proactive clarifications.

- Policy and funding review add-ons: introduce lightweight requirements that AI-assisted submissions document citation verification, safety guardrails, and multi-session continuity checks. Sector: research funders, institutional review boards. Tools/workflows: checklists and audit templates; process-oriented evaluation summaries. Assumptions/dependencies: clear definitions of acceptable evidence; minimal administrative burden.
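The citation-hygiene item above can begin with a very simple automated check. The Python sketch below is an assumption for illustration, not ScholarQABench's metric: it compares identifiers only, computing set-based precision, recall, and F1 of cited DOIs against a verified gold list and flagging the rest for review. It does not judge whether a cited paper actually supports the claim it is attached to. The DOIs are placeholders.

```python
from __future__ import annotations

import re


def normalize_doi(doi: str) -> str:
    """Lower-case a DOI and strip common resolver prefixes (DOIs are case-insensitive)."""
    doi = doi.strip().lower()
    return re.sub(r"^(https?://(dx\.)?doi\.org/|doi:)", "", doi)


def citation_scores(cited: list[str], gold: list[str]) -> dict:
    """Set-based precision/recall/F1 of cited DOIs against a verified gold list.

    Citations not in the gold list are returned under "flagged" for manual or
    database checking; they may be hallucinated, or valid but unexpected.
    """
    cited_set = {normalize_doi(d) for d in cited}
    gold_set = {normalize_doi(d) for d in gold}
    hits = cited_set & gold_set
    precision = len(hits) / len(cited_set) if cited_set else 0.0
    recall = len(hits) / len(gold_set) if gold_set else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1,
            "flagged": sorted(cited_set - gold_set)}


# Placeholder DOIs for illustration only.
gold = ["10.1000/paper-a", "10.1000/paper-b"]
cited = ["https://doi.org/10.1000/PAPER-A", "10.1000/not-a-real-paper"]
print(citation_scores(cited, gold))
# {'precision': 0.5, 'recall': 0.5, 'f1': 0.5, 'flagged': ['10.1000/not-a-real-paper']}
```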

Long-Term Applications

Looking ahead, the paper’s process-oriented framework suggests new standards, products, and governance structures that will require further research, consensus, and scaling.

- Process-oriented benchmarking standards for research co-pilots: establish a community consortium to define multi-session, integrated workflow benchmarks that score dialogue quality, orchestration, continuity, and researcher experience alongside component metrics. Sector: standards bodies, research consortia, AI labs. Tools/products: “Research Co-Pilot Benchmark Suite” with multi-day scenarios and public/private splits. Assumptions/dependencies: cross-institutional agreement on tasks and metrics; funding for curation and maintenance.

- Certification and accreditation for lab-ready AI assistants: create a “Good AI Research Practice (GARP)” certification analogous to GLP/GMP that requires passing safety/error detection (BioLP-bench), ethical guardrails (CRISPR-GPT), contamination checks, and process scores. Sector: regulators, institutional biosafety committees, quality assurance. Tools/products: certification audits, compliance registries. Assumptions/dependencies: regulatory buy-in; liability frameworks; periodic re-certification.

- Research OS integrating ELN/LIMS with persistent agent memory: build a “Research Operating System” that orchestrates tasks across weeks, maintains contextual memory, enforces constraint propagation, and captures rationales—turning co-pilots into reliable collaborators. Sector: lab software, robotics/automation. Tools/products: memory managers, dialogue scoring services, workflow orchestrators, rationale archives. Assumptions/dependencies: interoperability standards (ELN/LIMS/APIs), robust privacy and retention policies, UI that supports critique and revision.

- Safe autonomous experimentation with orchestration and gating: evolve closed-loop systems from pilots to scaled autonomous labs where agents propose, run, and refine experiments under layered safety gates and human overrides. Sector: robotics, high-throughput screening, synthetic biology. Tools/workflows: hierarchical controllers, risk-scored gates, anomaly detection, emergency stop protocols. Assumptions/dependencies: reliable hardware, validated simulators, formal safety cases for high-stakes procedures.

- Impact-weighted discovery engines for portfolio strategy: operationalize Dyport-style importance scoring to prioritize high-impact hypotheses for funding and experimentation, complementing accuracy metrics with scientometric value. Sector: R&D portfolio management, funding agencies. Tools/workflows: temporal knowledge graphs, impact models, decision dashboards. Assumptions/dependencies: agreed-upon impact proxies; avoidance of bias toward fashionable topics; periodic recalibration.

- Standardized model-graded evaluation with calibrated judges: formalize use of evaluator models (LLM-as-judge) with calibration sets, bias audits, and adjudication protocols to scale assessment while preserving validity. Sector: AI safety institutes, benchmark providers. Tools/workflows: evaluator calibration suites, disagreement resolution workflows. Assumptions/dependencies: transparent evaluator training; human-in-the-loop for edge cases.

- Continuous collaboration quality monitoring: embed dialogue quality and session continuity KPIs into day-to-day co-pilot usage, enabling longitudinal tracking of collaboration effectiveness and surfacing decay or drift. Sector: QA/DevOps for AI in science. Tools/workflows: telemetry pipelines, KPI dashboards, periodic user studies. Assumptions/dependencies: consented logging; privacy-preserving analytics; organizational norms for feedback loops.

- Cross-domain generalization of process-oriented evaluation: transfer the framework to other complex knowledge work (e.g., engineering design, legal research, education), yielding better co-pilots for long-running projects outside biomedicine. Sector: software, finance, education. Tools/workflows: domain-specific multi-session benchmarks and orchestration patterns. Assumptions/dependencies: domain adaptation; appropriate safety and compliance overlays.

- Training and credentialing for human-AI co-research: develop curricula and micro-credentials that teach scientists how to critique, iterate, and co-orchestrate with AI, improving researcher experience and outcomes. Sector: academia, professional societies. Tools/workflows: scenario-based courses; evaluation practicums using benchmark suites. Assumptions/dependencies: institutional adoption; measurable learning outcomes tied to process metrics.

- Data governance for longitudinal agent memory: create policy frameworks for retention, provenance, and privacy of multi-session interaction data that co-pilots need to be effective. Sector: policy, compliance, legal. Tools/workflows: data retention policies, provenance tracking, consent models. Assumptions/dependencies: balance between utility and privacy; standardized schemas; legal clarity.

- Deployment pipelines that operationalize the scalability–validity–safety trilemma: design risk-tiered workflows that assign AI to tasks where scalability gains don’t compromise validity/safety, while reserving human oversight for safety-critical steps. Sector: all research organizations. Tools/workflows: triage matrices, task assignment policies, escalation triggers. Assumptions/dependencies: accurate task risk classification; cultural alignment with staged automation.
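The "continuous collaboration quality monitoring" item calls for KPI dashboards that surface decay or drift. One minimal way to flag drift is sketched below in Python, assuming a hypothetical per-session KPI between 0 and 1 (for example, the share of earlier constraints a co-pilot respected): compare the mean of the most recent sessions with a fixed baseline window and raise a flag when the drop exceeds a threshold. The window sizes and the 0.1 threshold are arbitrary placeholders, not values from the paper.

```python
from __future__ import annotations

from statistics import mean


def kpi_drifted(history: list[float], baseline_n: int = 10, window: int = 5,
                max_drop: float = 0.1) -> bool:
    """Flag a sustained drop in a per-session collaboration KPI (hypothetical).

    Compares the mean of the first `baseline_n` sessions with the mean of the
    most recent `window` sessions and returns True if the recent mean is lower
    by more than `max_drop`. All three defaults are arbitrary placeholders.
    """
    if len(history) < baseline_n + window:
        return False  # not enough sessions to compare yet
    baseline = mean(history[:baseline_n])
    recent = mean(history[-window:])
    return baseline - recent > max_drop


stable = [0.9] * 15
decaying = [0.9] * 10 + [0.7] * 5
print(kpi_drifted(stable), kpi_drifted(decaying))  # False True
```

A fixed baseline keeps the logic easy to audit; a rolling baseline or a formal change-point test would be less sensitive to a few unusual sessions but harder to explain to users.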
