- The paper introduces DiG-Flow, which uses sliced Wasserstein discrepancy to modulate observation features for robust action generation.

- It integrates a gating mechanism at the representation level, preserving pre-trained backbones while suppressing shortcut learning.

- Empirical results show significant gains on long-horizon and perturbed tasks, with stable convergence in both simulation and real-robot settings.

Discrepancy-Guided Flow Matching for Robust Vision-Language-Action Models

Motivation and Background

Robustness and generalization remain challenging for Vision-Language-Action (VLA) models in robot manipulation. The problem is sharpest under distribution shift and in complex multi-step tasks, where errors compound across steps and lead to task failures. Existing architectures that use flow matching for action generation perform well on standard benchmarks. However, their learned representations are fragile and prone to shortcut learning: spurious correlations dominate, and robustness degrades substantially under unseen conditions.

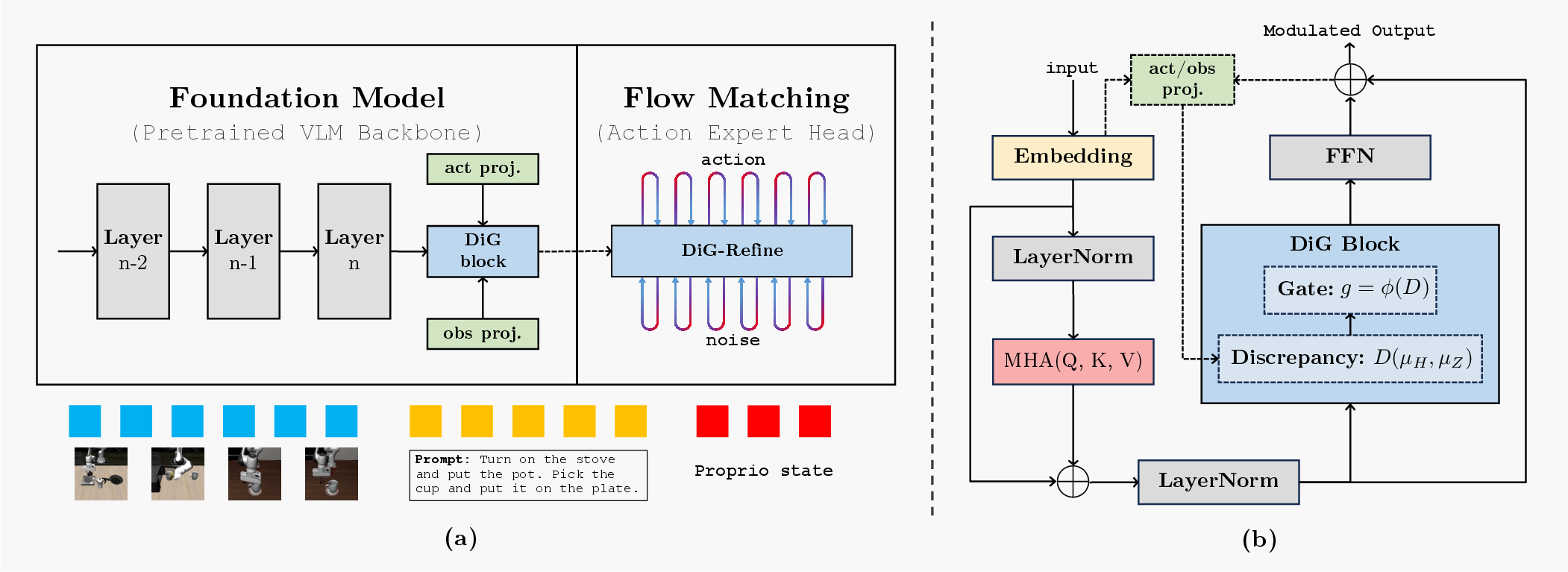

This work introduces DiG-Flow, a discrepancy-guided flow matching framework. It regularizes VLA model representations by exploiting geometric signals between vision-language observation embeddings and action embeddings. DiG-Flow uses an optimal transport-based discrepancy measure, specifically the sliced Wasserstein distance, to quantify the geometric compatibility between the observation and action distributions. This signal is mapped through a monotone gating function. The resulting gate scales a residual update to the observation features before they are passed to the flow matching policy head.

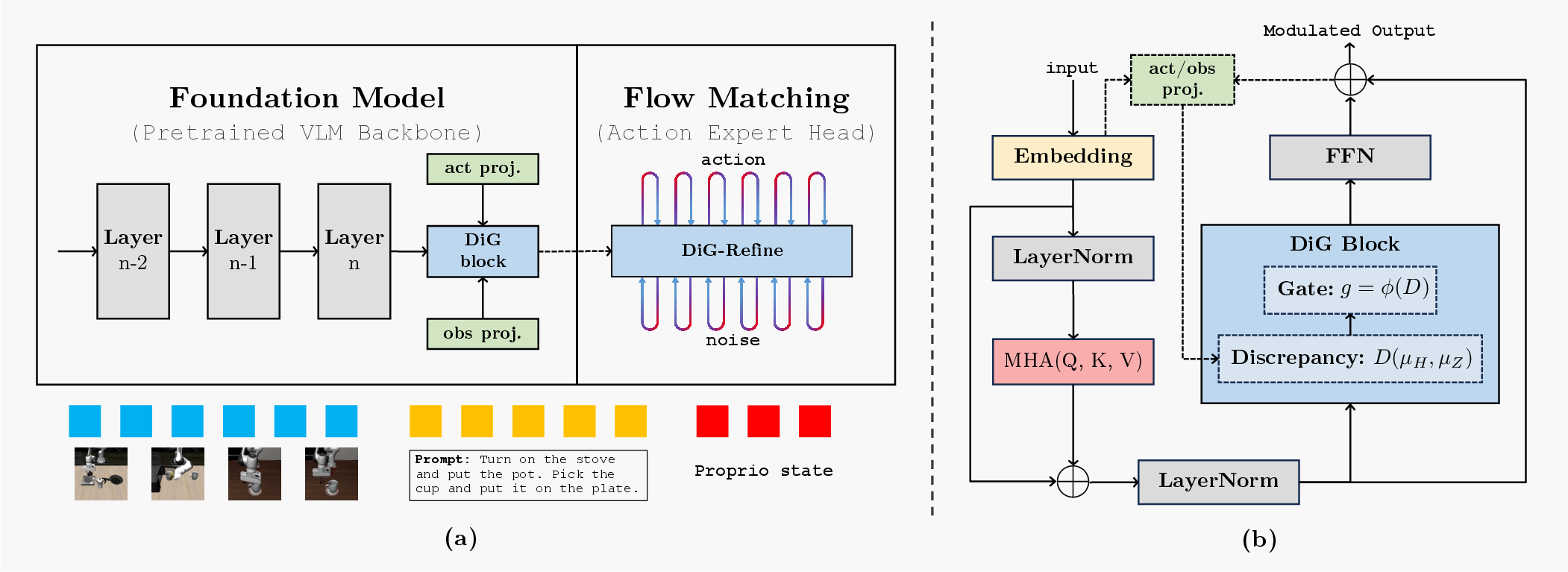

Figure 1: DiG-Flow architecture integrates a discrepancy-guided block at the representation level before flow matching, applying gated residual updates based on observed transport cost.

Methodology

Discrepancy Function and Modulation

Given the empirical distributions of observation features $\mu_H$ and action embeddings $\mu_Z$, DiG-Flow computes a discrepancy $D(\mu_H, \mu_Z)$ using the sliced 2-Wasserstein distance, a computationally tractable measure for high-dimensional spaces. This discrepancy is mapped to a gating factor $g = \phi(D)$ via a monotone, exponentially decaying function. The function is clipped at a minimum value to prevent gate collapse. The gate modulates a lightweight residual operator that adjusts the observation embeddings before policy inference.
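The discrepancy and gate described above can be sketched in NumPy as follows. This is a minimal illustration, not the paper's implementation, and it rests on three assumptions:

- Both point clouds have the same number of samples. This is a simplification, since real observation-token and action-chunk counts may differ.
- Random projection directions are drawn with a fixed seed.
- The gate takes the form $\max(g_{\min}, e^{-\tau D})$, with hypothetical defaults `tau=1.0` and `g_min=0.1`.

```python
import numpy as np


def sliced_wasserstein2(x: np.ndarray, y: np.ndarray,
                        num_projections: int = 128, seed: int = 0) -> float:
    """Monte Carlo sliced 2-Wasserstein distance between point clouds of shape (n, d).

    Assumption (for this sketch): x and y contain the same number of samples.
    """
    if x.shape != y.shape:
        raise ValueError("sketch assumes x and y have identical shapes")
    rng = np.random.default_rng(seed)
    # Random directions on the unit sphere in R^d.
    theta = rng.normal(size=(num_projections, x.shape[1]))
    theta /= np.linalg.norm(theta, axis=1, keepdims=True)
    # Project each cloud onto every direction, then sort along the sample axis.
    x_proj = np.sort(x @ theta.T, axis=0)
    y_proj = np.sort(y @ theta.T, axis=0)
    # In 1-D, optimal transport pairs sorted samples, so the squared W2 per
    # direction is the mean squared gap between sorted projections.
    sw2_squared = np.mean((x_proj - y_proj) ** 2)  # also averages over directions
    return float(np.sqrt(sw2_squared))


def gate(discrepancy: float, tau: float = 1.0, g_min: float = 0.1) -> float:
    """Monotone, exponentially decaying gate, clipped from below at g_min.

    tau and g_min are hypothetical hyperparameters for illustration.
    """
    return max(g_min, float(np.exp(-tau * discrepancy)))


rng = np.random.default_rng(1)
h = rng.normal(size=(64, 16))                  # stand-in observation features
z_near = h + 0.01 * rng.normal(size=h.shape)   # geometrically compatible embeddings
z_far = h + 3.0                                # mean-shifted, incompatible embeddings
g_near = gate(sliced_wasserstein2(h, z_near))
g_far = gate(sliced_wasserstein2(h, z_far))
print(f"gate(near) = {g_near:.3f}, gate(far) = {g_far:.3f}")
```

The nearly aligned embeddings give a gate close to 1. The shifted embeddings push the gate down toward the `g_min` floor, which is what keeps a large discrepancy from switching the residual path off entirely.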

This design leaves the pretrained backbone intact. The DiG-Block operates at the representation level and modifies neither the probability transport path nor the target vector field used in flow matching.
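To see why the flow-matching objective itself is unchanged, consider the common linear (rectified-flow) interpolation path. This convention is an assumption and may differ in detail from the one used by π0.5 or GR00T-N1. The notation is introduced here for illustration:

- $R$ is the lightweight residual operator.
- $\lambda$ is a residual-strength scalar.
- $a$ is a ground-truth action chunk.

Only the conditioning of the velocity network $v_\theta$ changes, from $H$ to $\tilde H$. The path $x_t$ and the target velocity $u_t$ are exactly those of the unmodified model.

$$
\begin{aligned}
\tilde H &= H + \lambda\, g\, R(H), \qquad g = \phi\big(D(\mu_H, \mu_Z)\big),\\
x_t &= t\, a + (1 - t)\, \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I),\; t \in [0, 1],\\
u_t &= \frac{d x_t}{d t} = a - \epsilon,\\
\mathcal{L}_{\mathrm{FM}} &= \mathbb{E}_{t, \epsilon, a}\, \big\| v_\theta(x_t, t \mid \tilde H) - u_t \big\|_2^2 .
\end{aligned}
$$

Setting $\lambda = 0$ recovers $\tilde H = H$, so the base policy is the special case of a disabled DiG-Block. Any gate-dependent loss weight multiplies $\mathcal{L}_{\mathrm{FM}}$ but does not alter $x_t$ or $u_t$.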

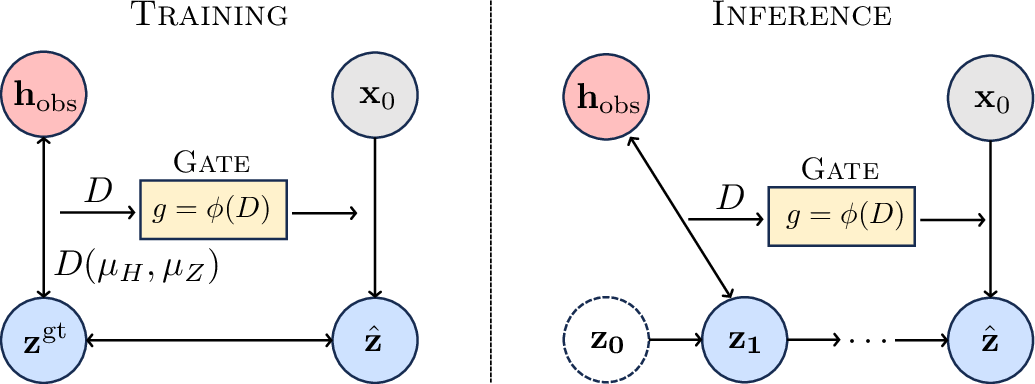

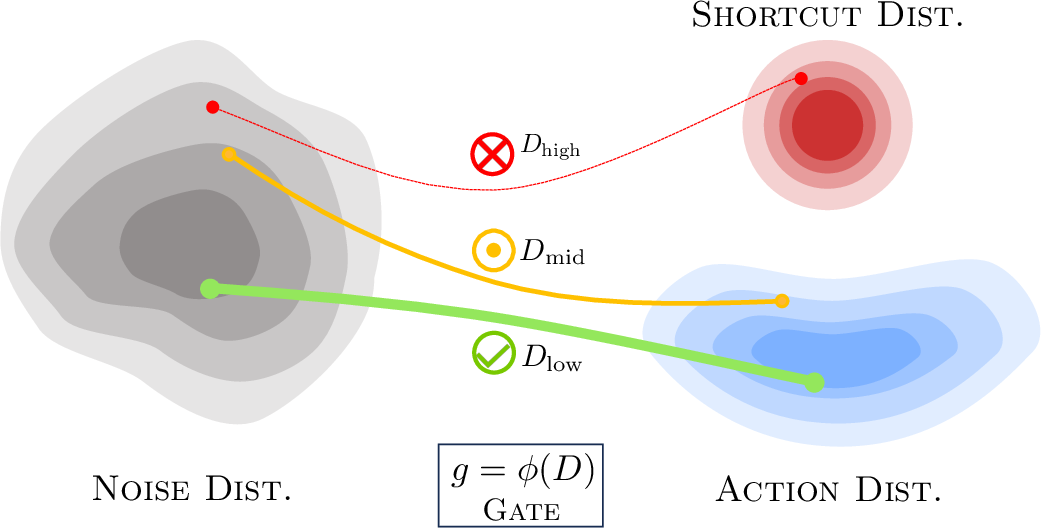

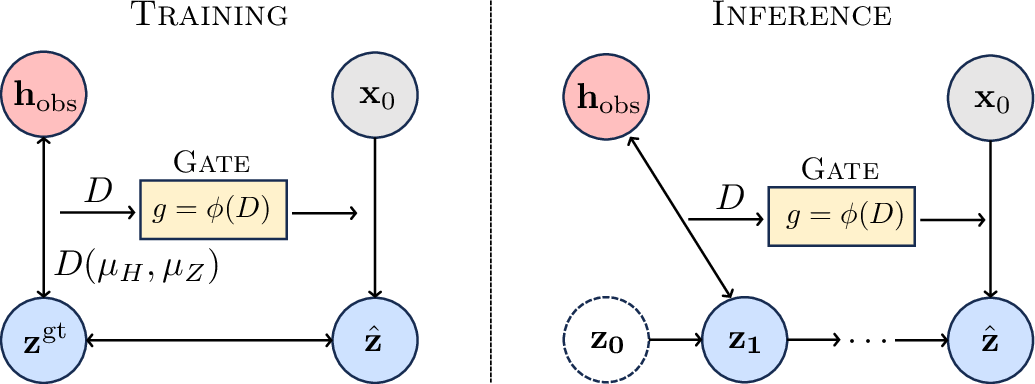

Figure 2: The gating mechanism operates during both training (using ground-truth actions) and inference (using predicted actions). The geometric discrepancy is used to reweight the loss during training and to modulate features.

Training and Inference Protocol

During training, ground-truth action chunks are encoded, projected, and compared with the observation embeddings to compute the geometric discrepancy. The gate for residual feature enhancement is derived from this discrepancy. The gate also dynamically reweights the flow-matching loss, suppressing shortcut solutions and amplifying semantically aligned behavior.
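A single training step can be sketched as below. This is a hypothetical illustration of the steps just described, not the paper's code, and it makes several simplifying assumptions:

- The action encoder, residual operator, and velocity head are toy NumPy matrices (`W_enc`, `W_res`, `W_v`).
- Action rows are paired one-to-one with observation rows.
- The path is linear from noise to action.
- The "reweighting" is taken to be a plain multiplication of the flow-matching loss by the gate.

```python
import numpy as np


def sliced_w2(x, y, n_proj=64, seed=0):
    """Sliced 2-Wasserstein estimate between equal-size point clouds (n, d)."""
    theta = np.random.default_rng(seed).normal(size=(n_proj, x.shape[1]))
    theta /= np.linalg.norm(theta, axis=1, keepdims=True)
    xs = np.sort(x @ theta.T, axis=0)
    ys = np.sort(y @ theta.T, axis=0)
    return float(np.sqrt(np.mean((xs - ys) ** 2)))


def dig_training_step(obs_feats, gt_actions, params, rng,
                      lam=0.5, tau=1.0, g_min=0.1):
    """One hypothetical DiG-style forward pass returning the gated loss.

    obs_feats:  (n, d) observation features from the (frozen) backbone
    gt_actions: (n, k) ground-truth action chunk, one row per feature row (simplification)
    params:     'W_enc' (k, d) action encoder + projection,
                'W_res' (d, d) residual operator,
                'W_v'   (d + k + 1, k) toy velocity head
    """
    # 1. Encode and project ground-truth actions into the observation feature space.
    z = gt_actions @ params["W_enc"]
    # 2. Geometric discrepancy and clipped monotone gate (a constant weight here).
    disc = sliced_w2(obs_feats, z)
    g = max(g_min, float(np.exp(-tau * disc)))
    # 3. Gated residual enhancement of the observation features.
    h_tilde = obs_feats + lam * g * np.tanh(obs_feats @ params["W_res"])
    # 4. Standard flow-matching regression on a linear noise-to-action path.
    t = rng.uniform(size=(gt_actions.shape[0], 1))
    eps = rng.normal(size=gt_actions.shape)
    x_t = t * gt_actions + (1.0 - t) * eps
    target_velocity = gt_actions - eps
    v_pred = np.concatenate([h_tilde, x_t, t], axis=1) @ params["W_v"]
    fm_loss = float(np.mean((v_pred - target_velocity) ** 2))
    # 5. Gate-weighted loss: higher discrepancy -> smaller weight (assumed form).
    return g * fm_loss, fm_loss, g, disc


rng = np.random.default_rng(0)
n, d, k = 32, 16, 4
params = {
    "W_enc": 0.3 * rng.normal(size=(k, d)),
    "W_res": 0.1 * rng.normal(size=(d, d)),
    "W_v": 0.1 * rng.normal(size=(d + k + 1, k)),
}
loss, fm_loss, g, disc = dig_training_step(
    rng.normal(size=(n, d)), rng.normal(size=(n, k)), params, rng)
```

The summary does not specify two details, and the sketch fixes each one by choice:

- Whether the gate multiplies the loss or enters it another way. The sketch uses the simplest weighted form.
- Whether gradients should flow through the gate in an autodiff framework. The sketch leaves this open.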

At inference, the policy uses predicted action embeddings in the discrepancy computation. Optionally, an iterative DiG-Refine procedure refines the actions through repeated gated residual enhancements. This procedure has theoretical guarantees of contraction under suitable operator regularization.
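DiG-Refine can be read as a fixed-point iteration. The sketch below is one plausible reading of the description above, not the paper's algorithm. Each pass does three things:

1. Recompute the discrepancy from the latest predicted actions.
2. Re-gate the residual update.
3. Re-run the policy.

The toy `tanh` policy, linear action encoder, residual operator, and all hyperparameters are hypothetical stand-ins. Their weights are kept small so that the map from actions to refined actions is contractive in this toy setting.

```python
import numpy as np


def sliced_w2(x, y, n_proj=64, seed=0):
    """Sliced 2-Wasserstein estimate between equal-size point clouds (n, d)."""
    theta = np.random.default_rng(seed).normal(size=(n_proj, x.shape[1]))
    theta /= np.linalg.norm(theta, axis=1, keepdims=True)
    xs = np.sort(x @ theta.T, axis=0)
    ys = np.sort(y @ theta.T, axis=0)
    return float(np.sqrt(np.mean((xs - ys) ** 2)))


def dig_refine(obs, policy, encode, residual, n_iters=10,
               lam=0.3, tau=1.0, g_min=0.1, tol=1e-8):
    """Hypothetical DiG-Refine loop (fixed-point iteration).

    Each pass recomputes the discrepancy from the latest predicted actions,
    re-gates the residual feature update, and re-runs the policy, stopping once
    successive action predictions differ by less than tol.
    """
    actions = policy(obs)          # initial prediction from unmodulated features
    r = residual(obs)              # residual operator output (obs is fixed here)
    step_sizes = []
    for _ in range(n_iters):
        disc = sliced_w2(obs, encode(actions))       # discrepancy vs. predicted actions
        g = max(g_min, float(np.exp(-tau * disc)))   # clipped monotone gate
        new_actions = policy(obs + lam * g * r)      # gated residual enhancement
        step_sizes.append(float(np.linalg.norm(new_actions - actions)))
        actions = new_actions
        if step_sizes[-1] < tol:
            break
    return actions, step_sizes


# Toy modules; small weights keep the refinement map contractive.
rng = np.random.default_rng(0)
n, d, k = 32, 16, 4
W_p = 0.05 * rng.normal(size=(d, k))   # hypothetical policy head
W_e = 0.1 * rng.normal(size=(k, d))    # hypothetical action encoder
W_r = 0.1 * rng.normal(size=(d, d))    # hypothetical residual operator
obs = rng.normal(size=(n, d))
actions, steps = dig_refine(
    obs,
    policy=lambda h: np.tanh(h @ W_p),
    encode=lambda a: a @ W_e,
    residual=lambda h: np.tanh(h @ W_r),
)
```

With a contractive map, the recorded `steps` shrink geometrically. If the weights, `lam`, or `tau` are made large, the same loop is no longer guaranteed to converge. This is consistent with the guarantee being conditional on regularizing the operator.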

Theoretical Guarantees

The analysis provides several results:

Empirical Evaluation

Simulation Benchmarks

DiG-Flow was evaluated on LIBERO (diverse manipulation tasks) and RoboCasa (photorealistic household scenes). It was integrated with two state-of-the-art VLA backbones, π0.5 and GR00T-N1, and consistently improved performance across suites. The gains were most marked on long-horizon, multi-step tasks (LIBERO-Long: +4 points for π0.5-DiG and +1.5 points for GR00T-N1-DiG). In few-shot, severely data-limited settings (RoboCasa, 50 demonstrations per task), DiG-Flow improved average success by up to 11.2 percentage points.

Robustness to Distribution Shift

Non-stationary visual and proprioceptive perturbations caused performance drops in standard VLA models. DiG-Flow models gained 4–6 points of robustness on the perturbed LIBERO suites. The largest gains were on LIBERO-Long (+14% under cosine perturbation, +17% under sine), indicating improved resistance to shortcut correlations and error accumulation.

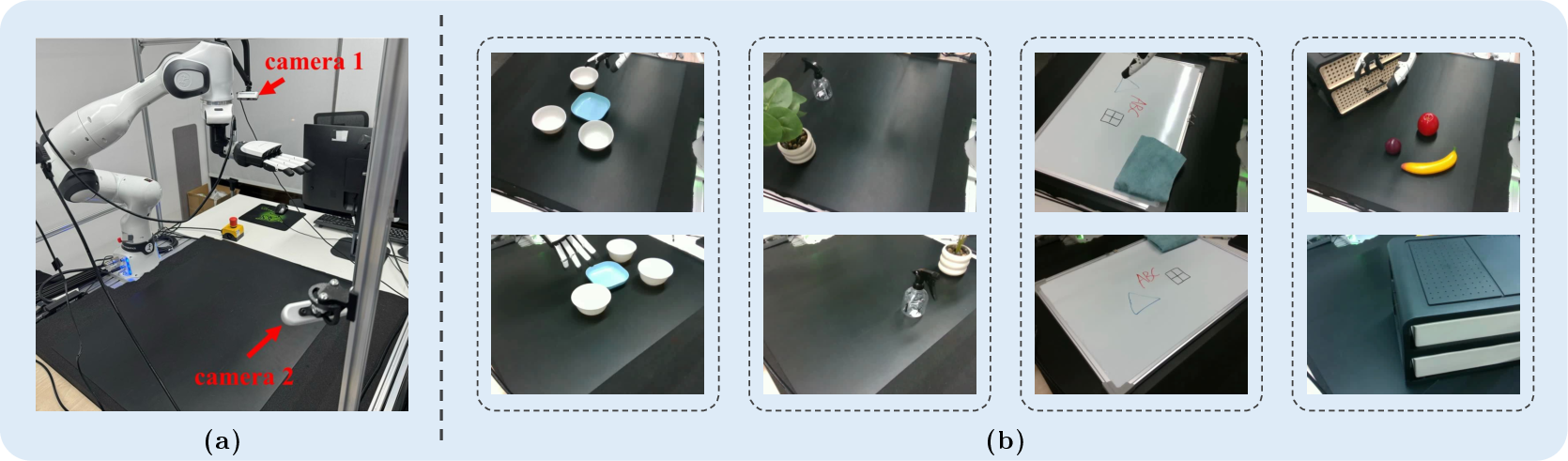

Real-World Robotic Manipulation

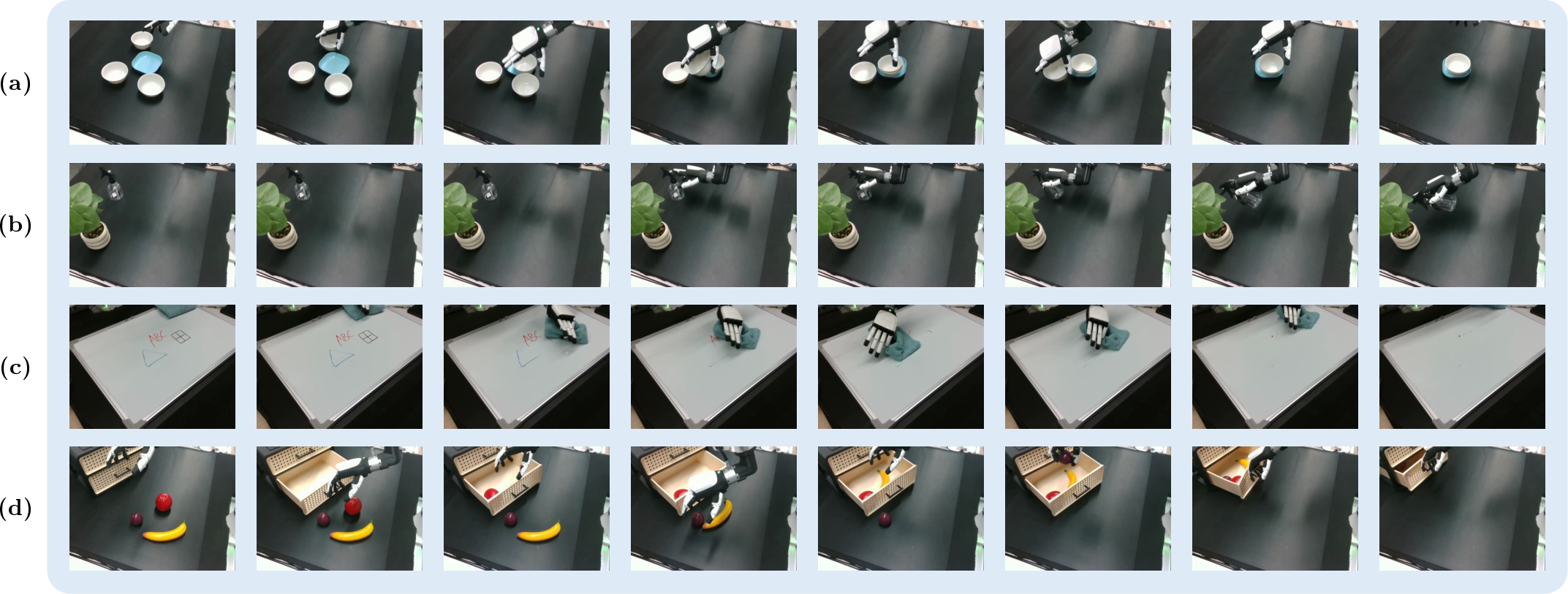

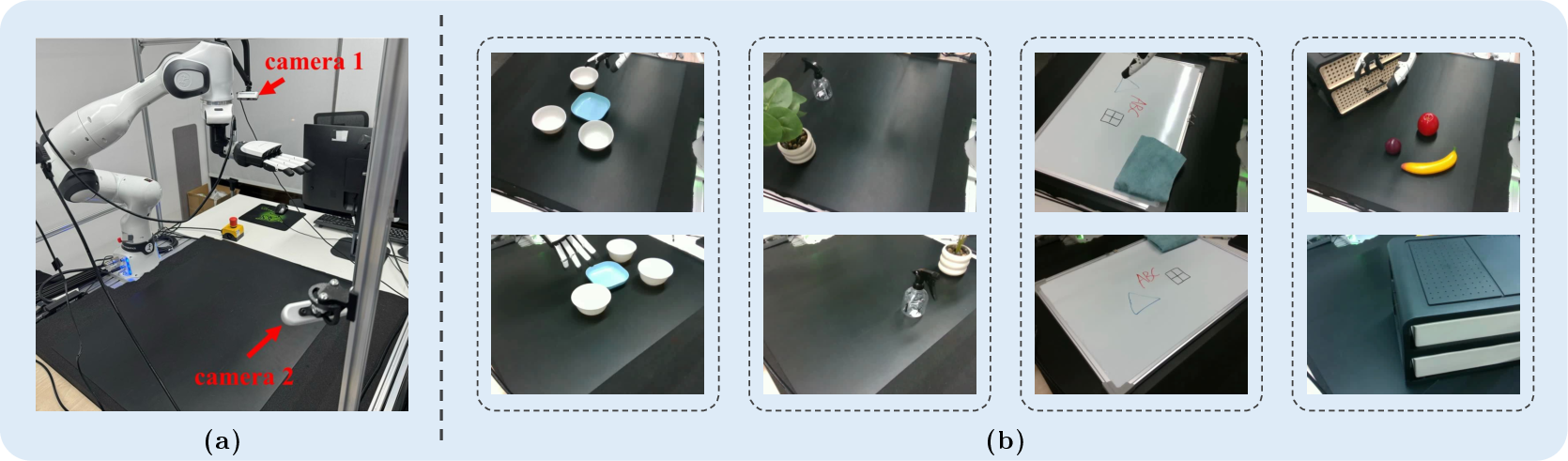

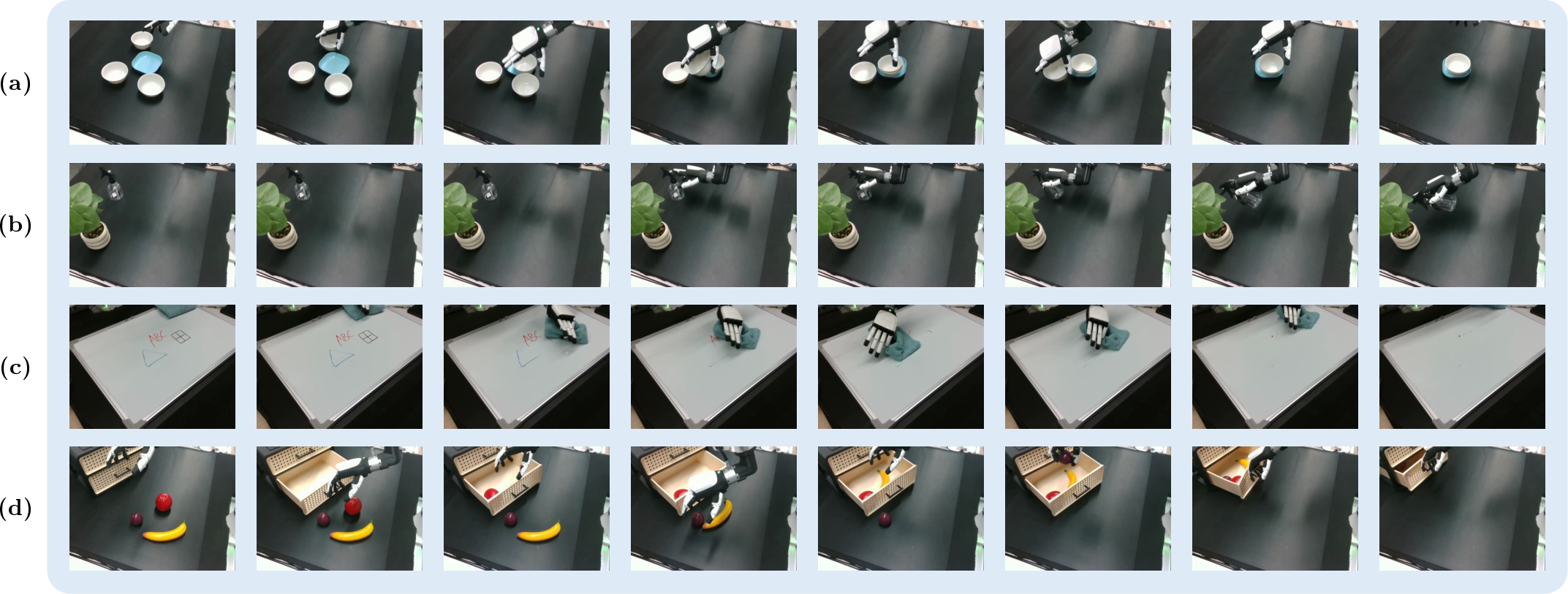

DiG-Flow was validated on a real robot with multiple cameras and a dexterous hand, executing four diverse manipulation tasks: stack-bowls, spray-plant, wipe-whiteboard, and sort-into-drawer. With only 50 human demonstrations per task, DiG-Flow improved whole-task and sub-task success rates across all four tasks. The gains were largest on long-horizon tasks (e.g., sort-into-drawer: +8% whole-task success).

Figure 4: Real robot setup with dual cameras and dexterous hand, executing complex manipulation benchmarks.

Figure 5: Qualitative multi-step rollouts by π0.5-DiG in diverse manipulation tasks, showcasing robust behavior under open-loop execution.

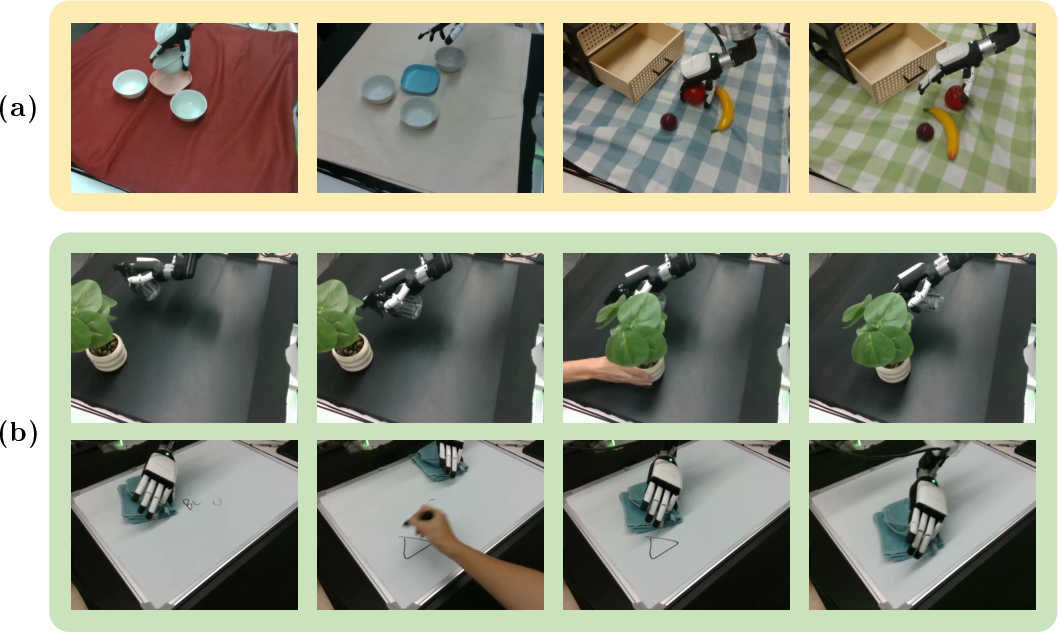

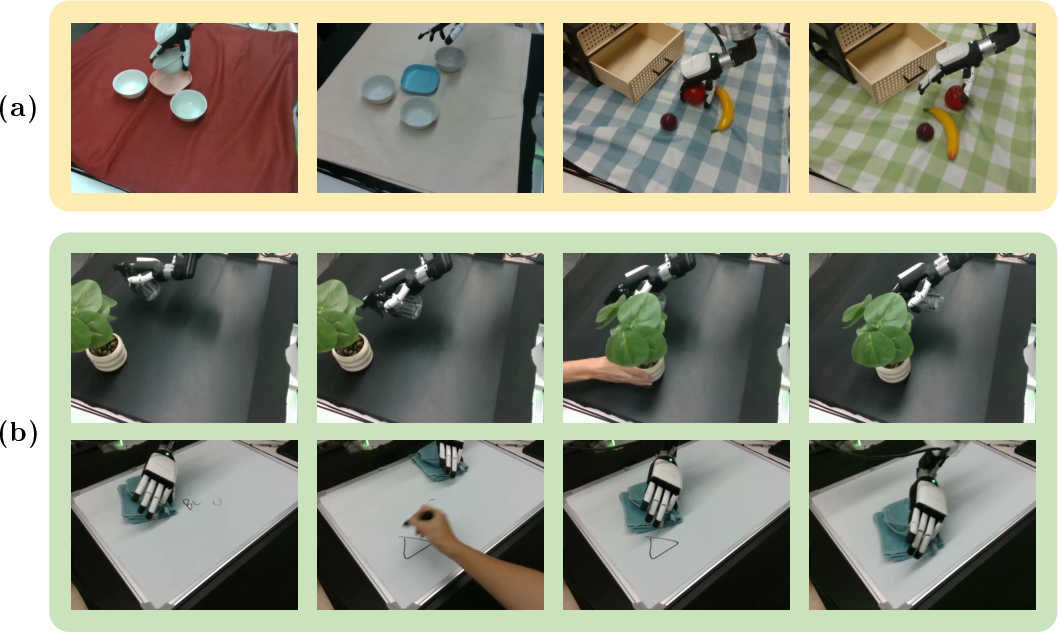

In distribution-shift experiments (background and sensor changes, and human interference), baseline models degraded severely. DiG-Flow maintained substantially higher success rates and more stable error patterns.

Figure 6: Robustness tests under background changes and real-time human interference; DiG-Flow sustains policy alignment and generalization.

Design Analysis

Ablation studies underscore the importance of using a Wasserstein-based geometric discrepancy for gating and feature modulation. Alternative measures (cosine similarity, MMD) yield moderate gains but are consistently inferior. Replacing discrepancy-guided gating with fixed or random gates substantially degrades long-horizon task performance (−12 to −16%).
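The ablated alternatives can be compared in a few lines. The sketch uses common textbook forms of each measure:

- cosine distance between mean embeddings;
- a biased Gaussian-kernel MMD estimate;
- the sliced 2-Wasserstein estimate.

The summary does not say which exact variants the paper used. The fixed gate value and random-gate range are also hypothetical. The toy data pairs two clouds with the same mean but different spread.

```python
import numpy as np


def cosine_discrepancy(x, y):
    """1 - cosine similarity of the mean embeddings (one simple variant)."""
    mx, my = x.mean(axis=0), y.mean(axis=0)
    return float(1.0 - mx @ my / (np.linalg.norm(mx) * np.linalg.norm(my) + 1e-12))


def mmd_rbf(x, y, bandwidth=1.0):
    """Biased (V-statistic) squared MMD with a Gaussian RBF kernel."""
    def kernel(a, b):
        sq = (a ** 2).sum(1)[:, None] + (b ** 2).sum(1)[None, :] - 2.0 * a @ b.T
        return np.exp(-np.maximum(sq, 0.0) / (2.0 * bandwidth ** 2))
    return float(kernel(x, x).mean() + kernel(y, y).mean() - 2.0 * kernel(x, y).mean())


def sliced_w2(x, y, n_proj=64, seed=0):
    """Sliced 2-Wasserstein estimate between equal-size point clouds (n, d)."""
    theta = np.random.default_rng(seed).normal(size=(n_proj, x.shape[1]))
    theta /= np.linalg.norm(theta, axis=1, keepdims=True)
    xs = np.sort(x @ theta.T, axis=0)
    ys = np.sort(y @ theta.T, axis=0)
    return float(np.sqrt(np.mean((xs - ys) ** 2)))


# Gate variants from the ablation; the fixed value and random range are hypothetical.
GATES = {
    "discrepancy": lambda d, rng: max(0.1, float(np.exp(-d))),
    "fixed": lambda d, rng: 0.5,
    "random": lambda d, rng: float(rng.uniform(0.1, 1.0)),
}

# Same mean, different spread: a mean-based cosine score barely reacts,
# while the transport-based score does.
rng = np.random.default_rng(0)
m = np.full(8, 5.0)
x = m + rng.normal(size=(200, 8))
y = m + 3.0 * rng.normal(size=(200, 8))
scores = {
    "cosine": cosine_discrepancy(x, y),
    "mmd": mmd_rbf(x, y),
    "sliced_w2": sliced_w2(x, y),
}
```

By construction, a mean-based cosine score is blind to differences in spread. The example therefore illustrates one way a distribution-level geometric signal carries more information. It is not an explanation of the paper's ablation numbers. The fixed and random gates ignore the discrepancy entirely, which is the property the ablation removes.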

Hyperparameter analysis shows that the method is robust to most choices, provided the residual strength and gating temperature are coordinated to keep the effective step size moderate. Monitoring the transport cost during training shows that it stabilizes at a non-trivial level rather than collapsing. This confirms that the discrepancy acts as a discriminative signal rather than a loss term to be minimized outright.
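One way to make "effective step size" concrete uses the following illustrative forms, which are not taken from the paper:

- the clipped gate $\phi(D) = \max(g_{\min}, e^{-\tau D})$, with the gating temperature $\tau$ written as a decay rate;
- the residual update $\tilde H = H + \lambda\, g\, R(H)$, with $\lambda$ as the residual strength.

Under these forms, the gated residual moves the features by $\lambda\,\phi(D)$ times the operator output:

$$
\eta(D) = \lambda\,\phi(D) = \lambda \max\big(g_{\min},\, e^{-\tau D}\big), \qquad \lambda\, g_{\min} \le \eta(D) \le \lambda \quad (D \ge 0).
$$

Suppose the discrepancy sits at a typical level $\bar D$ where the clip is inactive. Holding $\eta$ at a target $\eta^\star$ then requires $\lambda \approx \eta^\star e^{\tau \bar D}$. For example, take $\bar D = 1$ and $\eta^\star = 0.2$:

- $\tau = 1$ gives $\lambda \approx 0.2e \approx 0.54$.
- $\tau = 2$ gives $\lambda \approx 0.2e^2 \approx 1.48$.

With, e.g., $g_{\min} = 0.1$, the clip is inactive in both cases, since $e^{-2} \approx 0.135$. Increasing $\tau$ without increasing $\lambda$ shrinks the effective step.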

Implications and Future Directions

DiG-Flow offers a scalable, backbone-agnostic mechanism for improving VLA robustness against distributional drift, shortcut learning, and feature misalignment, without altering the core generative architecture. Its theoretical characterization suggests the method could extend to other domains with geometric misalignment between actions and observations. Potential future directions include:

- integration with self-supervised or RL training regimes;
- more robust discrepancy estimators;
- deployment on higher-DoF platforms and with other sensor modalities.

Conclusion

DiG-Flow introduces discrepancy-guided regularization for VLA models through optimal transport-based geometric signals, enabling more robust policy generation and enhanced resistance to spurious pattern exploitation. Both theoretical guarantees and extensive empirical evaluations in simulation and real-world environments substantiate its efficacy. The residual update mechanism and gating protocol allow non-intrusive integration, fostering generalizability and scalable deployment in robot learning systems.