STARFlow-V: End-to-End Video Generative Modeling with Normalizing Flow (2511.20462v1)

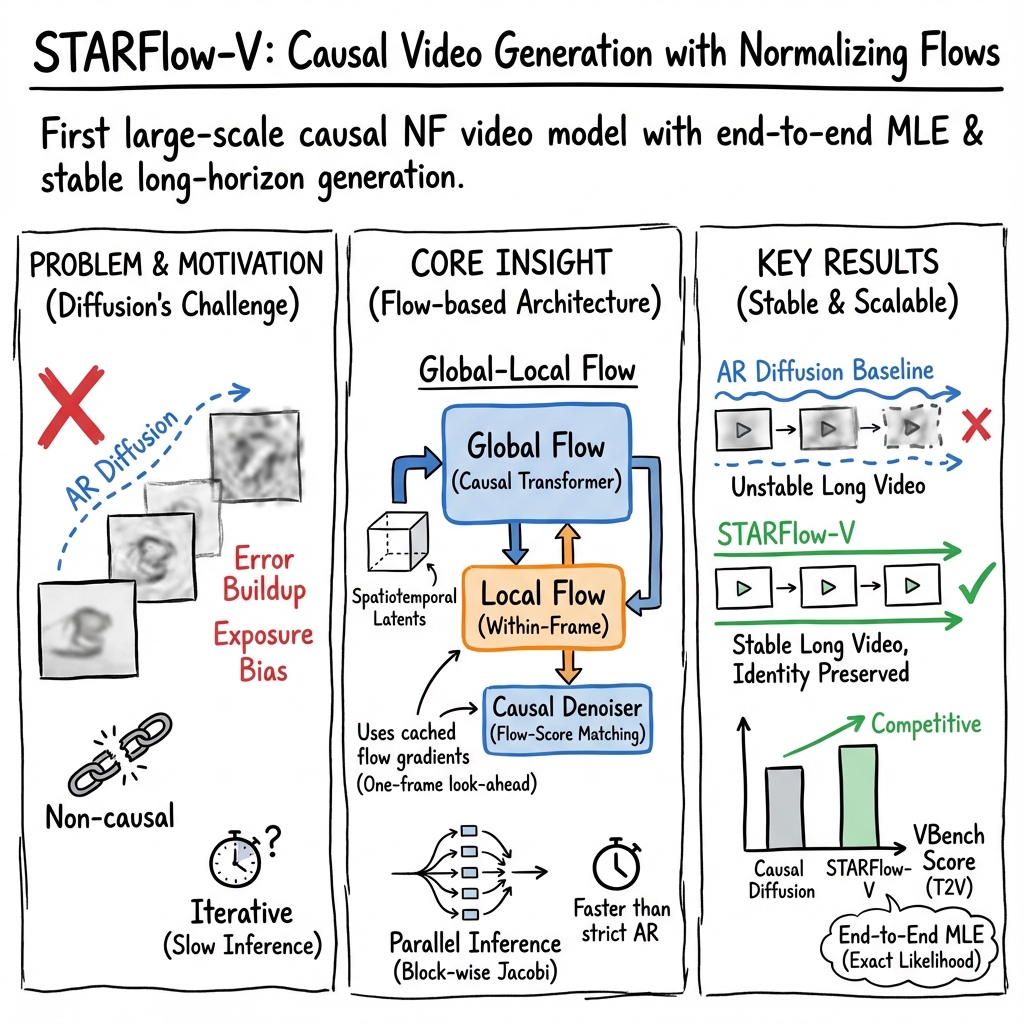

Abstract: Normalizing flows (NFs) are end-to-end likelihood-based generative models for continuous data, and have recently regained attention with encouraging progress on image generation. Yet in the video generation domain, where spatiotemporal complexity and computational cost are substantially higher, state-of-the-art systems almost exclusively rely on diffusion-based models. In this work, we revisit this design space by presenting STARFlow-V, a normalizing flow-based video generator with substantial benefits such as end-to-end learning, robust causal prediction, and native likelihood estimation. Building upon the recently proposed STARFlow, STARFlow-V operates in the spatiotemporal latent space with a global-local architecture which restricts causal dependencies to a global latent space while preserving rich local within-frame interactions. This eases error accumulation over time, a common pitfall of standard autoregressive diffusion model generation. Additionally, we propose flow-score matching, which equips the model with a light-weight causal denoiser to improve the video generation consistency in an autoregressive fashion. To improve the sampling efficiency, STARFlow-V employs a video-aware Jacobi iteration scheme that recasts inner updates as parallelizable iterations without breaking causality. Thanks to the invertible structure, the same model can natively support text-to-video, image-to-video as well as video-to-video generation tasks. Empirically, STARFlow-V achieves strong visual fidelity and temporal consistency with practical sampling throughput relative to diffusion-based baselines. These results present the first evidence, to our knowledge, that NFs are capable of high-quality autoregressive video generation, establishing them as a promising research direction for building world models. Code and generated samples are available at https://github.com/apple/ml-starflow.

Explain it Like I'm 14

What this paper is about

This paper introduces STARFlow‑V, a new kind of AI model that can make videos from things like text descriptions, a starting image, or another video. Unlike most top video makers today (which use “diffusion” models), STARFlow‑V is built on “normalizing flows,” a different approach that aims to be:

- end‑to‑end (trained with one clean objective),

- causal (generates frames in order, good for streaming), and

- reversible (the same model can both generate and “read” videos).

The authors show that normalizing flows—recently successful for images—can also work well for videos, which are more complex because they add time.

What questions the paper tries to answer

- Can normalizing flows (a different family from diffusion) make high‑quality videos, not just images?

- How can we keep videos consistent over time so they don’t “drift” or fall apart in long clips?

- Can we make generation causal (one frame after another) for streaming or interactive use?

- Can we speed up generation so it doesn’t take forever?

- Can one model handle text‑to‑video, image‑to‑video, and video‑to‑video without special add‑ons?

How it works (in everyday language)

Normalizing flows in a nutshell: a reversible recipe

Imagine a reversible machine: you put a video in, it turns it into simple “numbers” (like a tidy recipe). Because the machine is reversible, you can also go backwards: feed in simple numbers and get a video out. Training teaches the machine to map real videos to simple patterns it understands well, and then reverse the process to generate new videos.

- This mapping is “exact” in a math sense (the model can say how likely a video is), and it generates by running the same mapping in reverse rather than through a long, step‑by‑step denoising schedule like diffusion.
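To make the "reversible machine" concrete, here is a minimal sketch of the smallest possible normalizing flow: a single one-dimensional affine map. It is an illustration only, not the STARFlow‑V architecture; the function names and the parameters `mu` and `log_sigma` are assumptions chosen for this example.

```python
import math

def forward(x, mu, log_sigma):
    """Data -> latent: z = (x - mu) / sigma."""
    return (x - mu) * math.exp(-log_sigma)

def inverse(z, mu, log_sigma):
    """Latent -> data: the exact inverse of forward()."""
    return z * math.exp(log_sigma) + mu

def log_likelihood(x, mu, log_sigma):
    """Exact log-density of x via the change-of-variables formula."""
    z = forward(x, mu, log_sigma)
    # Log-density of z under a standard normal prior.
    log_prior = -0.5 * (z * z + math.log(2.0 * math.pi))
    # log |dz/dx| for the affine map is -log(sigma).
    log_det = -log_sigma
    return log_prior + log_det

# Round trip: encoding then decoding returns the input (up to rounding).
x = 1.7
z = forward(x, mu=0.5, log_sigma=0.3)
x_back = inverse(z, mu=0.5, log_sigma=0.3)
```

Training such a model means adjusting the parameters so that `log_likelihood` is high on real data. Real flows stack many such invertible layers whose parameters are predicted by neural networks.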

Latent space: working with sketches instead of full videos

Instead of working on full‑size frames, STARFlow‑V uses a 3D causal VAE (a smart compressor) to turn videos into a compact “sketch” called latent space. It’s like writing a detailed outline instead of the full story at once. This makes training and generation much faster.

Global–local design: director and camera crew

To keep things stable over time, the model splits its work into two parts:

- Global (the “director”): a deep, causal component that handles the story across frames (what happens next, in order).

- Local (the “camera crew”): light, per‑frame components that polish details inside each frame.

This setup reduces the “error snowball” that often happens when a model generates videos frame by frame.

Flow‑Score Matching: a tiny “clean‑up” helper that stays causal

Training adds a small amount of noise to make the model robust. That can leave slightly noisy outputs at generation time, so STARFlow‑V learns a tiny “cleaner” network to remove this noise:

- Instead of using the model’s own noisy, non‑causal signals to clean the video, it trains a small, causal denoiser to predict how to nudge frames back to clean results.

- With a one‑frame look‑ahead, it stays practical for streaming while avoiding sparkly artifacts.

Think of it like polishing each frame as soon as it’s produced, without peeking far into the future.
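The clean-up step can be pictured as a score-based nudge: take the noisy sample and move it by the noise variance times the score (the gradient of the log-density of noisy data). The sketch below uses a one-dimensional Gaussian toy where that score has a closed form. In STARFlow‑V the score signal comes from the flow and a small causal network is trained to predict it; the closed-form `gaussian_score` here is a stand-in assumption, and all names are hypothetical.

```python
def gaussian_score(x_noisy, mean, data_var, sigma):
    """Score of the noisy density N(mean, data_var + sigma^2) at x_noisy.

    Toy assumption: clean data is Gaussian, so the score is known exactly.
    """
    return -(x_noisy - mean) / (data_var + sigma ** 2)

def tweedie_denoise(x_noisy, sigma, score):
    """One-step denoising: noisy sample plus noise variance times the score."""
    return x_noisy + sigma ** 2 * score

# Clean data ~ N(2, 1), noise level sigma = 1, observed noisy value 4.
score = gaussian_score(4.0, mean=2.0, data_var=1.0, sigma=1.0)
x_hat = tweedie_denoise(4.0, sigma=1.0, score=score)
```

In this toy, `x_hat` lands halfway between the noisy observation and the data mean, which is the posterior mean of the clean value when data and noise have equal variance.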

Faster generation with block‑wise Jacobi: solve puzzles in parallel

Purely step‑by‑step generation can be slow. STARFlow‑V speeds this up using a trick called block‑wise Jacobi iteration:

- Break the video’s latent tokens into blocks (chunks).

- Update all tokens in a block together several times (in parallel) until they agree, then move to the next block.

- Warm‑start each new frame using the previous one, which helps it settle faster.

This keeps the overall process causal while making it much faster—about 15× faster than strict one‑by‑one decoding in their tests.
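The block-wise idea can be sketched on a toy autoregressive affine flow. The conditioners `mu` and `log_scale` below are hypothetical stand-ins for the causal Transformer, and the warm start from the previous frame is not modeled (each block starts from zeros). The point is only to show that repeated parallel sweeps inside a block reach the same answer as strict token-by-token inversion.

```python
import math

def mu(prefix):
    """Toy shift conditioner (hypothetical): half the mean of earlier tokens."""
    return 0.5 * sum(prefix) / len(prefix) if prefix else 0.0

def log_scale(prefix):
    """Toy log-scale conditioner (hypothetical): uses the latest earlier token."""
    return 0.1 * math.tanh(prefix[-1]) if prefix else 0.0

def forward(x):
    """Data -> latent; every position can be computed independently."""
    return [(x[t] - mu(x[:t])) * math.exp(-log_scale(x[:t])) for t in range(len(x))]

def invert_sequential(z):
    """Baseline inversion: one token at a time, each needing all earlier outputs."""
    x = []
    for z_t in z:
        x.append(z_t * math.exp(log_scale(x)) + mu(x))
    return x

def invert_blockwise_jacobi(z, block_size, tol=1e-12):
    """Invert block by block; inside a block, refresh all tokens from the previous iterate."""
    x = [0.0] * len(z)
    sweeps = 0
    for start in range(0, len(z), block_size):
        end = min(start + block_size, len(z))
        # Triangular dependencies: a block of length B is exact after at most B sweeps.
        for _ in range(end - start):
            new_block = [z[t] * math.exp(log_scale(x[:t])) + mu(x[:t])
                         for t in range(start, end)]
            residual = max(abs(a - b) for a, b in zip(new_block, x[start:end]))
            x[start:end] = new_block
            sweeps += 1
            if residual < tol:  # the block has stopped changing
                break
    return x, sweeps

z = [0.3, -1.2, 0.7, 0.1, -0.4, 0.9, 0.2, -0.8]
x_seq = invert_sequential(z)
x_jac, sweeps = invert_blockwise_jacobi(z, block_size=4)
```

Each sweep updates a whole block at once, so on parallel hardware a sweep costs roughly one model call rather than one call per token. The practical gain depends on how few sweeps are needed before the block settles, which is where a good warm start helps.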

One model, many tasks

Because the model is reversible:

- Text‑to‑Video: generate from a prompt.

- Image‑to‑Video: encode the first frame with the same model, then continue.

- Video‑to‑Video: encode the whole source clip, then edit or extend it (e.g., style, in/outpainting) without a separate encoder.
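A rough sketch of the image-to-video case on a toy causal flow: the observed part is encoded with the forward pass, fresh latents are sampled for the future, and decoding continues from the observed prefix. The conditioners, the scalar "frames", and the function names are all assumptions for illustration. The real model works on video latents and uses a KV cache rather than re-reading the prefix.

```python
import math
import random

def mu(prefix):
    """Toy shift conditioner (hypothetical)."""
    return 0.5 * prefix[-1] if prefix else 0.0

def log_scale(prefix):
    """Toy log-scale conditioner (hypothetical)."""
    return 0.2 * math.tanh(sum(prefix)) if prefix else 0.0

def encode(x):
    """Forward pass: each latent depends only on the current and earlier values."""
    return [(x[t] - mu(x[:t])) * math.exp(-log_scale(x[:t])) for t in range(len(x))]

def decode(z, observed=()):
    """Invert the flow; positions covered by `observed` are kept, the rest use z."""
    x = list(observed)
    for z_t in z[len(x):]:
        x.append(z_t * math.exp(log_scale(x)) + mu(x))
    return x

rng = random.Random(0)
observed = [0.2, -0.5]                               # stands in for the given first frame
z_prefix = encode(observed)                          # latents of the observed part
z_future = [rng.gauss(0.0, 1.0) for _ in range(3)]   # fresh noise for the continuation
video = decode(z_prefix + z_future, observed=observed)
```

Because the flow is causal, re-encoding the full result reproduces `z_prefix` for the first two positions: what comes later never changes how the observed part is encoded.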

What they found and why it matters

- Quality and consistency: STARFlow‑V produces videos with strong visual quality and smooth motion over time. It stays stable longer than several autoregressive diffusion baselines, which often blur or drift on long clips.

- Causal and streamable: The model naturally generates frames in order—useful for streaming and interactive settings (like games or robotics).

- Practical speedups: With the block‑wise Jacobi method and pipelined stages, it’s much faster than strict step‑by‑step decoding, while keeping quality high.

- Versatile: The same backbone handles text‑to‑video, image‑to‑video, and video‑to‑video without extra task‑specific parts.

- First strong flows‑for‑video result: It shows, for the first time at this level, that normalizing flows can compete in video generation, not just images.

Why this is important: Most top video models today use diffusion. Showing that normalizing flows can also work well opens a new path for building “world models” (systems that predict what will happen next), with benefits like exact likelihoods (confidence scores), reversible mappings, and cleaner, end‑to‑end training.

Limits and what’s next

- Still not real‑time: Even with speedups, generation is not yet fast enough for instant results on normal hardware.

- Data quality matters: Noisy or biased training data can limit performance. The authors didn’t yet see clean “scaling laws” because of this.

- Physics mistakes: Sometimes the model makes impossible scenes (like objects passing through walls). Better data and training should reduce this.

Next steps include making generation faster, distilling the model into smaller versions without losing much quality, and training on cleaner, more physics‑aware video collections.

Bottom line

STARFlow‑V shows that a reversible, end‑to‑end, causal model—built with normalizing flows—can generate high‑quality, temporally consistent videos and work across multiple tasks with the same backbone. It’s a promising alternative to diffusion‑based systems, especially for streaming and interactive applications where generating the future without peeking ahead really matters.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

Below is a concise list of what remains missing, uncertain, or unexplored in the paper, formulated to be actionable for future research.

- Real-time generation is not achieved or quantified: no end-to-end latency numbers (per-frame and per-second throughput) across hardware configurations; unclear how close the current pipeline is to interactive streaming targets at common resolutions (e.g., 720p/1080p, 24–60 fps).

- Convergence guarantees and quality–latency trade-offs for block-wise Jacobi iteration are not analyzed: no theory or empirical study relating block size, residual threshold, iteration count, and sample quality (including failure cases when convergence is incomplete).

- Effect of approximate inversion on sample distribution is unmeasured: when Jacobi iterations stop early, how far do samples deviate from exact flow inversion and how does this impact video quality, likelihood, and temporal consistency?

- Ambiguity in the denoiser’s operating space and interface: the paper alternates between latent-space and pixel-space denoising; a clear specification and ablation of where FSM is applied (latents vs pixels), and how outputs are fed back into the flow/decoder, is missing.

- Train–test mismatch for the denoiser is unaddressed: FSM trains on global scores (using future frames) but infers with one-frame look-ahead; quantify how this mismatch affects quality, drift, and causality, and explore strictly causal or limited-look-ahead training targets.

- Number of denoising steps is not explored: FSM appears to use a single update; assess whether multi-step or learned iterative denoising improves robustness and long-horizon stability.

- Impact of the noise augmentation parameter σ is not ablated: no study of how σ affects training stability, final noise level in samples, denoiser performance, and overall perceptual quality.

- Likelihood estimation is claimed but not evaluated: no reported log-likelihoods (per-frame or sequence), calibration metrics, or downstream uses (e.g., OOD detection, anomaly scoring), especially given the post-hoc denoising step that breaks invertibility.

- Long-horizon stability lacks quantitative evaluation: beyond 30-second demos, there are no metrics (e.g., identity preservation, color drift, motion consistency) across minutes-long sequences, nor controlled analyses of sliding-window overlap size and its boundary artifacts.

- Streaming behavior is not rigorously validated: no measurements of per-frame latency, buffer sizes, KV-cache footprints, or causal lag introduced by the one-frame look-ahead denoiser under real streaming constraints.

- Universality under global–local constraints is asserted but not tested: ablate the capacity split between deep (global) and shallow (local) flows to validate expressivity, inter-frame reasoning, and within-frame detail retention under different allocations.

- Sensitivity to VAE choice and compression rates is unexplored: no ablation over 3D causal VAE architectures, spatial/temporal downsampling factors, or decoder receptive fields, despite decoder fine-tuning being ineffective for temporal consistency.

- The pipeline’s final output is not invertible, weakening NF guarantees: after denoising, samples leave the NF manifold; quantify the mismatch between the flow’s learned qσ and the denoised p, and investigate end-to-end training that models p directly without a post-hoc denoiser.

- Conditioning mechanisms are under-specified: details of the text encoder, guidance formulation (e.g., weights for μ/σ guidance), and integration of multi-modal signals (audio, pose, camera) are missing; evaluate robustness to prompt length/quality and cross-condition generalization.

- Evaluation breadth is limited: comparisons focus on autoregressive diffusion baselines at 480p/16 fps; include SOTA non-AR diffusion, higher resolutions/fps, and user studies to benchmark perceptual quality, semantics, and temporal coherence at typical deployment settings.

- Spatial reasoning and aesthetic weaknesses are not addressed: VBench scores lag on Spatial and Aesthetic dimensions; analyze failure modes and propose architectural/data interventions targeting these categories.

- Exposure bias claims are not quantified: while flows are argued to mitigate compounding errors, there is no empirical measure (e.g., error accumulation curves, token-level perplexity in latent space) contrasting NF vs AR diffusion across rollout lengths.

- Data scaling and curation are under-explored: the paper reports no clean scaling law; conduct controlled experiments varying video sizes/qualities, caption sources (raw vs synthetic, 1:9 ratio), motion complexity, and physical plausibility to identify scaling regimes.

- Video-to-video/editing evaluation lacks metrics: beyond qualitative demos, measure edit faithfulness, temporal consistency in preserved regions, and identity preservation under common editing tasks, and compare to specialized V2V baselines.

- Memory footprint and system efficiency are not reported: no measurements of GPU memory, KV-cache sizes, and pipeline parallelism utilization; provide guidance for deployment on commodity hardware.

- Robustness to distribution shifts is unknown: evaluate performance under extreme motion, occlusions, camera shake, lighting changes, and domain shifts (e.g., robotics, simulation) to substantiate “world model” claims.

- Safety and non-physical generation are acknowledged but unsolved: develop physics-aware losses, constraints, or datasets; quantify the rate and types of non-physical artifacts and assess interventions that reduce them.

- Reproducibility gaps remain: many implementation details are deferred to the appendix/repo (e.g., exact training schedule, σ, denoiser architecture/latency, guidance parameters); supply comprehensive recipes and hyperparameters to enable faithful replication.

Glossary

- Affine transform: A linear scaling and shift applied to variables, parameterized by a network to enable invertible flow layers. "where each block applies an affine transform whose parameters are predicted by a causal Transformer under a (self-exclusive) causal mask:"

- Autoregressive diffusion models: Diffusion models that generate sequences by modeling each conditional step given previous outputs, aligning with the chain rule. "Autoregressive (AR) diffusion models~\citep{chen2024diffusion,song2025history,yin2025slow}---a line of work that combines chain-rule factorization with diffusion---aim to alleviate prior limitations"

- Autoregressive flows (AFs): Normalizing flows whose invertible transformations are parameterized autoregressively so each variable depends only on earlier ones. "Both methods instantiate autoregressive flows (AFs)---NFs whose invertible transformations are parameterized autoregressively---"

- Bijection: A one-to-one, invertible mapping between data and latent variables, enabling exact likelihood and sampling in flows. "an NF learns a bijection $f_\theta:\mathbb{R}^D\!\to\!\mathbb{R}^D$ [...] $\mathbf{z}=f_\theta(\mathbf{x})$."

- Causal denoiser: A denoising module constrained to use only past (and minimal look-ahead) context to maintain causality in generation. "we propose flow-score matching, which equips the model with a light-weight causal denoiser to improve the video generation consistency in an autoregressive fashion."

- Causal mask (self-exclusive): An attention mask that prevents positions from attending to future tokens and, in self-exclusive form, to themselves. "under a (self-exclusive) causal mask:"

- Chain-rule factorization: Decomposing a joint distribution as a product of conditionals, the basis of autoregressive modeling. "combines chain-rule factorization with diffusion"

- Change-of-variables formula: The formula for transforming densities under invertible mappings; central to NF likelihood training. "By the change-of-variables formula,"

- Continuity equation: A differential equation ensuring probability conservation; used to connect noise scale derivatives to the score. "the continuity equation gives $\partial_{\sigma} \tilde{\mathbf{x}} = -\sigma \nabla_{\tilde{\mathbf{x}}}\log q_\sigma(\tilde{\mathbf{x}})$."

- Deep–shallow decomposition: Splitting a flow into a deep global component for semantics and a shallow local component for reshaping. "we use a deep–shallow decomposition"

- Diffusion-based approaches: Generative models trained to denoise progressively from noise, dominant in image/video synthesis. "diffusion-based approaches~\citep{ho2020denoising,rombach2022high,peebles2023scalable,lipman2023flow,esser2024scaling} have emerged as the dominant backbone for text- and image-conditioned video synthesis,"

- Euler step: A first-order numerical update used to integrate differential equations, here for score-based denoising. "a single Euler step yields the Tweedie estimator:"

- Exposure bias: Train–test mismatch in autoregressive models caused by conditioning on ground truth during training but on self-generated outputs at inference. "exposure bias: during training, models condition on ground-truth contexts, whereas at inference they must rely on their own (imperfect) predictions."

- Fixed-point system: An equation of the form x = F(x) solved iteratively; used to parallelize inversion of autoregressive flow layers. "recasting inversion as solving a nonlinear fixed-point system with parallel solvers such as Jacobi iteration"

- Flow-score matching (FSM): Training a small denoiser to predict the model’s score (gradient of log-density) produced by the flow for stable, causal denoising. "we propose flow-score matching, which learns a lightweight causal denoiser to enhance temporal consistency in video scenarios."

- GAN objective: Adversarial training loss used for generative modeling via a discriminator–generator game. "fine-tuning the VAE decoder to denoise noisy latents using a GAN objective~\citep{rombach2022high}."

- Gaussian Next-Token Prediction: Framing next-step prediction as estimating Gaussian parameters for the conditional distribution in latent space. "acts as Gaussian Next-Token Prediction (cf.\ the affine form in \Cref{eq.affine_f}) in latent space,"

- Global–local architecture: A design where global latents handle long-range causal dependencies while local blocks refine within-frame structure. "operates in the spatiotemporal latent space with a global--local architecture"

- Guidance-compatible: Compatible with guidance mechanisms that modify sampling via adjusted parameters (e.g., guided μ and σ). "The procedure is also guidance-compatible, as proposed in ~\citep{gu2025starflow},"

- Hadamard product: Elementwise multiplication of vectors/tensors, used within affine flow updates. "$\odot$ denotes the Hadamard product."

- Invertible transformations: Functions with exact inverses used in flows to enable exact likelihoods and reversible sampling. "are likelihood-based generative models built from invertible transformations."

- Jacobi iteration: A parallel iterative method that updates all variables using the previous iterate; used to accelerate AR inversion. "Jacobi iteration~\citep{porsching1969jacobi,kelley1995iterative},"

- Jacobian term: The determinant of the Jacobian matrix that captures volume change under the transformation in the flow’s likelihood. "and the Jacobian term accounts for the local volume change induced by $f_\theta$, preventing collapse."

- KV cache: Cached key/value tensors that store past attention states for efficient autoregressive decoding. "we encode the observed frame via the flow forward to initialize the KV cache;"

- Latent space: A compressed representation space in which the model operates to simplify learning and sampling. "operates in the spatiotemporal latent space with a global--local architecture"

- Likelihood-based generative models: Models trained by maximizing exact data likelihood, providing native log-likelihood evaluation. "Normalizing flows (NFs) are end-to-end likelihood-based generative models for continuous data,"

- Maximum-likelihood objective: The training objective that maximizes the log-likelihood of data under the model. "NFs are trained end-to-end via a tractable maximum-likelihood objective derived from the change-of-variables formula:"

- Pipeline parallelism: A scheduling technique that overlaps stages of computation to reduce latency. "a pipelined schedule (analogous to pipeline parallelism~\citep{huang2019gpipe}):"

- Raster order: A tokenization/decoding order within an image frame (e.g., left-to-right, top-to-bottom). "raster order within each frame"

- Score-based denoising: Denoising by stepping in the direction of the score, the gradient of the log-density. "Score-based Denoising: Instead of decoder fine-tuning, TARFlow~\citep{zhai2024normalizing} proposes to denoise using the learned flow itself via score-based updates."

- Sliding-window schedule: Generating long sequences by overlapping windows to extend beyond the training horizon. "via a sliding-window (chunk-to-chunk) schedule in the deep block."

- Triangular system: A dependency structure where each variable depends only on previous ones, enabling efficient iterative solvers. "This induces a triangular system that admits convergence under nonlinear Jacobi iteration~\citep{saad2003iterative}:"

- Tweedie estimator: A denoising estimator adding variance times the score to the noisy sample. "a single Euler step yields the Tweedie estimator:"

- Universal approximation guarantee: A property that the model class can approximate any target density under certain conditions. "preserves the universal approximation guarantee of STARFlow~\citep{gu2025starflow}:"

- Variational Autoencoder (VAE): A probabilistic autoencoder that learns latent representations via variational inference. "using a pretrained 3D causal VAE~\citep{wan2025wan}."

- World models: Generative models that simulate environments or dynamics for planning, simulation, or embodied AI. "establishing them as a promising research direction for building world models."
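Three of the glossary entries (continuity equation, Euler step, Tweedie estimator) fit together in one short derivation. The following is a sketch under assumed notation: $\tilde{\mathbf{x}}$ is the noisy sample at noise level $\sigma$, $q_\sigma$ is the density of noisy data, and $\hat{\mathbf{x}}$ is the denoised estimate. The symbols are reconstructed from the quoted fragments and may differ from the paper's exact notation.

```latex
\begin{aligned}
\partial_{\sigma}\tilde{\mathbf{x}}
  &= -\sigma\,\nabla_{\tilde{\mathbf{x}}}\log q_\sigma(\tilde{\mathbf{x}})
  && \text{(continuity equation)}\\
\hat{\mathbf{x}}
  &\approx \tilde{\mathbf{x}} + (0-\sigma)\,\partial_{\sigma}\tilde{\mathbf{x}}
  && \text{(one Euler step from noise level } \sigma \text{ to } 0\text{)}\\
  &= \tilde{\mathbf{x}} + \sigma^{2}\,\nabla_{\tilde{\mathbf{x}}}\log q_\sigma(\tilde{\mathbf{x}})
  && \text{(Tweedie estimator)}
\end{aligned}
```

The last line matches the glossary description of the Tweedie estimator: the noisy sample plus the noise variance times the score.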

Practical Applications

Immediate Applications

Below are practical, deployable use cases that can be implemented now, leveraging STARFlow‑V’s end-to-end normalizing flows, causal generation, invertibility, and the proposed flow-score matching denoiser and block-wise Jacobi inference.

- Creative media production (sector: media/entertainment)

- Use case: Offline text-to-video, image-to-video, and instruction-based video-to-video generation for ads, trailers, social media, and previsualization.

- Workflow: Integrate an NF-based generator as a plugin in non-linear editors (e.g., Adobe Premiere, Final Cut) for scene generation, in/outpainting, identity-preserving edits (seed from a reference frame), and long-horizon rollouts for previsualization.

- Tools/products: “Causal Generative Video” NLE plugin; batch generator service using the global–local architecture and FSM denoiser; identity-locked animation from a single frame.

- Assumptions/dependencies: High-end GPU(s); pretrained 3D causal VAE; large-scale captioned datasets; current inference latency is minutes per 5s clip (not real-time); quality not yet top-tier relative to strongest diffusion models.

- Marketing and A/B creative testing (sector: marketing/advertising)

- Use case: Automatically produce multiple temporally consistent variants of a concept video (colors, objects, camera moves) while preserving brand identity.

- Workflow: Image-to-video seeding with invertible encoding of the key frame; controlled edits via video-to-video with instruction prompts; likelihood-based quality checks to flag drift.

- Tools/products: Variant generator with “identity-lock” and drift monitor; batch scheduler with block-wise Jacobi speedups.

- Assumptions/dependencies: Reliable captioning and prompt engineering; trained on domain-relevant data; legal clearance for source materials.

- Post-production “freeze-first” editing (sector: media/software)

- Use case: Timeline-safe revisions where earlier frames remain unchanged due to causal generation.

- Workflow: Re-run generation from a changed cut point; only subsequent frames are affected; use FSM denoiser to refine outputs without breaking causality.

- Tools/products: Timeline-aware causal generator; edit-on-cursor, forward-only recompute.

- Assumptions/dependencies: Editor integration; sufficient compute; denoiser trained with one-frame look-ahead.

- Synthetic data generation for computer vision (sector: software/AI)

- Use case: Create temporally stable sequences to augment training of trackers, re‑identification, and segmentation models.

- Workflow: Generate long-horizon clips with consistent identities and camera motion; use log-likelihood as an out-of-distribution (OOD) filter; deploy FSM denoiser for motion-heavy scenes.

- Tools/products: Data factory with “likelihood meter” to select usable clips; identity consistency checks by round-trip encoding.

- Assumptions/dependencies: Physical plausibility is limited; careful domain selection is needed; labeling of generated data should be explicit.

- Content authenticity scoring and moderation (sector: policy/platform trust & safety)

- Use case: Use exact likelihood estimates to flag unlikely or “synthetic-looking” frames during generation or ingestion pipelines.

- Workflow: Monitor per-frame log-likelihood and FSM residuals for anomaly detection; route low-likelihood segments to human review.

- Tools/products: Likelihood-based authenticity score; drift alarms during long-horizon generation; moderation dashboard.

- Assumptions/dependencies: Scores depend on training distribution; must avoid conflating “novel but real” content with synthetic; complementary signals (metadata, provenance) recommended.

- Academic research and benchmarking (sector: academia)

- Use case: Study exposure bias, long-horizon robustness, and likelihood-based training in video models; compare diffusion vs NF under controlled settings.

- Workflow: Reproduce STARFlow‑V; evaluate with VBench; ablate denoiser (FSM vs alternatives), block-wise Jacobi parameters, causal vs non-causal constraints.

- Tools/products: Open-source training scripts; evaluation harness; curriculum training from single-frame to multi-frame.

- Assumptions/dependencies: Access to large datasets and compute; pretraining the causal 3D VAE; careful reporting on biases and physical realism.

- Game engine offline assets (sector: gaming/software)

- Use case: Generate cutscenes and background loops offline with causal consistency and identity control.

- Workflow: Unity/Unreal integration for batch asset creation; image-to-video seeding from concept art; pipeline parallel decoding for throughput.

- Tools/products: Generative asset SDK; scene variant generator with KV cache seeding.

- Assumptions/dependencies: Offline workflow only (current latency); design prompts and constraints per IP.

- Education content creation (sector: education)

- Use case: Produce explainer videos, animated diagrams, and scenario demonstrations from text.

- Workflow: T2V templates with domain-specific prompt libraries; V2V edits to adjust pacing and visuals; likelihood screens to filter artifacts.

- Tools/products: Educator-facing generator with prompt presets and drift guardrails.

- Assumptions/dependencies: Domain-specific accuracy still limited; educator review required.
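Several of the workflows above rely on a likelihood screen that routes low-likelihood frames to review. One simple way to picture it is a threshold calibrated on reference clips. The rule below (mean minus a few standard deviations), the function names, and all numbers are hypothetical placeholders, not values or methods from the paper.

```python
import statistics

def calibrate_threshold(reference_log_likelihoods, num_std=3.0):
    """Pick a cutoff a few standard deviations below the reference mean (assumed rule)."""
    mean = statistics.mean(reference_log_likelihoods)
    std = statistics.pstdev(reference_log_likelihoods)
    return mean - num_std * std

def flag_low_likelihood(frame_log_likelihoods, threshold):
    """Return indices of frames whose log-likelihood falls below the cutoff."""
    return [i for i, ll in enumerate(frame_log_likelihoods) if ll < threshold]

# Placeholder per-frame log-likelihoods; a real pipeline would obtain them from the flow.
reference = [-10.0, -11.0, -9.0, -10.5, -9.5]
threshold = calibrate_threshold(reference)
flagged = flag_low_likelihood([-9.8, -10.4, -15.0, -10.1, -12.5], threshold)
```

As the assumptions in the list note, such scores depend on the training distribution, so a flag should trigger human review rather than an automatic decision.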

Long-Term Applications

These applications require further research, scaling, optimization, or standardization—particularly reducing latency, improving physical plausibility, and expanding data coverage.

- Real-time streaming video generation and overlays (sectors: media, live events, social platforms)

- Use case: Interactive, causal generation for live broadcasts or streaming overlays, where future frames cannot influence past frames.

- Workflow: Pipeline-parallel deep block with hardware acceleration; low-latency FSM; chunked KV-cache warm starts; on-device inference.

- Tools/products: Real-time generative overlay engine; low-latency Jacobi schedulers; lightweight student models via distillation/pruning.

- Assumptions/dependencies: Significant latency reduction; optimized kernels; memory-efficient architectures; high-bandwidth IO.

- World models for games and embodied AI (sectors: gaming, robotics/embodied AI)

- Use case: Predictive, causal video rollouts for simulation, planning, and agent learning.

- Workflow: Use invertible flows for rollouts; employ likelihood to quantify uncertainty; integrate with control loops; generate long-horizon, physically grounded sequences.

- Tools/products: Causal video world-model API; uncertainty-aware planners; simulation curriculum built from long-motion datasets.

- Assumptions/dependencies: Strong physical plausibility and data coverage; safety constraints; robust evaluation under control tasks.

- Robotics perception and planning (sector: robotics)

- Use case: Video-based predictive modeling for anticipatory perception, active vision, and simulation-to-real training.

- Workflow: Autoregressive causal predictions; likelihood-based anomaly detection for sensor feeds; round‑trip latent editing to simulate edge cases.

- Tools/products: Robot stack integration (ROS plugins) with likelihood monitors; fast coarse predictors with denoiser refinement.

- Assumptions/dependencies: Real-time performance; sensor-domain alignment; physically realistic dynamics.

- Learned video compression and codecs (sector: media/telecom)

- Use case: Invertible, likelihood-based codecs enabling efficient compression and streaming with causal decoding.

- Workflow: Train NF-based codecs in latent space; block-wise Jacobi for parallelizable decode; causal framing for low-latency playback.

- Tools/products: Compress‑and‑generate codec; streaming client with causal NF decoder.

- Assumptions/dependencies: Standardization and interoperability; robust rate–distortion trade-offs; hardware acceleration.

- Provenance, watermarking, and detection standards (sector: policy/standards)

- Use case: Pair likelihood-based detection with cryptographic provenance to set practical authenticity standards for video.

- Workflow: Joint use of log-likelihood scores, FSM residuals, and embedded watermarks; establish thresholds and audit trails for platforms.

- Tools/products: Authenticity scoring toolkit; standards proposals combining NF likelihoods and provenance metadata.

- Assumptions/dependencies: Policy buy-in; balanced thresholds to avoid false positives; privacy and legal compliance.

- On-device creative tools (sector: consumer software)

- Use case: Mobile/desktop generative video editors that run locally.

- Workflow: Distill large NF models to compact students; prune and quantize; engineer streaming-friendly schedulers.

- Tools/products: Lightweight causal video editor; real-time style transfer via NF backbones.

- Assumptions/dependencies: Effective distillation without quality loss; energy and thermal constraints; UI/UX for prompt control.

- Educational simulators and training (sector: healthcare, education)

- Use case: Realistic scenario simulations (e.g., surgical steps, lab experiments) generated from text, with causal consistency.

- Workflow: Domain-specific fine-tuning on curated, physically grounded datasets; causal rollout with likelihood-based validation.

- Tools/products: Simulator generator for curricula; scenario “grader” using likelihood and drift metrics.

- Assumptions/dependencies: High-fidelity, domain-curated data; expert validation; safety constraints for sensitive domains.

- Data curation and active learning at scale (sector: academia/industry AI)

- Use case: Use likelihood and drift metrics to select, prioritize, or discard training clips; target large-motion, physically grounded content.

- Workflow: Build curation pipelines that score candidate videos by NF likelihood; actively sample challenging sequences; track scaling laws.

- Tools/products: Likelihood-driven dataset curation service; active sampling dashboard.

- Assumptions/dependencies: Diverse, licensable datasets; compute budgets; careful handling of distribution shift.

Cross-cutting assumptions and dependencies

- Compute and latency: Current models require substantial GPU resources; inference is not real-time yet. Achieving live/interactive uses depends on architectural and systems optimizations, distillation, and hardware acceleration.

- Data quality and coverage: Performance and physical plausibility depend on large, high-quality, and domain-appropriate datasets; scaling laws are not yet clean under current curation.

- Safety and legal compliance: Synthetic media risks (deepfakes) require guardrails, provenance, and moderation; ensure copyright/licensing compliance.

- Integration prerequisites: Text encoders, caption quality, prompt engineering, and editor/engine integration are crucial for reliable deployments.

- Model components: Pretrained 3D causal VAE and the FSM denoiser are integral to quality and temporal consistency; causal constraints include one-frame look-ahead.
