- The paper introduces ActiveUMI, a framework that uses VR-based human demonstrations to teach robots manipulation with active perception, achieving an average 70% success rate on in-distribution tasks.

- It employs a novel setup combining VR tracking, custom controllers, and portable computation to accurately map human motions to robot kinematics and drive visuomotor learning.

- The approach outperforms conventional methods by offering efficient data collection, precise calibration, and robust generalization, retaining a 56% success rate in novel scenarios.

ActiveUMI: Robotic Manipulation with Active Perception from Robot-Free Human Demonstrations

Introduction

The paper presents ActiveUMI, a data collection framework that improves robotic manipulation through active perception by learning from human demonstrations collected without a robot in the loop. ActiveUMI uses a VR setup to capture human movements, align them with robot kinematics, and supply training data for visuomotor policies. The method bridges the gap between human demonstrations in everyday environments and the embodiment requirements of robots, with a focus on active perception as the route to higher task success rates.

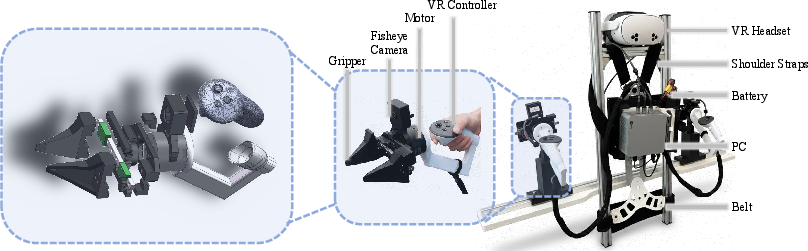

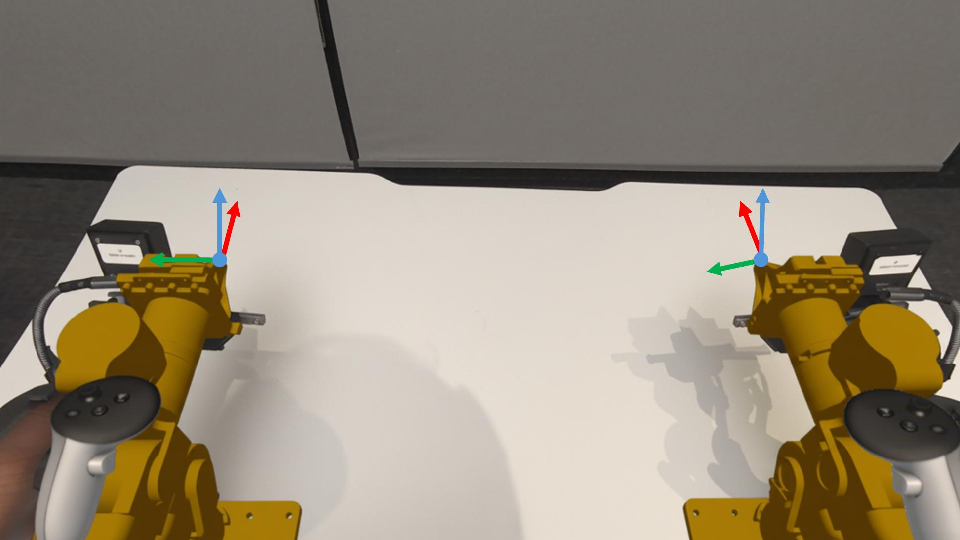

System Architecture and Setup

ActiveUMI uses a VR headset, custom controllers, and a portable compute setup to map human actions to robot movements.

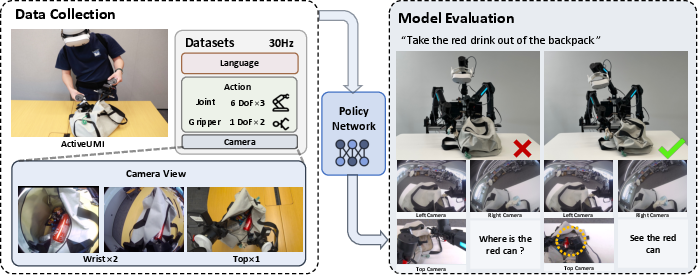
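
As a rough illustration of what mapping tracked human motion to robot kinematics involves, the sketch below chains homogeneous transforms to express a tracked controller pose as an end-effector pose in the robot base frame. It is a minimal sketch under stated assumptions, not the paper's implementation: the function names, the frame names (`T_robot_from_vr`, `T_vr_controller`, `T_controller_tcp`), and all numeric values are hypothetical, and rotations are restricted to yaw to keep the example short.

```python
import math

def matmul4(a, b):
    """Multiply two 4x4 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def make_pose(yaw_rad, translation):
    """Build a 4x4 homogeneous transform from a yaw angle and a translation.

    Assumption: rotation about the z axis only, purely for brevity.
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    x, y, z = translation
    return [[c, -s, 0.0, x],
            [s,  c, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def controller_to_robot(T_robot_from_vr, T_vr_controller, T_controller_tcp):
    """Express the tool center point (TCP) pose in the robot base frame.

    T_robot_from_vr:   hypothetical calibration from VR world to robot base
    T_vr_controller:   tracked controller pose in the VR world frame
    T_controller_tcp:  hypothetical fixed offset from controller to gripper tip
    """
    return matmul4(matmul4(T_robot_from_vr, T_vr_controller), T_controller_tcp)

# Illustrative values only (not taken from the paper).
calibration = make_pose(math.pi / 2, (1.0, 0.0, 0.0))
controller = make_pose(0.0, (0.1, 0.2, 0.3))
tcp_offset = make_pose(0.0, (0.0, 0.0, 0.05))

T_robot_tcp = controller_to_robot(calibration, controller, tcp_offset)
position = [row[3] for row in T_robot_tcp[:3]]
print([round(v, 3) for v in position])
```

Keeping the calibration and the controller-to-gripper offset as separate transforms means each can be re-estimated independently, for example when the tracking origin is reset.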

Data Collection Process

The data collection process is designed to capture diverse human demonstrations that remain aligned with robotic capabilities.

Experimental Evaluation

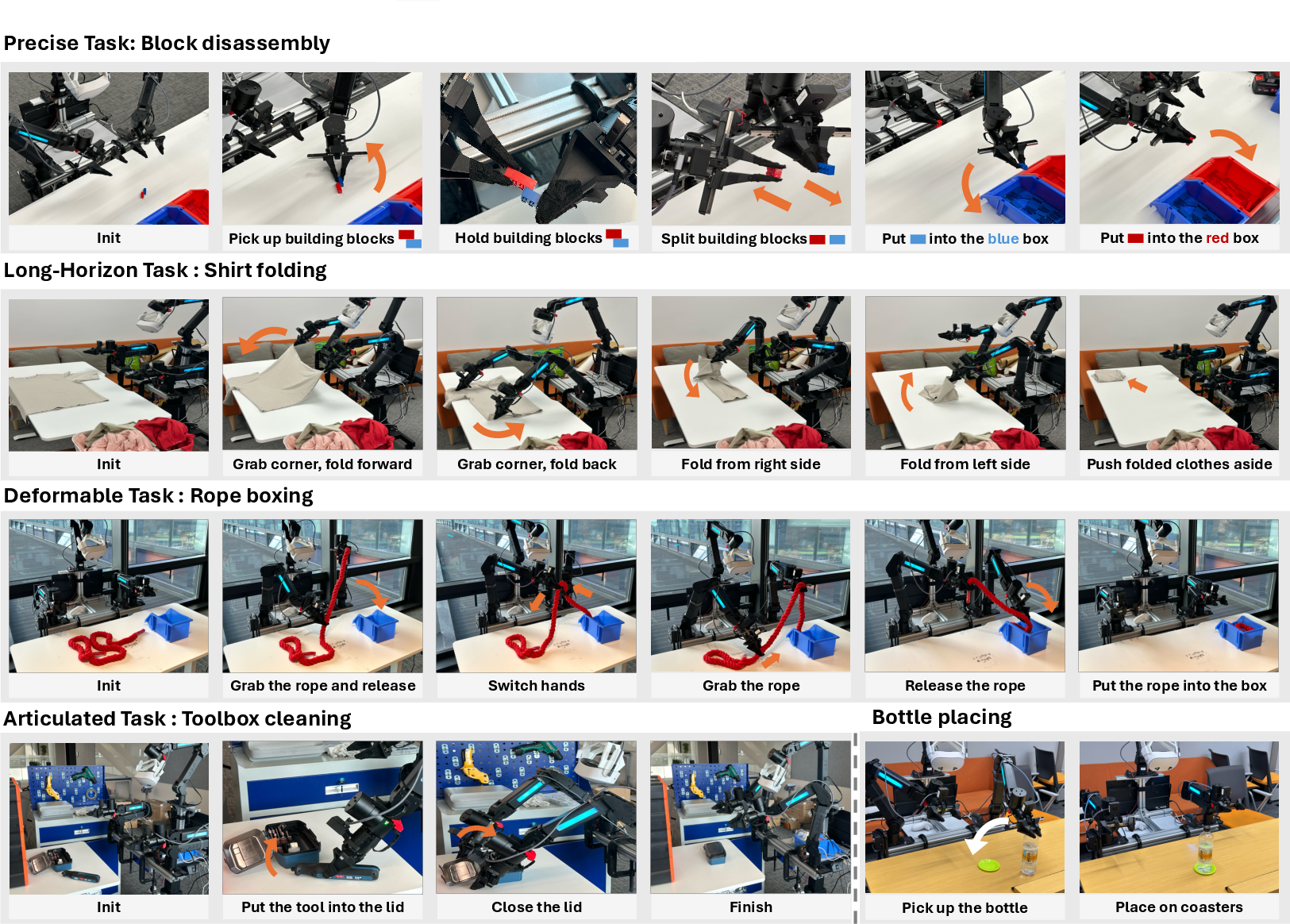

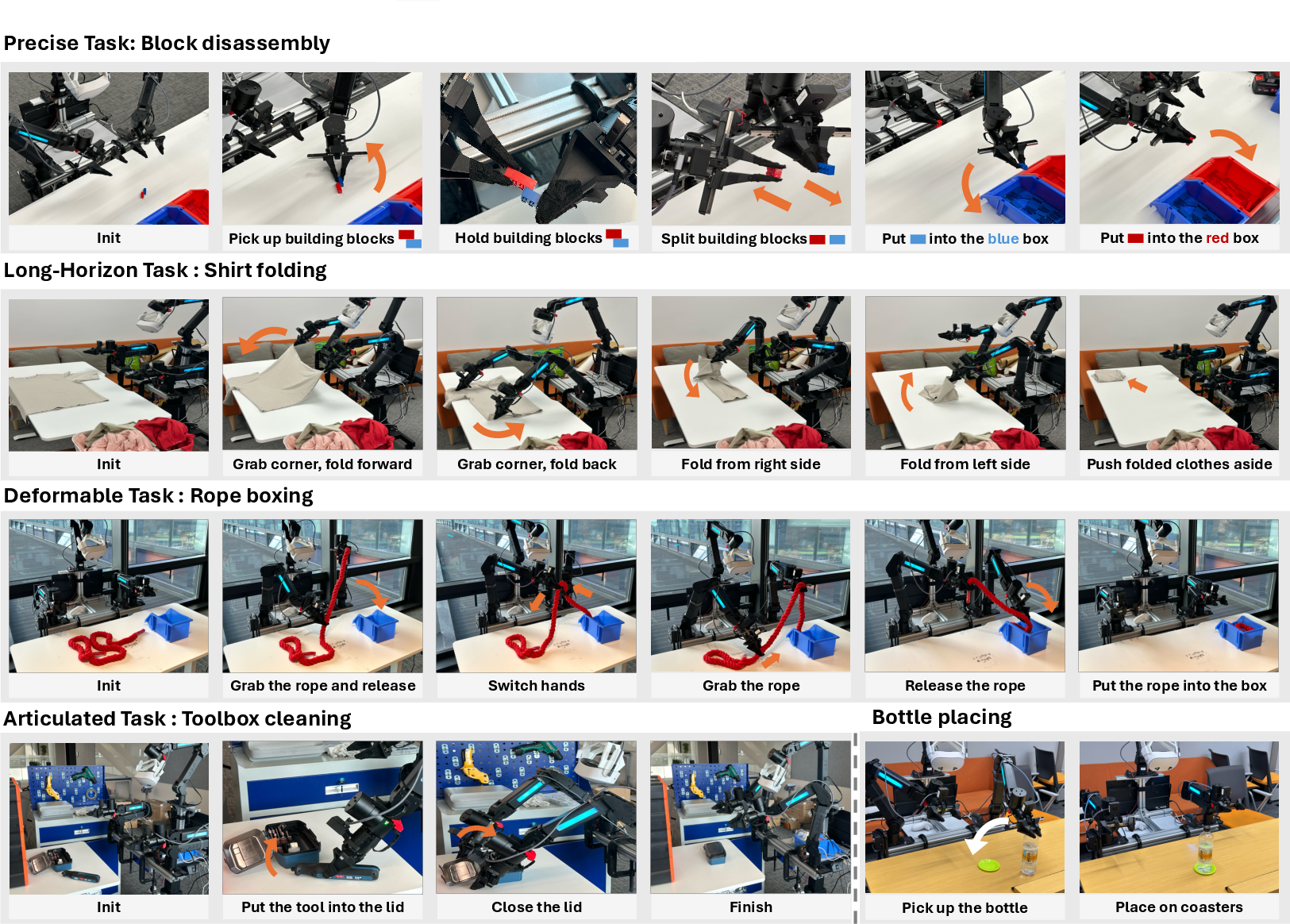

ActiveUMI's effectiveness was validated through experiments on six challenging bimanual tasks, testing both in-distribution performance and generalization.
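
For readers reproducing this kind of evaluation, a headline success rate is typically an aggregate over per-task trial outcomes. The sketch below shows one way to compute it, assuming an unweighted mean of per-task rates (the summary does not state whether the paper averages per task or pools all trials). The function name, task names, and trial outcomes are placeholders, not results from the paper.

```python
def success_rates(trials):
    """Return per-task success rates and their unweighted mean.

    trials: dict mapping a task name to a list of booleans, one per rollout.
    Assumption: every task has at least one recorded rollout.
    """
    per_task = {task: sum(outcomes) / len(outcomes)
                for task, outcomes in trials.items()}
    average = sum(per_task.values()) / len(per_task)
    return per_task, average

# Placeholder outcomes for illustration only.
example_trials = {
    "task_a": [True, True, False, True],
    "task_b": [True, False],
}

per_task, average = success_rates(example_trials)
print(per_task, average)
```

Averaging per task first keeps a task with many rollouts from dominating the headline number; pooling all trials would weight tasks by rollout count instead.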

Comparison with Conventional Approaches

An in-depth comparison was made between ActiveUMI and other prevalent methods, such as static camera setups and wrist-camera-based data collection. The results highlighted:

- Efficiency: ActiveUMI's data collection process is faster than teleoperation and yields higher-quality data. On benchmark tasks such as rope boxing and shirt folding, ActiveUMI demonstrated substantial throughput advantages.

- Accuracy: The use of VR tracking inherently reduces error, offering a precise data collection modality that aligns well with robotic control requirements.

Figure 4: Data Collection Comparison. Compares data collection efficiency among ActiveUMI, bare-hand demonstration, and teleoperation, highlighting ActiveUMI's efficiency and accuracy benefits.

Implications and Future Work

The research underscores the significance of active perception in robotic manipulation and argues for further integration of human-like visual strategies into robot learning systems. Because ActiveUMI is portable and cost-effective, it offers a scalable way to collect large amounts of high-quality data in real-world settings. Future work may explore augmenting the framework with additional sensory modalities and extending it to more complex learning-driven robotic tasks.

Conclusion

ActiveUMI addresses the limitations of conventional data collection in robotics by emphasizing active perception. The implementation and evaluation of the framework show its potential to enhance robot autonomy and adaptability, laying a foundation for robust, generalizable manipulation policies that do not depend on controlled environments.