- The paper introduces LC-FT, a framework that uses concise Chain-of-Thought sketches to build a latent codebook that guides efficient reasoning in LLMs.

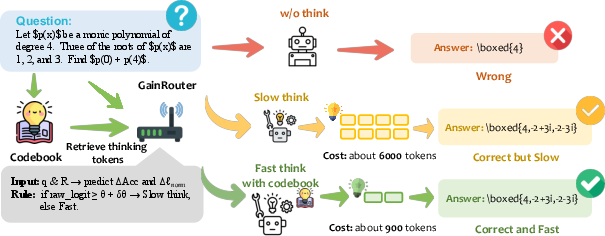

- A lightweight GainRouter decides, based on input complexity, whether to use fast codebook-guided inference or slower explicit reasoning.

- Experiments on mathematical and programming benchmarks show substantially fewer generated tokens at accuracy competitive with standard CoT.

Introduction

The paper "Fast Thinking for LLMs" (2509.23633) introduces a framework for reasoning-oriented LLMs that uses concise Chain-of-Thought (CoT) sketches during training to form a latent codebook. At inference, the codebook provides strategy-level guidance through continuous thinking vectors, so the model needs to generate far fewer explicit reasoning tokens. To further improve efficiency, the GainRouter mechanism switches between fast codebook-guided inference and slow explicit reasoning. Together, these components address the latency and token cost of conventional CoT methods while keeping accuracy competitive across reasoning benchmarks.

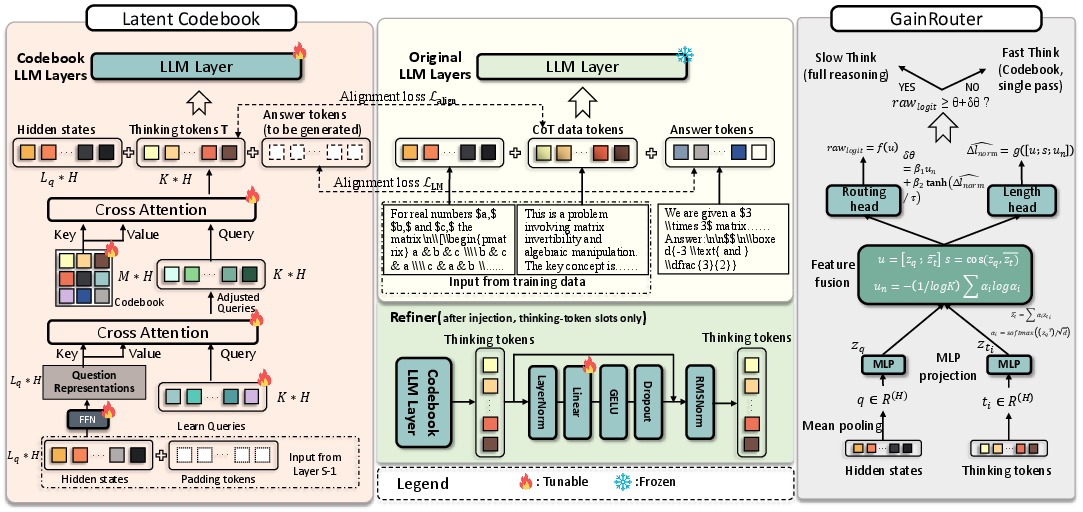

Figure 1: Overview of our framework. Latent Codebook for Fast Thinking (LC-FT) provides hint tokens for efficient one-pass reasoning, while GainRouter decides between fast and slow CoT modes to balance accuracy and token cost.

Framework Overview

Latent Codebooks for Fast Thinking (LC-FT)

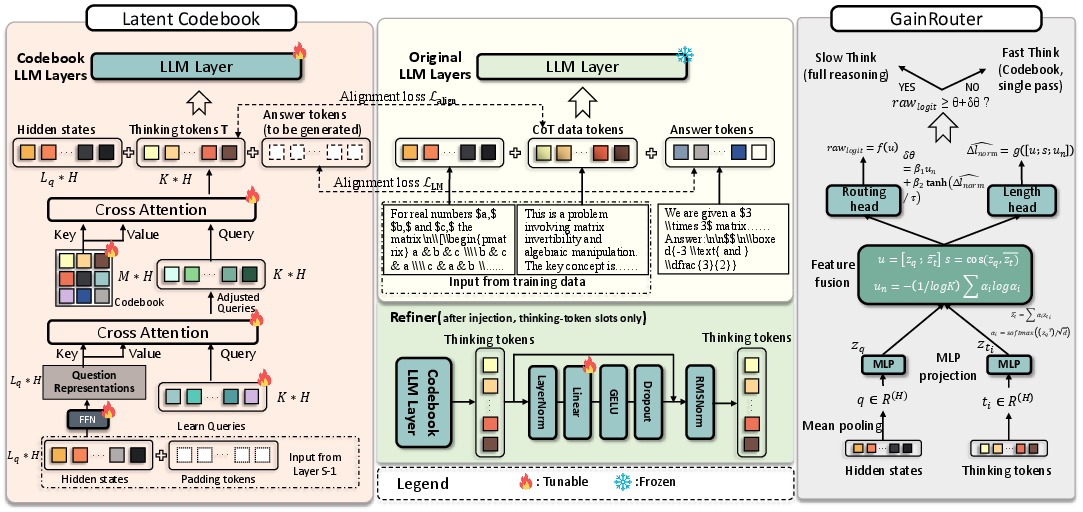

LC-FT uses concise CoT sketches to train a codebook whose entries act as discrete strategy priors. At inference, the model retrieves strategy-level hints from this codebook, which lets it reason in a single pass without generating explicit reasoning tokens. Concretely, learnable query vectors attend over the codebook's prototype entries to form continuous thinking tokens. These tokens are injected at a chosen transformer layer and influence all subsequent computation.

Figure 2: Overview of the latent codebook mechanism. Learnable queries Q attend over the codebook C to produce thinking tokens T, which are refined and injected at layer L to form Z(L).
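The mechanism in Figure 2 can be sketched as scaled dot-product attention of learnable queries over codebook entries. The following is a minimal pure-Python sketch under stated assumptions, not the authors' implementation: the refiner is stood in for by per-token layer normalization, and injection is assumed to prepend the thinking tokens to the layer-L hidden states (the paper may combine them differently). The function names `codebook_attention`, `refine`, and `inject`, and all sizes, are hypothetical.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def codebook_attention(queries, codebook):
    """Each learnable query attends over all codebook entries (Q over C),
    yielding one continuous thinking token per query (T)."""
    d = len(codebook[0])
    thinking = []
    for q in queries:
        scores = [sum(qi * ci for qi, ci in zip(q, c)) / math.sqrt(d) for c in codebook]
        weights = softmax(scores)
        # Thinking token = attention-weighted mixture of codebook entries.
        thinking.append([sum(w * c[j] for w, c in zip(weights, codebook)) for j in range(d)])
    return thinking


def refine(tokens, eps=1e-6):
    """Hypothetical refiner: per-token layer normalization (assumption)."""
    out = []
    for t in tokens:
        mean = sum(t) / len(t)
        var = sum((x - mean) ** 2 for x in t) / len(t)
        out.append([(x - mean) / math.sqrt(var + eps) for x in t])
    return out


def inject(hidden_states, thinking_tokens):
    """Assumed injection: prepend thinking tokens to the layer-L states to form Z(L)."""
    return thinking_tokens + hidden_states


random.seed(0)
d, K, M = 8, 16, 4  # hidden size, codebook entries, thinking tokens (illustrative)
codebook = [[random.gauss(0, 1) for _ in range(d)] for _ in range(K)]
queries = [[random.gauss(0, 1) for _ in range(d)] for _ in range(M)]
hidden = [[random.gauss(0, 1) for _ in range(d)] for _ in range(10)]  # 10-token prompt at layer L
T = refine(codebook_attention(queries, codebook))
Z = inject(hidden, T)
print(len(Z), len(Z[0]))  # 14 8
```

Because each thinking token is a convex combination of codebook entries, the queries select and blend strategy priors rather than generate new content; the codebook size, number of thinking tokens, and insertion layer are exactly the hyperparameters the ablations vary.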

GainRouter Mechanism

The GainRouter complements LC-FT by dynamically switching between fast thinking and explicit CoT reasoning. This lightweight classifier predicts, from input complexity and token-level evidence, when slow reasoning is necessary. By invoking slow reasoning only when needed, it curbs overthinking and avoids unnecessary token generation without compromising accuracy.
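The source describes GainRouter only as a lightweight classifier over input complexity and token-level evidence. As an illustration, the sketch below assumes a logistic-regression router with two hypothetical features: log prompt length as a complexity proxy, and the mean entropy of the output distributions from a fast codebook-guided pass as token-level evidence. The class layout, features, weights, and threshold are all assumptions, not the paper's design.

```python
import math
from dataclasses import dataclass


def mean_token_entropy(token_distributions: list[list[float]]) -> float:
    """Average Shannon entropy (in nats) of per-token output distributions."""
    total = 0.0
    for probs in token_distributions:
        total -= sum(p * math.log(p) for p in probs if p > 0)
    return total / len(token_distributions)


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


@dataclass
class GainRouter:
    """Hypothetical logistic-regression router; weights would be fit offline."""
    w_length: float   # weight on log prompt length (proxy for input complexity)
    w_entropy: float  # weight on mean token entropy (token-level evidence)
    bias: float
    threshold: float = 0.5

    def p_slow(self, prompt_tokens: int, entropy: float) -> float:
        """Estimated probability that slow CoT is needed for this input."""
        z = self.w_length * math.log1p(prompt_tokens) + self.w_entropy * entropy + self.bias
        return sigmoid(z)

    def route(self, prompt_tokens: int, token_distributions: list[list[float]]) -> str:
        """Return "slow" to fall back to explicit CoT, else keep the fast answer."""
        h = mean_token_entropy(token_distributions)
        return "slow" if self.p_slow(prompt_tokens, h) > self.threshold else "fast"


# Illustrative weights only; they are not taken from the paper.
router = GainRouter(w_length=0.5, w_entropy=3.0, bias=-4.0)
confident = [[0.97, 0.01, 0.01, 0.01]] * 5  # peaked fast-pass distributions
uncertain = [[0.25, 0.25, 0.25, 0.25]] * 5  # flat fast-pass distributions
print(router.route(40, confident))  # fast
print(router.route(40, uncertain))  # slow
```

Running the fast pass first keeps the common case cheap; the extra cost of explicit CoT is paid only when the router flags the input.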

Experimental Results

The framework was evaluated on mathematical reasoning datasets (AIME, OlympiadBench) and programming benchmarks (MBPP, HumanEval). LC-FT substantially reduced token usage while achieving accuracy comparable to traditional CoT methods. For instance, on OlympiadBench, LC-FT matched the top accuracy of 50% while cutting the average generation length from 7075 to 5332 tokens, a reduction of roughly 25%.

The tables below report detailed accuracy and token-usage metrics across the baselines, underscoring LC-FT's efficiency advantage.

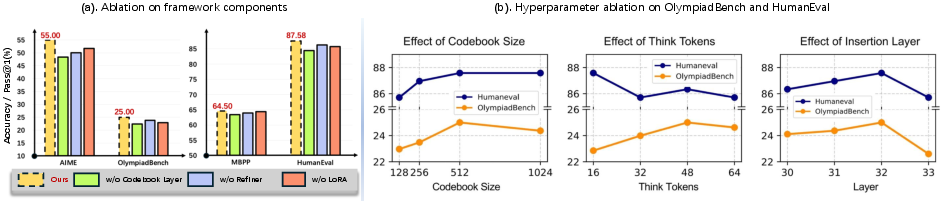

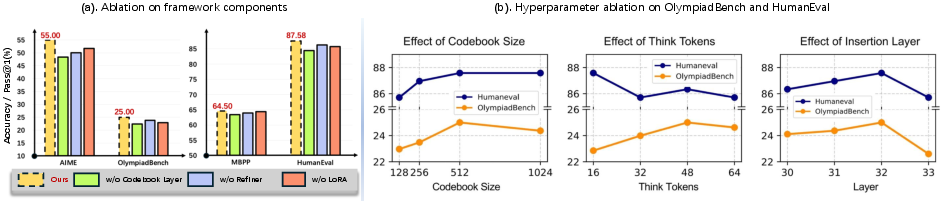

Ablation Studies

Ablation experiments highlight the crucial role of the codebook and refiner components. Removing the codebook caused significant accuracy drops, confirming its importance for fast reasoning, while the refiner improved stability by smoothing hint vectors before injection.

Figure 3: Ablation studies. (a) demonstrates the impact of different framework components across reasoning and programming benchmarks. (b) examines the effect of hyperparameters including codebook size, think tokens, and insertion layer position.

Implications and Future Work

The latent codebook approach offers a practical path to scalable, efficient reasoning in LLMs and could reduce the infrastructure demands of long CoT generation. Future work could integrate LC-FT with other adaptive reasoning paradigms to further improve inference efficiency. Further refinements to the GainRouter could also enable more context-aware routing that allocates computation more precisely.

Conclusion

Latent Codebooks for Fast Thinking is a notable step toward efficient reasoning in LLMs. By distilling concise CoT sketches into a learned strategy memory, LC-FT reduces reliance on costly token generation. Combined with the adaptive GainRouter, the framework balances reasoning quality against computational cost, offering a promising route to deploying advanced reasoning models in real-world applications.