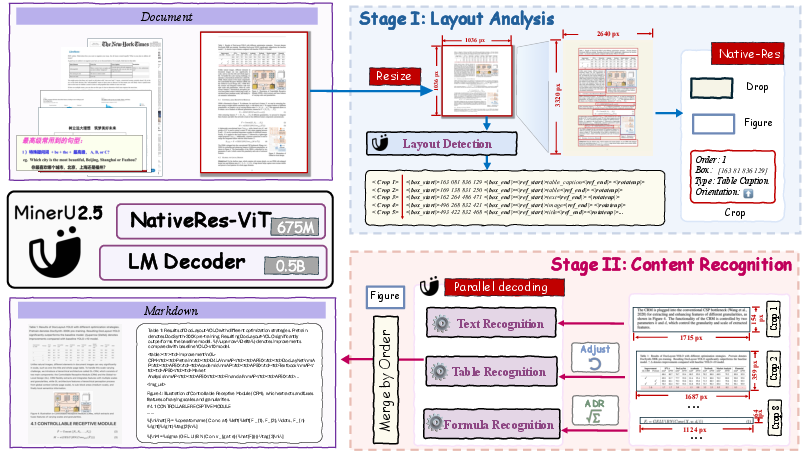

- The paper demonstrates a novel decoupled two-stage parsing strategy that mitigates token redundancy and improves efficiency in high-resolution document parsing.

- It integrates a NaViT vision encoder, Qwen2-Instruct language model, and pixel-unshuffle patch merger for precise layout analysis and fine-grained content recognition.

- Experimental evaluations show state-of-the-art performance on benchmarks, with notable improvements in speed and accuracy for tables, formulas, and overall layout detection.

MinerU2.5: A Decoupled Vision-Language Model for Efficient High-Resolution Document Parsing

Introduction and Motivation

MinerU2.5 introduces a 1.2B-parameter vision-language model (VLM) specifically designed for high-resolution document parsing. The model addresses the computational and semantic challenges inherent in document images, which typically combine high resolution, dense content, and complex layouts. Existing approaches fall short in different ways: modular pipelines suffer from error propagation between stages, while end-to-end VLMs are prone to hallucination and are severely inefficient because they process large numbers of redundant visual tokens. MinerU2.5's core innovation is a decoupled, coarse-to-fine two-stage parsing strategy that separates global layout analysis from local content recognition, enabling both high accuracy and computational efficiency.

Model Architecture and Two-Stage Parsing

MinerU2.5's architecture comprises a Qwen2-Instruct LLM (0.5B params), a NaViT vision encoder (675M params) supporting dynamic resolutions and aspect ratios, and a patch merger utilizing pixel-unshuffle for efficient token aggregation. The model leverages M-RoPE for improved positional encoding generalization.
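Pixel-unshuffle merging can be illustrated with a small pure-Python sketch. It assumes the encoder emits a 2D grid of patch feature vectors and that the merger concatenates each non-overlapping 2×2 neighborhood into one vector, cutting the token count by 4×. The merge factor `r=2`, the channel ordering, and the function name `pixel_unshuffle` are illustrative assumptions, and the learned projection into the LLM's hidden size is omitted.

```python
def pixel_unshuffle(grid: list[list[list[float]]], r: int = 2) -> list[list[list[float]]]:
    """Merge each r x r block of patch features into one concatenated vector.

    grid[i][j] is the feature vector of the patch in row i, column j.
    The output has (H/r) x (W/r) tokens, each r*r times wider.
    """
    h, w = len(grid), len(grid[0])
    if h % r or w % r:
        raise ValueError("grid height and width must be divisible by r")
    merged = []
    for i in range(0, h, r):
        row = []
        for j in range(0, w, r):
            vec: list[float] = []
            # Concatenate the block's features in row-major order (an assumed ordering).
            for di in range(r):
                for dj in range(r):
                    vec.extend(grid[i + di][j + dj])
            row.append(vec)
        merged.append(row)
    return merged


# A 4x4 grid of 1-dim "features" becomes a 2x2 grid of 4-dim tokens.
grid = [[[float(4 * i + j)] for j in range(4)] for i in range(4)]
tokens = pixel_unshuffle(grid)
print(len(tokens), len(tokens[0]), tokens[0][0])  # 2 2 [0.0, 1.0, 4.0, 5.0]
```

Concatenating rather than averaging keeps all of the block's information in the channel dimension, leaving it to the subsequent projection to compress it.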

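The two-stage parsing strategy is as follows:

- Stage 1 (layout analysis): the full page is downsampled to a low-resolution image, on which the model detects the layout elements and predicts their positions, classes, rotation, and reading order.

- Stage 2 (content recognition): guided by the detected layout, the model crops each element from the original high-resolution image and recognizes its content (text, tables, formulas) at native resolution.

This decoupling avoids the O(N²) self-attention cost that end-to-end approaches incur over all N visual tokens of a full-resolution page, mitigates hallucination, and allows each stage to be optimized independently.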
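A back-of-the-envelope sketch makes the quadratic argument concrete. It assumes one visual token per 28×28-pixel area (14-pixel patches followed by a 2×2 merge), treats self-attention cost as proportional to the square of each sequence's length, and uses made-up page, thumbnail, and crop sizes. It ignores generated output tokens and per-request overhead.

```python
import math


def visual_tokens(width: int, height: int, pixels_per_token: int = 28) -> int:
    """Visual tokens for an image, assuming one token per 28x28-pixel area."""
    return math.ceil(width / pixels_per_token) * math.ceil(height / pixels_per_token)


def attention_cost(token_counts: list[int]) -> int:
    """Relative self-attention cost: each independent sequence of n tokens costs n^2."""
    return sum(n * n for n in token_counts)


# Hypothetical A4 page scanned at 300 DPI.
page = (2480, 3508)
# Hypothetical 1036x1036 layout thumbnail and three element crops from the full page.
thumbnail = (1036, 1036)
crops = [(2200, 600), (2200, 900), (1000, 800)]

end_to_end = attention_cost([visual_tokens(*page)])
decoupled = attention_cost([visual_tokens(*thumbnail)] + [visual_tokens(*c) for c in crops])
print(visual_tokens(*page), end_to_end, decoupled)
```

With these hypothetical numbers the full page yields 11,214 visual tokens, and the decoupled cost comes out roughly 10× lower, mainly because each crop is attended to separately and blank page regions are never encoded at full resolution.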

Data Engine and Training Pipeline

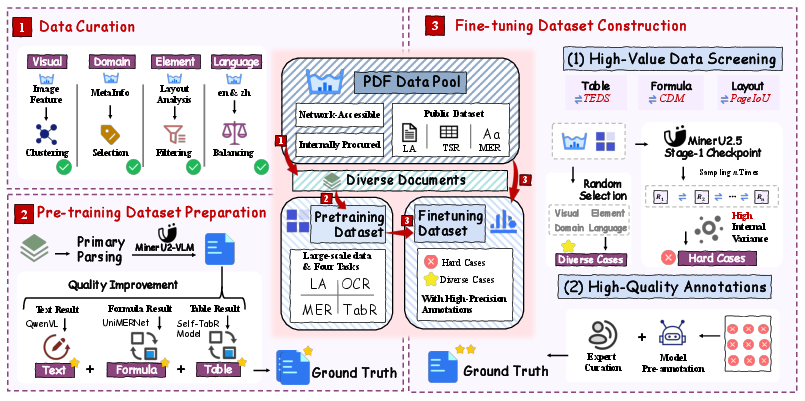

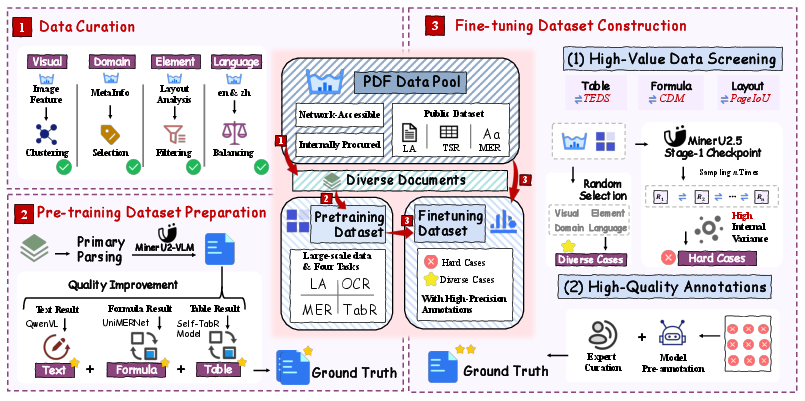

MinerU2.5's performance is underpinned by a comprehensive data engine that generates large-scale, high-quality training corpora. The pipeline consists of:

- Data Curation: Stratified sampling ensures diversity in layout, document type, element balance, and language.

- Pre-training Data Preparation: Automated annotation is refined using expert models for text, tables, and formulas.

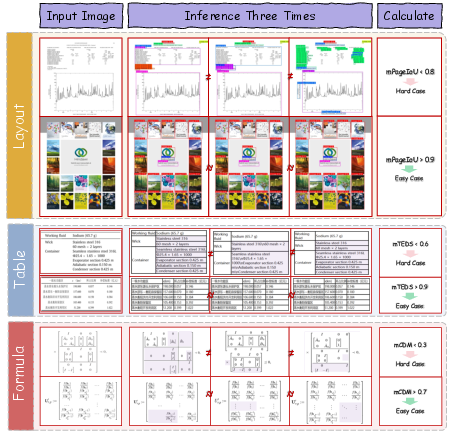

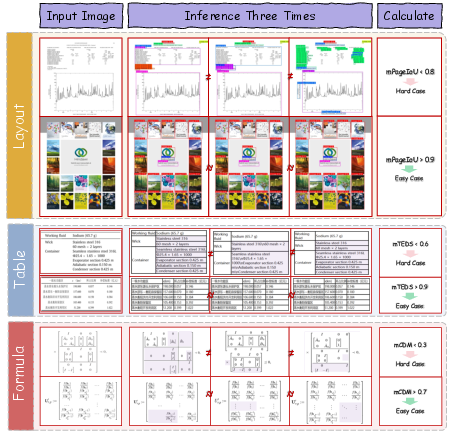

- Fine-tuning Dataset Construction: The IMIC (Iterative Mining via Inference Consistency) strategy identifies hard cases via stochastic inference consistency metrics (PageIoU, TEDS, CDM), which are then manually curated.

Figure 2: Data engine workflow: curation, automated annotation refinement, and IMIC-driven hard case mining for fine-tuning.
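The stratified-sampling step of data curation can be sketched as follows, assuming each document already carries metadata such as type and language. The strata keys, the per-stratum quota, and the function name `stratified_sample` are hypothetical; a real curation pipeline would also balance layout and element statistics.

```python
import random
from collections import defaultdict
from typing import Callable, Hashable, TypeVar

T = TypeVar("T")


def stratified_sample(
    docs: list[T], key: Callable[[T], Hashable], per_stratum: int, seed: int = 0
) -> list[T]:
    """Draw at most `per_stratum` documents from each stratum defined by `key`."""
    strata: dict[Hashable, list[T]] = defaultdict(list)
    for doc in docs:
        strata[key(doc)].append(doc)
    rng = random.Random(seed)  # fixed seed so the selection is reproducible
    picked: list[T] = []
    for pool in strata.values():
        picked.extend(rng.sample(pool, min(per_stratum, len(pool))))
    return picked


# Hypothetical corpus: many English papers, few Chinese slide decks.
corpus = [{"type": "paper", "lang": "en", "id": i} for i in range(10)]
corpus += [{"type": "slides", "lang": "zh", "id": 100 + i} for i in range(2)]
sample = stratified_sample(corpus, key=lambda d: (d["type"], d["lang"]), per_stratum=3)
```

Capping each stratum keeps the dominant document type from crowding out rare ones, which is the diversity goal the curation step describes.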

Layout Analysis

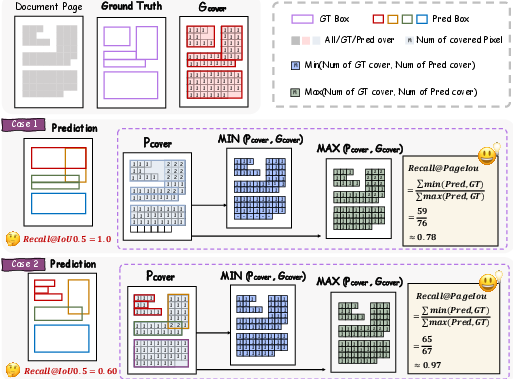

MinerU2.5 introduces a hierarchical tagging system for layout elements, ensuring comprehensive coverage (including headers, footers, page numbers) and fine granularity (distinct tags for code, references, lists). Layout analysis is reformulated as a multi-task problem, jointly predicting position, class, rotation, and reading order.
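One way to picture the multi-task output is as one record per element carrying all four predicted attributes. The sketch below is a hypothetical data structure, not MinerU2.5's actual output format, and the tag names in `PAGE_FURNITURE` are assumptions; it shows how the predicted class and reading order might be used downstream to drop page furniture and order the remaining content.

```python
from dataclasses import dataclass

# Hypothetical tag names for page furniture; the paper's exact tag set may differ.
PAGE_FURNITURE = {"header", "footer", "page_number"}


@dataclass(frozen=True)
class LayoutElement:
    bbox: tuple[int, int, int, int]  # (x0, y0, x1, y1) in page pixels
    category: str  # e.g. "text", "table", "equation", "code", "list"
    rotation: int  # clockwise rotation in degrees: 0, 90, 180, or 270
    order: int  # predicted reading-order index


def body_in_reading_order(elements: list[LayoutElement]) -> list[LayoutElement]:
    """Drop page furniture and sort the remaining elements by predicted reading order."""
    body = [e for e in elements if e.category not in PAGE_FURNITURE]
    return sorted(body, key=lambda e: e.order)


page = [
    LayoutElement((50, 20, 550, 40), "header", 0, 0),
    LayoutElement((50, 400, 550, 700), "table", 90, 2),
    LayoutElement((50, 60, 550, 380), "text", 0, 1),
    LayoutElement((280, 760, 320, 780), "page_number", 0, 3),
]
print([e.category for e in body_in_reading_order(page)])  # ['text', 'table']
```

Because headers, footers, and page numbers are tagged rather than ignored, a consumer can choose to keep or drop them instead of having them leak into the body text.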

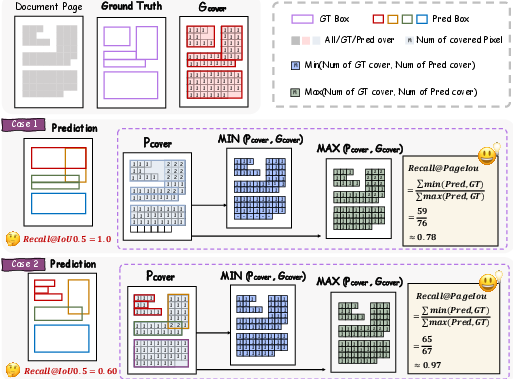

The PageIoU metric is proposed for layout evaluation, measuring page-level coverage and aligning quantitative scores with qualitative human assessment.

Figure 3: PageIoU metric: page-level coverage aligns with visual inspection, resolving inconsistencies in IoU-based recall.
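A page-level coverage score can be sketched as follows. This assumes PageIoU compares per-pixel coverage-count maps, scoring the summed element-wise minimum over the summed element-wise maximum; the function names are hypothetical, and the paper's exact definition (for example, how background pixels are masked) may differ.

```python
def coverage_map(boxes: list[tuple[int, int, int, int]], width: int, height: int) -> list[list[int]]:
    """Count how many boxes cover each pixel; boxes are (x0, y0, x1, y1), end-exclusive."""
    cov = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                cov[y][x] += 1
    return cov


def page_iou(gt_boxes, pred_boxes, width: int, height: int) -> float:
    """Sum of per-pixel min coverage divided by sum of per-pixel max coverage."""
    g = coverage_map(gt_boxes, width, height)
    p = coverage_map(pred_boxes, width, height)
    inter = union = 0
    for y in range(height):
        for x in range(width):
            inter += min(g[y][x], p[y][x])
            union += max(g[y][x], p[y][x])
    return inter / union if union else 1.0


# One ground-truth block predicted as two adjacent halves: same coverage, full score.
split_score = page_iou([(0, 0, 10, 10)], [(0, 0, 10, 5), (0, 5, 10, 10)], 20, 20)
# Only the top half predicted: half the coverage.
partial_score = page_iou([(0, 0, 10, 10)], [(0, 0, 10, 5)], 20, 20)
print(split_score, partial_score)  # 1.0 0.5
```

The split case illustrates the motivation: a prediction that covers exactly the annotated region scores 1.0 at the page level, whereas one-to-one box matching would penalize it for splitting the block.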

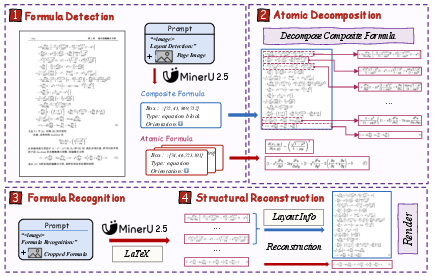

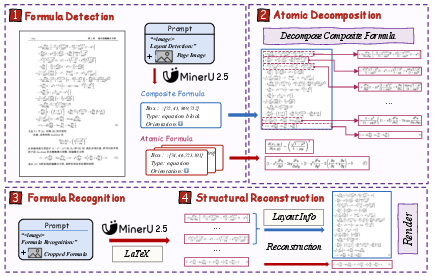

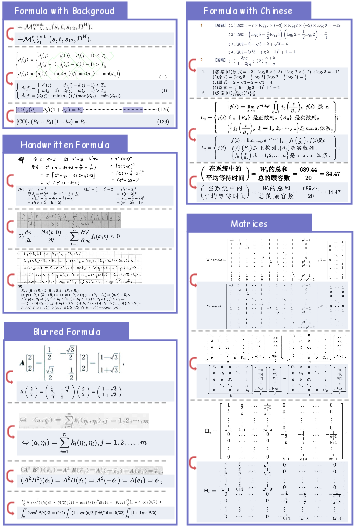

Formula Recognition

MinerU2.5 employs the ADR (Atomic Decomposition and Recombination) framework, decomposing compound formulas into atomic lines, recognizing each into LaTeX, and structurally recombining the results. This approach addresses the limitations of monolithic formula recognition and reduces structural hallucination.

Figure 4: ADR framework: compound formulas are decomposed, recognized, and recombined for high-fidelity LaTeX output.
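The recognize-then-recombine half of ADR can be sketched as below. The decomposition into line crops is assumed to happen upstream, `recognize_line` stands in for the model, and recombining into an `aligned` environment is an assumed target structure rather than the paper's documented output.

```python
from typing import Callable, Sequence, TypeVar

Crop = TypeVar("Crop")


def adr_recognize(line_crops: Sequence[Crop], recognize_line: Callable[[Crop], str]) -> str:
    """Recognize each atomic line crop separately, then recombine into one LaTeX block."""
    atoms = [recognize_line(crop).strip() for crop in line_crops]
    atoms = [a for a in atoms if a]  # skip lines the recognizer returned empty
    if not atoms:
        return ""
    if len(atoms) == 1:
        return atoms[0]
    # Recombine as rows of an aligned environment (an assumed target structure).
    return "\\begin{aligned}\n" + " \\\\\n".join(atoms) + "\n\\end{aligned}"


# Hypothetical recognizer outputs for a two-line derivation.
fake_ocr = {"line1": "f(x) &= (x+1)^2", "line2": "&= x^2 + 2x + 1"}
print(adr_recognize(["line1", "line2"], fake_ocr.__getitem__))
```

Recognizing short atomic lines keeps each output sequence small, so an error in one line cannot corrupt the structure of the others.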

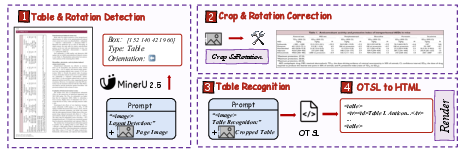

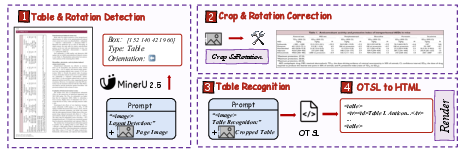

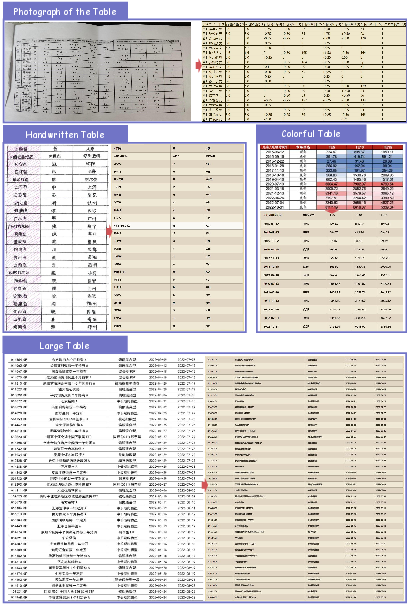

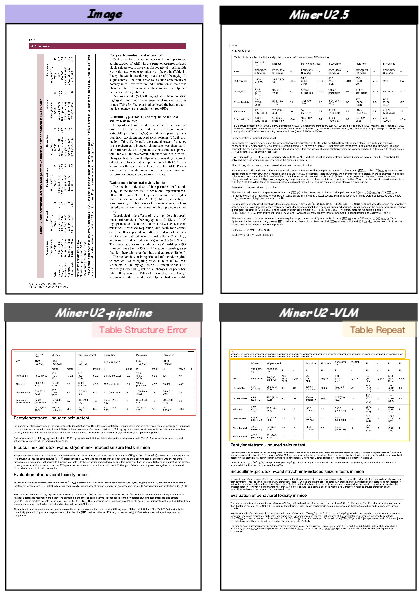

Table Recognition

The model adopts a four-stage pipeline: detection, rotation correction, recognition into OTSL (Optimized Table Structure Language), and conversion to HTML. OTSL reduces structural token redundancy and sequence length, improving recognition of complex tables.

Figure 5: Table recognition pipeline: detection, geometric normalization, OTSL generation, and HTML conversion.
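The final OTSL-to-HTML step can be sketched as follows. This assumes the token vocabulary of the original OTSL proposal: `fcel` (cell with content), `ecel` (empty cell), `lcel` (merged with the left neighbor), `ucel` (merged with the cell above), `xcel` (merged both ways), and `nl` (end of row), with cell text following each tag. MinerU2.5's actual serialization may differ.

```python
import re
from html import escape

# Each tag plus the text that follows it (cell text may not contain "<" in this sketch).
TOKEN_RE = re.compile(r"<(fcel|ecel|lcel|ucel|xcel|nl)>([^<]*)")


def parse_otsl(seq: str) -> list[list[tuple[str, str]]]:
    """Split an OTSL string into a grid of (tag, text) cells."""
    rows, current = [], []
    for tag, text in TOKEN_RE.findall(seq):
        if tag == "nl":
            rows.append(current)
            current = []
        else:
            current.append((tag, text.strip()))
    if current:
        rows.append(current)
    return rows


def otsl_to_html(seq: str) -> str:
    grid = parse_otsl(seq)
    out = ["<table>"]
    for r, row in enumerate(grid):
        out.append("<tr>")
        for c, (tag, text) in enumerate(row):
            if tag not in ("fcel", "ecel"):
                continue  # merged cells are absorbed by their anchor cell
            colspan = 1  # count consecutive left-merging cells to the right
            while c + colspan < len(row) and row[c + colspan][0] == "lcel":
                colspan += 1
            rowspan = 1  # count consecutive up-merging cells below
            while (r + rowspan < len(grid) and c < len(grid[r + rowspan])
                   and grid[r + rowspan][c][0] == "ucel"):
                rowspan += 1
            attrs = f' colspan="{colspan}"' if colspan > 1 else ""
            attrs += f' rowspan="{rowspan}"' if rowspan > 1 else ""
            out.append(f"<td{attrs}>{escape(text)}</td>")
        out.append("</tr>")
    out.append("</table>")
    return "".join(out)


print(otsl_to_html("<fcel>Name<lcel><nl><fcel>A<fcel>B<nl>"))
```

Since spans are implied by neighbor tags rather than spelled out as attributes, the model emits one short token per grid cell, which is where OTSL's shorter sequences come from.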

Iterative Mining via Inference Consistency (IMIC)

IMIC leverages stochastic inference to identify hard cases—samples with low output consistency across multiple runs—using task-specific metrics. These cases are prioritized for manual annotation, focusing human effort on the most valuable data for model improvement.

Figure 6: IMIC strategy: low-consistency samples in layout, table, and formula recognition are mined for targeted annotation.
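The mining loop can be sketched as below. The sketch substitutes `difflib`'s sequence ratio for the task-specific metrics (PageIoU, TEDS, CDM) and assumes a hypothetical `infer(sample, seed)` callable that runs sampling-based decoding; the run count and threshold are illustrative, not the paper's settings.

```python
from difflib import SequenceMatcher
from itertools import combinations
from statistics import mean
from typing import Callable, Mapping, TypeVar

S = TypeVar("S")


def text_similarity(a: str, b: str) -> float:
    """Stand-in similarity in [0, 1]; the paper uses task metrics instead."""
    return SequenceMatcher(None, a, b).ratio()


def consistency(outputs: list[str], sim: Callable[[str, str], float]) -> float:
    """Mean pairwise similarity across repeated inference runs."""
    pairs = list(combinations(outputs, 2))
    return mean(sim(a, b) for a, b in pairs) if pairs else 1.0


def mine_hard_cases(
    samples: Mapping[str, S],
    infer: Callable[[S, int], str],
    runs: int = 4,
    threshold: float = 0.9,
    sim: Callable[[str, str], float] = text_similarity,
) -> list[tuple[str, float]]:
    """Return (sample_id, score) for low-consistency samples, least consistent first."""
    hard = []
    for sample_id, sample in samples.items():
        # Each run uses a different seed so sampling-based decoding can diverge.
        outputs = [infer(sample, seed) for seed in range(runs)]
        score = consistency(outputs, sim)
        if score < threshold:
            hard.append((sample_id, score))
    return sorted(hard, key=lambda item: item[1])


# Toy stand-in for a stochastic model: each "sample" stores its four run outputs.
toy = {"easy": ["a b c"] * 4, "hard": ["abc", "xyz", "abc", "qrs"]}
result = mine_hard_cases(toy, infer=lambda sample, seed: sample[seed])
print(result)  # only "hard" is flagged
```

Sorting by score puts the most unstable samples at the front of the annotation queue, matching the goal of spending human effort where the model is least reliable.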

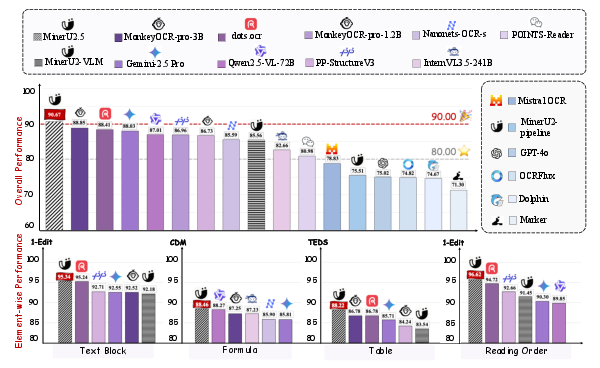

Quantitative Evaluation

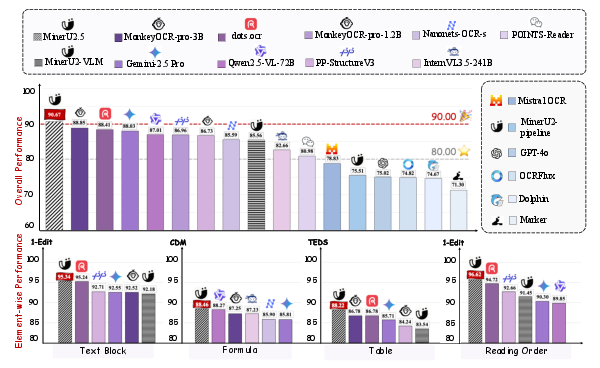

MinerU2.5 achieves state-of-the-art results across multiple benchmarks:

- OmniDocBench: Overall score of 90.67, outperforming MonkeyOCR-pro-3B and dots.ocr. Best-in-class edit distance (0.047), formula CDM (88.46), table TEDS (88.22), and reading order edit distance (0.044).

- Ocean-OCR: Lowest edit distance (0.033) and highest F1-score (0.945) on English documents; highest F1-score (0.965) and precision (0.966) on Chinese documents.

- olmOCR-bench: Overall score of 75.2, leading in arXiv Math (76.6), Old Scans Math (54.6), and Long Tiny Text (83.5).

- Layout Analysis: Top Full Page F1-score@PageIoU across OmniDocBench, D4LA, and DocLayNet.

- Table Recognition: SOTA on FinTabNet, OCRBench v2, and in-house benchmarks; competitive on PubTabNet and CC-OCR.

- Formula Recognition: SOTA on SCE, LaTeX-80MM, Fuzzy Math, and Complex datasets; second-best on CPE, HWE, SPE, and Chinese.

Figure 7: MinerU2.5's performance highlights on OmniDocBench: consistently surpasses general-purpose and domain-specific VLMs in text, formula, table, and reading order tasks.

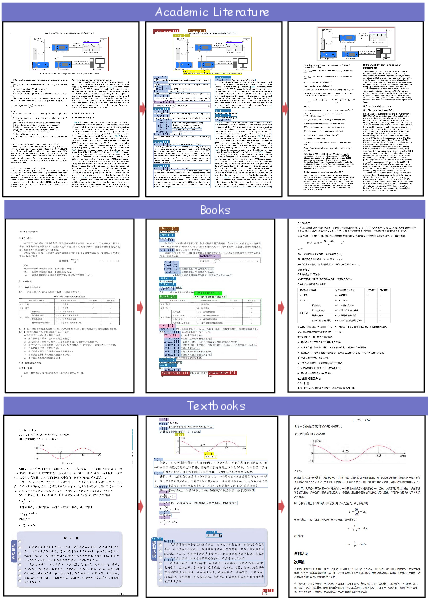

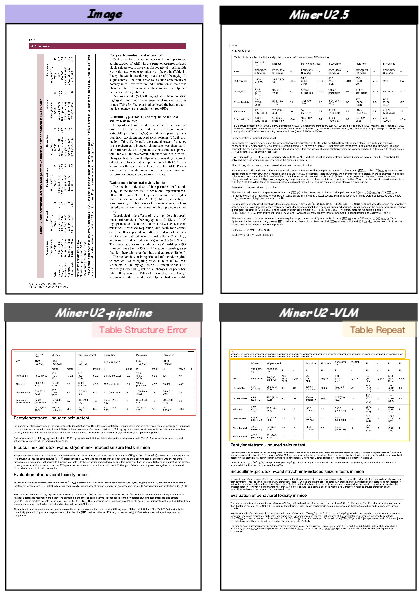

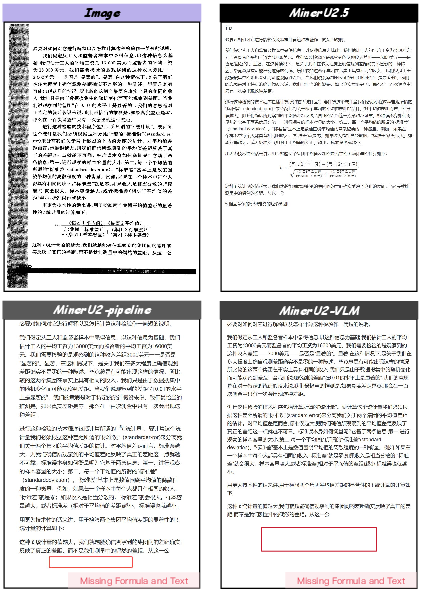

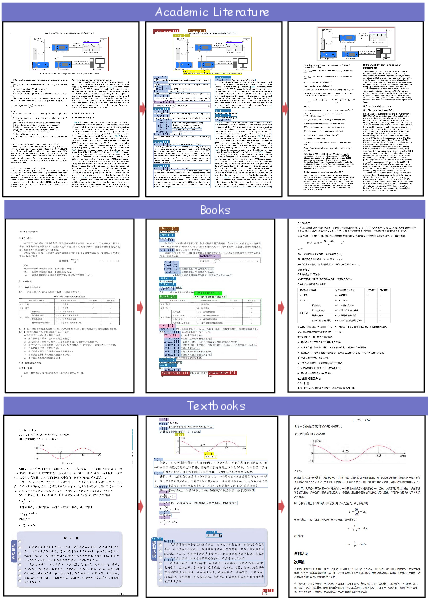

Qualitative Analysis

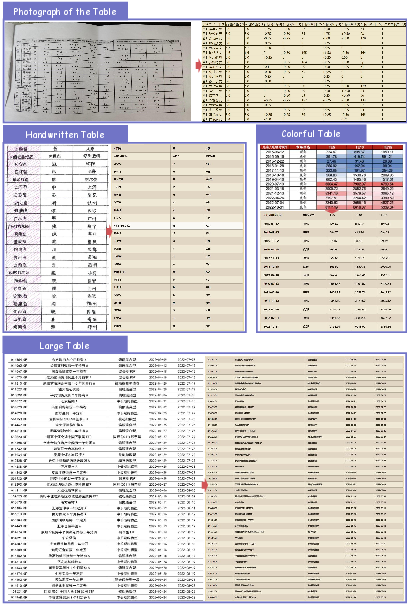

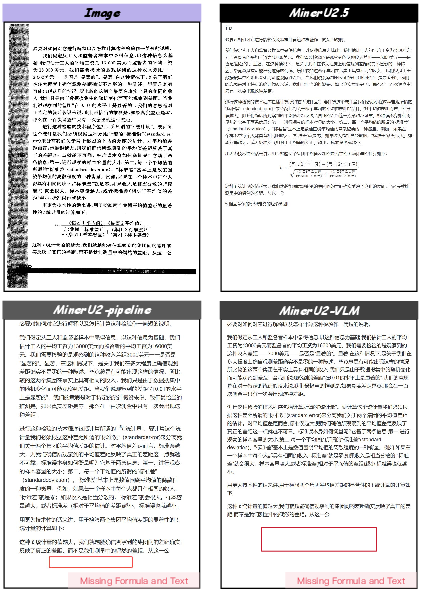

MinerU2.5 demonstrates robust parsing across diverse document types (academic literature, books, reports, slides, newspapers, magazines), complex tables (rotated, merged cells, borderless, colored, dense), and intricate formulas (multi-line, nested, mixed-language, degraded).

Figure 8: Layout and markdown output for academic literature, books, and textbooks.

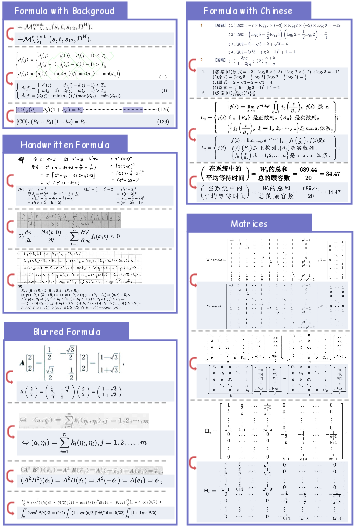

Figure 9: Rendered outputs for various types of tables.

Figure 10: Rendered outputs for various types of formulas.

Comparisons with previous versions and other SOTA models show that MinerU2.5 better handles rotated tables, merged cells, Chinese formulas, multi-line formulas, and watermarked pages, and that it detects layout at a finer granularity.

Figure 11: MinerU2.5 outperforms previous versions in rotated table recognition.

Figure 12: MinerU2.5 excels in formula recognition with Chinese content compared to prior models.

Deployment and Efficiency

MinerU2.5 is deployed via an optimized vLLM-based pipeline, with an asynchronous backend and decoupled inference stages to minimize latency. Dynamic sampling penalties are applied based on the detected layout type to suppress degenerate repetition. The model achieves 2.12 pages/s and 2337.25 tokens/s on an A100 80GB, outperforming MonkeyOCR-pro-3B by 4× and dots.ocr by 7× in throughput.
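Layout-dependent penalties can be sketched as a lookup from detected element type to sampling settings. The values below are hypothetical: the idea shown is that prose gets a mild anti-repetition penalty while tables and equations, which legitimately repeat tokens such as cell tags or `&`, are left unpenalized. The field names mirror vLLM's `SamplingParams` arguments, but the sketch does not call vLLM.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Penalties:
    # Field names mirror vLLM's SamplingParams arguments; vLLM itself is not called here.
    frequency_penalty: float = 0.0
    presence_penalty: float = 0.0


DEFAULT_PENALTIES = Penalties()

# Hypothetical per-type settings, not the values used by MinerU2.5.
PENALTIES_BY_TYPE = {
    "text": Penalties(frequency_penalty=0.05, presence_penalty=0.05),
    "table": Penalties(),
    "equation": Penalties(),
}


def penalties_for(layout_type: str) -> Penalties:
    """Pick sampling penalties for a content-recognition request from its layout type."""
    return PENALTIES_BY_TYPE.get(layout_type, DEFAULT_PENALTIES)


requests = [penalties_for(t) for t in ["text", "table", "figure_caption"]]
print(requests)
```

Because the layout stage runs first, each content-recognition request already knows its element type, so the penalty can be chosen per request rather than fixed for the whole page.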

Implications and Future Directions

MinerU2.5's decoupled architecture and data-centric training pipeline establish a new paradigm for efficient, high-fidelity document parsing. Its ability to rapidly convert unstructured documents into structured data is critical for curating pre-training corpora and enhancing RAG systems. The preservation of semantic integrity in tables, formulas, and layouts positions MinerU2.5 as a foundational tool for next-generation AI applications in knowledge extraction, information retrieval, and multimodal understanding.

Future work may explore further scaling of the data engine, integration with more advanced layout and semantic tagging systems, and adaptation to multilingual and domain-specific document types. The IMIC strategy offers a blueprint for continual model improvement via targeted annotation, potentially generalizable to other structured data extraction tasks.

Conclusion

MinerU2.5 demonstrates that a decoupled, coarse-to-fine VLM architecture, combined with a rigorous data engine and targeted hard case mining, can achieve state-of-the-art document parsing accuracy and efficiency. Its design resolves key trade-offs in high-resolution document understanding and sets a new standard for practical deployment in large-scale, real-world scenarios. The model's contributions extend beyond document parsing, offering substantial benefits for downstream AI systems reliant on structured document data.