- The paper presents PhysCtrl, a framework that learns physical dynamics with a diffusion model built around a novel spatiotemporal attention mechanism.

- The method trains on 550K synthetic animations and represents dynamics as 3D point trajectories, which then act as physics-grounded control signals for video generation.

- PhysCtrl outperforms baselines in Semantic Adherence, Physical Commonsense, and Video Quality, demonstrating enhanced control and realism.

"PhysCtrl: Generative Physics for Controllable and Physics-Grounded Video Generation" (2509.20358)

Introduction

The paper introduces PhysCtrl, a framework for physics-grounded image-to-video generation with control over physical parameters and applied forces. Conventional video generation models learn purely from 2D data and therefore often produce physically implausible motion. PhysCtrl instead uses a diffusion model to learn the dynamics of four material types (elastic, sand, plasticine, and rigid), which improves both the physical realism and the controllability of the generated videos.
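To make "control over physical parameters and forces" concrete, here is a minimal sketch of how one conditioning input for such a model could be packed into a feature vector. Only the four material names come from the paper; the class `PhysicsCondition`, its fields (Young's modulus, Poisson's ratio, force vector, force application point), the one-hot ordering, and the log scaling are all hypothetical choices made for illustration, not PhysCtrl's actual conditioning format.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

# The four material names come from the paper; their order and the
# one-hot encoding below are assumptions made for this sketch.
MATERIALS = ("elastic", "sand", "plasticine", "rigid")


@dataclass
class PhysicsCondition:
    """Hypothetical per-sample condition: material, scalar parameters, and an applied force."""

    material: str
    youngs_modulus: float  # assumed stiffness parameter (Pa)
    poisson_ratio: float  # assumed compressibility parameter
    force: tuple[float, float, float]  # applied force vector (x, y, z)
    force_point: tuple[float, float, float]  # assumed point where the force acts

    def to_vector(self) -> list[float]:
        """Flatten the condition into one feature vector for a conditional model."""
        if self.material not in MATERIALS:
            raise ValueError(f"unknown material: {self.material!r}")
        one_hot = [1.0 if m == self.material else 0.0 for m in MATERIALS]
        # Log-scale the stiffness, since raw values span several orders of magnitude.
        return one_hot + [
            math.log10(self.youngs_modulus),
            self.poisson_ratio,
            *self.force,
            *self.force_point,
        ]


cond = PhysicsCondition("sand", 1e5, 0.3, (0.0, 0.0, -1.0), (0.0, 0.0, 0.5))
print(cond.to_vector())
```

Changing only `material` or `force` while keeping the object fixed mirrors the kind of controlled variation the paper demonstrates for a single object.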

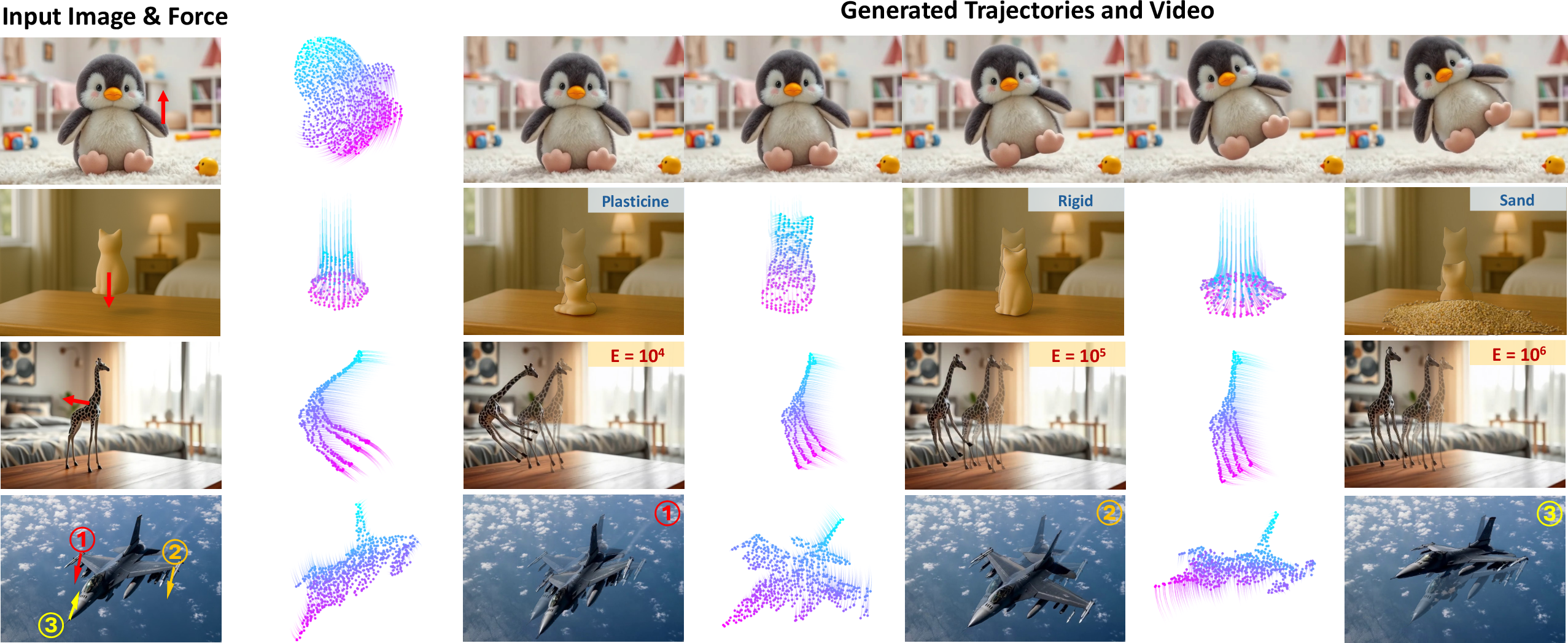

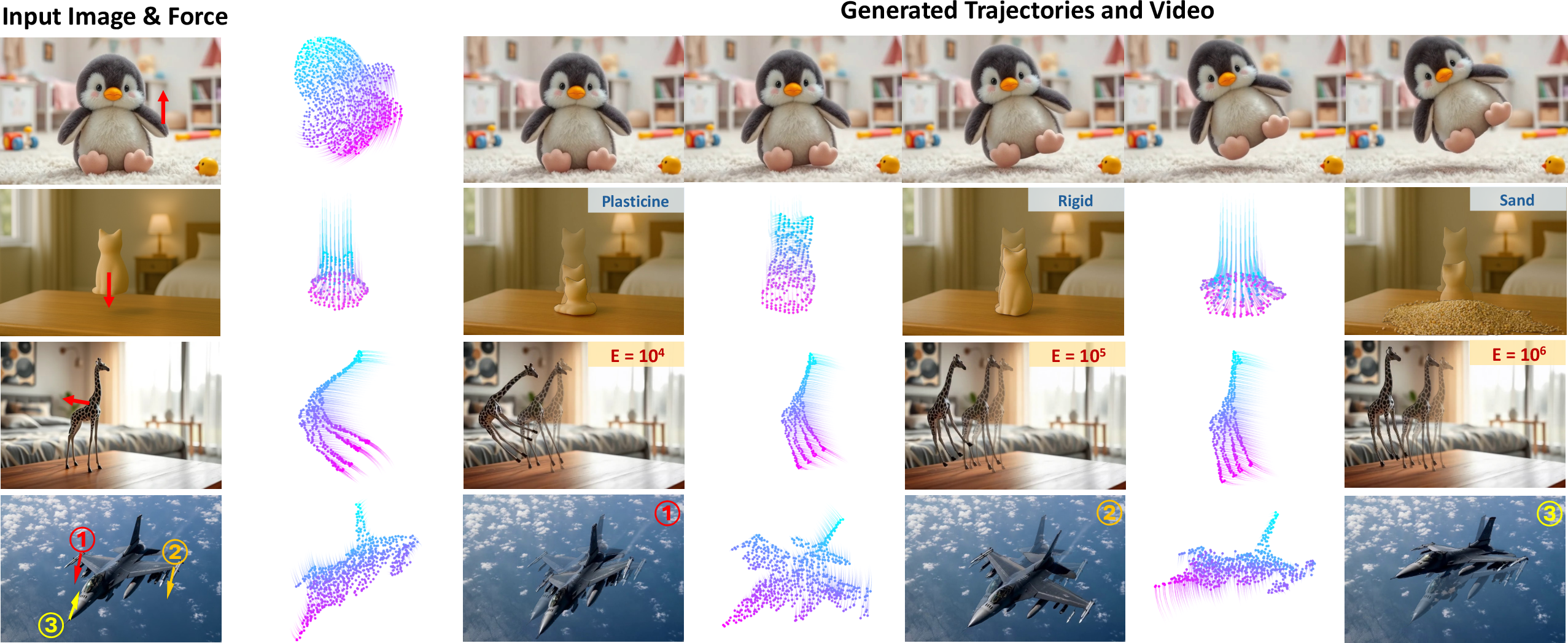

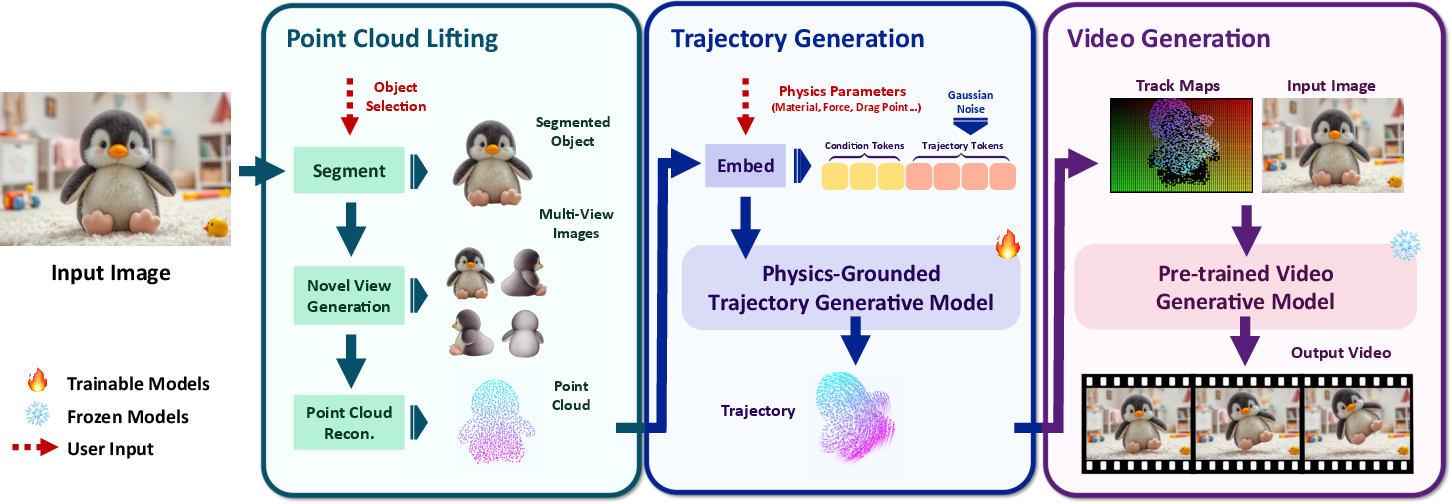

Figure 1: We propose PhysCtrl, a novel framework for physics-grounded image-to-video generation with physical material and force control.

Methodology

PhysCtrl uses a Generative Physics Network trained on a large-scale synthetic dataset of 550K animations. The core of this network is a diffusion model with a novel spatiotemporal attention mechanism that emulates interactions between particles and incorporates physics-based constraints. This design lets PhysCtrl generate motion trajectories that are both realistic and physically consistent.
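The paragraph above does not spell out the attention block's internals, so the following is only a generic sketch of factorized spatiotemporal attention over a trajectory tensor `x[t][n]` (frame `t`, point `n`, feature vector of length D). It assumes single-head attention with identity query/key/value projections, no learned weights, and a simple residual connection; all function names are hypothetical and this is not the paper's exact block.

```python
import math


def attend(seq):
    """Single-head scaled dot-product self-attention over a list of vectors.

    Queries, keys, and values are the inputs themselves (no learned projections).
    """
    d = len(seq[0])
    out = []
    for q in seq:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in seq]
        m = max(scores)  # subtract the max for a numerically stable softmax
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        out.append([sum(w * v[j] for w, v in zip(weights, seq)) / total for j in range(d)])
    return out


def add(a, b):
    """Elementwise sum of two T x N x D nested lists (residual connection)."""
    return [[[p + q for p, q in zip(u, v)] for u, v in zip(fa, fb)] for fa, fb in zip(a, b)]


def spatial_attention(x):
    """Within each frame, every point attends to all points (particle interactions)."""
    return [attend(frame) for frame in x]


def temporal_attention(x):
    """For each point, its features attend across all frames (temporal correlation)."""
    num_frames, num_points = len(x), len(x[0])
    out = [[None] * num_points for _ in range(num_frames)]
    for n in range(num_points):
        track = [x[t][n] for t in range(num_frames)]
        for t, feat in enumerate(attend(track)):
            out[t][n] = feat
    return out


def spatiotemporal_block(x):
    """Spatial attention, then temporal attention, each with a residual connection."""
    x = add(x, spatial_attention(x))
    return add(x, temporal_attention(x))


x = [[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]] for _ in range(4)]  # T=4 frames, N=3 points, D=2
y = spatiotemporal_block(x)
print(len(y), len(y[0]), len(y[0][0]))  # 4 3 2
```

Splitting attention into a per-frame spatial pass and a per-point temporal pass costs O(T·N² + N·T²) score computations instead of O((T·N)²) for full attention over all point-frame pairs, which is the usual motivation for factorizing.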

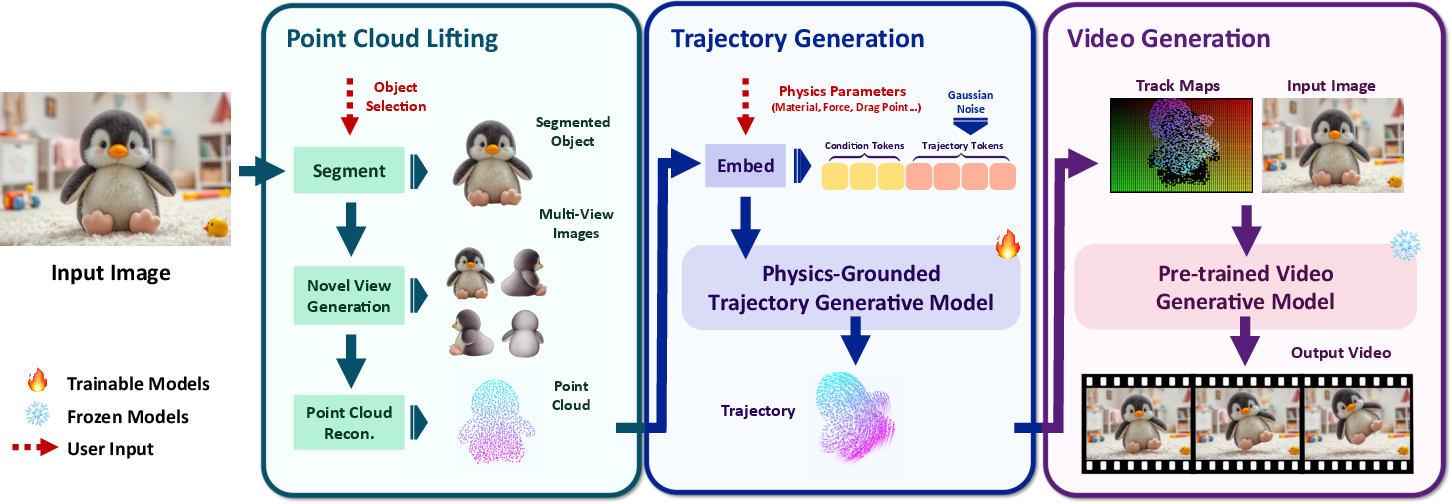

Figure 2: An overview of PhysCtrl including 3D point lifting, diffusion model for trajectory generation, and video synthesis.
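The trajectory-generation stage in Figure 2 is a diffusion model. The summary does not state PhysCtrl's noise schedule, parameterization, or denoiser, so as a generic illustration this sketch applies the standard DDPM forward process (a linear beta schedule with noise prediction) to a toy trajectory tensor `x0[frame][point] = (x, y, z)`. The schedule values, helper names, and toy data are assumptions, and the denoising network itself is omitted.

```python
import math
import random


def linear_alpha_bars(num_steps, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    if num_steps < 2:
        raise ValueError("num_steps must be at least 2")
    alpha_bars, prod = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars


def q_sample(x0, t, alpha_bars, rng):
    """Noise a clean trajectory x0[frame][point] = (x, y, z) to diffusion step t.

    Returns (x_t, eps) with x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps.
    """
    a = alpha_bars[t]
    eps = [[[rng.gauss(0.0, 1.0) for _ in p] for p in frame] for frame in x0]
    xt = [
        [[math.sqrt(a) * xi + math.sqrt(1.0 - a) * ei for xi, ei in zip(p, e)] for p, e in zip(fx, fe)]
        for fx, fe in zip(x0, eps)
    ]
    return xt, eps


def mse(pred, target):
    """Mean squared error over all coordinates (the usual noise-prediction loss)."""
    flat = [(p - q) ** 2 for fp, fq in zip(pred, target) for u, v in zip(fp, fq) for p, q in zip(u, v)]
    return sum(flat) / len(flat)


rng = random.Random(0)
alpha_bars = linear_alpha_bars(1000)
# 8 frames x 5 points of a toy trajectory (illustrative values only).
x0 = [[(0.1 * n, 0.0, -0.05 * f) for n in range(5)] for f in range(8)]
xt, eps = q_sample(x0, 500, alpha_bars, rng)
# A denoiser (not shown) would be trained so that model(xt, t, condition) ≈ eps,
# for example by minimizing mse(model_output, eps).
```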

1. Physics-Grounded Dynamics:

- Data Representation: Physics dynamics are encoded as 3D point trajectories, which are effective for controlling video models and generalizing across different materials.

- Diffusion Model: The model uses spatial attention to capture interactions between particles and temporal attention to capture correlations across frames.

2. Image-to-Video Integration:

PhysCtrl processes a single input image to extract a 3D point representation, then generates physics-based motion trajectories that serve as control signals for pre-trained video generative models. This allows for the synthesis of videos that not only inherit the visual fidelity of the original models but also adhere to physics-based constraints.
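One simple way 3D point trajectories can be turned into 2D control signals is to project them into the input camera. The summary does not say how PhysCtrl performs this step, so this sketch assumes camera-space trajectories and a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`); the function names and example values are hypothetical.

```python
def project_point(p, fx, fy, cx, cy):
    """Pinhole projection of a camera-space point (x, y, z) to pixel coordinates (u, v)."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point must lie in front of the camera (z > 0)")
    return (fx * x / z + cx, fy * y / z + cy)


def project_trajectories(traj, fx, fy, cx, cy):
    """Map traj[frame][point] (3D, camera space) to tracks[frame][point] (2D pixels)."""
    return [[project_point(p, fx, fy, cx, cy) for p in frame] for frame in traj]


# Two frames of two points moving away from the camera (illustrative values).
traj = [
    [(0.0, 0.0, 2.0), (1.0, -1.0, 2.0)],
    [(0.0, 0.0, 4.0), (1.0, -1.0, 4.0)],
]
tracks = project_trajectories(traj, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(tracks[0])  # [(320.0, 240.0), (570.0, -10.0)]
```

The resulting per-frame pixel tracks are the kind of 2D motion signal that trajectory-conditioned video models can consume; the reverse step (lifting image pixels to 3D points) is not shown.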

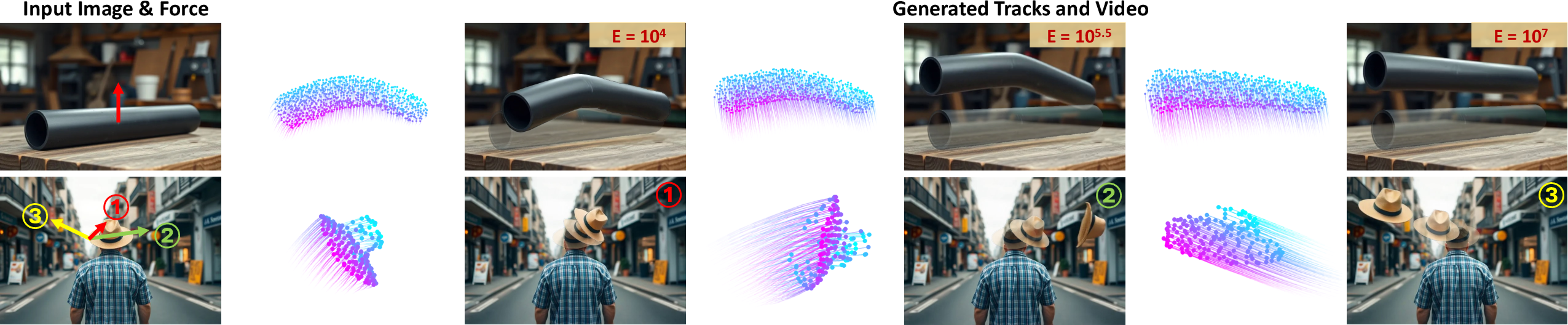

Figure 3: Qualitative comparison between PhysCtrl and existing video generation methods.

Results

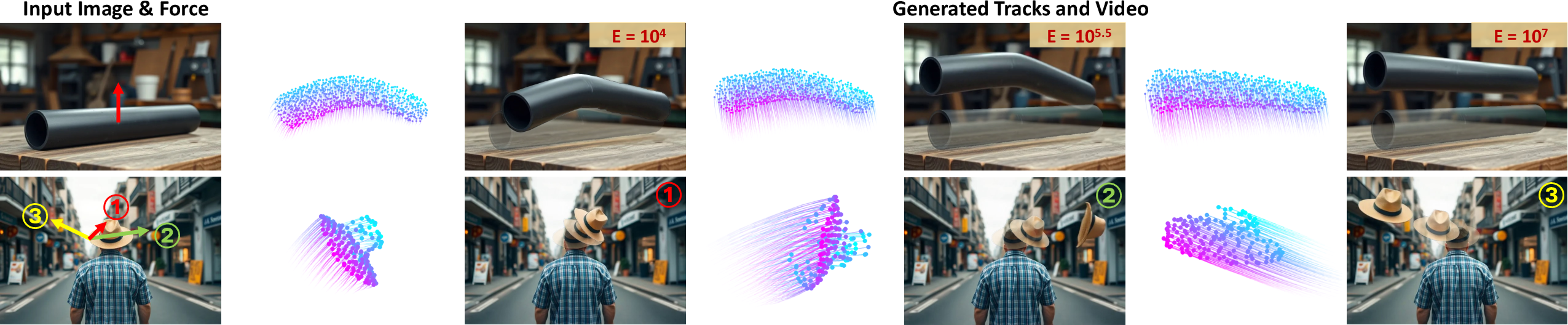

PhysCtrl outperforms existing models in generating high-fidelity, physics-compliant videos. The framework's evaluations show superior results in terms of physical plausibility and visual quality. Extended experiments demonstrate PhysCtrl's capability to manipulate videos under varying conditions, providing users with a robust tool for realistic video generation.

Figure 4: PhysCtrl generates videos of the same object under different physics parameters and forces.

Quantitative Evaluation:

PhysCtrl scores higher than baselines such as DragAnything and CogVideoX on Semantic Adherence (SA), Physical Commonsense (PC), and Video Quality (VQ). These results support the framework's effectiveness at integrating physics into video generation.

Trade-offs and Limitations

PhysCtrl's reliance on large synthetic datasets poses a scalability challenge when extending to more complex or diverse materials and phenomena. The system predominantly covers single-object dynamics and may not adequately model multi-object interactions or complex boundary conditions.

Conclusions and Future Work

PhysCtrl represents a significant stride toward incorporating realistic physics into video generation, addressing key limitations of previous models by enhancing physical plausibility and user control. The research lays a foundation for future work in modeling more intricate physical interactions and broadening the types of controllable video content.

Implications and Future Directions

Practically, PhysCtrl's ability to synthesize controllable, physics-grounded videos opens up new possibilities in fields such as animation, gaming, and virtual reality, where realistic physical interactions enhance user experiences. Future research directions include addressing current limitations by exploring multi-object dynamics and integrating more diverse physical phenomena into the generative process.