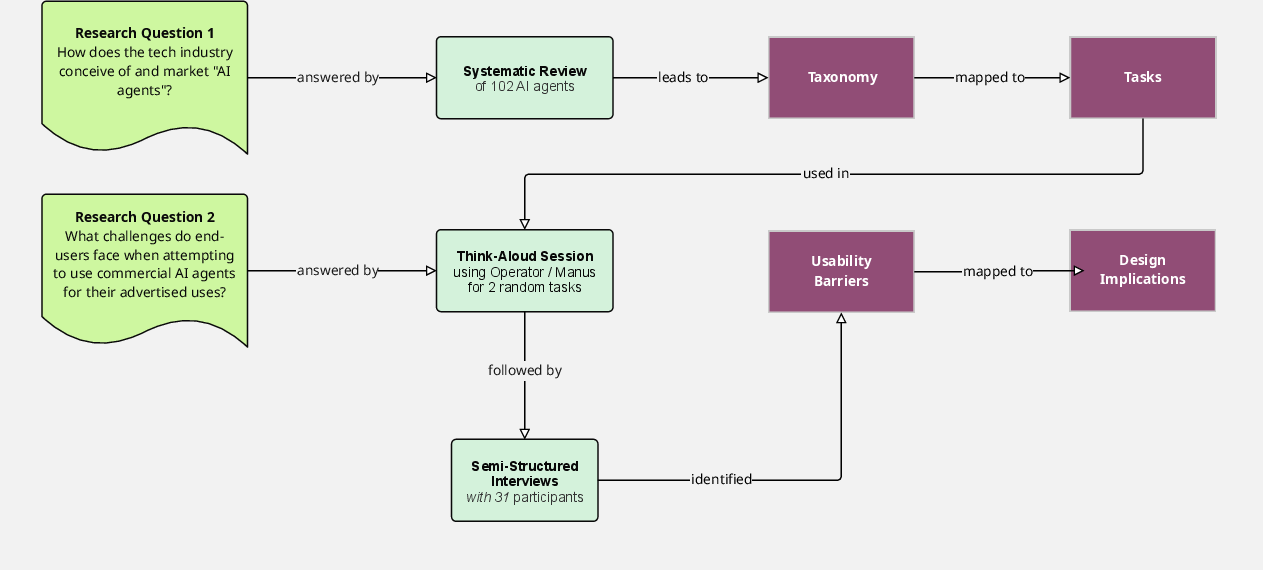

- The paper systematically investigates usability issues in AI agents by reviewing 102 products and conducting a user study of two platforms.

- It categorizes AI agent functions into orchestration, creation, and insight, providing a taxonomy to assess market claims.

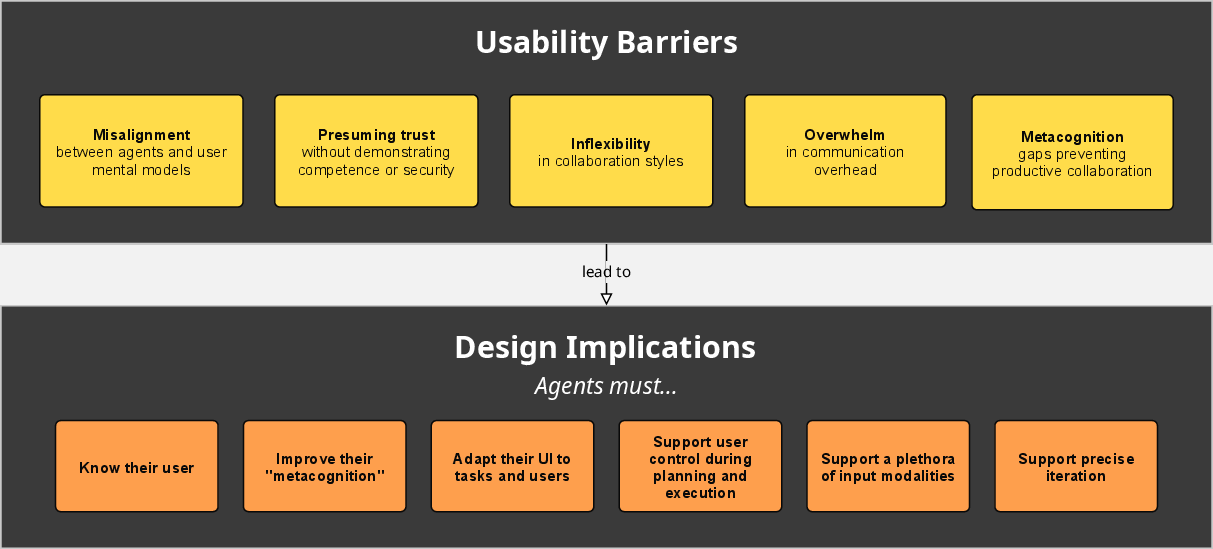

- Empirical tests reveal critical barriers such as misaligned mental models, excessive communication, and a lack of metacognitive features.

Usability Barriers in Commercial AI Agent Software: Industry Aspirations vs. User Realities

Introduction

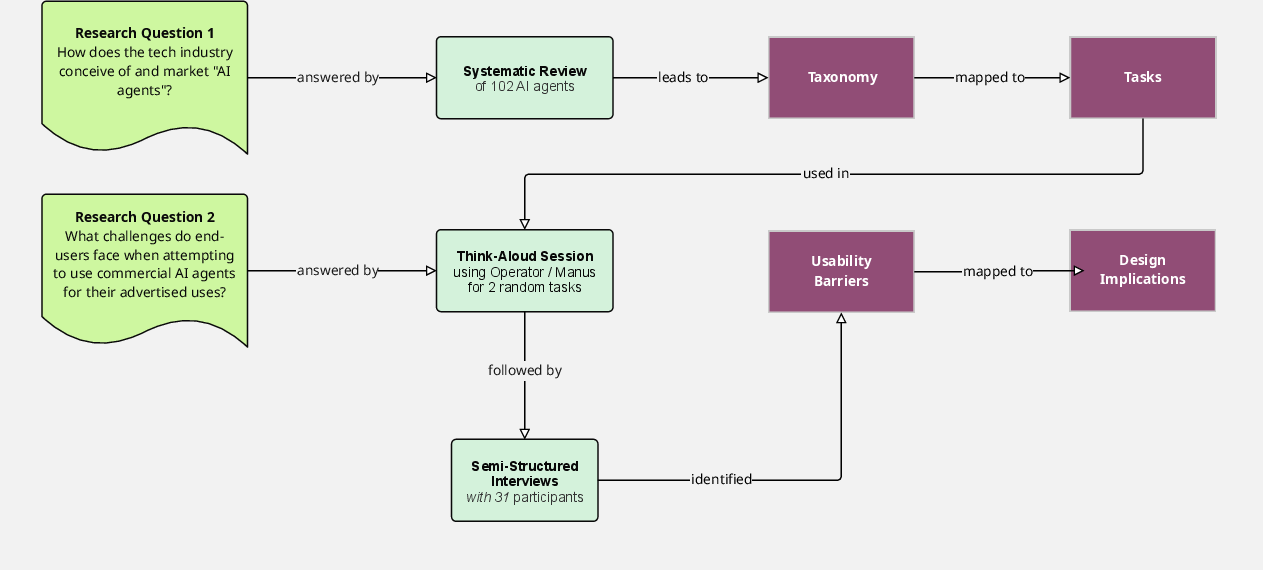

The paper "Why Johnny Can't Use Agents: Industry Aspirations vs. User Realities with AI Agent Software" (2509.14528) systematically investigates the disconnect between the marketed capabilities of commercial AI agents and the practical usability challenges faced by end-users. The authors employ a two-pronged methodology: a review of 102 commercial AI agent products to construct a taxonomy of use cases, and an empirical user study (N=31) evaluating two state-of-the-art agent platforms (Operator and Manus) across representative tasks. The work is situated at the intersection of HCI, empirical studies of Human-AI collaboration, and agent evaluation, and provides actionable design implications for next-generation agentic systems.

Figure 1: Overview of the research process, from systematic review to user study and design implications.

Taxonomy of Marketed AI Agent Use Cases

The systematic review identifies three orthogonal categories of AI agent capabilities:

- Orchestration: Agents that automate direct manipulation of GUIs, performing procedural actions on behalf of users (e.g., data entry, workflow automation).

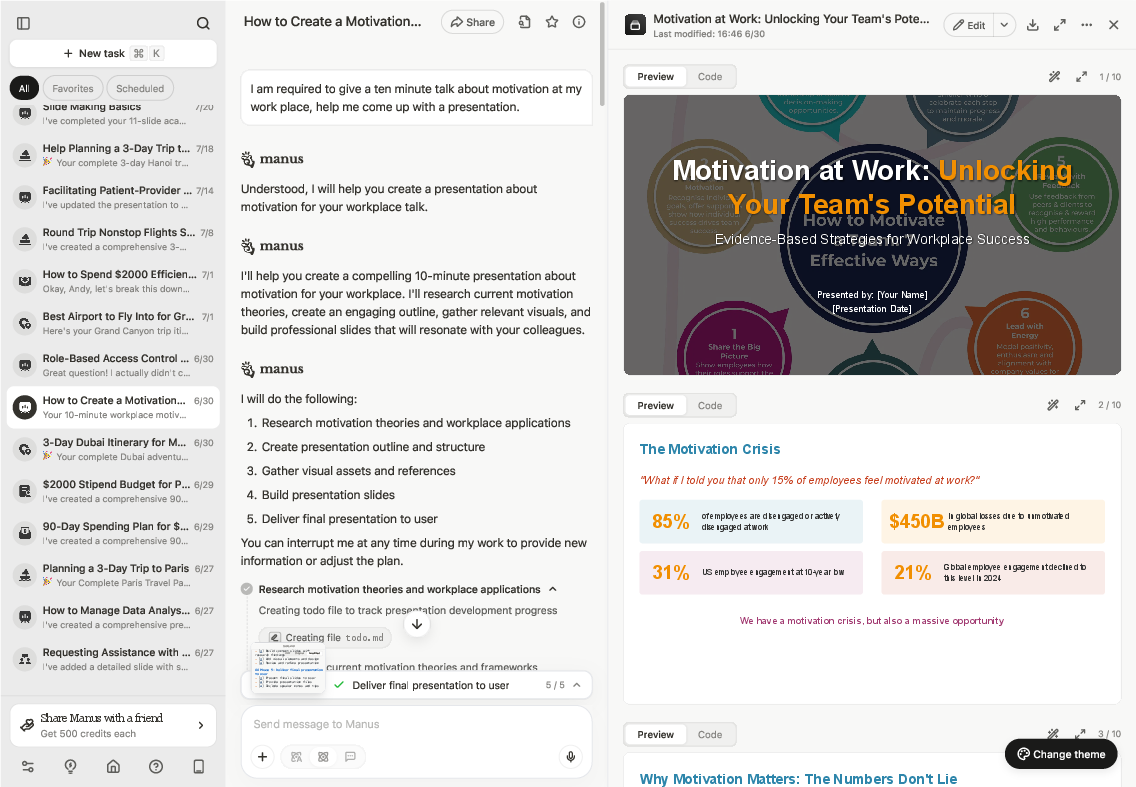

- Creation: Agents that generate structured content, such as documents, presentations, websites, or media, focusing on formatting and presentation.

- Insight: Agents that synthesize, analyze, and retrieve information, supporting decision-making and knowledge work.

This taxonomy is derived from open coding of product descriptions and user journeys, and is validated across a diverse set of commercial offerings. Notably, most agents are task- or domain-specific rather than general-purpose, and complex workflows often require a combination of orchestration, creation, and insight capabilities.
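As a minimal sketch of how the three categories can combine within a single workflow, the snippet below models them as composable flags. The `Capability` enum, the `required_capabilities` helper, and the example workflow steps are all hypothetical illustrations and are not taken from the paper.

```python
from enum import Flag, auto


class Capability(Flag):
    """The three taxonomy categories, modeled as combinable flags."""
    ORCHESTRATION = auto()  # procedural GUI actions on the user's behalf
    CREATION = auto()       # generating structured content
    INSIGHT = auto()        # synthesizing, analyzing, retrieving information


def required_capabilities(steps):
    """Return the union of capabilities needed across all workflow steps."""
    combined = Capability(0)  # start with no capabilities
    for _description, capability in steps:
        combined |= capability
    return combined


# Hypothetical workflow, labeled by hand purely for illustration.
example_workflow = [
    ("compare candidate destinations", Capability.INSIGHT),
    ("fill in a booking form", Capability.ORCHESTRATION),
    ("draft an itinerary document", Capability.CREATION),
]

needed = required_capabilities(example_workflow)
print(Capability.INSIGHT in needed)
```

Flags are used here rather than a plain enumeration because a single product or workflow may need more than one category at once, as the paragraph above notes.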

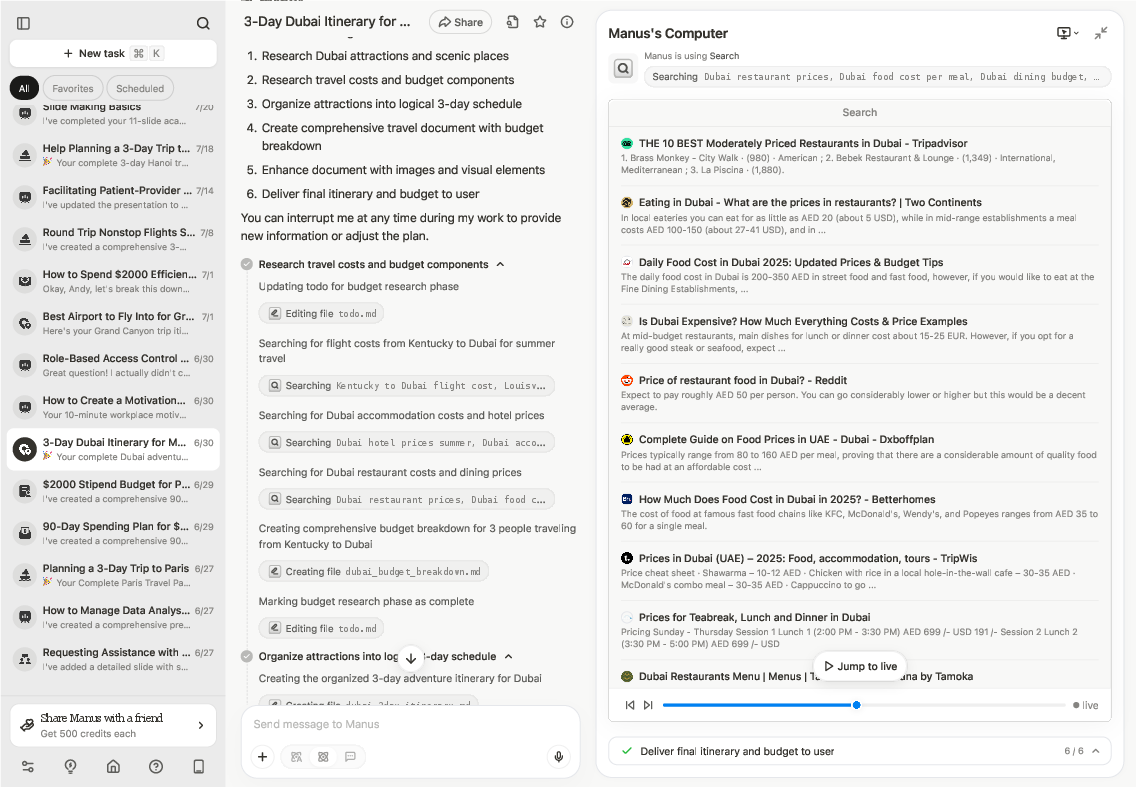

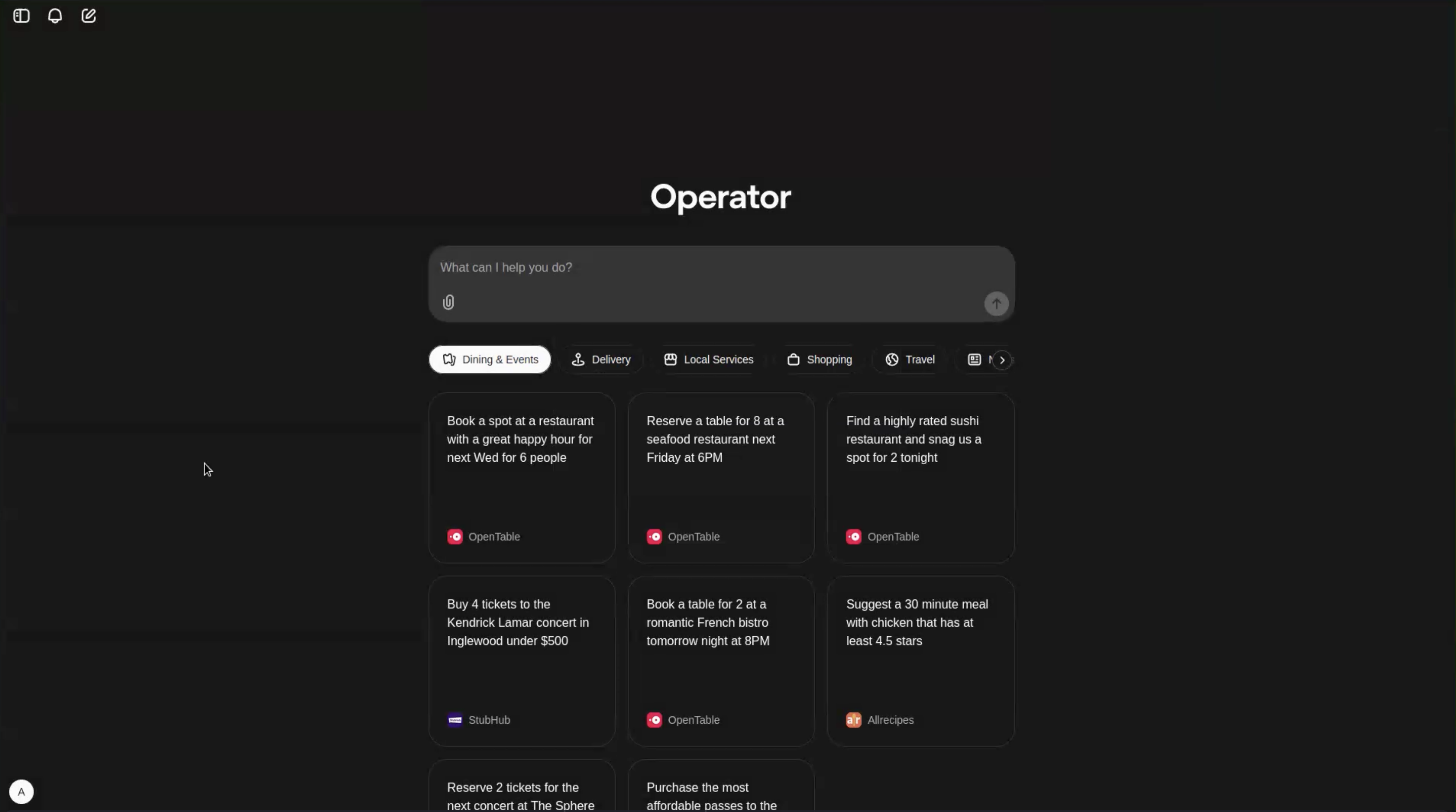

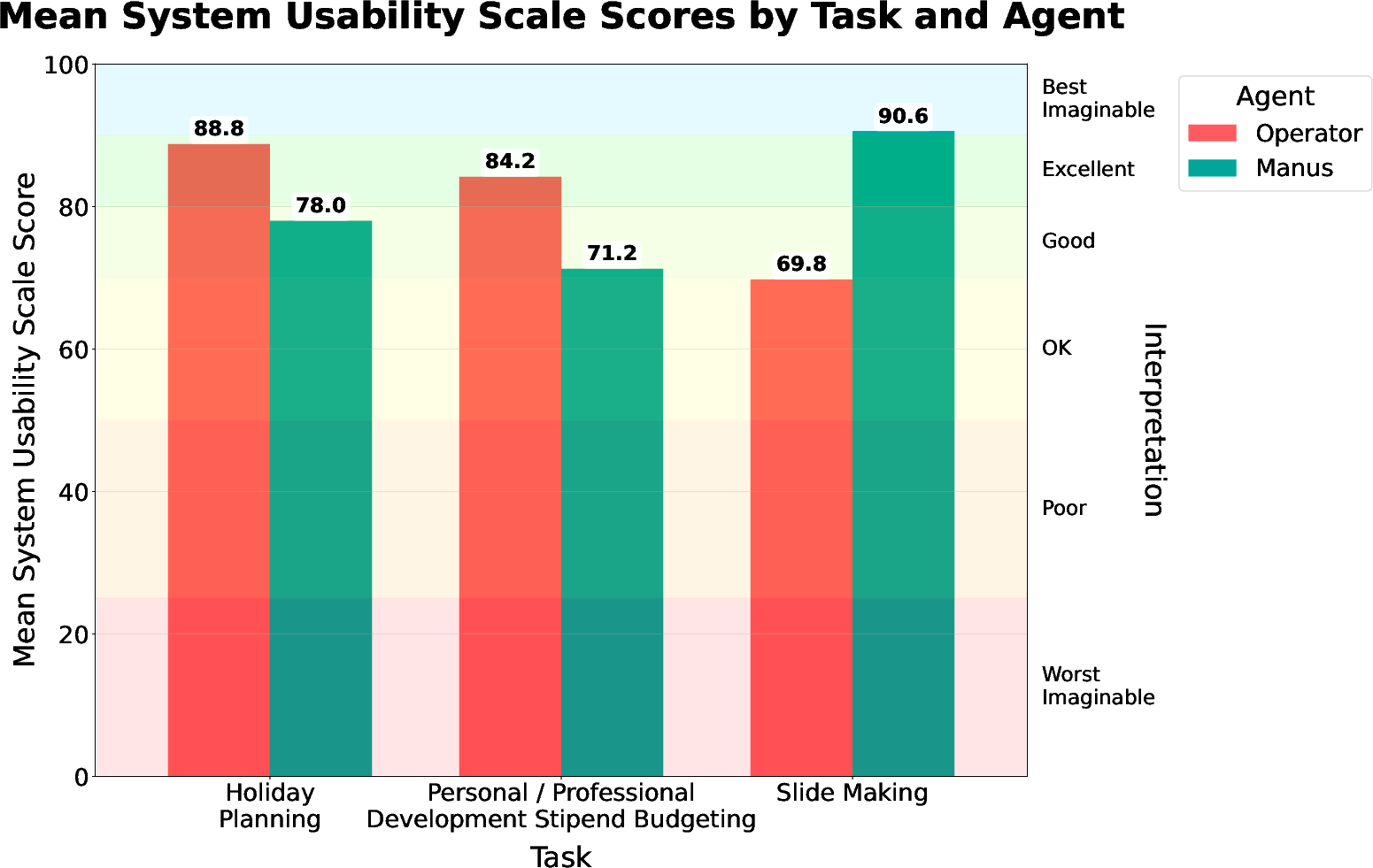

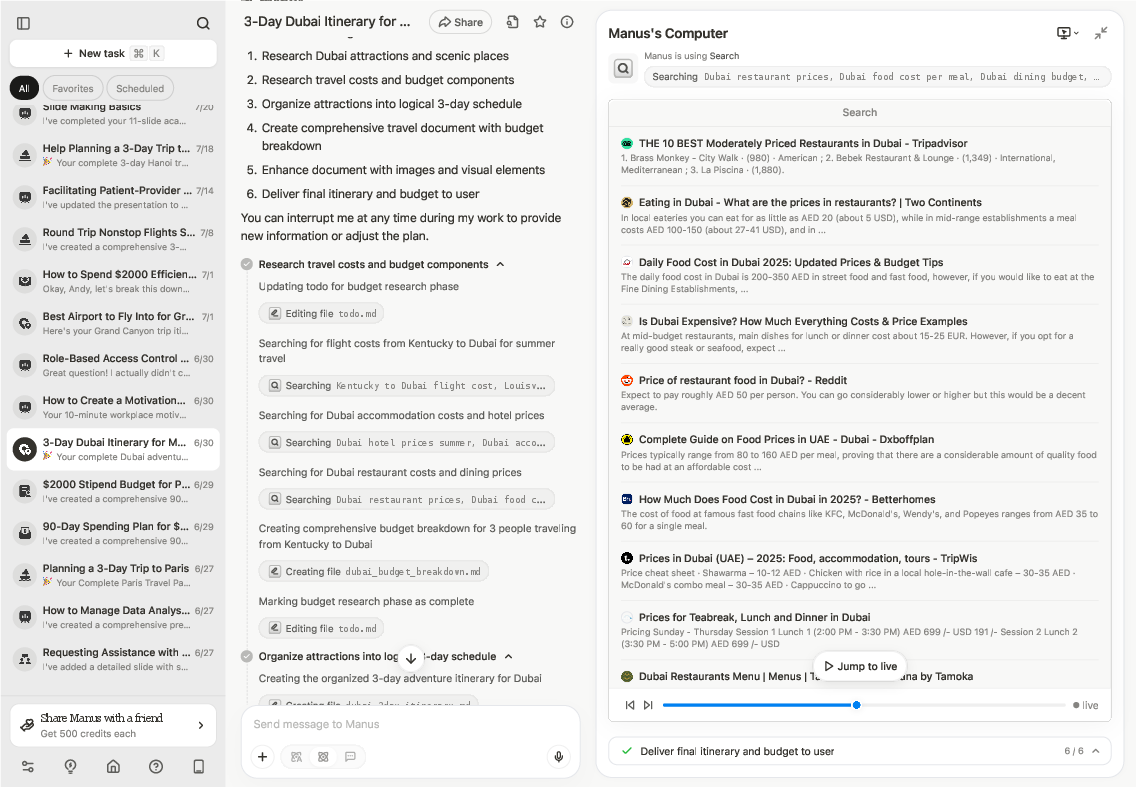

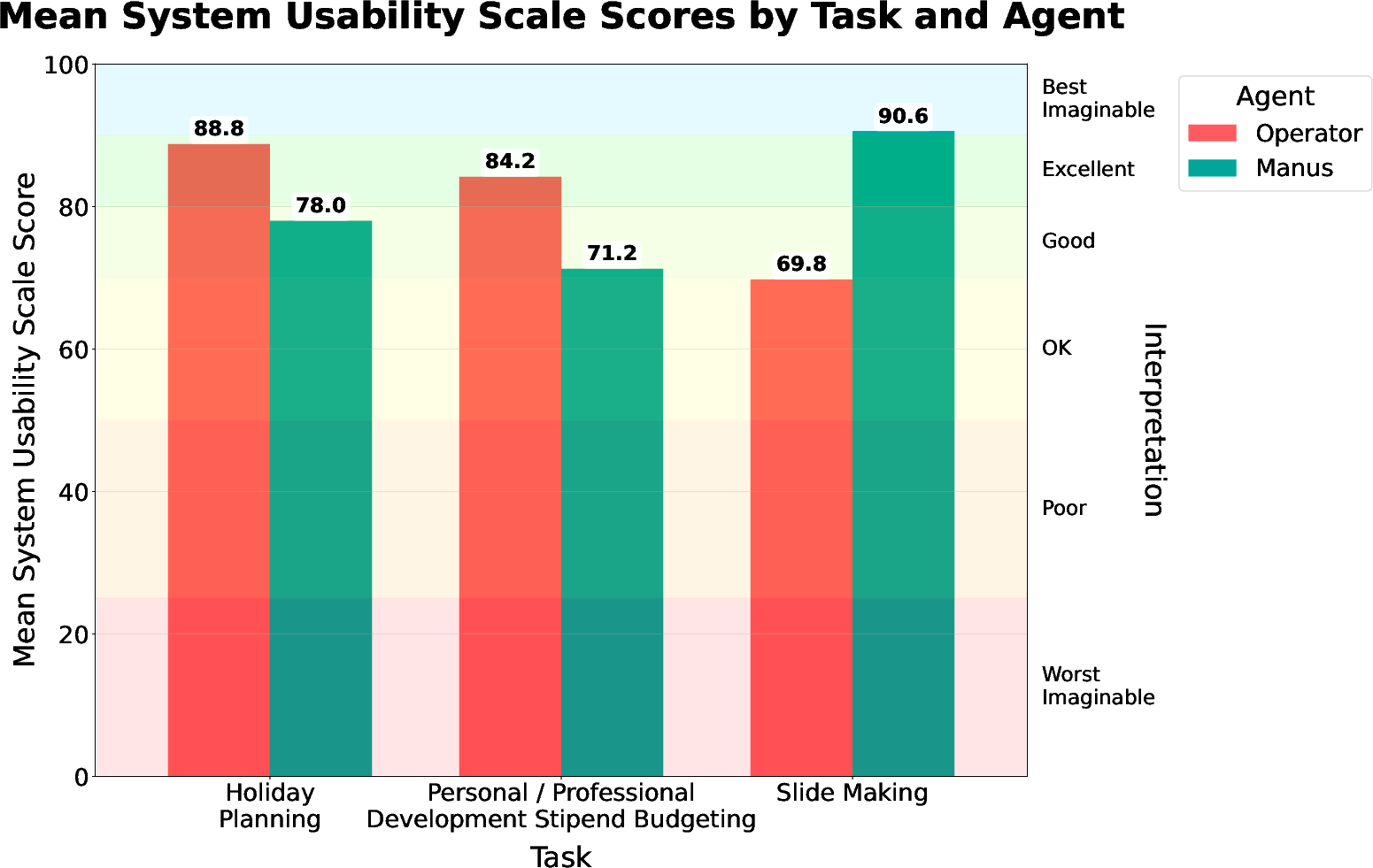

The user study employs a think-aloud protocol and semi-structured interviews, with participants attempting tasks representative of each taxonomy category using Operator and Manus. Tasks include holiday planning (orchestration), slide making (creation), and budgeting for personal/professional growth (insight). The agents selected support text-based prompting, computer control, and web search, and are accessible to non-technical users.

Figure 2: Manus agent operating on the Holiday Planning task.

Figure 3: Operator's initial screen, featuring prompt input and example tasks.

Usability Findings: Five Critical Barriers

Despite generally positive user impressions and successful task completion, the paper identifies five recurring usability barriers:

- Misalignment with User Mental Models: Users struggle to predict agent capabilities and outcomes, leading to "prompt gambling" and uncertainty in specification. The chat-based interface obscures affordances, and users lack effective strategies for decomposing tasks or iterating on outputs.

- Presumption of Trust Without Credibility: Agents request sensitive actions (e.g., credential entry, purchases) without establishing trust or demonstrating competence. Users are reluctant to delegate high-stakes tasks and desire more explicit elicitation of preferences and constraints.

- Inflexible Collaboration Styles: Agents do not accommodate diverse user preferences for involvement, proactivity, or control. Users vary in their desire for oversight, iteration, and manual intervention, but agents default to a "lone wolf" execution model.

- Overwhelming Communication Overhead: Agents produce excessive workflow logs and verbose outputs, exceeding user bandwidth for sensemaking. Preferences for progress reporting and interaction modalities are heterogeneous and often unmet.

- Lack of Metacognitive Abilities: Agents fail to recognize their own limitations, get stuck in repetitive error loops, and do not proactively seek clarification or critique. Users are unable to supervise agent actions when unfamiliar tools are invoked, and error recovery is opaque.

Figure 4: Manus working on the Creation task, Slide Making.

Figure 5: Mean System Usability Scale (SUS) scores by task and agent, indicating generally good to excellent usability, with a few notable exceptions.
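For readers unfamiliar with SUS, the sketch below shows the standard scoring procedure for the ten-item questionnaire and how per-group means like those in Figure 5 could be computed. It assumes the standard SUS form (ten items rated 1 to 5, with odd-numbered items positively worded and even-numbered items negatively worded). The function names and the placeholder records are hypothetical and are not data from the paper.

```python
from collections import defaultdict
from statistics import mean


def sus_score(responses):
    """Score one completed SUS questionnaire on the 0-100 scale.

    `responses` holds the ten item ratings in questionnaire order (1-5 each).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(r < 1 or r > 5 for r in responses):
        raise ValueError("each SUS response must be between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # odd items: positively worded
        else:
            total += 5 - rating   # even items: negatively worded
    return total * 2.5            # rescale the 0-40 sum to 0-100


def mean_sus_by_group(records):
    """Average SUS scores per (task, agent) pair."""
    grouped = defaultdict(list)
    for task, agent, responses in records:
        grouped[(task, agent)].append(sus_score(responses))
    return {group: mean(scores) for group, scores in grouped.items()}


# Placeholder responses for illustration only; not data from the study.
placeholder_records = [
    ("Holiday Planning", "Manus", [5, 1, 5, 1, 5, 1, 5, 1, 5, 1]),
    ("Holiday Planning", "Manus", [3, 3, 3, 3, 3, 3, 3, 3, 3, 3]),
]
print(mean_sus_by_group(placeholder_records))
```

Reversing the even-numbered items is what makes the score comparable across questionnaires: the negatively worded items would otherwise pull the total in the opposite direction.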

Design Implications for Next-Generation AI Agents

The authors distill six design recommendations to address the identified barriers.

Implications and Future Directions

The findings highlight a persistent gap between industry aspirations for agentic software and the realities of end-user interaction. While technical competence is advancing, human-centered dimensions—usability, interpretability, trust, and satisfaction—remain under-addressed. The paper corroborates longstanding critiques in HCI regarding opaque automation, initiative misalignment, and the need for mixed-initiative interfaces. The proposed design implications suggest a path forward for agentic systems that are adaptive, transparent, and collaborative.

Theoretically, the work underscores the importance of shared mental models, trust calibration, and metacognitive scaffolding in Human-AI teaming. Practically, it calls for evaluation frameworks that go beyond benchmark scores to incorporate real-world usability and user experience metrics. Future research should explore scalable methods for user modeling, agent self-reflection, and multimodal interaction, as well as longitudinal studies of agent adoption and adaptation in diverse sociocultural contexts.

Conclusion

This paper provides a rigorous taxonomy of commercial AI agent use cases and an empirical account of usability barriers in state-of-the-art agent platforms. The results demonstrate that while agents are increasingly capable, critical gaps in alignment, trust, collaboration, communication, and metacognition hinder their effective use by non-technical end-users. Addressing these barriers through adaptive, user-centered design is essential for realizing the potential of AI agents as collaborative thought partners in knowledge work.