An Evaluation Framework for LLM Agents in Professional Contexts

The paper "TheAgentCompany: Benchmarking LLM Agents on Consequential Real World Tasks" presents an evaluation framework for assessing how well AI agents, particularly those powered by LLMs, perform tasks characteristic of digital work environments. The research is motivated by the growing integration of AI into workflows across industries, accelerated by improvements in LLMs that promise to automate a range of work-related tasks. The principal contribution is TheAgentCompany, a benchmark that systematically evaluates AI agents on a series of professional tasks modeled after a digital workplace.

Framework Design and Methodology

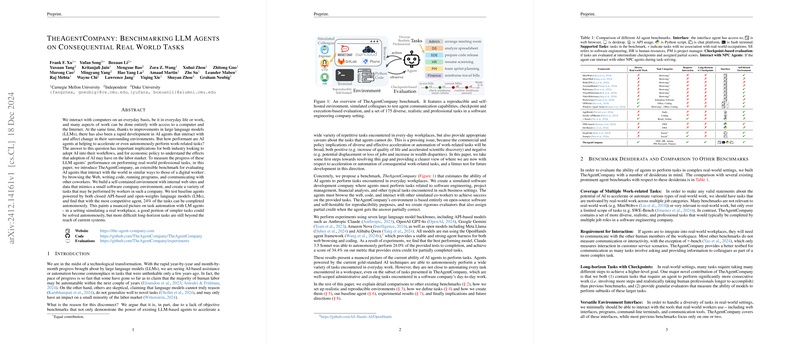

TheAgentCompany creates a controlled, reproducible environment that simulates operations within a small software company. The environment provides self-contained internal websites and databases, so agents can carry out tasks that mirror those of a digital worker. Key activities for agents include browsing the web, writing code, running programs, and communicating with simulated colleagues. The design addresses a notable gap in existing benchmarks, which often lack the complexity or realism needed to assess an AI's effectiveness in typical professional settings.

In setting up the environment, the authors prioritize a range of interface interactions that an AI might need in a real-world context, including using Python scripts, web browsers, and chat platforms. Moreover, the benchmark incorporates tasks from various domains such as software engineering, project management, finance, and human resources, thereby providing a diverse set of challenges for AI agents.

Experimental Results and Observations

Experiments conducted with a set of foundation LLMs using the OpenHands agent framework show that even the most capable model tested, Claude 3.5 Sonnet, autonomously completes only 24% of tasks. This underscores a significant gap between current AI capabilities and the comprehensive automation of professional tasks. Other models, such as Gemini 2.0 Flash and OpenAI's GPT-4o, show the same limitation, with performance varying notably across task types and domains.

A breakdown of task performance by category reveals uneven AI proficiency across domains. Tasks that involve social interaction or require intricate web navigation, such as those using RocketChat or ownCloud, pose substantial challenges. This suggests that while AI can handle straightforward software engineering tasks with relative competence, it falters in domains requiring nuanced understanding and interaction.
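As a rough illustration of the per-category breakdown described above, the sketch below computes a completion rate for each task category from a list of pass/fail outcomes. The function name `completion_rate_by_category`, the `(category, completed)` record layout, and the sample outcomes are all assumptions made for this example; they are not the paper's scoring code, and the numbers are made up rather than taken from its results.

```python
from collections import defaultdict


def completion_rate_by_category(results):
    """Map each category to the fraction of its tasks that were completed.

    `results` is an iterable of (category, completed) pairs, where
    `completed` is True when the agent finished the task on its own.
    """
    totals = defaultdict(int)
    done = defaultdict(int)
    for category, completed in results:
        totals[category] += 1
        if completed:
            done[category] += 1
    return {category: done[category] / totals[category] for category in totals}


# Made-up outcomes for illustration only; these are NOT results from the paper.
example_results = [
    ("software engineering", True),
    ("software engineering", False),
    ("human resources", False),
    ("finance", False),
    ("finance", True),
    ("project management", True),
]

rates = completion_rate_by_category(example_results)
for category, rate in sorted(rates.items()):
    print(f"{category}: {rate:.0%}")
```

Reporting a rate per category rather than a single overall figure is what makes gaps between domains visible, since a strong category can otherwise mask a weak one.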

Implications and Future Directions

The outcomes of this research have significant implications both practically and theoretically. Practically, they highlight the limitations of current AI in automating broad swathes of professional tasks, suggesting a need for continued advancements in AI's ability to understand and interact with complex environments and systems. Theoretically, the paper suggests that AI development might benefit from a broader focus beyond traditional coding and software tasks to include skills relevant to administrative and interactive tasks.

The authors outline several future directions, emphasizing expansion of the benchmark to tasks in other industries and to more complex creative or conceptual tasks that are not currently well represented. They also suggest developing tasks with less clearly defined goals to better reflect real-world conditions, where task ambiguity is common.

In conclusion, TheAgentCompany provides an evaluation tool that underscores both the capabilities and the limitations of contemporary AI agents and sets a baseline against which future improvements can be measured. It serves as a point of reference for both academic inquiry and practical development in AI, with the ultimate aim of agents that can more fully integrate into and contribute to professional environments.