- The paper introduces a concept-level memory system that abstracts and modularizes LLM reasoning to enable persistent, reusable, and compositional knowledge.

- It implements a structured Program Synthesis (PS) format and a flexible Open-Ended (OE) format, with explicit read/write operations, to boost inference efficiency and generalization.

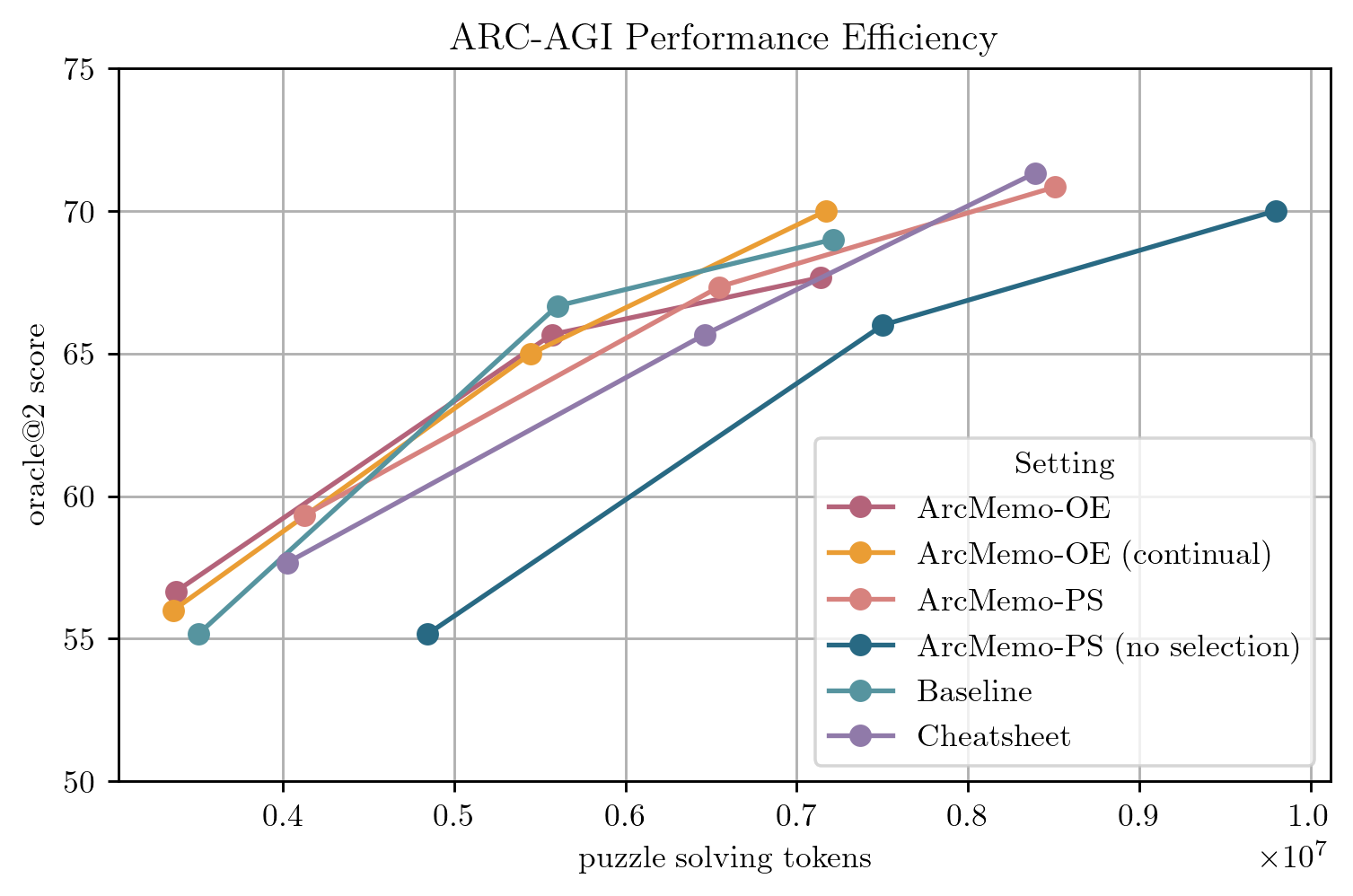

- Empirical evaluations on the ARC-AGI-1 benchmark show that ArcMemo-PS outperforms no-memory baselines and scales effectively with continual learning.

ArcMemo: Abstract Reasoning Composition with Lifelong LLM Memory

Motivation and Problem Setting

LLMs have demonstrated strong performance on reasoning-intensive tasks, but their inference-time discoveries are ephemeral: once the context window is reset, any patterns or strategies uncovered are lost. Human problem solving works differently, retaining abstracted insights and modular concepts and recombining them for future tasks. Existing external memory approaches for LLMs have primarily focused on instance-level storage (e.g., query/response pairs or tightly coupled summaries), which limits generalization and scalability. ArcMemo addresses this by introducing a concept-level memory system that abstracts and modularizes reasoning patterns, enabling persistent, reusable, and compositional knowledge for continual test-time learning.

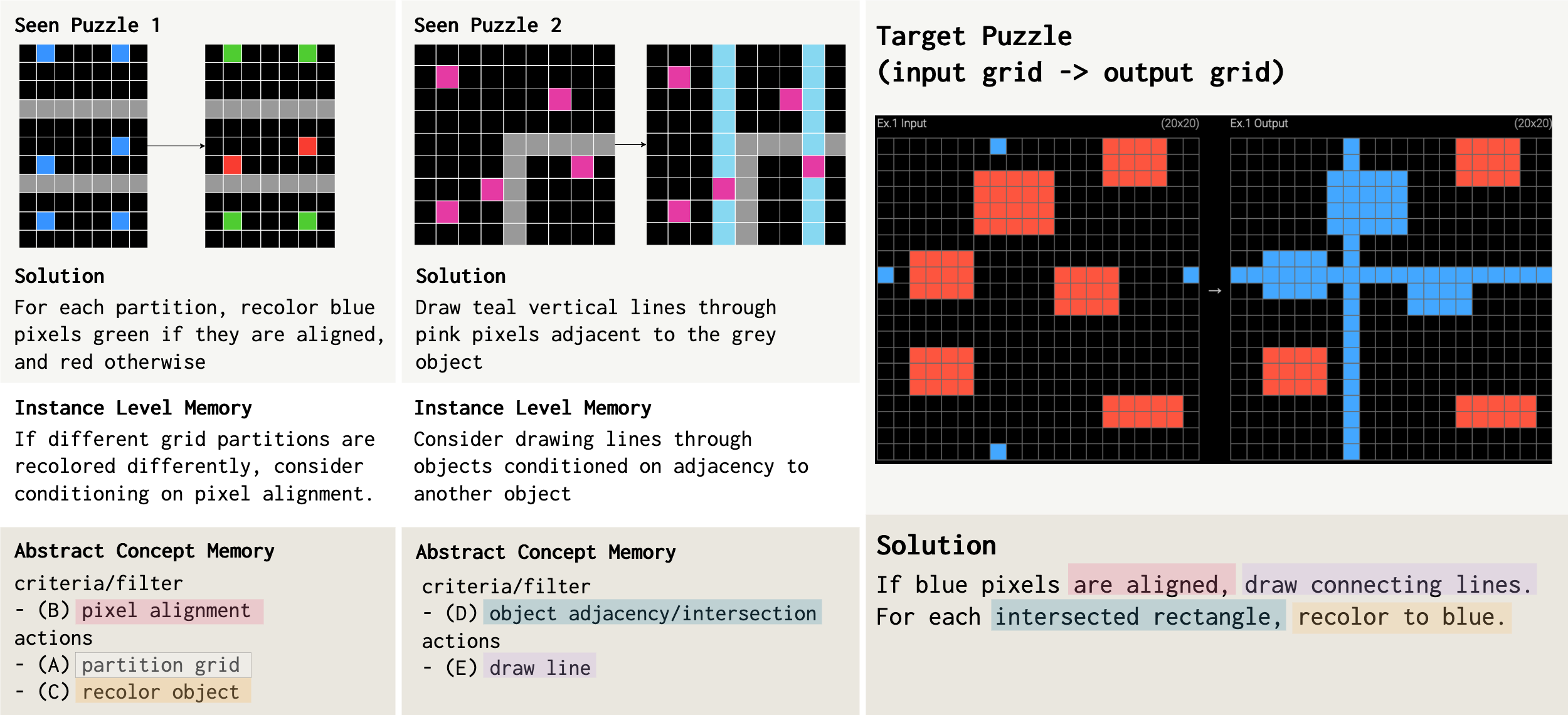

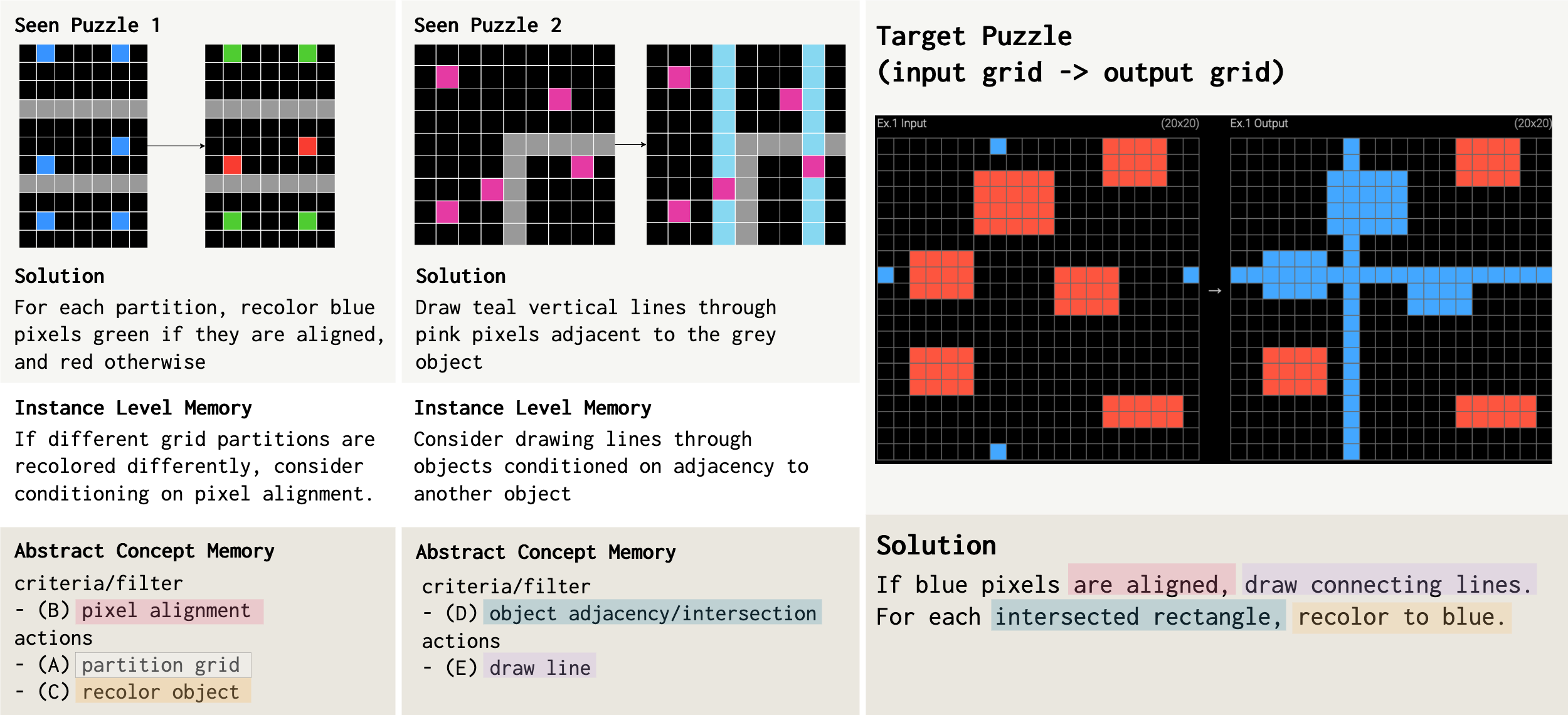

Figure 1: Instance-level memory stores tightly coupled rules, while abstract memory decomposes and modularizes concepts for flexible recombination across tasks.

ArcMemo Framework: Abstraction and Modularity

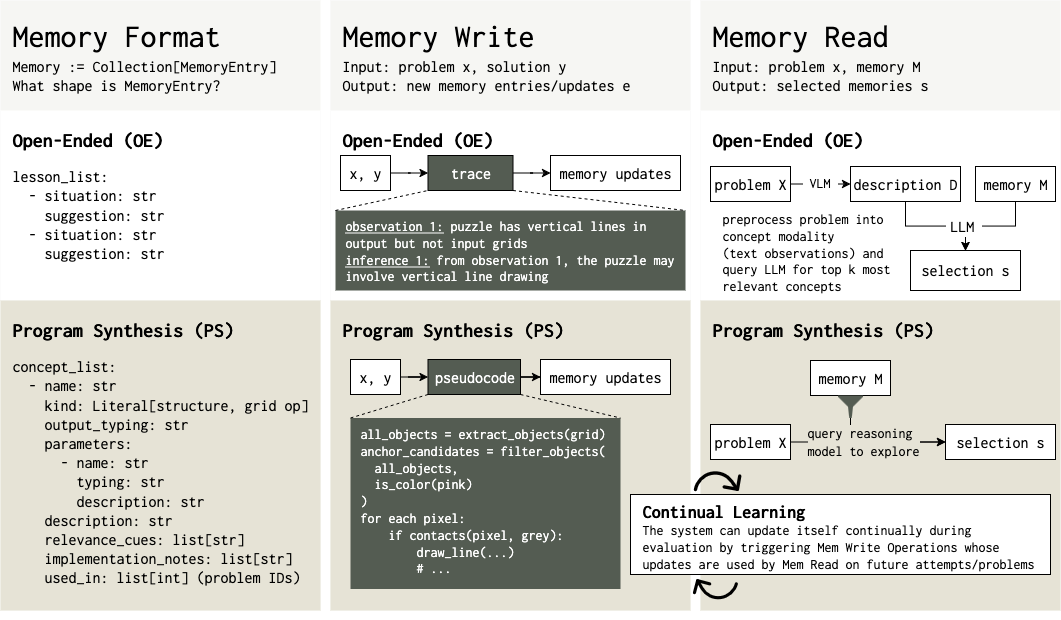

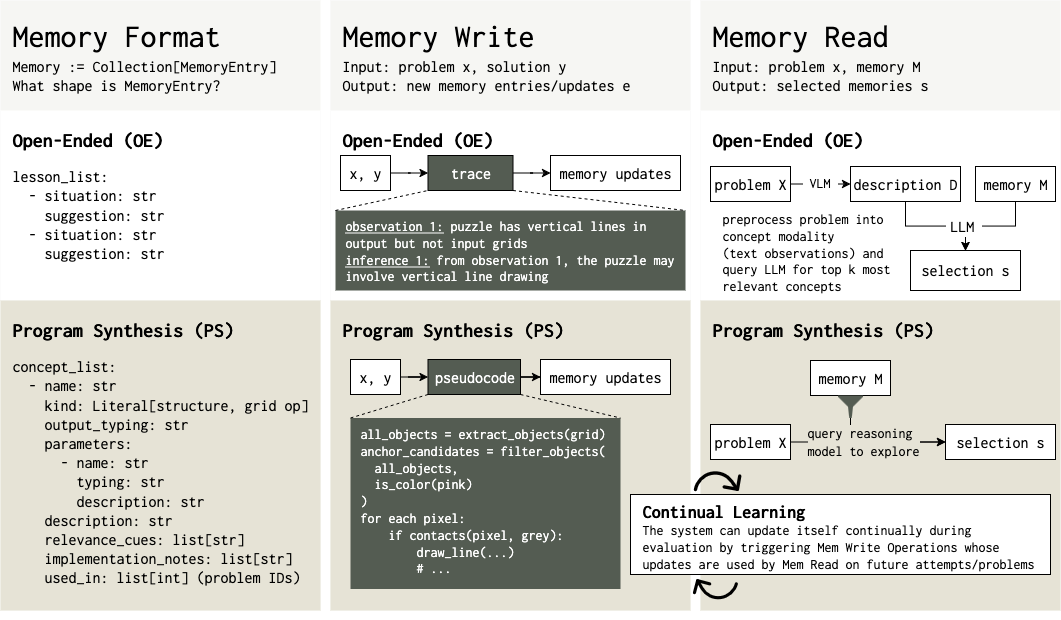

ArcMemo formalizes external memory as a collection of entries with explicit read and write operations. The core design axes are:

- Memory Format: What is stored (instance-level entries vs. abstract, modular concepts).

- Memory Write: How solution traces are abstracted and persisted.

- Memory Read: How relevant concepts are retrieved and integrated for new queries.
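
The three design axes above can be read as a small interface. The following is a minimal sketch, not the paper's implementation: the `Entry` and `Memory` classes and the `split_sentences` and `keyword_select` helpers are hypothetical stand-ins for what would be LLM-driven abstraction and selection steps.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Entry:
    """One memory entry; `text` is a placeholder payload for any memory format."""
    text: str


@dataclass
class Memory:
    """External memory as a plain collection with explicit write and read."""
    entries: List[Entry] = field(default_factory=list)

    def write(self, trace: str, abstract: Callable[[str], List[Entry]]) -> None:
        # Memory Write: turn a solution trace into entries and persist them.
        self.entries.extend(abstract(trace))

    def read(self, query: str,
             select: Callable[[str, List[Entry]], List[Entry]]) -> List[Entry]:
        # Memory Read: pick the entries to place in context for a new query.
        return select(query, self.entries)


# Hypothetical stand-ins for the model-driven steps (illustration only).
def split_sentences(trace: str) -> List[Entry]:
    return [Entry(s.strip()) for s in trace.split(".") if s.strip()]


def keyword_select(query: str, entries: List[Entry]) -> List[Entry]:
    words = set(query.lower().split())
    return [e for e in entries if words & set(e.text.lower().split())]


memory = Memory()
memory.write("Detect the grid symmetry. Recolor the largest object.", split_sentences)
hits = memory.read("which object is largest", keyword_select)
print([e.text for e in hits])  # ['Recolor the largest object']
```

Passing the abstraction and selection functions in as arguments keeps the memory format independent of how entries are written and read, which mirrors the separation between the three axes.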

The framework supports two memory formats:

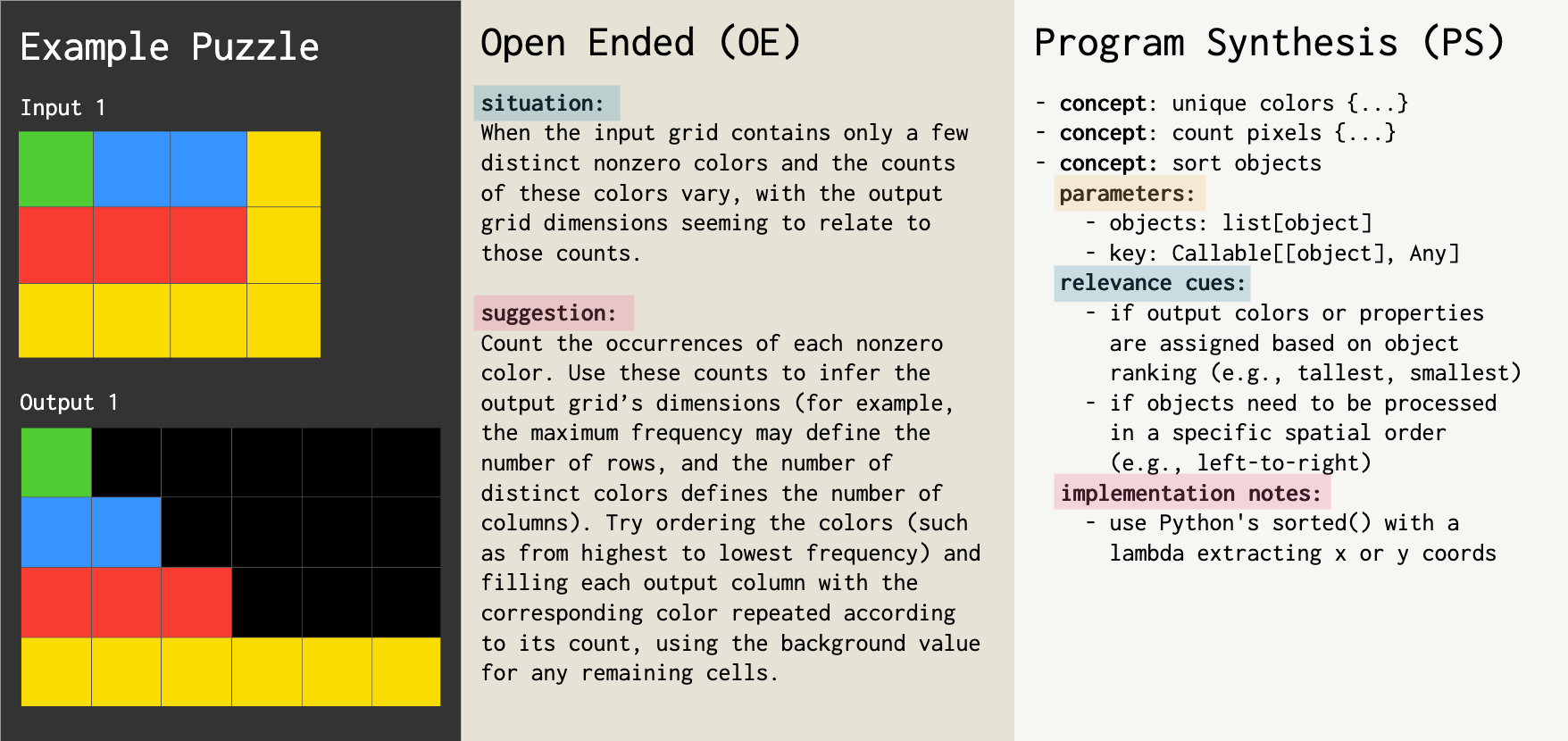

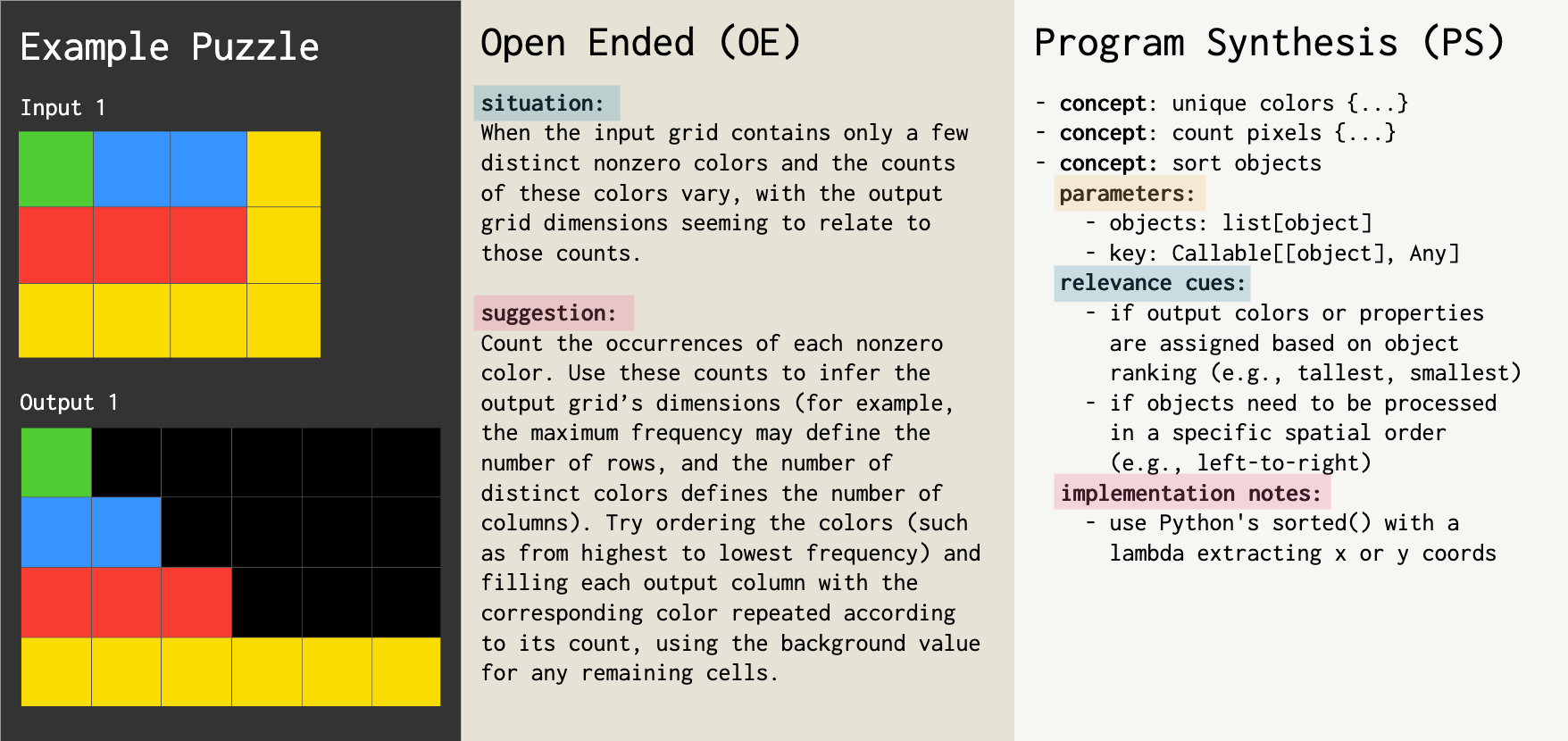

- Open-Ended (OE): Minimal structure, with each entry as a (situation, suggestion) pair. This format is flexible but can lead to overspecification.

- Program Synthesis (PS): Structured, parameterized concepts inspired by software engineering, with type annotations and higher-order functions to promote abstraction and modularity.
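
To make the contrast between the two formats concrete, here is one possible way to represent them as data structures. This is a sketch under assumptions: the field names (`parameters`, `output_type`, `description`), the free-text type strings, and the rule for detecting higher-order concepts are illustrative choices, not the schema used by ArcMemo.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class OEConcept:
    """Open-Ended entry: a (situation, suggestion) pair."""
    situation: str
    suggestion: str


@dataclass
class Parameter:
    name: str
    type: str  # free-text type annotation, e.g. "Grid" or "Object -> Object"


@dataclass
class PSConcept:
    """Program-Synthesis-style entry: a named, typed, parameterized routine."""
    name: str
    parameters: List[Parameter] = field(default_factory=list)
    output_type: str = ""
    description: str = ""

    def is_higher_order(self) -> bool:
        # Assumption: a concept is higher-order if any parameter is itself a routine.
        return any("->" in p.type for p in self.parameters)

    def signature(self) -> str:
        args = ", ".join(f"{p.name}: {p.type}" for p in self.parameters)
        return f"{self.name}({args}) -> {self.output_type}"


oe = OEConcept(
    situation="objects differ only in color",
    suggestion="consider recoloring objects by size",
)
apply_each = PSConcept(
    name="apply_to_each_object",
    parameters=[Parameter("grid", "Grid"), Parameter("transform", "Object -> Object")],
    output_type="Grid",
)
print(apply_each.signature())
# apply_to_each_object(grid: Grid, transform: Object -> Object) -> Grid
print(apply_each.is_higher_order())  # True
```

The `transform` parameter illustrates why typed interfaces help composition: any stored concept whose signature matches `Object -> Object` is a candidate to fill that slot.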

Figure 2: ArcMemo’s method diagram highlights abstraction and modularity, with parameterization and typed interfaces enabling compositional reasoning.

Figure 3: OE concepts defer abstraction to the model, while PS concepts enforce structure and parameterization, supporting higher-order behavior and modularity.

Memory Operations: Abstraction, Selection, and Continual Learning

Memory Write: Concept Abstraction

- OE Abstraction: Summarizes solution traces into reusable (situation, suggestion) pairs, using post-hoc derivations to disentangle context and generalize ideas.

- PS Abstraction: Converts solutions into parameterized routines, leveraging pseudocode to focus on high-level operations. The abstraction process is memory-aware, encouraging reuse and revision of existing concepts, and supports higher-order functions for further generalization.
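
The memory-aware aspect of PS abstraction, where existing concepts are reused and revised instead of duplicated, can be sketched as a merge step. The example assumes the model has already proposed concepts as dictionaries; the `name`, `parameters`, and `cues` keys and the `write_concepts` function are hypothetical, and the actual revision logic in ArcMemo is performed by the model, not by a fixed rule like this one.

```python
from typing import Dict, List


def write_concepts(memory: Dict[str, dict], proposed: List[dict]) -> Dict[str, dict]:
    """Merge proposed concepts into memory, revising entries that already exist."""
    for concept in proposed:
        name = concept["name"]
        if name not in memory:
            # New concept: store a copy so later revisions do not alias the input.
            memory[name] = {
                "parameters": list(concept.get("parameters", [])),
                "cues": list(concept.get("cues", [])),
            }
            continue
        existing = memory[name]
        # Revision: add unseen parameters and cues, preserving insertion order.
        for key in ("parameters", "cues"):
            for item in concept.get(key, []):
                if item not in existing[key]:
                    existing[key].append(item)
    return memory


memory: Dict[str, dict] = {}
write_concepts(memory, [
    {"name": "recolor", "parameters": ["color"], "cues": ["objects change color"]},
])
write_concepts(memory, [
    {"name": "recolor", "parameters": ["color", "selector"],
     "cues": ["only some objects change"]},
])
print(memory["recolor"]["parameters"])  # ['color', 'selector']
print(len(memory))  # 1
```

The second write generalizes the existing `recolor` concept with a `selector` parameter instead of creating a near-duplicate entry, which is the behavior the memory-aware abstraction is meant to encourage.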

Memory Read: Concept Selection

- OE Selection: Uses a vision-LLM to caption the input, then matches the caption against stored situations to select the top-k relevant concepts. Because the inputs are pixel grids, not natural language, this captioning step puts the query in the same textual form as the memory entries.

- PS Selection: Employs reasoning-based (System 2) selection, where the model explores the problem space, identifies relevant concepts via cues and type annotations, and recursively fills in parameters, supporting compositional assembly of solutions.
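
The top-k matching in OE selection can be illustrated with a toy retriever. This sketch assumes the caption has already been produced and swaps in Jaccard token overlap for the model-based matching, so `jaccard` and `select_top_k` are hypothetical helpers that show the shape of the step, not how ArcMemo scores relevance.

```python
from typing import List, Tuple


def jaccard(a: str, b: str) -> float:
    """Token-overlap similarity; a crude stand-in for model-based matching."""
    ta, tb = set(a.lower().split()), set(b.lower().split())
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def select_top_k(caption: str, concepts: List[Tuple[str, str]],
                 k: int) -> List[Tuple[str, str]]:
    """Return the k (situation, suggestion) pairs whose situation best matches."""
    ranked = sorted(concepts, key=lambda c: jaccard(caption, c[0]), reverse=True)
    return ranked[:k]


concepts = [
    ("grid has a vertical line of symmetry", "mirror the left half onto the right"),
    ("objects differ only in color", "recolor by object size"),
    ("a single object is enclosed by a border", "crop to the border"),
]
caption = "several objects that differ in color and size"
print(select_top_k(caption, concepts, k=1))
# [('objects differ only in color', 'recolor by object size')]
```

Only the selected suggestions are placed in the prompt, which is what keeps the context bounded as memory grows.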

Continual Concept Learning

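ArcMemo supports continual updates at test time, ingesting new solution traces and abstracting additional concepts. This enables self-improvement as new problems are solved, with memory growing and evolving over time. Because each update depends on which problems have already been solved, the system is sensitive to problem order. This introduces an accuracy-throughput trade-off in batched inference: larger batches process more problems in parallel, but problems in the same batch cannot benefit from concepts learned from one another.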
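
The order sensitivity and the batching trade-off can be seen in a toy simulation. Everything here is an assumption made for illustration: each problem is reduced to a pair (concept it needs, concept it teaches), a problem is "solved" exactly when its needed concept is in memory, and memory is written once per batch. Real solve rates are of course not deterministic like this.

```python
from typing import List, Optional, Set, Tuple

# Toy problem: (required concept or None, concept learned when solved). Hypothetical.
Problem = Tuple[Optional[str], str]


def run(problems: List[Problem], batch_size: int) -> int:
    """Count solved problems when memory is updated only between batches."""
    memory: Set[str] = set()
    solved = 0
    for start in range(0, len(problems), batch_size):
        batch = problems[start:start + batch_size]
        learned: Set[str] = set()
        for needs, teaches in batch:
            # Every problem in a batch sees the same memory snapshot.
            if needs is None or needs in memory:
                solved += 1
                learned.add(teaches)
        memory |= learned  # one memory write per batch
    return solved


chain = [(None, "a"), ("a", "b"), ("b", "c"), ("c", "d")]
print(run(chain, batch_size=1))                  # 4
print(run(chain, batch_size=2))                  # 1
print(run(list(reversed(chain)), batch_size=1))  # 1
```

With sequential updates and a favorable order, each solved problem unlocks the next. Larger batches or an unfavorable order break the chain, since a concept is not available to problems that were attempted before it was written.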

Experimental Evaluation

Benchmark and Setup

ArcMemo is evaluated on the ARC-AGI-1 benchmark, where each puzzle requires inferring a transformation rule from example input/output pixel grids. The evaluation uses a subset of 100 puzzles from the public validation split, with memory initialized from 160 seed solutions. The main backbone is OpenAI’s o4-mini model, with auxiliary tasks handled by GPT-4.1.

Main Results

Continual Learning

Continual memory updates during evaluation yield further improvements, especially at higher retry depths. This supports the hypothesis that solving more problems and abstracting new patterns enables further solutions, manifesting a form of self-improvement.

Implementation Considerations

- Memory Format: The PS format requires careful design of parameterization and type annotations. Higher-order functions should be supported to maximize abstraction.

- Abstraction Pipeline: For PS, preprocess solutions into pseudocode before abstraction. Use few-shot demonstrations and comprehensive instructions to scaffold memory writing.

- Selection Mechanism: For OE, leverage VLMs for input captioning and semantic matching. For PS, implement a recursive reasoning-based selection that can handle compositional assembly.

- Scalability: Selective retrieval is essential to prevent context window overflow and maintain efficiency as memory grows.

- Feedback Integration: The system requires some form of test-time feedback (e.g., test cases, self-reflection) to ensure only productive patterns are persisted.

- Order Sensitivity: Continual updates introduce dependency on problem order; batching and curriculum strategies may be needed for robustness.
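
The feedback-integration point above can be sketched as a gate in front of the memory write. This example assumes that feedback comes from checking a candidate program against a puzzle's known input/output pairs; `passes_examples`, `maybe_persist`, and the two candidate functions are hypothetical names used only for illustration.

```python
from typing import Callable, List, Tuple

Grid = List[List[int]]


def passes_examples(program: Callable[[Grid], Grid],
                    examples: List[Tuple[Grid, Grid]]) -> bool:
    """True if the candidate reproduces every known input/output pair."""
    try:
        return all(program(x) == y for x, y in examples)
    except Exception:
        return False  # a crashing candidate counts as a failure


def maybe_persist(memory: List[str], trace: str,
                  program: Callable[[Grid], Grid],
                  examples: List[Tuple[Grid, Grid]]) -> bool:
    """Append the trace to memory only when the candidate is verified."""
    if passes_examples(program, examples):
        memory.append(trace)
        return True
    return False


def flip_horizontal(grid: Grid) -> Grid:
    return [row[::-1] for row in grid]


def identity(grid: Grid) -> Grid:
    return grid


examples = [([[1, 0], [2, 0]], [[0, 1], [0, 2]])]
memory: List[str] = []
maybe_persist(memory, "mirror each row", flip_horizontal, examples)  # persisted
maybe_persist(memory, "copy the input", identity, examples)          # rejected
print(memory)  # ['mirror each row']
```

Gating the write this way keeps unverified patterns out of memory, at the cost of discarding traces that were partly useful but did not yield a passing solution.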

Implications and Future Directions

ArcMemo demonstrates that abstract, modular memory enables LLMs to persist and reuse reasoning patterns, supporting continual learning and compositional generalization without weight updates. The approach is particularly effective when inference compute is limited, because memory can substitute for redundant exploration. The findings suggest several avenues for future research:

- Hierarchical Memory Consolidation: Developing mechanisms for memory consolidation and hierarchical abstraction to further improve scalability and robustness.

- Order-Robust Update Strategies: Reducing sensitivity to problem order via curriculum learning or batch-aware updates.

- Broader Evaluation: Extending to more challenging benchmarks and real-world domains to quantify upper bounds of memory-augmented reasoning.

- Optimization of Representations: Exploring richer abstraction methods, retrieval strategies, and memory organization for improved performance.

Conclusion

ArcMemo introduces a principled framework for abstract, modular, and continually updatable memory in LLMs, enabling persistent compositional reasoning and test-time self-improvement. The empirical results on ARC-AGI-1 validate the efficacy of concept-level memory, with strong gains over instance-level and cheatsheet-style baselines. The work opens new directions for lifelong learning in LLMs, emphasizing abstraction, modularity, and efficient memory management as key ingredients for scalable, generalizable reasoning systems.