- The paper introduces M2N2, which employs dynamic merging boundaries, resource competition, and attraction heuristics to effectively fuse models.

- The methodology enables gradient-free integration of models trained on different tasks while preserving diversity and robustness.

- Empirical tests on MNIST classifiers, LLMs, and diffusion models demonstrate significant improvements in computational efficiency and accuracy.

Competition and Attraction Improve Model Fusion: An Expert Analysis

Introduction and Motivation

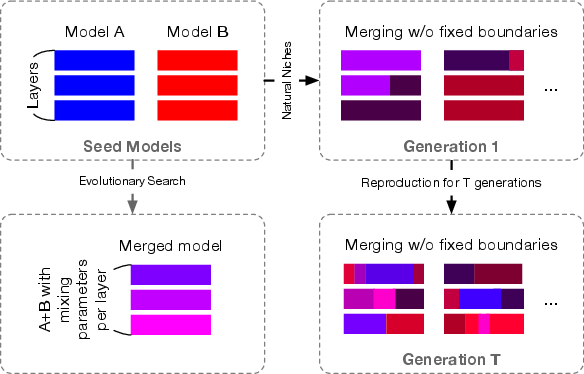

The proliferation of open-source generative models has led to a vast ecosystem of specialized models, each fine-tuned for particular domains or tasks. Model merging, the process of integrating the knowledge of multiple models into a single unified model, has emerged as a practical alternative to traditional fine-tuning, especially when access to original training data is restricted or when combining models trained on disparate objectives. However, existing model merging techniques are constrained by the need for manual parameter grouping and lack robust mechanisms for maintaining diversity and selecting optimal parent pairs for fusion.

This paper introduces Model Merging of Natural Niches (M2N2), an evolutionary algorithm that addresses these limitations through three core innovations: (1) dynamic adjustment of merging boundaries, (2) diversity preservation via resource competition, and (3) a heuristic attraction metric for parent selection. The method is evaluated across a spectrum of tasks, from evolving MNIST classifiers from scratch to merging LLMs and diffusion-based image generators, demonstrating both computational efficiency and state-of-the-art performance.

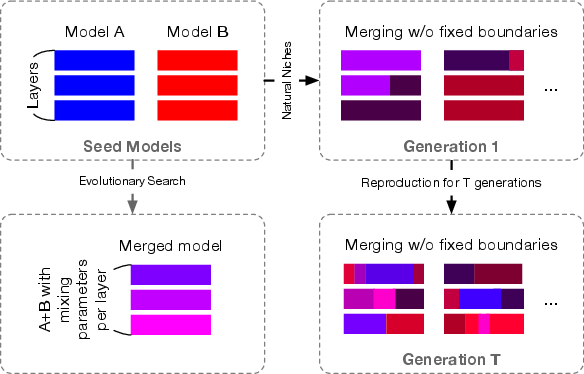

Figure 1: Previous methods use fixed parameter groupings for merging (left), while M2N2 explores a progressively larger set of boundaries and coefficients via random split-points (right).

Methodology: M2N2 Algorithm

Dynamic Merging Boundaries

Traditional model merging approaches require manual partitioning of model parameters (e.g., by layer), which restricts the search space and may miss optimal combinations. M2N2 eliminates this constraint by evolving both the merging coefficients and the split-points that define parameter boundaries. At each iteration, two models are selected from an archive, and a random split-point and mixing ratio are sampled. The resulting merged model concatenates interpolated parameter segments from both parents, enabling a flexible and progressively expanding search space.
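The split-point merge described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it assumes parameters are flattened into a plain list, uses linear interpolation, and swaps the mixing weights on either side of the split. The names `merge_at_split` and `sample_child` are hypothetical.

```python
import random


def merge_at_split(parent_a, parent_b, mix, split):
    """Merge two flat parameter lists around a single split-point.

    Before `split`, parent_a is weighted by `mix`; from `split` onward the
    weights are swapped. Linear interpolation and the weight swap are
    assumptions of this sketch.
    """
    if len(parent_a) != len(parent_b):
        raise ValueError("parents must have the same number of parameters")
    head = [mix * a + (1.0 - mix) * b
            for a, b in zip(parent_a[:split], parent_b[:split])]
    tail = [(1.0 - mix) * a + mix * b
            for a, b in zip(parent_a[split:], parent_b[split:])]
    # The child concatenates the two interpolated segments.
    return head + tail


def sample_child(parent_a, parent_b, rng):
    """Sample a random mixing ratio and split-point, then merge."""
    mix = rng.random()                        # mixing ratio in [0, 1)
    split = rng.randrange(len(parent_a) + 1)  # split index in [0, n]
    return merge_at_split(parent_a, parent_b, mix, split)


child = merge_at_split([0.0] * 4, [1.0] * 4, mix=0.25, split=2)
random_child = sample_child([0.0] * 4, [1.0] * 4, random.Random(0))
```

Because each child may itself become a parent, repeated merges with different split-points accumulate several boundaries over time, which is one way to read the "progressively expanding" search space.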

Diversity Preservation via Resource Competition

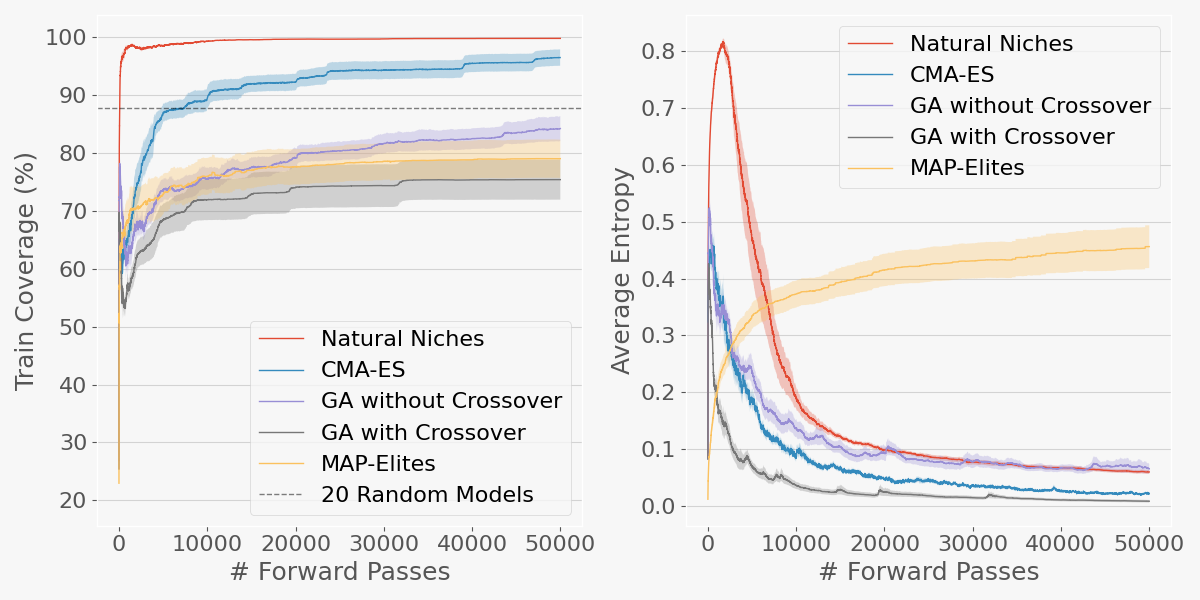

Effective model merging relies on maintaining a diverse population of high-performing models. Rather than relying on hand-crafted diversity metrics, M2N2 employs a resource competition mechanism modeled on implicit fitness sharing from evolutionary computation, which is itself inspired by competition for limited resources in nature. Each data point is treated as a limited resource, and the fitness a model derives from a data point is proportional to that model's share of the population's total performance on it. A model that solves a data point few others can solve therefore earns more from it than a model that solves a data point nearly every other model also solves. This approach naturally incentivizes the discovery and preservation of models that excel in underrepresented niches, counteracting the diversity-reducing effects of crossover operations.
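A small sketch may make the resource competition idea concrete. It assumes each model has a non-negative score per data point (for example 1 for a correct label, 0 otherwise) and that every data point has a fixed capacity that is divided among models in proportion to their scores. The function name, the `capacity` and `eps` parameters, and the exact normalization are assumptions of this sketch, not details confirmed by the text.

```python
def competitive_fitness(scores, capacity=None, eps=1e-9):
    """Shared fitness under resource competition.

    scores[i][j] is the non-negative score of model i on data point j.
    Each data point j hands out capacity[j] units of fitness, split among
    models in proportion to their score on that point.
    """
    n_points = len(scores[0])
    if capacity is None:
        capacity = [1.0] * n_points  # assumption: uniform capacity
    # Total population score per data point (the "crowding" on that point).
    totals = [sum(row[j] for row in scores) for j in range(n_points)]
    return [
        sum(capacity[j] * row[j] / (totals[j] + eps) for j in range(n_points))
        for row in scores
    ]


# Two generalists solve the same three points; one specialist solves a fourth.
scores = [
    [1, 1, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 1],
]
fitness = competitive_fitness(scores)
```

In this toy population the raw scores are 3, 3, and 1, but the shared fitness is roughly 1.5, 1.5, and 1.0: the two generalists split their points, while the specialist keeps its niche to itself and so is much harder to displace from the archive.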

Attraction-Based Parent Selection

Crossover operations are computationally expensive, especially for large models. M2N2 introduces an attraction heuristic to select parent pairs with complementary strengths, maximizing the likelihood of beneficial merges. The attraction score favors pairs where one model performs well on data points where the other underperforms, with additional weighting for high-capacity, low-competition resources. This targeted mate selection improves both efficiency and final model quality.
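One way to express such an attraction score, as a sketch under stated assumptions: for each data point, count only the amount by which the candidate mate beats the first parent, and weight that gain by the point's capacity divided by the population's total score on it. The names `attraction` and `pick_mate` are hypothetical, and choosing the single highest-scoring mate is a simplification; a sampling scheme weighted by attraction would fit the description equally well.

```python
def attraction(scores_a, scores_b, totals, capacity=None, eps=1e-9):
    """Attraction of parent A toward candidate mate B.

    Only data points where B outscores A contribute, and each is weighted
    by capacity / total population score (high capacity, low competition).
    """
    if capacity is None:
        capacity = [1.0] * len(totals)  # assumption: uniform capacity
    return sum(
        c / (z + eps) * max(sb - sa, 0.0)
        for sa, sb, z, c in zip(scores_a, scores_b, totals, capacity)
    )


def pick_mate(index_a, scores, capacity=None):
    """Return the index of the archive member most attractive to model index_a."""
    n_points = len(scores[0])
    totals = [sum(row[j] for row in scores) for j in range(n_points)]
    candidates = [i for i in range(len(scores)) if i != index_a]
    return max(
        candidates,
        key=lambda i: attraction(scores[index_a], scores[i], totals, capacity),
    )


scores = [
    [1, 1, 0, 0],  # model 0
    [1, 1, 0, 0],  # model 1: same strengths as model 0
    [0, 0, 1, 1],  # model 2: complementary strengths
]
mate = pick_mate(0, scores)
```

Here model 1 offers nothing model 0 lacks, so its attraction is zero, while model 2 covers exactly the points model 0 misses and is selected as the mate.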

Experimental Results

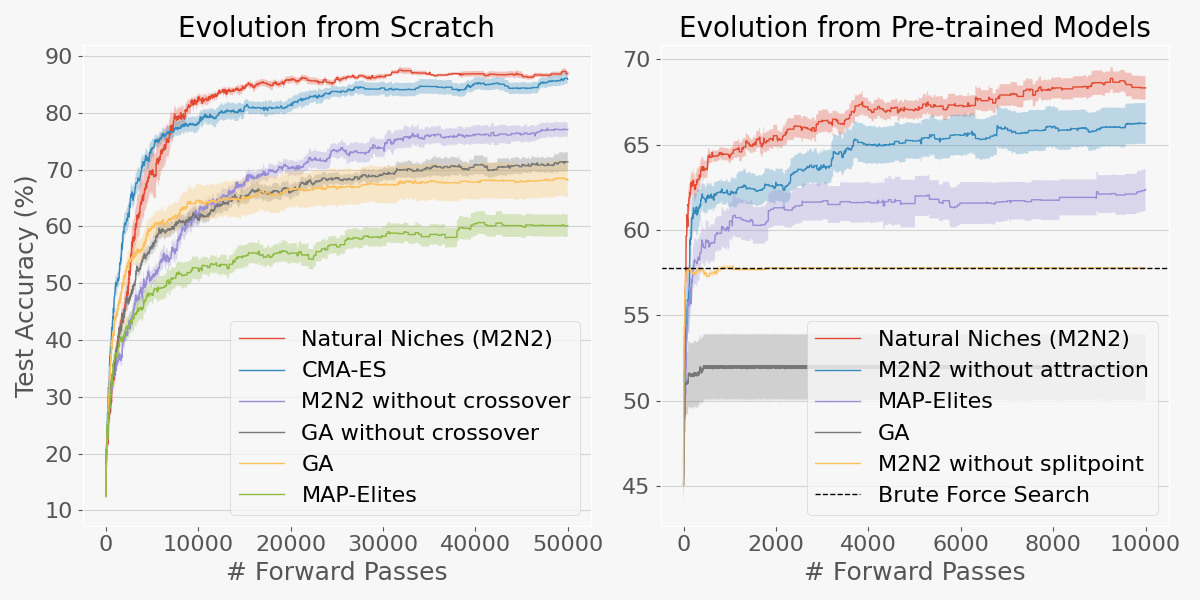

Evolving MNIST Classifiers from Scratch

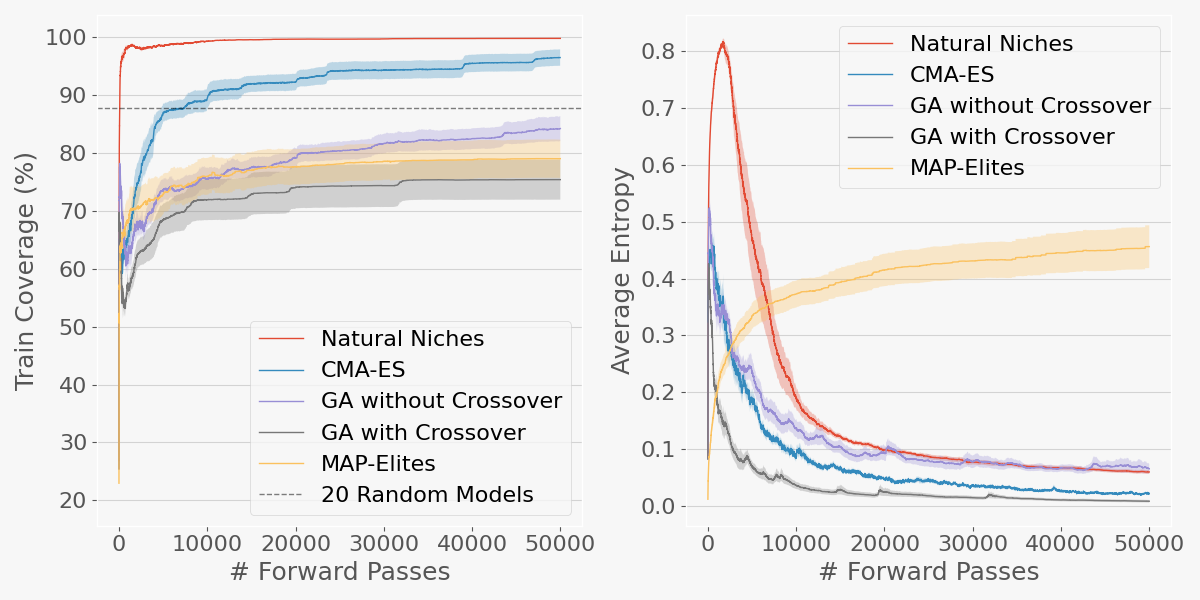

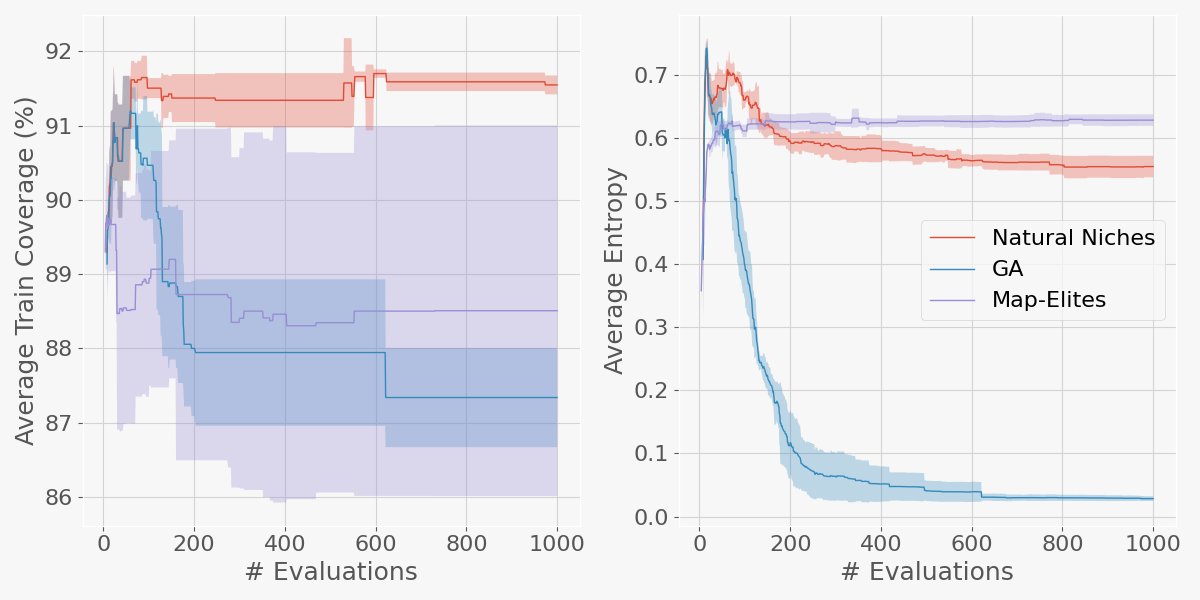

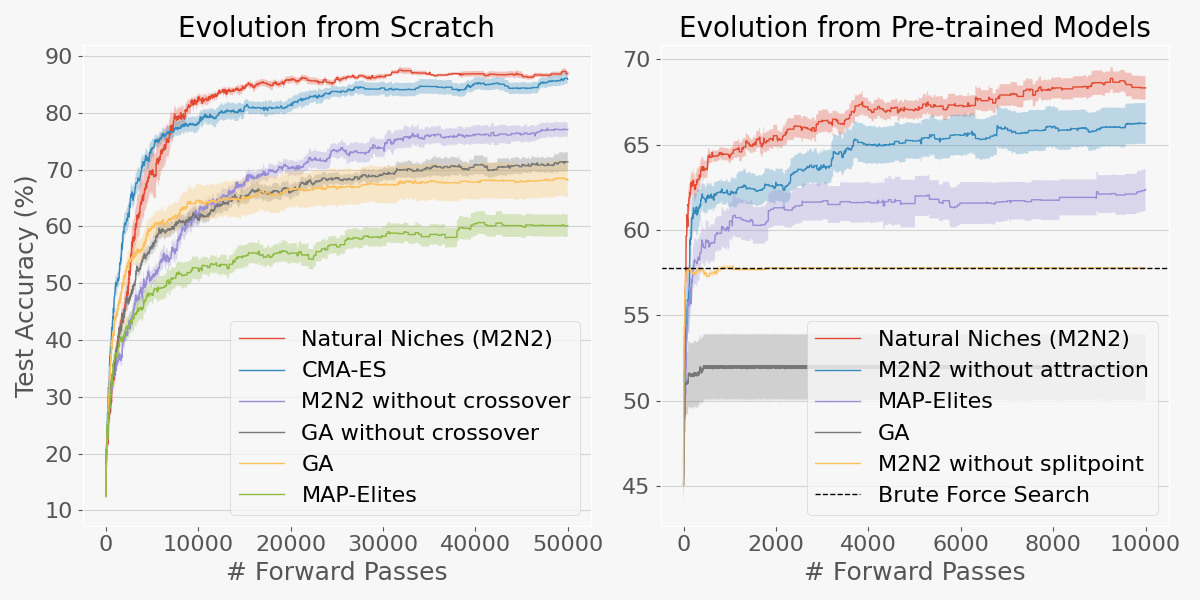

M2N2 is the first model merging approach demonstrated to evolve performant classifiers from random initialization, achieving test accuracy comparable to CMA-ES but with significantly reduced computational cost. Notably, M2N2 maintains high training coverage and population entropy throughout training, in contrast to genetic algorithms (GA) without diversity preservation, which converge prematurely to suboptimal solutions.

Figure 2: Test accuracy vs. number of forward passes for models initialized randomly (left) and from pre-trained seeds (right). M2N2 outperforms other merging methods, especially from scratch.

Figure 3: Left, training coverage (fraction of data points correctly labeled by at least one model). Right, population entropy (diversity) over training. M2N2 maintains both high coverage and diversity.
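The two diagnostics in Figure 3 can be illustrated with a short sketch. Coverage follows the definition in the caption. The entropy function is only one plausible reading of "population entropy" and is an assumption here: it averages, over data points, the binary entropy of the fraction of models that solve each point. Both function names are hypothetical.

```python
import math


def training_coverage(correct):
    """Fraction of data points labeled correctly by at least one model.

    correct[i][j] is truthy if model i labels data point j correctly.
    """
    n_points = len(correct[0])
    solved = sum(1 for j in range(n_points) if any(row[j] for row in correct))
    return solved / n_points


def population_entropy(correct):
    """Mean binary entropy (in bits) of the per-point solve rate.

    Assumed definition: a point solved by all or by none of the models
    contributes 0; a point solved by exactly half contributes 1 bit.
    """
    n_models = len(correct)
    n_points = len(correct[0])
    total = 0.0
    for j in range(n_points):
        p = sum(1 for row in correct if row[j]) / n_models
        if 0.0 < p < 1.0:
            total -= p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p)
    return total / n_points


correct = [
    [1, 1, 0, 0],
    [1, 0, 1, 0],
]
coverage = training_coverage(correct)
entropy = population_entropy(correct)
```

Under this reading, a population that has collapsed to copies of one model has zero entropy regardless of its accuracy, which matches the qualitative behavior the figure attributes to GA without diversity preservation.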

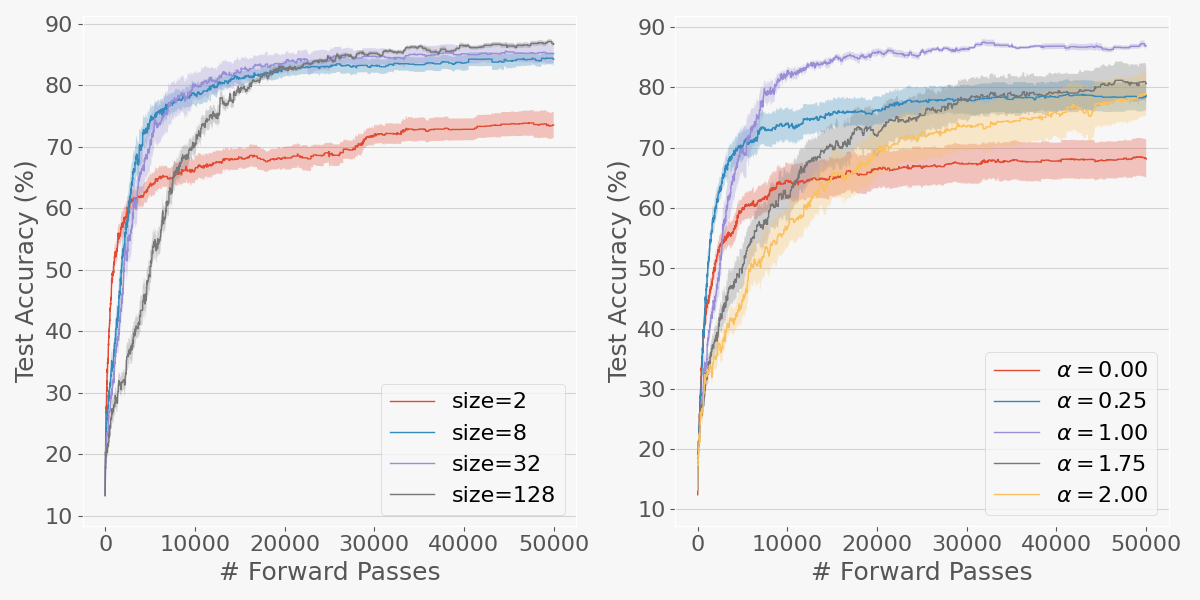

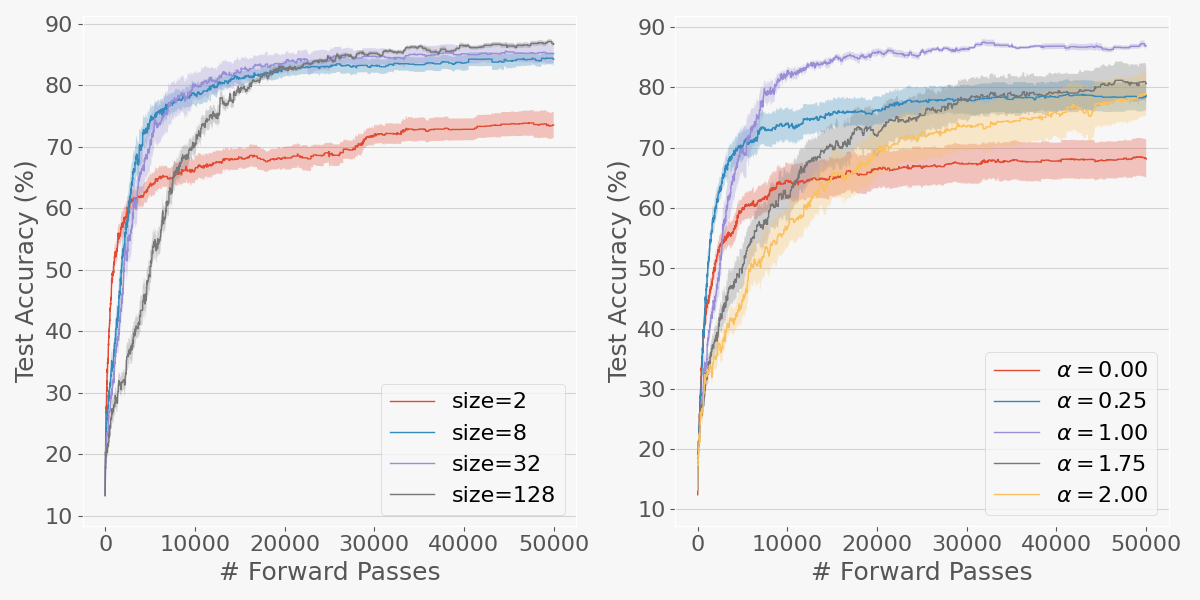

Figure 4: Left, test accuracy of M2N2 across archive sizes. Right, effect of competition intensity (α) on test accuracy. Larger archives and higher competition preserve diversity longer, improving final performance.

Merging LLMs with Complementary Skills

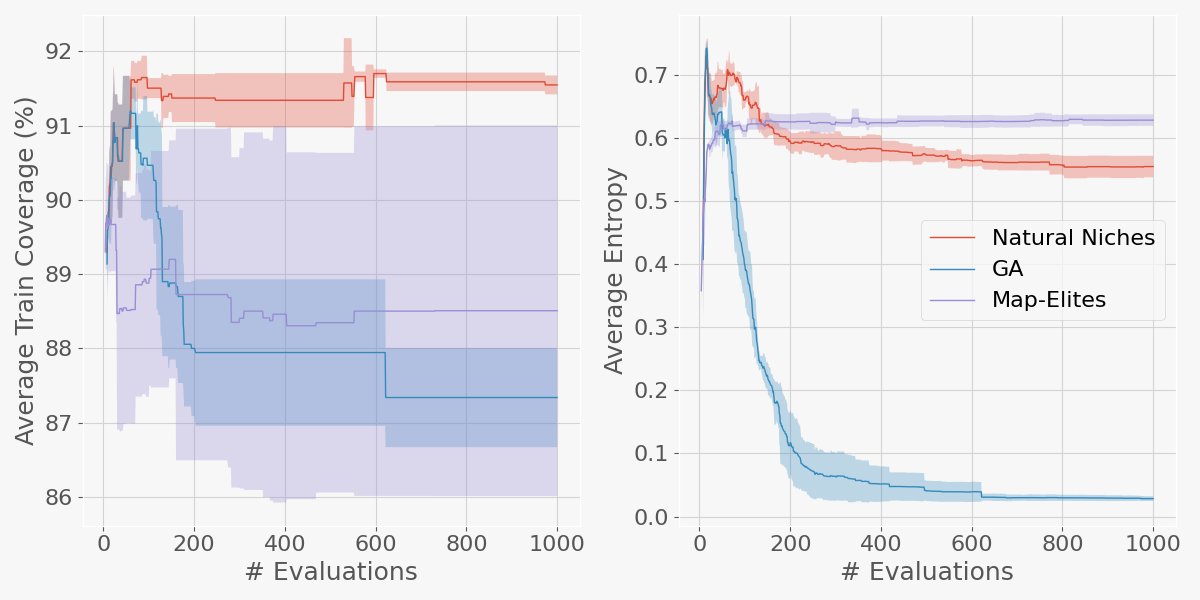

M2N2 is applied to merge WizardMath-7B (a math specialist) and AgentEvol-7B (an agentic-environment specialist), both Llama-2-7B derivatives. The merged model achieves balanced performance on both the GSM8k (math) and WebShop (agentic) benchmarks, outperforming baselines including CMA-ES, MAP-Elites, and standard GA. Ablation studies confirm the critical role of both the split-point mechanism and the attraction heuristic.

Figure 5: Left, training coverage for LLM merging (Math and WebShop). Right, population entropy. M2N2 maintains high coverage and diversity, while GA collapses to a single solution.

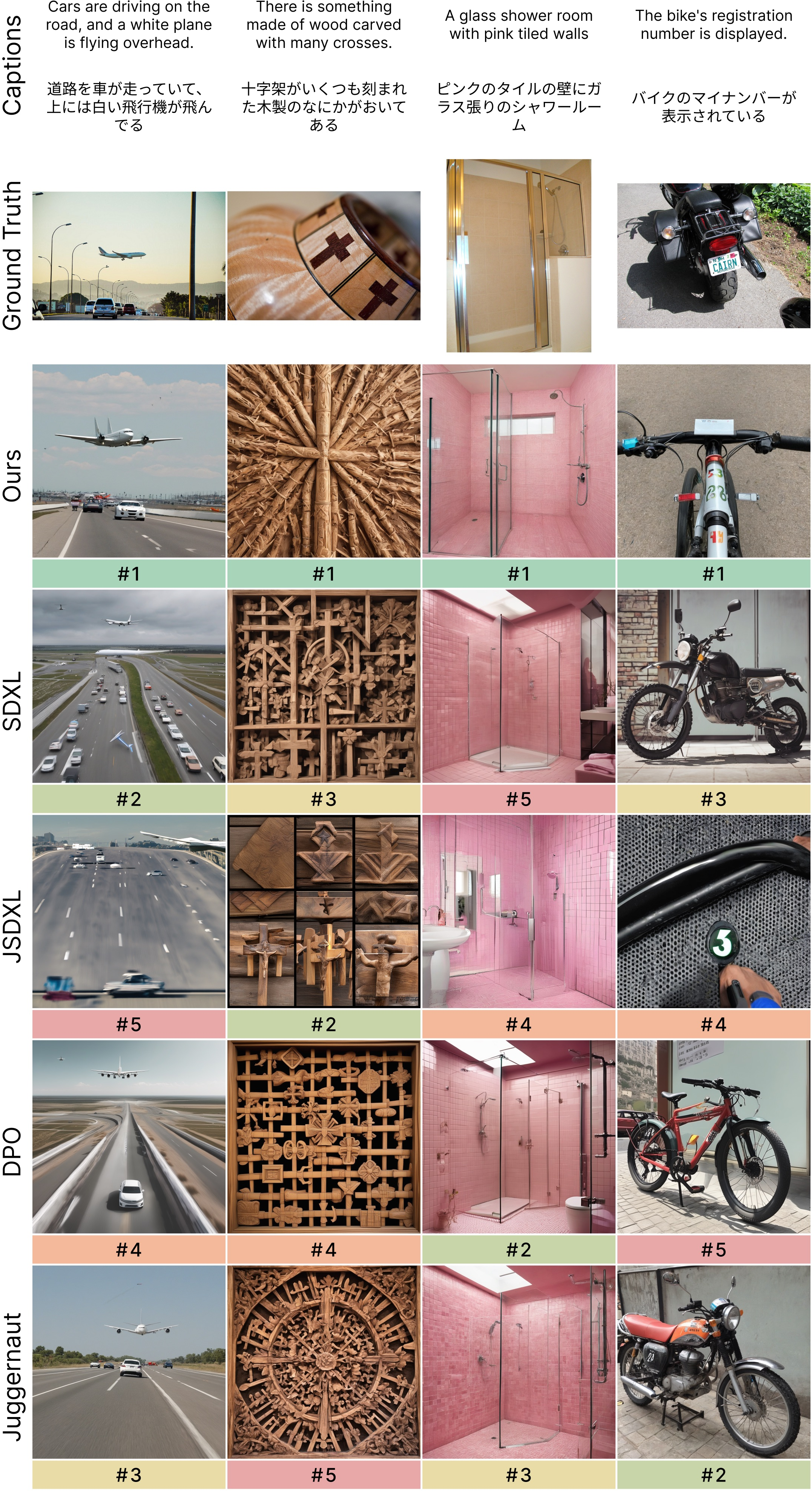

Merging Diffusion-Based Image Generators

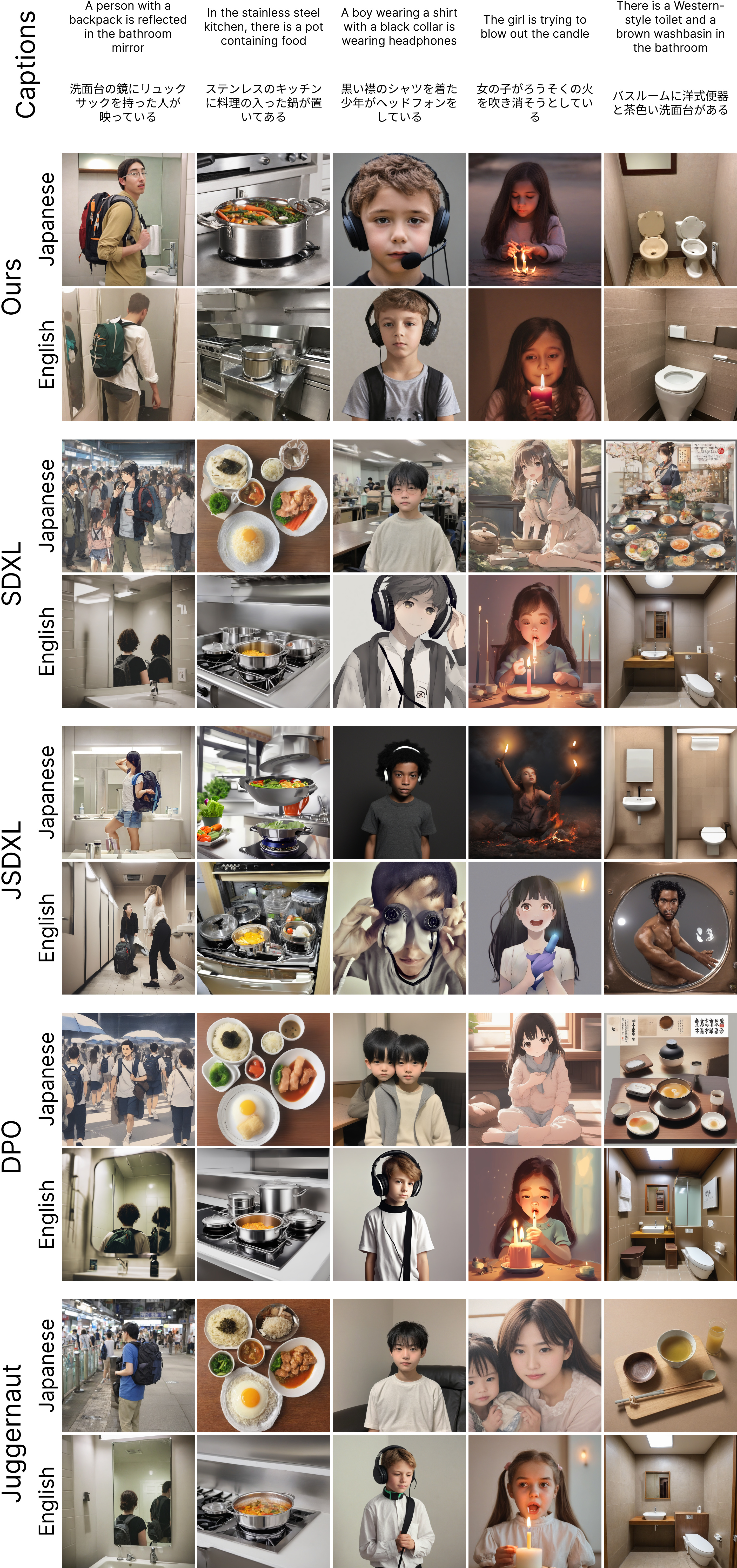

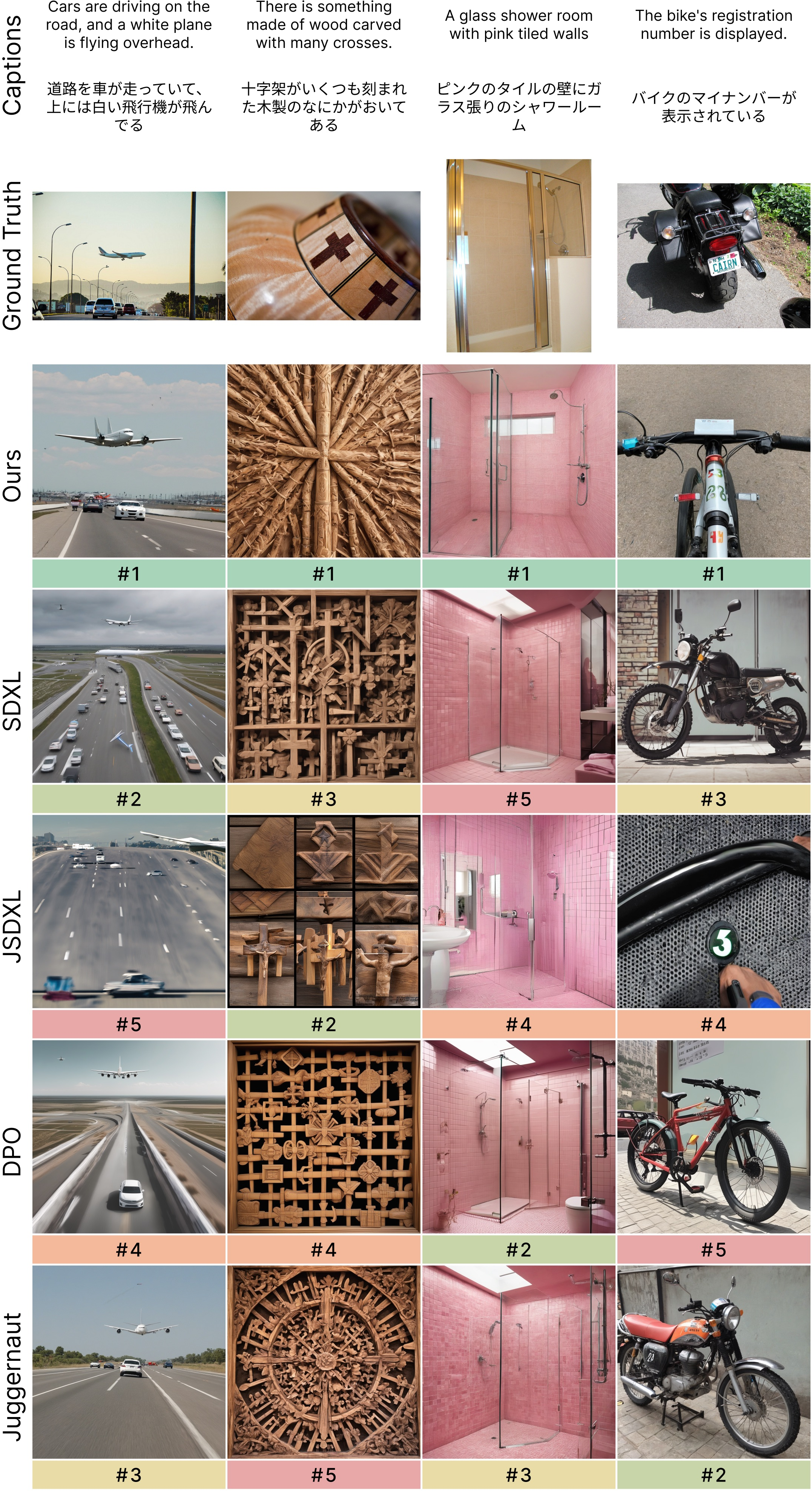

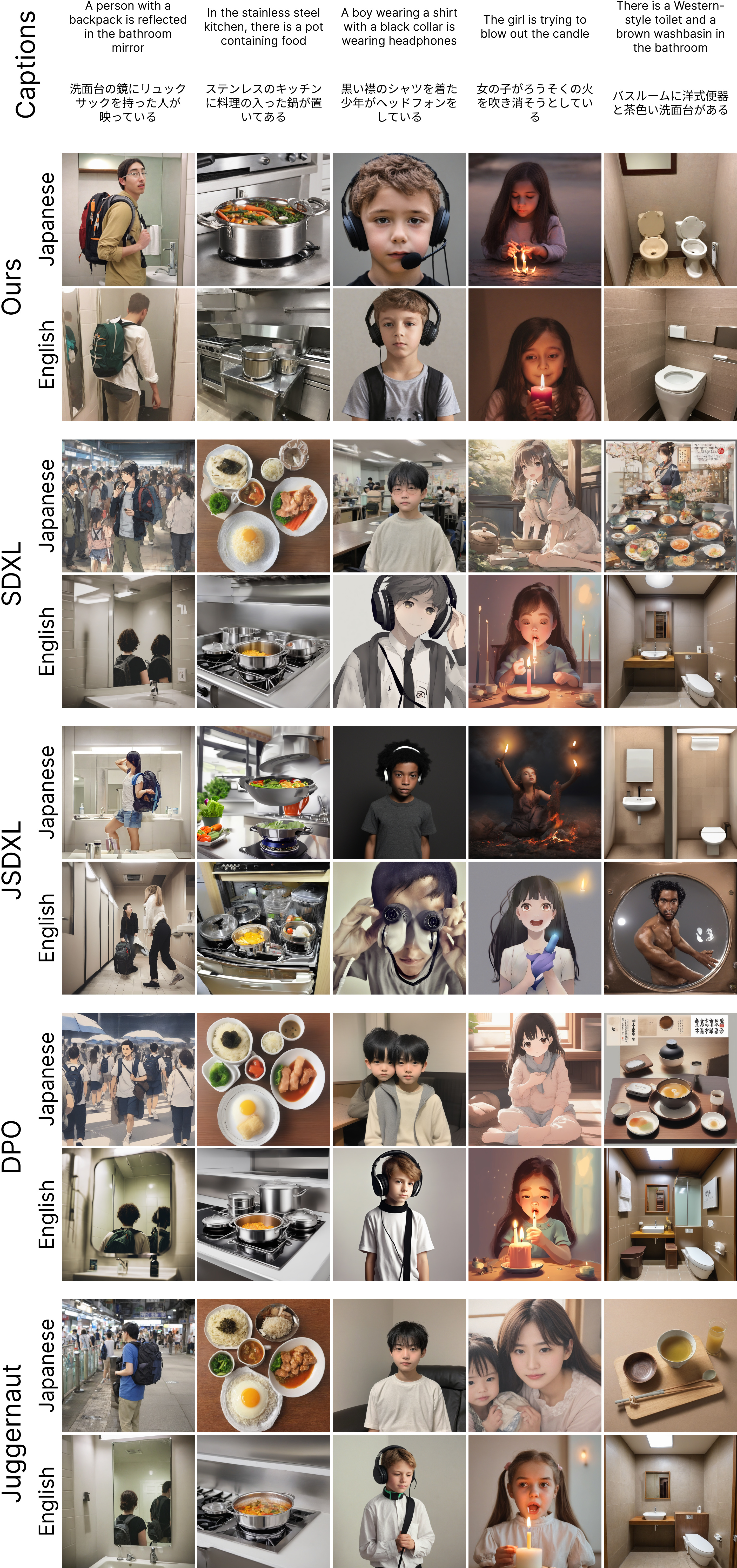

M2N2 is further evaluated on merging text-to-image diffusion models, including JSDXL (Japanese prompts) and several English-trained SDXL variants. The merged model not only aggregates the photorealism and semantic strengths of the seed models but also demonstrates emergent bilingual capabilities, generating high-quality images from both Japanese and English prompts even though the merge was optimized only on Japanese prompts.

Figure 6: Comparison of generated images across seed models and the merged model. The merged model aggregates the best qualities and achieves superior photorealism and semantic accuracy.

Figure 7: Images generated by each model for Japanese and English prompts. The merged model exhibits strong cross-lingual understanding.

Quantitatively, the merged model achieves the best Normalized CLIP Similarity (NCS, higher is better) and Fréchet Inception Distance (FID, lower is better) scores among all tested models and baselines. Cross-lingual consistency, measured by CLIP cosine similarity between images generated from Japanese and English prompts, is also highest for the merged model.
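The cross-lingual consistency measure reduces to a cosine similarity between image embeddings. The sketch below assumes the CLIP embeddings have already been computed and are available as plain lists of floats, one pair per prompt (image from the Japanese prompt, image from the English translation). It does not call any CLIP library, and the function names are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


def cross_lingual_consistency(pairs):
    """Mean cosine similarity over (japanese_image_emb, english_image_emb) pairs."""
    return sum(cosine_similarity(ja, en) for ja, en in pairs) / len(pairs)


# Toy embeddings: the first pair is identical, the second is orthogonal.
pairs = [
    ([1.0, 0.0], [1.0, 0.0]),
    ([1.0, 0.0], [0.0, 1.0]),
]
consistency = cross_lingual_consistency(pairs)
```

A model that renders the same scene for a prompt in either language would score close to 1 on each pair; averaging over prompts gives a single consistency figure per model.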

Analysis and Implications

Theoretical Implications

M2N2 demonstrates that model merging, when combined with principled diversity preservation and targeted parent selection, can serve as a viable alternative to gradient-based optimization, even from random initialization. The resource competition mechanism provides a scalable, domain-agnostic approach to diversity maintenance, circumventing the need for hand-crafted metrics that become intractable as model and task complexity increases.

The attraction heuristic for mate selection addresses a longstanding gap in evolutionary algorithms, where crossover partner choice is often naive. As crossover operations become more expensive in modern deep learning, intelligent mate selection is increasingly critical.

Practical Implications

M2N2's gradient-free nature enables merging of models trained on different objectives or with incompatible data, without requiring access to original training sets. This is particularly valuable for privacy-sensitive or proprietary domains. The method is robust to catastrophic forgetting, a common issue in fine-tuning, and preserves capabilities not explicitly optimized during merging.

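The approach scales to large models (LLMs, diffusion models) and is computationally efficient relative to direct evolutionary optimization of parameters (e.g., CMA-ES). Standard CMA-ES maintains a full covariance matrix over the parameters, so its memory grows quadratically with parameter count and its covariance eigendecomposition is cubic, which makes it infeasible for modern architectures.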
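A back-of-the-envelope calculation shows why this scaling matters. The sketch assumes a full-covariance CMA-ES that stores a dense n × n matrix in 32-bit floats; the function name and the 10,000-parameter example network are illustrative choices, not figures from the paper.

```python
def covariance_bytes(n_params, bytes_per_entry=4):
    """Memory needed for a dense n x n covariance matrix."""
    return n_params * n_params * bytes_per_entry


# A small classifier with 10,000 parameters (illustrative size).
small = covariance_bytes(10_000)

# A 7-billion-parameter LLM, the scale of the models merged above.
large = covariance_bytes(7_000_000_000)

small_gb = small / 1e9      # gigabytes
large_eb = large / 1e18     # exabytes
```

The small network needs about 0.4 GB for the covariance matrix alone, which is workable. The 7B-parameter case needs about 196 exabytes before any eigendecomposition is attempted, so searching over a handful of merge coefficients and split-points is the only practical way to apply evolutionary search at that scale.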

Limitations and Future Directions

The success of model merging is contingent on the compatibility of the seed models. When models have diverged significantly during fine-tuning, merging may fail. The development of standardized compatibility metrics is an open research question, with potential applications in regularizing fine-tuning to preserve mergeability. There is also scope for extending the attraction heuristic to incorporate compatibility, enabling the co-evolution of distinct model "species."

Conclusion

M2N2 combines dynamic merging boundaries, resource-based diversity preservation, and attraction-driven mate selection into a single gradient-free procedure for model fusion. It is evaluated on MNIST classifiers, LLMs, and diffusion-based image generators, where it matches or outperforms the merging and evolutionary baselines tested while maintaining population diversity. The findings highlight the importance of implicit fitness sharing and intelligent mate selection in evolutionary algorithms for deep learning, and point to future research on model co-evolution, compatibility metrics, and large-scale model fusion.