- The paper introduces MAMEX, a novel framework that dynamically integrates text, image, and audio modalities to improve cold-start recommendation.

- It combines modality-specific expert layers with gated, adaptive modality fusion and reports significant gains in Recall@10 and NDCG@10.

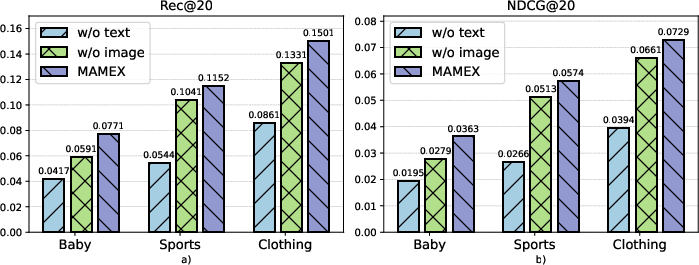

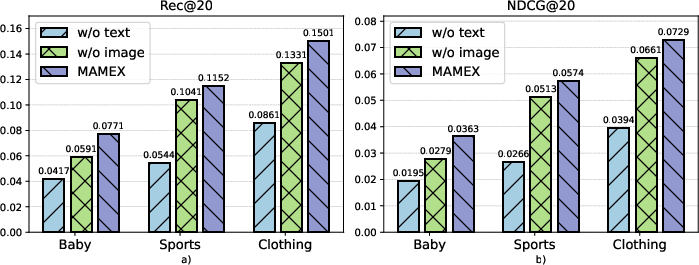

- Extensive ablation studies indicate that combining multi-modal extraction with adaptive fusion is critical for mitigating modality collapse and improving performance.

Multi-modal Adaptive Mixture of Experts for Cold-start Recommendation

Introduction

The cold-start problem is a persistent challenge in recommendation systems, particularly for new items with little interaction history. This paper presents MAMEX (Multi-modal Adaptive Mixture of Experts), a framework that leverages multi-modal data to improve recommendation performance in cold-start scenarios. It addresses limitations of existing methods with a dynamic, adaptive Mixture of Experts (MoE) architecture that integrates modalities such as text, images, and audio.

Figure 1: The overview architecture of our proposed framework MAMEX.

Methodology

MAMEX is designed to exploit multi-modal data and consists of two primary modules: the Modality Extraction Module and the Modality Fusion Module. The former processes and aligns features from each modality, while the latter combines these features dynamically through an adaptive fusion mechanism.

Modality Extraction Module: Specialized extractors first transform the raw data of each modality into high-dimensional feature representations. Modality-specific MoE layers then refine these features; within each layer, a gating mechanism dynamically selects and combines experts so that the features of the different modalities become aligned.
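To make the gating step concrete, here is a minimal Python sketch of one modality-specific MoE layer, assuming linear experts and a dense softmax gate. The class name `ModalityMoE`, the dimensions, and the random initialization are illustrative choices, not details taken from the paper. Each expert projects the extractor output into a shared space, and the gate computes input-dependent weights that mix the expert outputs.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def softmax(logits: Vector) -> Vector:
    """Numerically stable softmax: shift by the max logit before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class ModalityMoE:
    """Hypothetical modality-specific MoE layer: linear experts mixed by a softmax gate."""

    def __init__(self, in_dim: int, out_dim: int, num_experts: int, seed: int = 0):
        rng = random.Random(seed)

        def init(rows: int, cols: int) -> Matrix:
            # Small Gaussian weights; a stand-in for learned parameters.
            return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

        # Each expert maps the extractor output (in_dim) into a shared space (out_dim).
        self.experts = [init(out_dim, in_dim) for _ in range(num_experts)]
        # The gate produces one logit per expert from the same input feature.
        self.gate = init(num_experts, in_dim)

    def gate_weights(self, x: Vector) -> Vector:
        """Input-dependent expert weights g(x), non-negative and summing to 1."""
        return softmax(matvec(self.gate, x))

    def __call__(self, x: Vector) -> Vector:
        weights = self.gate_weights(x)
        outputs = [matvec(expert, x) for expert in self.experts]
        # Dense mixture: y = sum_k g_k(x) * E_k(x), one output dimension at a time.
        return [sum(w * v for w, v in zip(weights, column)) for column in zip(*outputs)]


# Usage: refine a hypothetical 8-dim text feature into a 4-dim aligned representation.
text_moe = ModalityMoE(in_dim=8, out_dim=4, num_experts=3, seed=1)
aligned = text_moe([0.5] * 8)
print(len(aligned))  # 4
```

A dense softmax mixture keeps every expert active for every input; a sparse top-k router would evaluate only the selected experts. The summary does not say which variant MAMEX uses.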

Mixture of Modality Fusion: This stage builds a unified item representation as a weighted sum of the modality-specific embeddings. A dynamic gating mechanism assigns the weight of each modality, which lets the model emphasize the most informative modalities for each item.
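The weighted summation can be sketched as follows, assuming every modality already has an embedding of the same dimension and that its gate logit is the dot product of a learned per-modality gate vector with that embedding. The function `fuse_modalities` and this gate parameterization are hypothetical; the paper's gate may condition on different inputs.

```python
import math
from typing import Dict, List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_modalities(
    embeddings: Dict[str, List[float]],
    gate_vectors: Dict[str, List[float]],
) -> Tuple[List[float], Dict[str, float]]:
    """Hypothetical gated fusion: score each modality, softmax, then weighted sum.

    embeddings:   modality name -> item embedding (all the same length)
    gate_vectors: modality name -> learned gate vector (assumed parameterization)
    """
    names = sorted(embeddings)  # fixed order so weights line up with modalities
    # Gate logit for modality m: dot(gate_vectors[m], embeddings[m]), so it varies per item.
    logits = [sum(g * e for g, e in zip(gate_vectors[n], embeddings[n])) for n in names]
    weights = softmax(logits)
    dim = len(embeddings[names[0]])
    fused = [0.0] * dim
    for w, n in zip(weights, names):
        for i, value in enumerate(embeddings[n]):
            fused[i] += w * value
    return fused, dict(zip(names, weights))


# Usage: zero gate vectors give equal weights, so the fused vector is the plain mean.
emb = {"text": [1.0, 0.0], "image": [0.0, 1.0], "audio": [1.0, 1.0]}
fused, weights = fuse_modalities(emb, {name: [0.0, 0.0] for name in emb})
print(fused)  # approximately [0.667, 0.667]
```

Because the weights come from a softmax, they stay positive and sum to one, so the fused vector is always a convex combination of the modality embeddings.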

Results and Discussion

Experiments on Amazon datasets, including Baby, Clothing, and Sport, show that MAMEX outperforms state-of-the-art baselines, with substantial gains in Recall@10 and NDCG@10 in cold-start scenarios. A balance regularization term further reduces the risk of modality collapse, in which the model comes to rely on a single modality and ignores the others, and improves the model's robustness.
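The summary does not specify the form of the balance regularization term. One standard choice, used here purely as an assumption, is the load-balancing penalty from sparsely-gated MoE models: sum each modality's gate weight over a batch and penalize the squared coefficient of variation of those totals. The penalty is zero when every modality receives equal total weight and grows as the gate collapses onto one modality. The name `balance_loss`, its default coefficient, and applying it to the fusion-gate weights are all hypothetical.

```python
import statistics
from typing import List


def balance_loss(batch_gate_weights: List[List[float]], coef: float = 0.01) -> float:
    """Hypothetical balance regularizer: coef * CV^2 of per-modality importance.

    batch_gate_weights[b][m] is the fusion-gate weight of modality m for item b.
    """
    num_modalities = len(batch_gate_weights[0])
    # Importance of a modality = total gate weight it receives across the batch.
    importance = [sum(row[m] for row in batch_gate_weights) for m in range(num_modalities)]
    mean = statistics.fmean(importance)
    variance = statistics.pvariance(importance)
    # Squared coefficient of variation: 0 when balanced, large when one modality dominates.
    return coef * variance / mean ** 2


balanced = [[1 / 3, 1 / 3, 1 / 3]] * 4   # every item spreads weight evenly
collapsed = [[1.0, 0.0, 0.0]] * 4        # every item relies on a single modality
print(balance_loss(balanced, coef=1.0))   # 0.0
print(balance_loss(collapsed, coef=1.0))  # approximately 2.0
```

The coefficient of variation is scale-free, so the penalty does not grow with batch size; in training it would be added to the recommendation loss with a small weight.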

Figure 2: The impact of different modalities on three datasets.

Ablation Studies

The paper conducts extensive ablation studies to assess the contribution of each component of MAMEX. Removing key elements, such as the MoE layers, degrades performance, underscoring their importance to the architecture. In addition, a comparison of different MoE adapter designs shows that the dual-level design of MAMEX outperforms the alternatives at capturing modality-specific interactions.

Figure 3: Three MoE adapter designs evaluated in our study.

Conclusion

MAMEX offers a promising solution to the cold-start problem by dynamically integrating multi-modal data through an adaptive MoE framework. Its ability to weight modalities adaptively, together with its strong performance across multiple datasets, highlights its potential for improving recommendation systems. Future research may extend the approach to handle missing modalities and further optimize the adaptive fusion process for better scalability and generalization across diverse recommendation scenarios.