- The paper introduces DGMRec, a framework that disentangles and generates modality features to address missing data in multi-modal recommendations.

- It employs distinct modules for separating general and specific modality attributes and generating features for absent modalities.

- Empirical results on datasets like Amazon Baby and TikTok show that DGMRec outperforms state-of-the-art systems in challenging missing modality scenarios.

Disentangling and Generating Modalities for Recommendation in Missing Modality Scenarios

Introduction

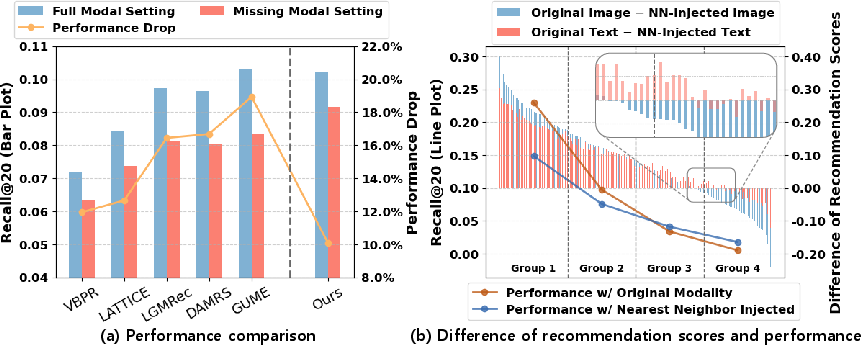

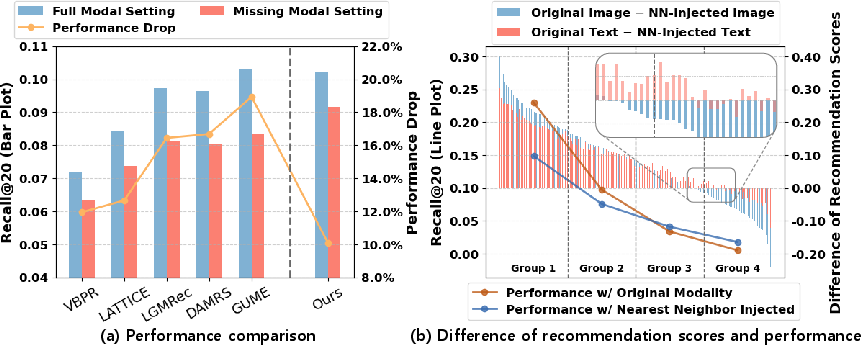

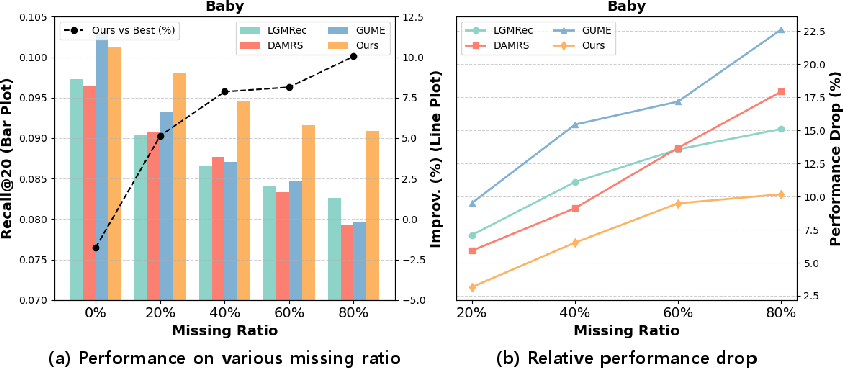

The paper introduces the Disentangling and Generating Modality Recommender (DGMRec), a multi-modal recommender system (MRS) designed for settings where some item modalities are missing. It targets two problems: the performance degradation caused by missing modalities, and the fact that each modality carries its own distinct characteristics. MRSs that draw on several information sources, such as text and images, tend to lose accuracy when some of those modalities are absent (Figure 1). DGMRec disentangles each modality's features into general and specific components and generates the features of missing modalities from the available ones, which makes it more robust to the incomplete data common in real-world recommendation.

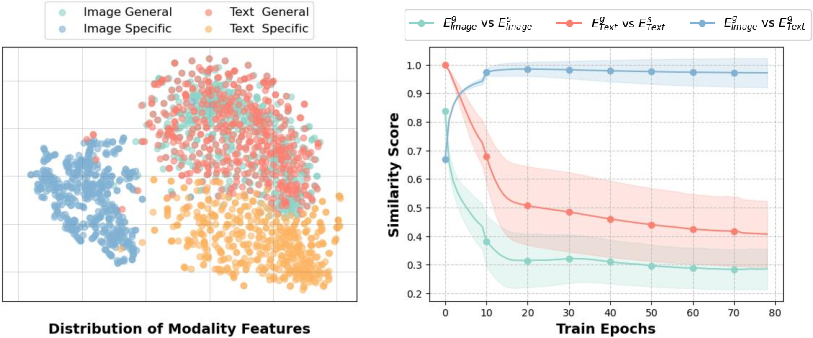

Figure 1: (a) Performance drop of recent MRSs when missing modality exists. (b) Difference in recommendation scores with missing modalities.

DGMRec Overview

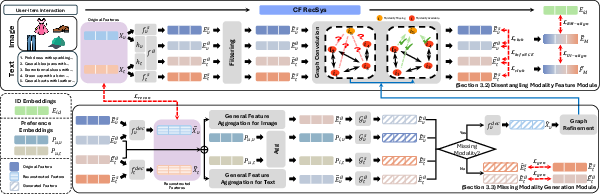

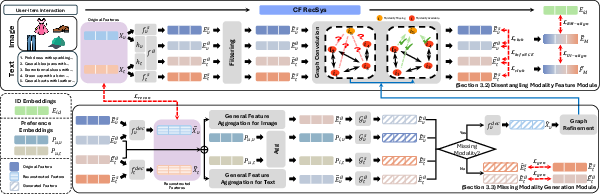

The DGMRec framework consists of two main modules: the Disentangling Modality Feature module and the Missing Modality Generation module (Figure 2). The first module separates each modality's features into general components, which can be aligned across modalities, and specific components, which are unique to that modality. This disentanglement matters because different modalities convey different kinds of information: an image may capture visual aesthetics, while the text may communicate functional details. The module is designed so that these distinctive attributes are preserved and used separately for recommendation.

Figure 2: Overview of DGMRec framework, showing its modality disentangling and generation capabilities.
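As a rough illustration of the disentangling idea, the sketch below passes one modality's raw feature vector through two separate projection heads, one producing a general feature and one producing a specific feature. The class name `ModalityDisentangler`, the use of plain linear projections, and the dimensions are assumptions made for illustration only; the paper's actual encoders and training objectives are not reproduced here.

```python
import random


def make_linear(in_dim, out_dim, rng):
    """Create a random (out_dim x in_dim) weight matrix as nested lists."""
    return [[rng.gauss(0.0, 0.1) for _ in range(in_dim)] for _ in range(out_dim)]


def apply_linear(weights, x):
    """Multiply the weight matrix by the input vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


class ModalityDisentangler:
    """Hypothetical two-headed encoder for a single modality.

    One head yields the general feature (intended to be aligned across
    modalities), the other yields the specific feature (intended to stay
    unique to this modality). Untrained; weights are random.
    """

    def __init__(self, in_dim, hidden_dim, seed=0):
        rng = random.Random(seed)
        self.w_general = make_linear(in_dim, hidden_dim, rng)
        self.w_specific = make_linear(in_dim, hidden_dim, rng)

    def __call__(self, x):
        general = apply_linear(self.w_general, x)
        specific = apply_linear(self.w_specific, x)
        return general, specific


# Usage: a 4-dimensional image feature mapped to two 3-dimensional parts.
image_encoder = ModalityDisentangler(in_dim=4, hidden_dim=3, seed=0)
general_feat, specific_feat = image_encoder([0.5, -1.0, 2.0, 0.0])
```

In a trained system, the two heads would only become meaningfully different through the losses applied to them; here they differ merely because their random weights differ.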

DGMRec's Missing Modality Generation module generates features for missing modalities by combining aligned features from the other available modalities with user preference data. This capability allows DGMRec to maintain robust recommendation performance even when multiple modalities are absent.
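The generation step can be pictured with a deliberately simplified sketch. It assumes that the general feature of a missing modality is approximated by averaging the general features of the modalities that are present, and that the specific feature is approximated by averaging preference vectors of users who interacted with the item. Both averaging rules and the function names `mean_vectors` and `generate_missing_features` are assumptions for illustration; the paper's generators are learned and are not shown here.

```python
def mean_vectors(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def generate_missing_features(available_general, user_preferences):
    """Approximate the features of a missing modality (illustrative only).

    available_general: dict mapping modality name -> general feature vector
                       for the modalities the item actually has.
    user_preferences:  list of preference vectors (for the missing modality)
                       of users who interacted with the item.
    """
    # General part: borrow from the aligned general features of other modalities.
    general = mean_vectors(list(available_general.values()))
    # Specific part: infer from what interacting users prefer in this modality.
    specific = mean_vectors(user_preferences)
    return general, specific


# Usage: the item has text but no image; two users interacted with it.
general, specific = generate_missing_features(
    available_general={"text": [1.0, 3.0]},
    user_preferences=[[0.0, 2.0], [2.0, 4.0]],
)
```

The split mirrors the description above: aligned features from other modalities can stand in for the general part, while modality-specific information has to come from somewhere else, here user preferences.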

Experimental Results

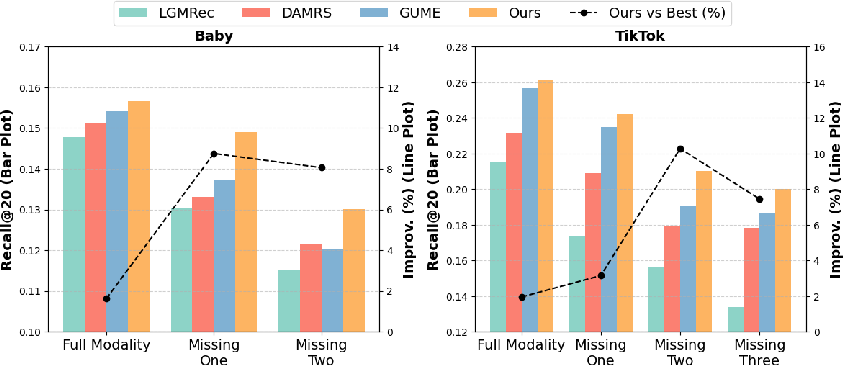

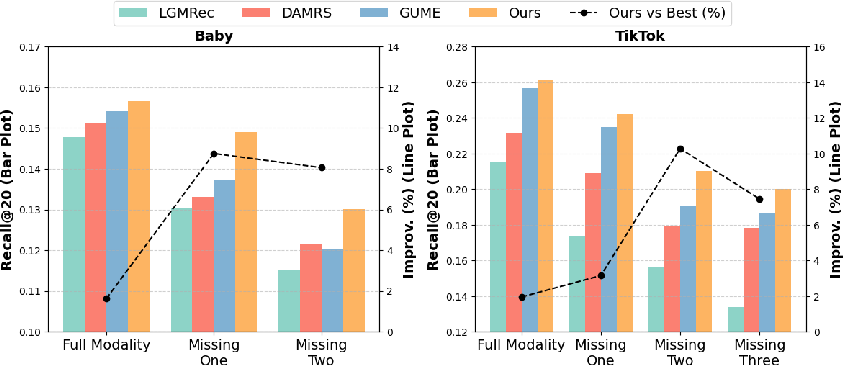

The paper reports extensive experimental evaluations of DGMRec across several datasets, including the Amazon Baby and TikTok datasets. DGMRec consistently outperforms existing multi-modal recommendation systems across a range of realistic settings, including scenarios with new items and varying levels of missing data (Figure 3).

Figure 3: Performance on various missing levels on the Amazon Baby and TikTok datasets.

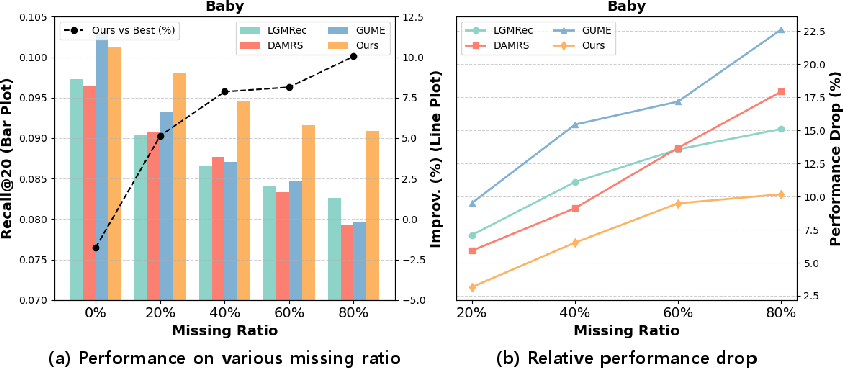

Under extreme data sparsity, a common case in real-world applications, DGMRec's ability to generate missing modality features yields performance improvements over both traditional collaborative filtering methods and other state-of-the-art (SOTA) multi-modal systems (Figure 4).

Figure 4: (a) Performance on various missing ratios, and (b) relative performance drop on the Amazon Baby dataset.
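An evaluation over missing ratios can be set up along the following lines. The sketch assumes that a missing modality is simulated by dropping that modality's feature for a randomly chosen fraction of items, and that the relative performance drop is defined as the loss in score divided by the full-data score. Both the masking protocol and this definition are assumptions, and the helper names `mask_modality` and `relative_drop` are hypothetical; the paper's exact protocol may differ.

```python
import random


def mask_modality(item_features, missing_ratio, seed=0):
    """Return a copy of item_features with a fraction of items set to None.

    item_features: dict mapping item id -> feature vector for one modality.
    missing_ratio: fraction of items (0.0 to 1.0) whose feature is removed.
    """
    rng = random.Random(seed)
    item_ids = sorted(item_features)
    n_missing = round(missing_ratio * len(item_ids))
    missing_ids = set(rng.sample(item_ids, n_missing))
    return {
        item_id: (None if item_id in missing_ids else feature)
        for item_id, feature in item_features.items()
    }


def relative_drop(full_score, missing_score):
    """Fraction of the full-data score lost when modalities are missing."""
    return (full_score - missing_score) / full_score


# Usage: remove the image feature for 30% of ten toy items.
toy_image_features = {item_id: [float(item_id)] for item_id in range(10)}
masked = mask_modality(toy_image_features, missing_ratio=0.3)
```

Fixing the seed keeps the same items masked across compared models, so differences in score reflect the models rather than the random choice of missing items.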

Effectiveness of Disentanglement

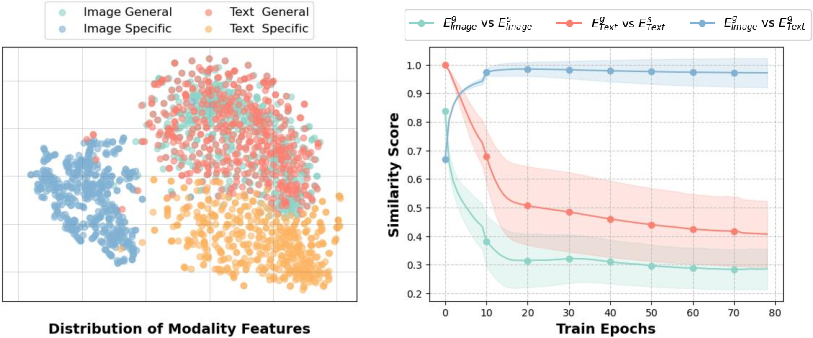

The paper provides evidence that disentangling modality features is effective (Figure 5). DGMRec uses information-based losses such as CLUB, an upper bound on mutual information, and InfoNCE, a contrastive lower bound on mutual information. These losses encourage general modality features to align across modalities while specific features remain distinct but informative. This helps prevent the loss of valuable modality-specific information and supports accurate generation of missing features.

Figure 5: (a) Visualization of disentangled modality features and (b) similarity score between features during training.
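To make the alignment objective concrete, the following is a minimal sketch of the standard InfoNCE loss on paired feature vectors, such as the general features of the same item from two modalities. It shows the generic form of the loss only; how DGMRec chooses pairs, the temperature value, and how the loss is combined with CLUB are not specified here and are assumptions of this example.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale v to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(dot(v, v)) or 1.0
    return [x / norm for x in v]


def info_nce(anchors, positives, temperature=0.2):
    """Mean InfoNCE loss where positives[i] is the positive for anchors[i].

    All other rows of `positives` act as negatives for anchors[i].
    """
    anchors = [normalize(a) for a in anchors]
    positives = [normalize(p) for p in positives]
    losses = []
    for i, anchor in enumerate(anchors):
        logits = [dot(anchor, p) / temperature for p in positives]
        # Numerically stable log-sum-exp over all candidates.
        max_logit = max(logits)
        log_denominator = max_logit + math.log(
            sum(math.exp(logit - max_logit) for logit in logits)
        )
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Usage: general features of two items from an image and a text encoder.
image_general = [[1.0, 0.0], [0.0, 1.0]]
text_aligned = [[1.0, 0.0], [0.0, 1.0]]      # same item order: well aligned
text_misaligned = [[0.0, 1.0], [1.0, 0.0]]   # swapped: poorly aligned
loss_aligned = info_nce(image_general, text_aligned)
loss_misaligned = info_nce(image_general, text_misaligned)
```

The loss is lower when each item's features from the two modalities are closer to each other than to the features of other items, which is the sense in which general features are pushed to align.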

Conclusion

DGMRec makes a significant contribution to multi-modal recommendation research by addressing critical challenges related to missing modalities and unaligned modality characteristics. Its framework offers a strong practical advantage, particularly in environments where data completeness is neither guaranteed nor feasible. The paper demonstrates that a disentangling and generating approach is not only effective in maintaining recommendation performance but also adaptable enough to address a wide range of practical scenarios.

Combining high performance in classic recommendation metrics with a strong capability to function in missing modality regimes highlights DGMRec's potential utility in real-world applications. Future developments may see iterations of DGMRec incorporating broader types of modality data and enhancing cross-modality retrieval capabilities, ensuring it remains relevant in constantly evolving data environments.