DMSC: Dynamic Multi-Scale Coordination Framework for Time Series Forecasting (2508.02753v2)

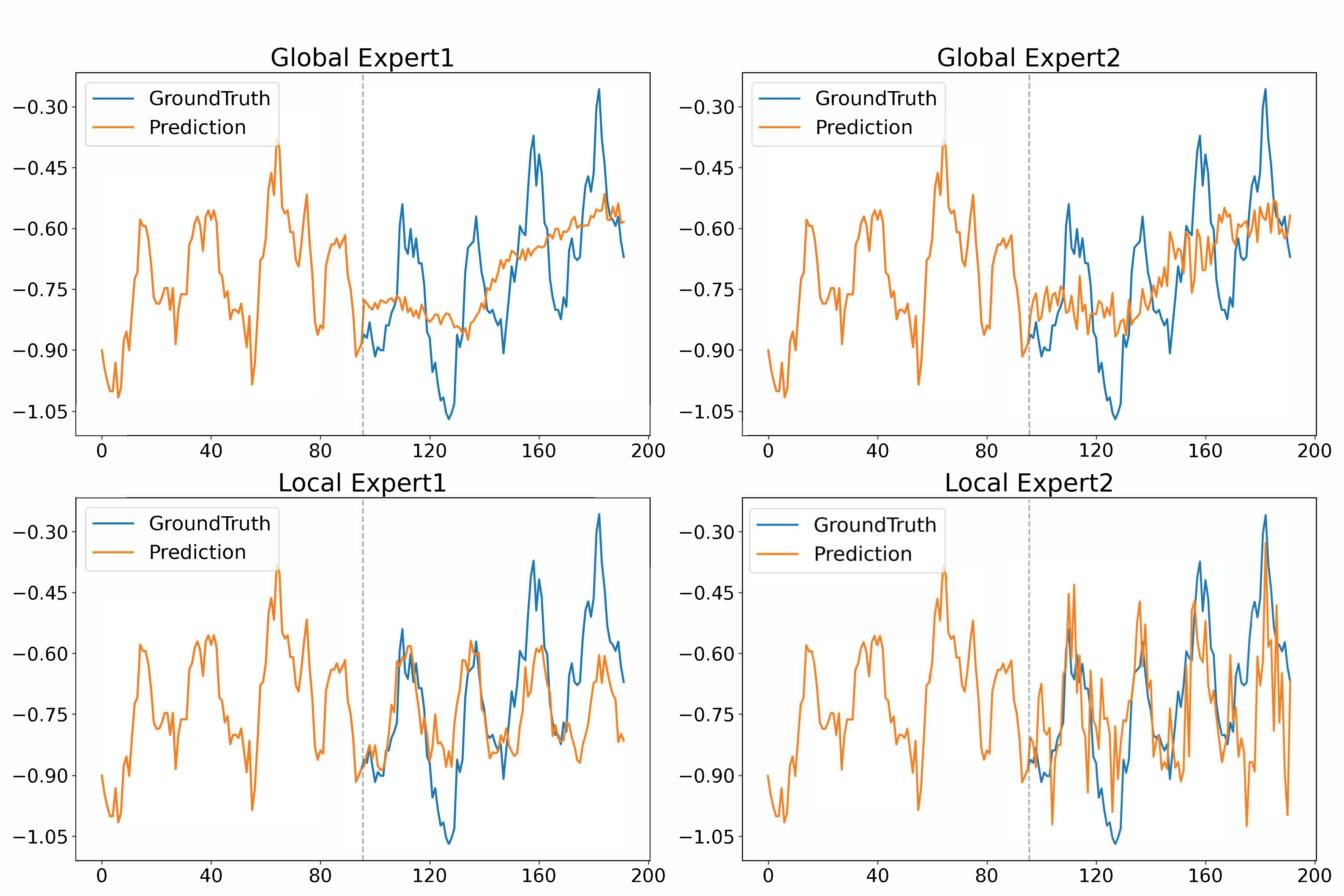

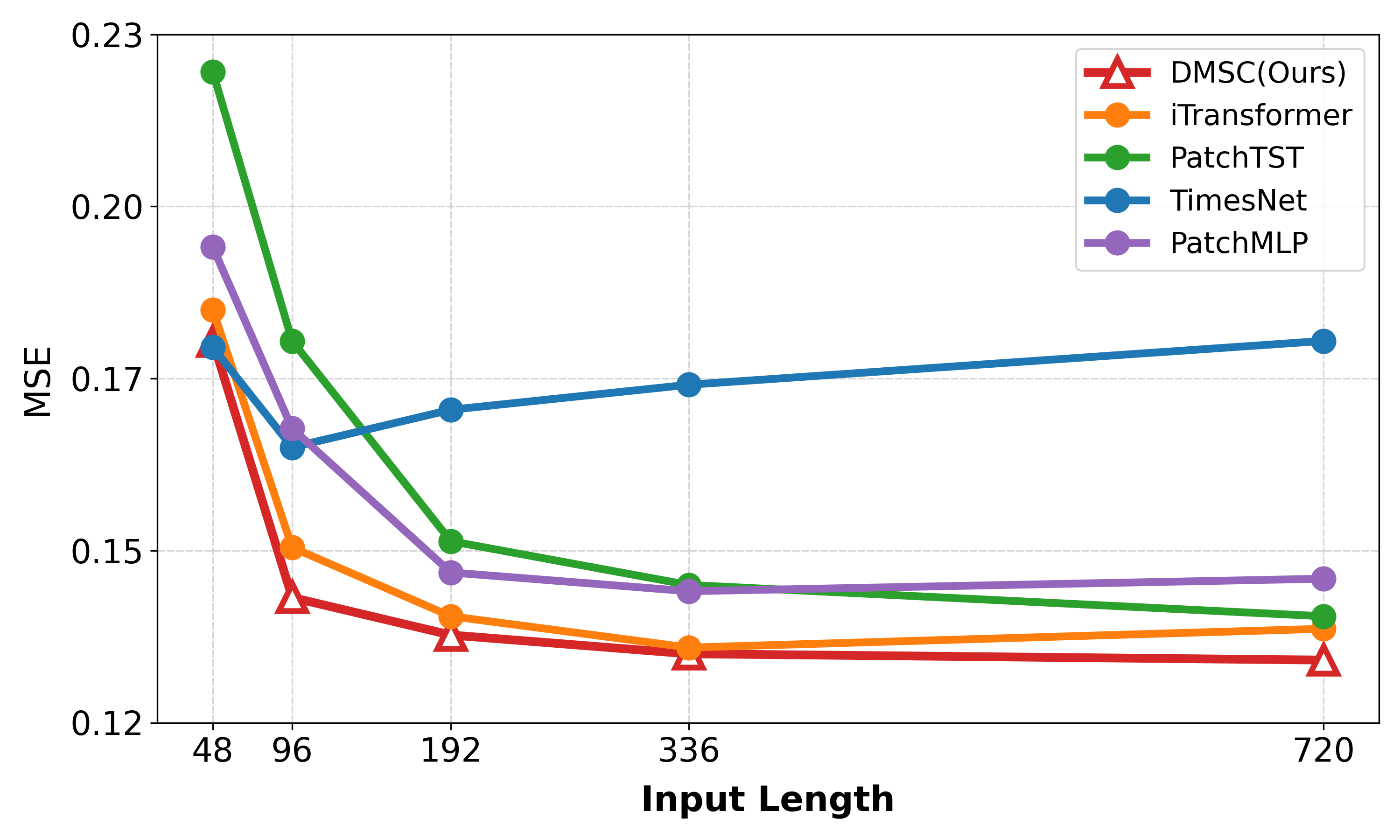

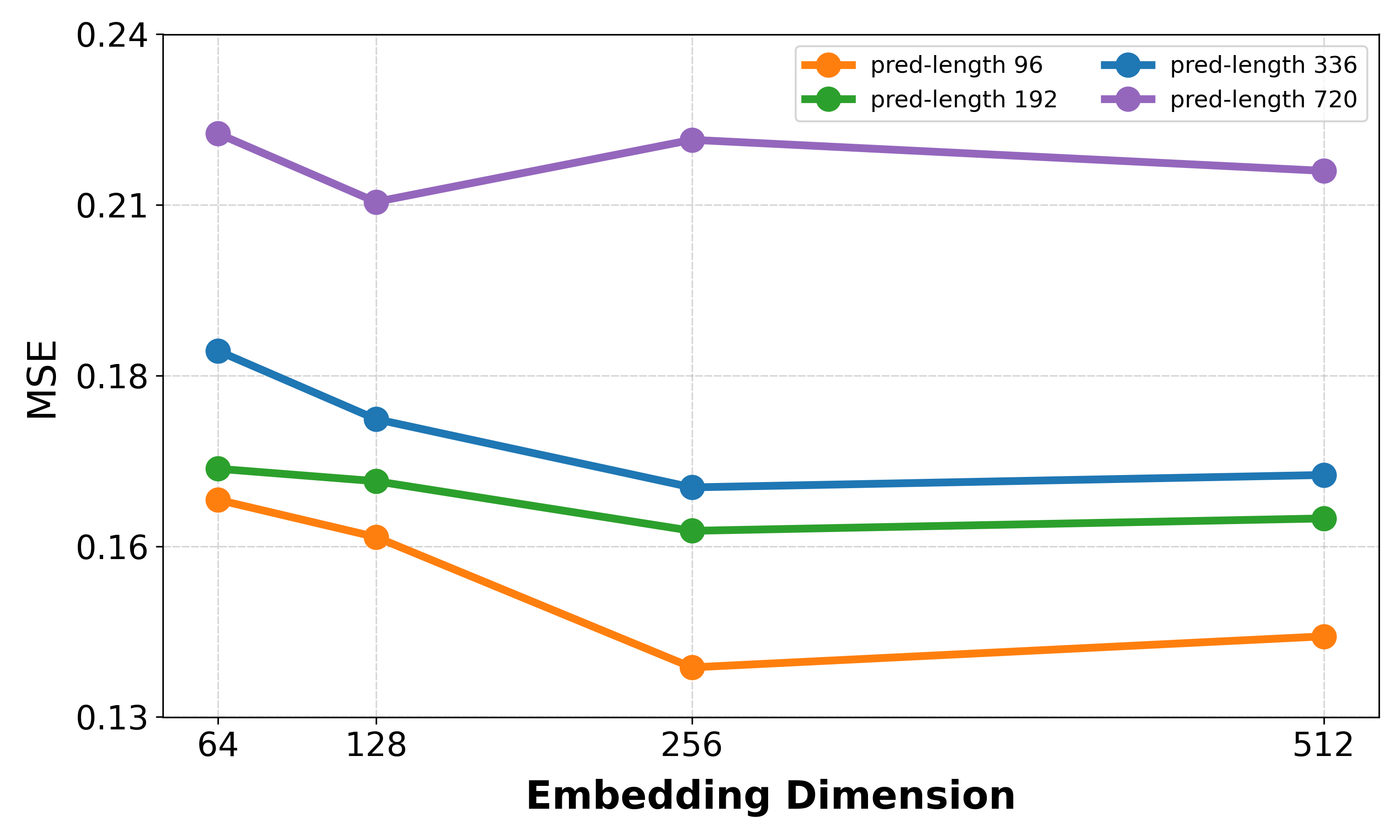

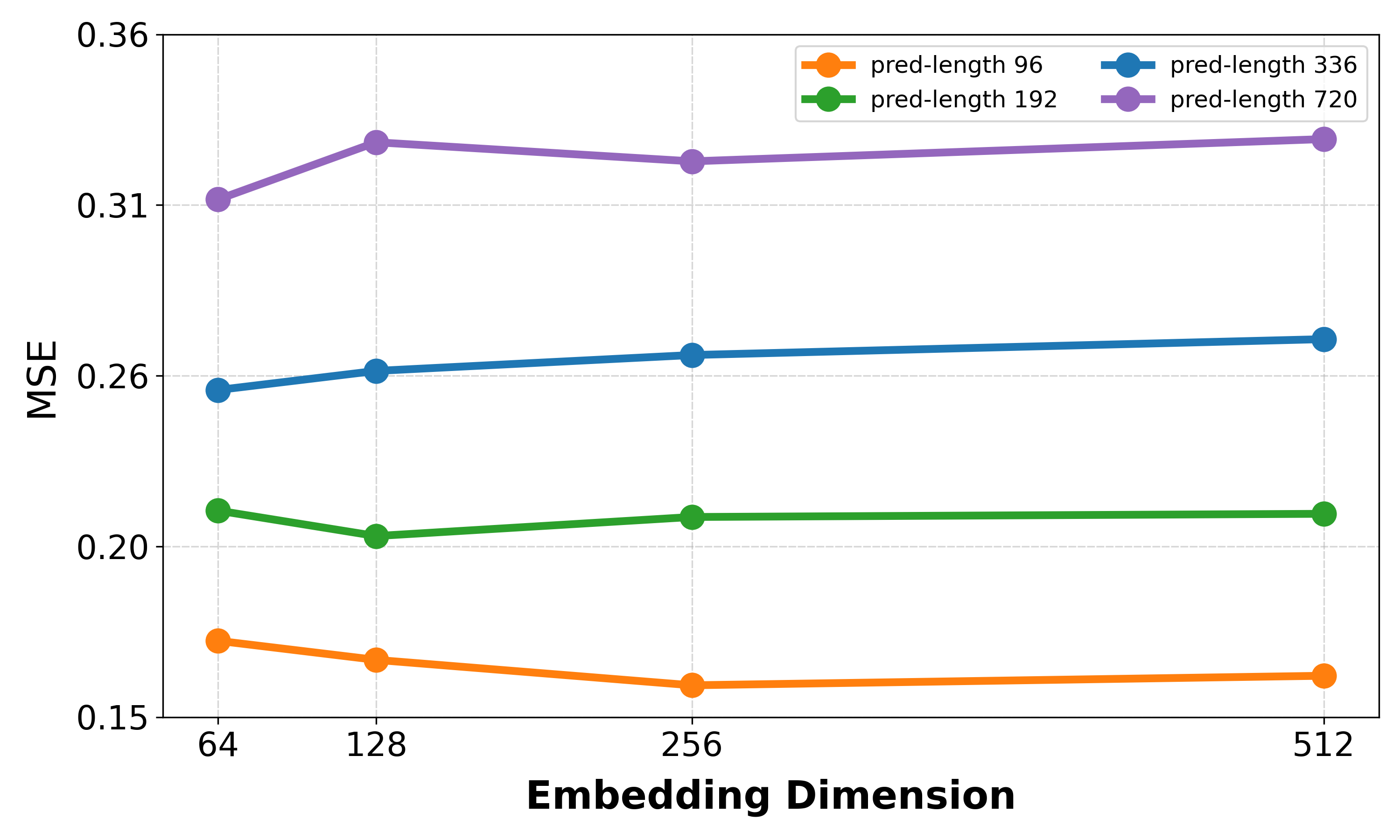

Abstract: Time Series Forecasting (TSF) faces persistent challenges in modeling intricate temporal dependencies across different scales. Despite recent advances that combine various decomposition operations with novel CNN-, MLP-, or Transformer-based architectures, existing methods still suffer from static decomposition strategies, fragmented dependency modeling, and inflexible fusion mechanisms, which limit their ability to capture these dependencies. To address these three problems in turn, we propose a novel Dynamic Multi-Scale Coordination Framework (DMSC) comprising a Multi-Scale Patch Decomposition block (EMPD), a Triad Interaction Block (TIB), and an Adaptive Scale Routing MoE block (ASR-MoE). Specifically, EMPD is designed as a built-in component that dynamically segments sequences into hierarchical patches with exponentially scaled granularities, eliminating predefined scale constraints through input-adaptive patch adjustment. TIB then jointly models intra-patch, inter-patch, and cross-variable dependencies within each layer's decomposed representations. EMPD and TIB are integrated into layers that form a multi-layer progressive cascade architecture, in which coarse-grained representations from earlier layers adaptively guide fine-grained feature extraction in subsequent layers via gated pathways. Finally, ASR-MoE dynamically fuses multi-scale predictions by leveraging specialized global and local experts with temporal-aware weighting. Comprehensive experiments on thirteen real-world benchmarks demonstrate that DMSC consistently achieves state-of-the-art (SOTA) performance and superior computational efficiency on TSF tasks. Code is available at https://github.com/1327679995/DMSC.
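
To make the idea of "hierarchical patches with exponentially scaled granularities" concrete, here is a minimal NumPy sketch. It is not the authors' EMPD implementation: the function name `exponential_patches`, the parameters `base_len` and `num_scales`, and the rule that scale `l` uses patch length `base_len * 2**l` are all assumptions made for illustration. The patch lengths here are fixed, whereas the abstract describes input-adaptive adjustment.

```python
import numpy as np


def exponential_patches(x, base_len=4, num_scales=3):
    """Split a 1-D series into non-overlapping patches at several scales.

    Hypothetical illustration: scale l uses patch length base_len * 2**l.
    Samples that do not fill a complete patch at a given scale are dropped.
    """
    x = np.asarray(x, dtype=float)
    patches_per_scale = []
    for level in range(num_scales):
        patch_len = base_len * 2 ** level   # 4, 8, 16, ... (assumed rule)
        num_patches = len(x) // patch_len   # full patches only
        trimmed = x[: num_patches * patch_len]
        patches_per_scale.append(trimmed.reshape(num_patches, patch_len))
    return patches_per_scale


series = np.arange(32)
patches = exponential_patches(series)
shapes = [p.shape for p in patches]
print(shapes)
```

For a series of length 32 with the defaults above, the three scales contain 8 patches of length 4, 4 patches of length 8, and 2 patches of length 16. Each scale could then be processed by its own interaction block, and the per-scale predictions fused afterwards.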
