Analysis of "Mamba or Transformer for Time Series Forecasting? Mixture of Universals (MoU) Is All You Need"

The paper under discussion, "Mamba or Transformer for Time Series Forecasting? Mixture of Universals (MoU) Is All You Need," addresses time series forecasting: predicting future values from a historical sequence of data. The task matters in domains such as climate prediction, financial investment, and energy management. The paper highlights limitations of existing forecasting methods and introduces a new architecture, the Mixture of Universals (MoU), which combines Transformer and Mamba models to capture both short-term and long-term dependencies efficiently.

Motivation and Contributions

Traditional approaches to time series forecasting have often struggled to capture short-term and long-term dependencies at the same time. Transformer models excel at modeling long-range dependencies through self-attention, but the cost of self-attention grows quadratically with sequence length. Mamba models, by contrast, run in near-linear time but may lose information over longer horizons. The MoU model is designed to address both problems through two primary innovations:

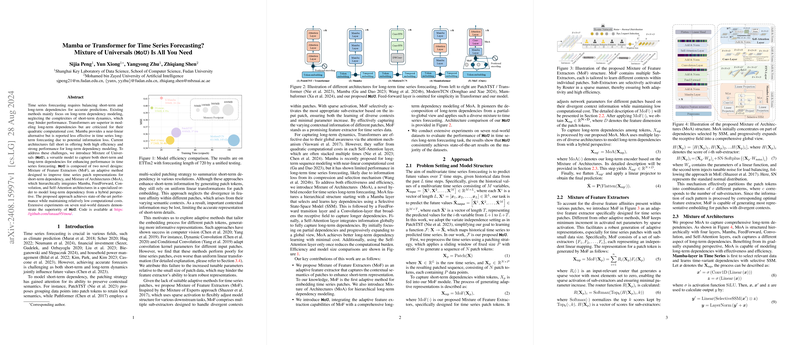

- Mixture of Feature Extractors (MoF): This component is designed to enhance the model's ability to capture short-term dependencies. MoF uses an adaptive strategy by employing multiple sub-extractors that can flexibly adjust to the varying semantic contexts of time series patches. This approach allows for consistent representation of diverse contexts without a significant increase in operational parameters.

- Mixture of Architectures (MoA): This component hierarchically integrates Mamba, Convolution, FeedForward, and Self-Attention layers. MoA first focuses on important partial dependencies using Mamba's Selective State-Space Model, then progressively widens that view until Self-Attention provides a global perspective, balancing efficiency and effectiveness across both dependency types.
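To make the MoF idea more concrete, the following is a minimal sketch of a sparse mixture of linear sub-extractors applied to a single patch. It is not the authors' implementation. The class name `MixtureOfExtractors`, the top-k softmax routing rule, the random initialization, and all sizes are assumptions chosen for illustration. The summary above says only that several sub-extractors adapt to the context of each patch.

```python
import math
import random


def linear(weights, x):
    """Multiply a (d_out x d_in) matrix, stored as nested lists, by vector x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def make_matrix(rng, d_out, d_in):
    """Random weights; a trained model would learn these instead."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(d_in)] for _ in range(d_out)]


class MixtureOfExtractors:
    """Hypothetical sparse mixture of linear sub-extractors (illustrative only)."""

    def __init__(self, patch_len, d_model, num_extractors, top_k, seed=0):
        rng = random.Random(seed)
        self.top_k = top_k
        # Router: one score per sub-extractor, computed from the raw patch.
        self.router = make_matrix(rng, num_extractors, patch_len)
        # Each sub-extractor is a separate linear map from patch to embedding.
        self.extractors = [
            make_matrix(rng, d_model, patch_len) for _ in range(num_extractors)
        ]

    def route(self, patch):
        """Return (extractor index, gate weight) pairs for the top-k extractors."""
        scores = linear(self.router, patch)
        order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
        chosen = order[: self.top_k]
        # Assumption: gates are a softmax over the selected scores only.
        gates = softmax([scores[i] for i in chosen])
        return list(zip(chosen, gates))

    def embed(self, patch):
        """Gate-weighted sum of the selected sub-extractors' outputs."""
        out = [0.0] * len(self.extractors[0])
        for idx, gate in self.route(patch):
            hidden = linear(self.extractors[idx], patch)
            out = [o + gate * h for o, h in zip(out, hidden)]
        return out


mof = MixtureOfExtractors(patch_len=4, d_model=3, num_extractors=4, top_k=2)
patch = [0.1, 0.2, 0.3, 0.4]
routing = mof.route(patch)
embedding = mof.embed(patch)
```

In this sketch only `top_k` of the sub-extractors run for any given patch, so the per-patch compute stays close to that of a single linear embedding even as more sub-extractors are added. That is one plausible reading of the claim that MoF avoids a significant increase in operational parameters.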

Experimental Evaluation and Findings

The paper validates the MoU approach through extensive experiments on seven real-world datasets covering a variety of time series prediction challenges. The model achieves state-of-the-art performance across these scenarios. Notably, MoU consistently outperforms strong baselines such as the linear model DLinear and the convolution-based ModernTCN, particularly across varying prediction lengths.

The ablation studies further reinforce the strengths of the proposed components. MoF's capability of handling diverse contexts is shown to yield more representative embeddings when compared to standard linear mappings and other adaptive methods like Dynamic Convolution. Similarly, the layered sequencing in MoA, especially the order from Mamba to Self-Attention, proves essential for effectively harnessing long-term dependencies.
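The local-to-global ordering can be illustrated with a toy stack over a sequence of scalars. Every layer here is a deliberately simplified stand-in and not the paper's implementation. `selective_scan` is a gated recurrence that only mimics the input-dependent, linear-time flavor of Mamba's selective state-space model, and `self_attention` uses the raw values as queries, keys, and values. The text fixes only Mamba first and Self-Attention last. The placement of the two middle layers follows the order in which the summary lists them and is an assumption.

```python
import math


def selective_scan(xs, decay_bias=0.0):
    """Toy stand-in for a selective SSM: an input-dependent gated recurrence.

    h_t = a_t * h_{t-1} + (1 - a_t) * x_t, with a_t = sigmoid(x_t + decay_bias).
    One pass over the sequence, so the cost is linear in its length.
    """
    h, out = 0.0, []
    for x in xs:
        a = 1.0 / (1.0 + math.exp(-(x + decay_bias)))
        h = a * h + (1.0 - a) * x
        out.append(h)
    return out


def causal_conv(xs, kernel=(0.25, 0.5, 0.25)):
    """Causal 1-D convolution: each output sees the current and earlier steps."""
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(xs)  # zero-pad on the left only
    return [
        sum(w * padded[t + j] for j, w in enumerate(kernel))
        for t in range(len(xs))
    ]


def feed_forward(xs, w1=2.0, w2=0.5):
    """Position-wise two-layer map with a ReLU in between."""
    return [w2 * max(0.0, w1 * x) for x in xs]


def self_attention(xs):
    """Scalar dot-product attention with q = k = v = x.

    Every position attends to every other one, so the cost is quadratic.
    """
    out = []
    for q in xs:
        scores = [q * k for k in xs]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        z = sum(weights)
        out.append(sum(w * v for w, v in zip(weights, xs)) / z)
    return out


def run_stack(xs, layers):
    """Apply the layers in order, feeding each output into the next layer."""
    for layer in layers:
        xs = layer(xs)
    return xs


# Assumed ordering: recurrent/local layers first, global attention last.
LOCAL_TO_GLOBAL = [selective_scan, causal_conv, feed_forward, self_attention]

series = [0.2, -0.1, 0.4, 0.9, 0.3, -0.5]
result = run_stack(series, LOCAL_TO_GLOBAL)
```

Reordering the list passed to `run_stack` (for example, moving `self_attention` to the front) is a simple way to experiment with the kind of ordering ablation described above. This toy carries no learned weights and says nothing about which order forecasts better.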

Implications and Future Directions

The introduction of MoU marks a significant step forward in time series forecasting. Its dual-focus architecture addresses the prevalent trade-offs in capturing different dependency horizons while maintaining computational efficiency. The research opens several avenues for future inquiry:

- Model Adaptability: MoU's effectiveness across diverse real-world datasets suggests exploring how well it adapts to other structured data domains.

- Scalability and Real-time Application: Future work could explore how MoU scales, particularly its performance in real-time data processing, which is increasingly important in dynamic, fast-evolving industries.

- Optimizing Computational Resources: Given the rise of edge computing and constraints on computational resources, further optimizing MoU's computational footprint or incorporating sparsity and pruning methods could also enhance its applicability and deployment at scale.

Overall, the paper combines existing architectures into MoU in a compelling way and makes substantive contributions to time series forecasting, leaving room for both further theoretical work and practical deployment.