- The paper demonstrates that incorporating chain-of-thought reasoning enhances synthetic prompt quality, achieving up to 57.2% accuracy improvements on benchmarks.

- It employs a two-stage process—prompt generation followed by filtering via Answer-Consistency and RIP—to ensure high data quality.

- The study shows synthetic prompts can outperform human-curated data in both reasoning and instruction-following tasks, indicating scalable model alignment.

CoT-Self-Instruct: High-Quality Synthetic Prompt Generation for Reasoning and Non-Reasoning Tasks

Introduction

The paper "CoT-Self-Instruct: Building high-quality synthetic prompts for reasoning and non-reasoning tasks" (2507.23751) introduces a synthetic data generation and curation pipeline that leverages Chain-of-Thought (CoT) reasoning to produce high-quality prompts for both verifiable reasoning and general instruction-following tasks. The method addresses the limitations of human-generated data—such as cost, scarcity, and inherent biases—by enabling LLMs to autonomously generate, reason about, and filter synthetic instructions. The approach is evaluated across a range of benchmarks, demonstrating consistent improvements over prior synthetic and human-curated datasets.

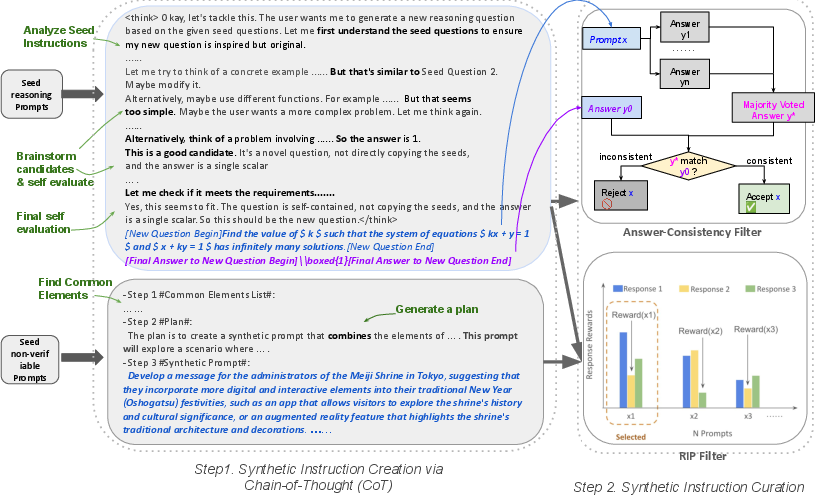

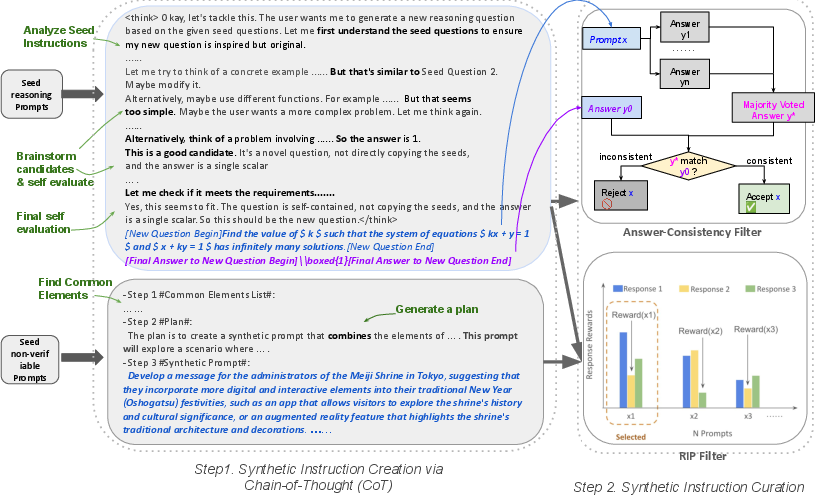

Figure 1: The CoT-Self-Instruct pipeline: LLMs are prompted to reason and generate new instructions from seed prompts, followed by automatic curation using Answer-Consistency for verifiable tasks or RIP for non-verifiable tasks.

Methodology

Synthetic Instruction Creation via Chain-of-Thought

CoT-Self-Instruct operates in two main stages:

- Synthetic Instruction Generation: Given a small pool of high-quality, human-annotated seed instructions, the LLM is prompted to analyze, reason step-by-step, and generate new instructions of comparable complexity and domain. For reasoning tasks, the model is further prompted to solve its own generated question, producing both the instruction and a verifiable answer. For general instruction-following, only the instruction is generated, with responses evaluated later by a reward model.

- Synthetic Instruction Curation: To ensure data quality, the generated instructions are filtered:

- Verifiable Reasoning Tasks: The Answer-Consistency filter discards examples where the LLM’s majority-vote answer does not match the CoT-generated answer, removing ambiguous or incorrectly labeled data.

- Non-Verifiable Tasks: The Rejecting Instruction Preferences (RIP) filter uses a reward model to score multiple responses per prompt, retaining only those prompts with high minimum response scores.

This two-stage process is designed to maximize the quality and utility of synthetic data for downstream RL-based training.

Implementation Details

- Prompt Engineering: The CoT-Self-Instruct templates explicitly require the LLM to reflect on the seed instructions, plan, and reason before generating new prompts. For reasoning tasks, the prompt enforces a strict format for both the question and answer, facilitating downstream verification.

- Filtering: For Answer-Consistency, K responses are sampled per prompt, and only those with matching answers are retained. For RIP, the lowest reward score among K responses is used as the prompt’s score, and prompts below a quantile threshold are filtered out.

- Training: Models are fine-tuned using RL algorithms (e.g., GRPO for reasoning, DPO for instruction-following) on the curated synthetic datasets. Hyperparameters are carefully selected for each domain, and evaluation is performed on established benchmarks.

Experimental Results

Reasoning Tasks

- CoT-Self-Instruct vs. Self-Instruct: Synthetic instructions generated with CoT reasoning consistently outperform those generated without CoT. For example, on a suite of mathematical reasoning benchmarks (MATH500, AMC23, AIME24, GPQA-Diamond), CoT-Self-Instruct achieves an average accuracy of 53.0%, compared to 49.5% for Self-Instruct (both unfiltered).

- Impact of Filtering: Applying Answer-Consistency filtering to CoT-Self-Instruct data further increases average accuracy to 57.2%. Filtering Self-Instruct data with Self-Consistency or RIP also improves performance, but CoT-Self-Instruct maintains a clear advantage.

- Comparison to Human and Public Datasets: CoT-Self-Instruct with filtering outperforms both the s1k (44.6%) and OpenMathReasoning (47.5%) datasets, even when training set sizes are matched.

Non-Reasoning Instruction-Following Tasks

- CoT-Self-Instruct vs. Self-Instruct and Human Prompts: On AlpacaEval 2.0 and Arena-Hard, CoT-Self-Instruct synthetic prompts yield higher win rates and scores than both Self-Instruct and human-curated WildChat prompts. For instance, CoT-Self-Instruct with RIP filtering achieves an average score of 54.7, compared to 49.1 for Self-Instruct+RIP and 50.7 for WildChat+RIP.

- Online DPO: In online DPO settings, CoT-Self-Instruct+RIP achieves 67.1, surpassing WildChat (63.1).

- Effect of CoT Length: Longer CoT chains in prompt generation yield further improvements over shorter CoT or no-CoT templates.

Analysis and Discussion

Data Quality and Scaling

The results demonstrate that the quality of synthetic data, rather than sheer quantity, is critical for effective LLM post-training. CoT reasoning during prompt generation leads to more challenging, diverse, and well-structured instructions, which in turn produce better downstream performance. Filtering methods such as Answer-Consistency and RIP are essential for removing ambiguous or low-quality data, especially as synthetic datasets scale.

Robustness Across Domains

The CoT-Self-Instruct framework is effective for both verifiable reasoning and open-ended instruction-following tasks. The modularity of the curation step (Answer-Consistency vs. RIP) allows the method to adapt to the presence or absence of verifiable ground truth, making it broadly applicable.

Implications for Model Alignment and Self-Improvement

The findings suggest that LLMs can be leveraged not only as data generators but also as data curators, autonomously improving their own training data. This has implications for scalable model alignment, reducing reliance on costly human annotation, and mitigating issues of data contamination and recursive model collapse [shumailov2024ai].

Limitations and Future Directions

While CoT-Self-Instruct demonstrates strong empirical gains, several open questions remain:

- Theoretical understanding of why CoT-based synthetic data is more effective than direct instruction generation.

- Potential for further improvements via more sophisticated planning, reflection, or backtracking mechanisms during prompt generation.

- Exploration of hybrid pipelines combining human and synthetic data, or integrating external knowledge sources.

Conclusion

CoT-Self-Instruct establishes a robust pipeline for generating and curating high-quality synthetic prompts for both reasoning and non-reasoning tasks. By explicitly incorporating step-by-step reasoning and rigorous filtering, the method produces synthetic datasets that consistently outperform both prior synthetic and human-curated data across multiple benchmarks. The approach highlights the potential for LLMs to autonomously generate and curate their own training data, with significant implications for scalable model alignment and continual self-improvement in LLMs.