Enhancing Reasoning in LLMs Through CoT-Decoding

Introduction to Chain-of-Thought (CoT) Decoding

Large language models (LLMs) have shown remarkable capabilities on complex reasoning tasks, typically elicited through few-shot or zero-shot prompting. This approach relies heavily on manual prompt engineering, which makes it hard to tell how much of the reasoning is intrinsic to the model and how much is induced by the prompt. The paper by Xuezhi Wang and Denny Zhou of Google DeepMind departs from this approach by exploring a different strategy: eliciting chain-of-thought reasoning without explicit prompting, relying on the model's inherent reasoning capabilities.

Discovering Inherent Reasoning Paths

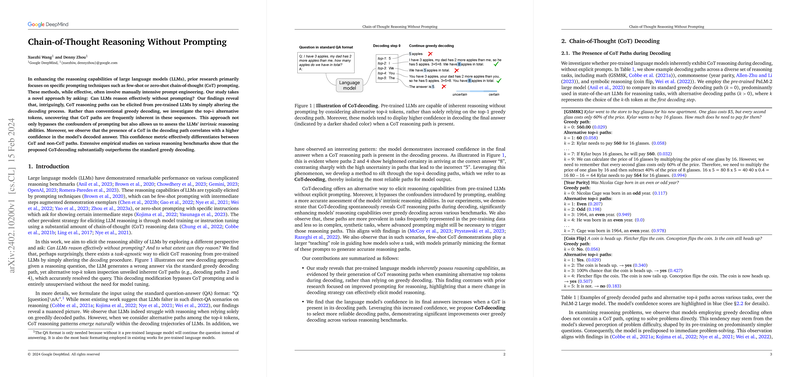

Remarkably, the research indicates that LLMs can reason effectively without being explicitly prompted to do so. This is revealed by modifying the decoding process to consider alternative top-k tokens instead of following only the conventional greedy path. The alternative paths generated this way often contain CoT reasoning. The method avoids the usual reliance on prompt engineering and sheds light on the LLMs' intrinsic reasoning abilities. Furthermore, the presence of a CoT path during decoding correlates with higher model confidence in the final answer, which means CoT paths can be distinguished from non-CoT paths using confidence metrics.
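The idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the "model" is a hypothetical lookup table (`TOY`) standing in for an LLM's next-token distribution, branching happens only at the first decoding step, and confidence is taken to be the average gap between the top two token probabilities at the answer tokens. The names `cot_decode`, `greedy_continue`, `toy_model`, and `is_answer_token` are invented for this example.

```python
from typing import Callable, Dict, List, Tuple

EOS = "<eos>"

# Hypothetical stand-in for an LLM: maps a token prefix to next-token probabilities.
TOY: Dict[Tuple[str, ...], Dict[str, float]] = {
    ("Q",): {"It's": 0.55, "First": 0.45},
    ("Q", "It's"): {"5": 0.40, "8": 0.35, "7": 0.25},
    ("Q", "It's", "5"): {EOS: 1.0},
    ("Q", "First"): {"3+5": 0.9, "3+2": 0.1},
    ("Q", "First", "3+5"): {"=": 1.0},
    ("Q", "First", "3+5", "="): {"8": 0.95, "5": 0.05},
    ("Q", "First", "3+5", "=", "8"): {EOS: 1.0},
}


def toy_model(prefix: Tuple[str, ...]) -> Dict[str, float]:
    return TOY[prefix]


def ranked(dist: Dict[str, float]) -> List[Tuple[str, float]]:
    """Tokens sorted by probability, highest first."""
    return sorted(dist.items(), key=lambda kv: kv[1], reverse=True)


def greedy_continue(model, prefix: List[str], max_len: int = 20):
    """Extend prefix greedily; record the top-1 minus top-2 probability gap per step."""
    tokens, margins = list(prefix), []
    for _ in range(max_len):
        top = ranked(model(tuple(tokens)))
        tok, p1 = top[0]
        p2 = top[1][1] if len(top) > 1 else 0.0
        if tok == EOS:
            break
        tokens.append(tok)
        margins.append(p1 - p2)
    return tokens, margins


def cot_decode(model, prompt: List[str], k: int,
               is_answer_token: Callable[[str], bool]):
    """Branch on the top-k first tokens, decode each branch greedily,
    and rank the branches by average margin over answer tokens (assumed metric)."""
    paths = []
    for first_tok, _ in ranked(model(tuple(prompt)))[:k]:
        tokens, margins = greedy_continue(model, prompt + [first_tok])
        generated = tokens[len(prompt) + 1:]  # tokens after the branch token
        answer_margins = [m for t, m in zip(generated, margins) if is_answer_token(t)]
        conf = sum(answer_margins) / len(answer_margins) if answer_margins else 0.0
        paths.append((conf, tokens[len(prompt):]))
    return sorted(paths, key=lambda p: p[0], reverse=True)


greedy_only = cot_decode(toy_model, ["Q"], k=1, is_answer_token=str.isdigit)
branched = cot_decode(toy_model, ["Q"], k=2, is_answer_token=str.isdigit)
print(greedy_only[0][1])  # ['It's', '5']: direct answer, low confidence
print(branched[0][1])     # ['First', '3+5', '=', '8']: reasoning path, high confidence
```

In this toy setup the greedy path jumps straight to an answer with a small probability gap, while the second-ranked first token leads to a step-by-step path whose answer token has a much larger gap, so ranking by confidence surfaces the reasoning path. With a real model, `toy_model` would be replaced by a call that returns next-token probabilities, and identifying which tokens form the answer would need its own heuristic.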

Empirical Findings from Various Benchmarks

The empirical evaluation across multiple reasoning benchmarks shows substantial improvements in reasoning performance with the proposed CoT-decoding method compared to standard greedy decoding. The improvement was especially pronounced on tasks that are well represented in the pre-training data. Conversely, for complex, synthetic tasks, CoT paths appeared less often, suggesting that advanced prompting may still be necessary to elicit reasoning in these scenarios.

Implications and Future Directions

This investigation into the unsupervised elicitation of reasoning has significant implications for the development of LLMs. It demonstrates that reasoning can be enhanced without the intricate process of prompt design, by changing only the decoding strategy. The findings also suggest that examining LLMs' pre-training data more closely could help in understanding and leveraging their inherent reasoning capabilities.

Moreover, the paper opens several avenues for future research, particularly the efficient application of CoT-decoding and further exploration of the model's intrinsic reasoning strategies. Promising directions include finding the optimal choice of k (the number of alternative top tokens considered) for various tasks and fine-tuning models on CoT-decoding paths to further enhance their reasoning capabilities.

Conclusion

The research presented by Wang and Zhou marks a significant advance in our understanding of LLMs' reasoning capabilities. By demonstrating that LLMs can reason without explicit prompting, this work challenges the prevailing focus on prompting techniques in AI research. As the field continues to evolve, the paper paves the way for more nuanced approaches to eliciting and enhancing the reasoning abilities of LLMs.