- The paper introduces a model-agnostic framework that integrates early, late, and intermediate fusion to boost predictive performance.

- It employs an adaptive mutual learning mechanism to share information among heterogeneous models while reducing generalization errors.

- Empirical results in domains such as Alzheimer's disease detection show that Meta Fusion consistently outperforms traditional fusion methods.

Meta Fusion is a framework for multimodal data fusion that unifies the classical strategies (early, late, and intermediate fusion) and adds a coordinated mutual learning mechanism to improve predictive performance. This essay covers its implementation, its theoretical insights, and its empirical validation.

Framework Overview

The Meta Fusion framework is model-agnostic, so it can adapt to the characteristics of each data modality. It constructs a cohort of models, each focused on a different combination of latent data representations, and uses soft information sharing based on mutual learning to boost ensemble performance. The most informative fusion strategy is selected on the fly, which addresses the two central questions of "how" to fuse and "what" to fuse.

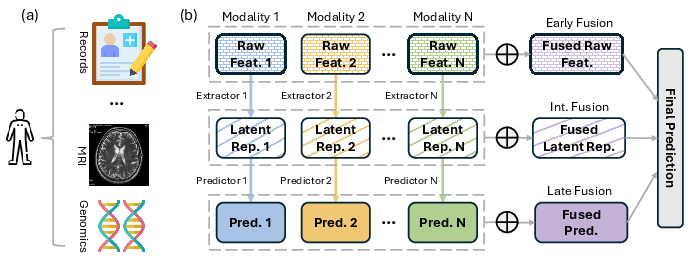

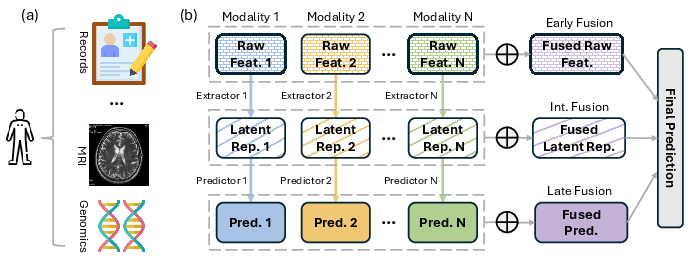

Figure 1: Overview of classical multimodal fusion strategies. (a) Example of multimodal data sources used in medical diagnosis, including patient records, MRI scans, and genomic data. (b) Illustration of the three broad fusion categories: early fusion, late fusion, and intermediate fusion.

Methodology Implementation

Constructing the Student Cohort

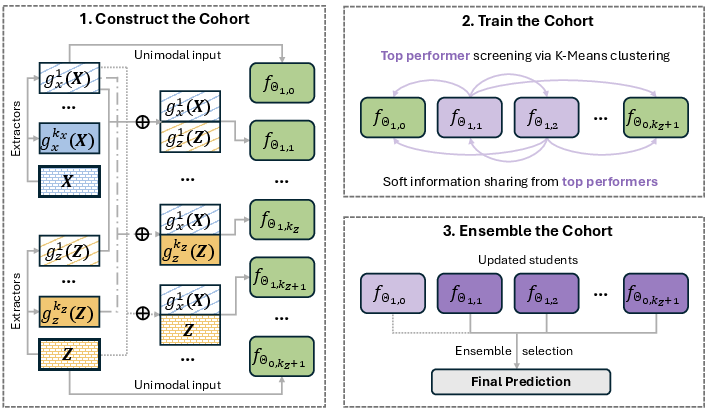

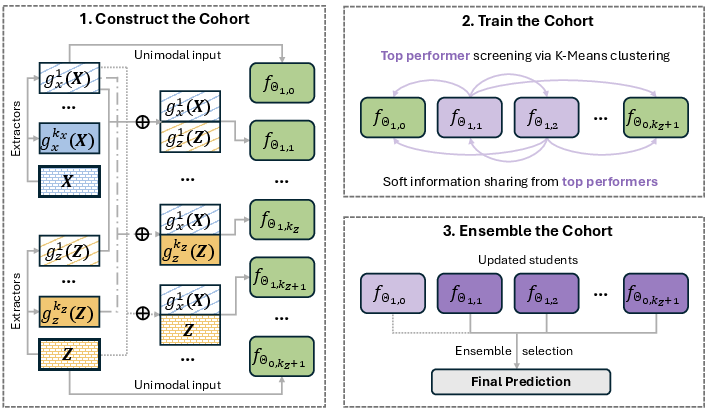

Meta Fusion constructs a cohort of heterogeneous models, each using a different combination of latent representations across modalities. Because the framework is model-agnostic, each modality can use a suitable architecture, for example convolutional networks for images and transformer-based models for text. The cohort covers all viable combinations of latent representations, which broadens the range of cross-modal features the models can discover (Figure 2).

Figure 2: Overview of the Meta Fusion pipeline.
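As a rough illustration of the cohort construction described above, the sketch below enumerates every non-empty combination of latent representations across modalities. The function name `build_cohort`, the modality names, and the representation labels are hypothetical placeholders, not identifiers from the paper, and the rule "each modality contributes at most one representation per student" is an assumption made for the example.

```python
from itertools import product


def build_cohort(latent_options):
    """Enumerate student configurations (illustrative only).

    latent_options maps a modality name to a list of candidate latent
    representations. Each student picks at most one representation per
    modality; None means the modality is left out of that student.
    """
    modalities = sorted(latent_options)
    choices = [[None] + list(latent_options[m]) for m in modalities]
    cohort = []
    for combo in product(*choices):
        config = {m: c for m, c in zip(modalities, combo) if c is not None}
        if config:  # skip the empty combination that uses no modality
            cohort.append(config)
    return cohort


# Hypothetical example: two modalities with two representations each.
cohort = build_cohort({
    "image": ["raw", "cnn_embedding"],
    "text": ["raw", "encoder_embedding"],
})
for student in cohort:
    print(student)
```

Under this reading, single-modality students, an early-fusion-style student (raw inputs from all modalities), and intermediate-fusion-style students (learned embeddings) all appear in the same cohort.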

Mutual Learning Dynamics

The core innovation of Meta Fusion is its adaptive mutual learning mechanism. Information is shared selectively: models are penalized for disagreeing with the top-performing members of the cohort, which discourages negative knowledge transfer from weaker peers. This soft information sharing aligns model outputs rather than model parameters, so heterogeneous architectures can learn from one another. The theoretical analysis shows, and the experiments confirm, that this adaptive formulation is critical to reducing generalization error.
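The following is a minimal sketch of how an output-alignment penalty of this kind could be computed for one batch. It is not the paper's exact objective: the squared-distance disagreement measure, the rule of choosing the `top_k` students with the lowest task loss as teachers, and the weight `rho` are all assumptions made for illustration.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(probs, labels):
    # Mean negative log-likelihood of the true class over the batch.
    return -sum(math.log(p[y] + 1e-12) for p, y in zip(probs, labels)) / len(labels)


def disagreement(probs_a, probs_b):
    # Mean squared distance between two students' predicted probabilities.
    total = sum(
        sum((a - b) ** 2 for a, b in zip(pa, pb))
        for pa, pb in zip(probs_a, probs_b)
    )
    return total / len(probs_a)


def mutual_learning_losses(logits_per_student, labels, top_k=1, rho=0.5):
    """Return (per-student losses, teacher indices) for one batch."""
    probs = [[softmax(row) for row in logits] for logits in logits_per_student]
    task = [cross_entropy(p, labels) for p in probs]
    # Assumed selection rule: teachers are the top_k students by task loss.
    teachers = sorted(range(len(probs)), key=lambda i: task[i])[:top_k]
    losses = []
    for i, p in enumerate(probs):
        peers = [j for j in teachers if j != i]  # a student never teaches itself
        penalty = sum(disagreement(p, probs[j]) for j in peers) / len(peers) if peers else 0.0
        losses.append(task[i] + rho * penalty)
    return losses, teachers


# Hypothetical batch: two samples, two classes, three students.
labels = [0, 1]
weak = [[0.0, 5.0], [5.0, 0.0]]
strong = [[5.0, 0.0], [0.0, 5.0]]
medium = [[1.0, 0.0], [0.0, 1.0]]
losses, teachers = mutual_learning_losses([weak, strong, medium], labels)
print(teachers, losses)
```

Because the penalty compares predicted probabilities only, students with entirely different architectures can be aligned. In a real training loop the teachers' outputs would typically be treated as constants when differentiating a student's loss; that detail is also an assumption here.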

Aggregating Predictions

Model predictions are combined with ensemble learning techniques such as ensemble selection. The aggregation step favors model diversity while ensuring that the top-performing models identified during soft information sharing are included. By drawing on diverse representations and optimizing the collective predictive capacity of the cohort, the final ensemble achieves strong discriminative ability across varied scenarios.
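One common form of ensemble selection is greedy forward selection with replacement on a validation set. The sketch below follows that generic recipe and may differ from the paper's procedure; the `init` argument, which seeds the ensemble with previously identified top performers, along with the accuracy metric and the `max_size` cap, are assumptions for illustration.

```python
def average(prob_sets):
    # Element-wise mean of several models' predicted probabilities.
    n = len(prob_sets)
    n_samples = len(prob_sets[0])
    n_classes = len(prob_sets[0][0])
    return [
        [sum(ps[i][k] for ps in prob_sets) / n for k in range(n_classes)]
        for i in range(n_samples)
    ]


def accuracy(probs, labels):
    preds = [max(range(len(p)), key=p.__getitem__) for p in probs]
    return sum(int(a == b) for a, b in zip(preds, labels)) / len(labels)


def ensemble_selection(val_probs, labels, init=(), max_size=5):
    """Greedily add the model (repeats allowed) that most improves accuracy."""
    selected = list(init)
    best = accuracy(average([val_probs[i] for i in selected]), labels) if selected else -1.0
    while len(selected) < max_size:
        scored = [
            (accuracy(average([val_probs[j] for j in selected + [i]]), labels), i)
            for i in range(len(val_probs))
        ]
        # Highest score wins; ties go to the lower model index.
        score, idx = max(scored, key=lambda t: (t[0], -t[1]))
        if score <= best:
            break  # no candidate improves the ensemble
        selected.append(idx)
        best = score
    return selected, best


# Hypothetical validation predictions from three models on four samples.
labels = [0, 1, 0, 1]
model_a = [[0.9, 0.1], [0.1, 0.9], [0.9, 0.1], [0.6, 0.4]]
model_b = [[0.45, 0.55], [0.55, 0.45], [0.45, 0.55], [0.1, 0.9]]
model_c = [[0.1, 0.9], [0.9, 0.1], [0.1, 0.9], [0.9, 0.1]]
selected, score = ensemble_selection([model_a, model_b, model_c], labels)
print(selected, score)
```

In this toy example the second model is weak on its own but corrects the first model's only error, which is the kind of complementary diversity the aggregation step is meant to reward.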

Theoretical Insights

The theoretical analysis establishes that adaptive mutual learning in Meta Fusion reduces the component of the generalization error associated with intrinsic variance, without adversely affecting the bias. The analysis also offers new theoretical insight into deep mutual learning, describing how structured interaction during training reduces epistemic uncertainty and improves task performance.
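For orientation, the standard bias-variance decomposition of squared error makes the claim concrete. This is the textbook identity, not the paper's own theorem; the symbols $\hat f$ (a model trained on a random sample $D$), $f$ (the target function), and $x$ (a fixed input) are notation introduced here, and observation noise is ignored.

```latex
\mathbb{E}_D\!\left[\big(\hat f(x) - f(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}_D[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\!\left[\big(\hat f(x) - \mathbb{E}_D[\hat f(x)]\big)^2\right]}_{\text{variance}}
```

Read against this identity, the result says that mutual learning shrinks the second term while leaving the first no worse.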

Empirical Evaluation

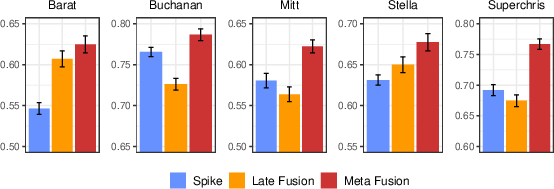

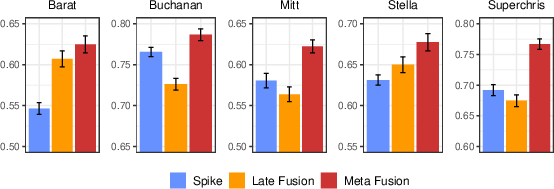

Meta Fusion outperforms traditional fusion methods in both synthetic experiments and real-world applications. It is effective in challenging domains such as Alzheimer's disease detection and neural decoding, where it improves classification accuracy by integrating disparate data sources. Benchmark comparisons show consistent gains, which are attributed to the framework's coordinated use of multiple fusion strategies and its adaptive learning.

Figure 3: Classification accuracy of Meta Fusion and top benchmarks (Spike-only and Late Fusion) on the memory task for all five rats. Error bars indicate the standard errors. Results are summarized over 100 repetitions.

Conclusion

Meta Fusion advances multimodal data fusion by unifying the classical techniques in a single framework rooted in mutual learning, and it delivers strong performance across the synthetic and real-world settings studied. Future work may extend the framework to missing-data scenarios, which would broaden its applicability to more complex predictive settings.