Overview of "MMTM: Multimodal Transfer Module for CNN Fusion"

The paper "MMTM: Multimodal Transfer Module for CNN Fusion" introduces a neural network module for fusing multiple modalities in convolutional neural networks (CNNs). The Multimodal Transfer Module (MMTM) lets the unimodal CNN branches exchange information at intermediate layers. Conventional late fusion, by contrast, combines the branches only at their outputs.

Core Contributions

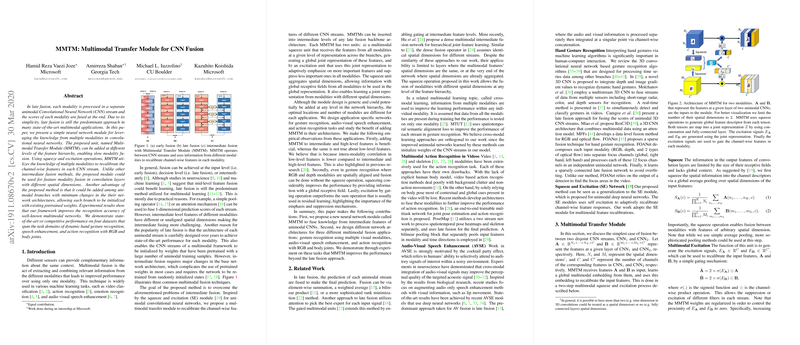

- Multimodal Transfer Module (MMTM): The principal contribution of this research is the MMTM itself. This block can be inserted at several stages of each unimodal branch's hierarchical feature extraction to combine information across modalities. It adapts the squeeze-and-excitation mechanism from unimodal networks. A squeeze step summarizes each modality's feature map as a per-channel descriptor, and the descriptors of both modalities are combined into a joint representation. An excitation step then uses that joint representation to recalibrate the channels of each modality. Because the squeeze step removes the spatial dimensions, MMTM can fuse modalities whose internal feature maps have different spatial dimensions.

- Minimal Architectural Changes: A notable advantage of MMTM is its compatibility with existing CNN architectures. It can be added to unimodal branches with minimal structural changes, so each branch can be initialized with its existing pretrained weights. This makes the module easy to adopt in a wide range of applications.

- Empirical Evaluation Across Domains:

The effectiveness of MMTM is demonstrated through experiments on several challenging datasets from diverse domains:

  - Dynamic hand gesture recognition
  - Audio-visual speech enhancement
  - Action recognition involving both RGB and skeletal data

- Superior Performance: Extensive experiments show that MMTM achieves state-of-the-art or highly competitive results on the evaluated datasets. This indicates that fusing modalities at intermediate layers improves results on both the recognition tasks and the speech enhancement task.
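The squeeze-and-excitation steps described above can be written compactly. The following is a sketch for two modalities under stated assumptions, not the paper's exact formulation. A has spatial sizes N_1, …, N_K and C channels. B has spatial sizes M_1, …, M_L and C' channels. The sketch assumes a ReLU δ on the joint representation and sigmoid gates σ as in squeeze-and-excitation blocks. The paper's layer sizes and any scaling constants are not reproduced here.

```latex
\begin{aligned}
S_A(c)  &= \frac{1}{N_1 \cdots N_K} \sum_{n_1, \dots, n_K} A(n_1, \dots, n_K, c), \qquad c = 1, \dots, C \\
S_B(c') &= \frac{1}{M_1 \cdots M_L} \sum_{m_1, \dots, m_L} B(m_1, \dots, m_L, c'), \qquad c' = 1, \dots, C' \\
Z       &= \delta\left(W \, [S_A, S_B] + b\right) \\
E_A     &= W_A Z + b_A, \qquad E_B = W_B Z + b_B \\
\tilde{A} &= \sigma(E_A) \odot A, \qquad \tilde{B} = \sigma(E_B) \odot B
\end{aligned}
```

Here [·,·] denotes concatenation, and ⊙ scales every spatial position of a channel by that channel's gate. S_A has length C and S_B has length C' regardless of the spatial sizes N_k and M_l. The fusion therefore works even when the two feature maps differ in spatial shape.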

Technical Approach

- Architecture of MMTM:

MMTM first applies a squeeze operation (global average pooling) to each modality's feature map. This yields a per-channel global descriptor regardless of the map's spatial dimensions, which is what allows features of different spatial sizes to be combined. The descriptors of both modalities are then merged into a joint representation. Next, an excitation step computed separately for each modality recalibrates that modality's channels, so each stream is modulated by information from the other.
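The squeeze, joint-representation, and excitation steps can be illustrated with a minimal NumPy sketch for two modalities. Everything specific here is an assumption for illustration and not the authors' implementation:

- the class name `MMTMSketch` and the channels-last layout
- random, untrained weights
- the hypothetical bottleneck width `(c_a + c_b) // ratio`
- a ReLU on the joint representation
- plain sigmoid gates borrowed from squeeze-and-excitation blocks

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class MMTMSketch:
    """Hypothetical two-modality MMTM-style block (not the paper's code).

    Feature maps are channels-last: (batch, *spatial_dims, channels).
    The two modalities may differ in the number and size of spatial dims.
    """

    def __init__(self, c_a, c_b, ratio=4, seed=0):
        rng = np.random.default_rng(seed)
        c_z = max(1, (c_a + c_b) // ratio)  # hypothetical bottleneck width
        # Shared layer: concatenated squeezed descriptors -> joint representation Z
        self.w = rng.normal(0.0, 0.1, (c_a + c_b, c_z))
        self.b = np.zeros(c_z)
        # Separate excitation layers: Z -> per-channel gates for each modality
        self.w_a = rng.normal(0.0, 0.1, (c_z, c_a))
        self.b_a = np.zeros(c_a)
        self.w_b = rng.normal(0.0, 0.1, (c_z, c_b))
        self.b_b = np.zeros(c_b)

    @staticmethod
    def squeeze(x):
        # Global average pooling over every axis except batch (first) and channels (last)
        return x.mean(axis=tuple(range(1, x.ndim - 1)))

    def __call__(self, a, b):
        s = np.concatenate([self.squeeze(a), self.squeeze(b)], axis=-1)  # (batch, c_a + c_b)
        z = np.maximum(s @ self.w + self.b, 0.0)  # ReLU (assumption, as in SE blocks)
        gate_a = sigmoid(z @ self.w_a + self.b_a)  # (batch, c_a), values in (0, 1)
        gate_b = sigmoid(z @ self.w_b + self.b_b)  # (batch, c_b), values in (0, 1)
        # Reshape each gate so it broadcasts over its own modality's spatial axes
        gate_a = gate_a.reshape((a.shape[0],) + (1,) * (a.ndim - 2) + (a.shape[-1],))
        gate_b = gate_b.reshape((b.shape[0],) + (1,) * (b.ndim - 2) + (b.shape[-1],))
        return a * gate_a, b * gate_b


# Usage: a video-like stream (batch, time, H, W, C) and a skeleton-like stream
# (batch, time, joints, C') with different spatial layouts and channel counts.
rng = np.random.default_rng(1)
video = rng.normal(size=(2, 8, 14, 14, 64))
skeleton = rng.normal(size=(2, 32, 25, 32))
mmtm = MMTMSketch(c_a=64, c_b=32)
v_out, s_out = mmtm(video, skeleton)
print(v_out.shape, s_out.shape)  # (2, 8, 14, 14, 64) (2, 32, 25, 32)
```

Each stream keeps its original shape. Its channels are rescaled by gates that depend on both modalities' pooled descriptors, so changing the skeleton input also changes the video output.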

- Integration Strategy:

The design is generic: MMTM can be inserted at any level of the network's feature hierarchy, and several modules can be placed at different levels. This flexibility allows the fusion points to be chosen experimentally for each application.
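The integration strategy can be sketched as a two-stream forward pass in which fusion blocks are attached after chosen stages while the unimodal stages stay unchanged. The function `two_stream_forward`, the `fusers` dictionary, and the toy stages are hypothetical. In a real network each entry of `fusers` would be an MMTM-style block sized for that level's channel counts.

```python
from typing import Any, Callable, Dict, Sequence, Tuple

Stage = Callable[[Any], Any]
Fuser = Callable[[Any, Any], Tuple[Any, Any]]


def two_stream_forward(
    x_a: Any,
    x_b: Any,
    stages_a: Sequence[Stage],
    stages_b: Sequence[Stage],
    fusers: Dict[int, Fuser],
) -> Tuple[Any, Any]:
    """Run two unimodal branches stage by stage (hypothetical helper).

    After stage i, if `fusers` has an entry for i, both intermediate
    outputs pass through that fusion block before the next stage.
    The unimodal stages themselves are not modified.
    """
    if len(stages_a) != len(stages_b):
        raise ValueError("this sketch assumes both branches have the same number of stages")
    for i, (stage_a, stage_b) in enumerate(zip(stages_a, stages_b)):
        x_a, x_b = stage_a(x_a), stage_b(x_b)
        if i in fusers:
            x_a, x_b = fusers[i](x_a, x_b)
    return x_a, x_b


# Toy usage: "stages" are plain functions on floats, and the fuser mixes the streams.
stages_a = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
stages_b = [lambda x: x * 10, lambda x: x + 5, lambda x: x / 5]
mix = lambda a, b: (a + 0.1 * b, b + 0.1 * a)

separate = two_stream_forward(1.0, 1.0, stages_a, stages_b, fusers={})
fused_mid = two_stream_forward(1.0, 1.0, stages_a, stages_b, fusers={1: mix})
```

With an empty `fusers` dictionary the branches run independently, which corresponds to the late-fusion setting in which outputs are combined only at the end. Adding keys inserts fusion at the corresponding depths, and several keys correspond to modules at several levels.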

Practical and Theoretical Implications

- Practical Impact:

MMTM improves baseline architectures without requiring a network redesign, and the unimodal branches can start from their pretrained weights. This makes it a readily applicable tool in real-world applications that rely on multi-sensor data.

- Theoretical Insights:

This work highlights the benefits of intermediate feature fusion strategies over traditional late fusion, especially in scenarios where spatial alignment of feature representations across modalities is non-trivial.

Future Directions

Potential future work includes:

- Extending MMTM to even more complex multimodal integration scenarios.

- Investigating the module's applicability in other domains such as autonomous driving and medical imaging, where multimodal data is common.

- Exploring automated architecture search techniques to discover optimal MMTM deployment strategies within deep neural networks.

In conclusion, this paper presents a general method for fusing multimodal data inside CNNs and shows that MMTM improves performance across multiple tasks. The module offers a practical building block for the design and implementation of multimodal neural architectures.