- The paper presents a neural model that simulates basic language acquisition using Hebbian plasticity and competitive (k-cap) firing dynamics.

- The model demonstrates successful word learning, syntactic role differentiation, and sentence generation by reinforcing neural assemblies.

- The study suggests that biologically realistic frameworks can help explain how language processing emerges from the activity of neural systems.

Simulated Language Acquisition in a Biologically Realistic Model of the Brain

Introduction

This paper presents a novel, biologically plausible neural model of language acquisition, built on a simplified framework grounded in established neuroscientific principles. The model uses excitatory neurons, random synaptic connectivity, Hebbian plasticity, and multiple interconnected brain areas to approximate how cognitive phenomena such as language emerge from neuronal activity. Through the dynamics of neural assemblies, and from a limited amount of grounded linguistic input, the model accomplishes basic language tasks: learning the meanings of words, distinguishing syntactic categories (verb vs. noun), learning word order, and generating new sentences.

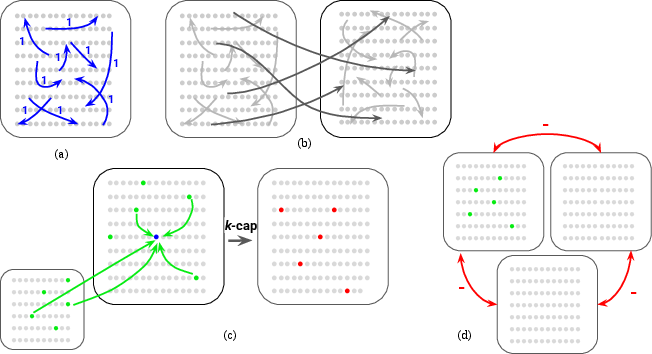

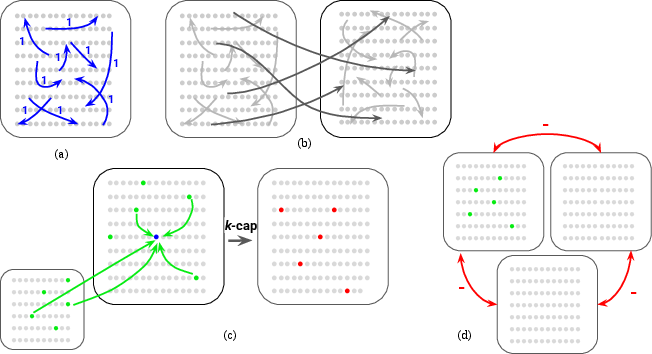

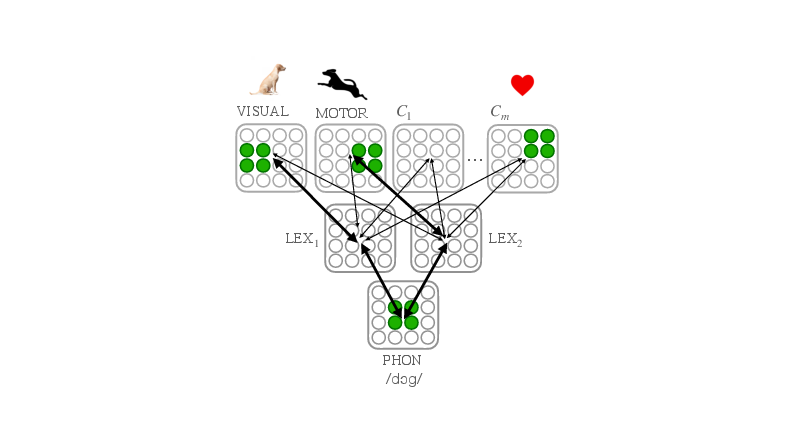

Figure 1: An overview of our biologically constrained neural model. The brain is modeled as a set of brain areas, each containing n neurons (typically a million) connected by a random synaptic graph.

The Neural Model and Language Acquisition

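The model consists of a fixed number of neuronal areas connected by random directed synaptic graphs, in which each possible synapse is present independently with some probability. Firing is governed by a competition mechanism, the k-cap: at each time step, only the k neurons in an area that receive the largest total synaptic input fire. Combined with Hebbian plasticity, which strengthens a synapse when its presynaptic neuron fires and its postsynaptic neuron fires at the next step, this competition causes neurons that are repeatedly co-activated to form assemblies. Once stabilized, these assemblies represent discrete cognitive symbols, and firing one assembly can trigger activity in the assemblies connected to it, mimicking cognitive processes.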
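The core dynamics (random connectivity, k-cap, Hebbian update) can be sketched in plain Python. This is a minimal sketch under our own assumptions, not the authors' implementation: a single area of n neurons receives input from a fixed stimulus of k neurons, synapses are drawn independently with probability p, Hebbian plasticity multiplies a used synapse's weight by (1 + beta), and the helper names `random_weights`, `k_cap`, and `project` as well as all parameter values are hypothetical.

```python
import random


def random_weights(n_pre, n_post, p, rng):
    """Dense weight matrix of a random directed graph: synapse i -> j exists
    (weight 1.0) independently with probability p, otherwise weight 0.0."""
    return [[1.0 if rng.random() < p else 0.0 for _ in range(n_post)]
            for _ in range(n_pre)]


def k_cap(inputs, k):
    """Indices of the k neurons with the largest total input (ties: lower index wins)."""
    ranked = sorted(range(len(inputs)), key=lambda j: (-inputs[j], j))
    return set(ranked[:k])


def project(stim_w, rec_w, k, beta, rounds):
    """Fire a fixed stimulus into one area for several rounds and return the caps.

    Each round sums the input from all stimulus neurons and from the area's
    previous winners, keeps the top k (the k-cap), and then applies Hebbian
    plasticity: each synapse from a neuron that fired in the previous step to a
    new winner is multiplied by (1 + beta). Missing synapses stay at 0.0.
    """
    n = len(rec_w)
    winners = set()
    history = []
    for _ in range(rounds):
        inputs = [0.0] * n
        for row in stim_w:                 # the stimulus fires every round
            for j in range(n):
                inputs[j] += row[j]
        for i in winners:                  # recurrent input from last round's cap
            for j in range(n):
                inputs[j] += rec_w[i][j]
        new_winners = k_cap(inputs, k)
        for row in stim_w:                 # Hebbian update, stimulus -> area
            for j in new_winners:
                row[j] *= 1 + beta
        for i in winners:                  # Hebbian update, within the area
            for j in new_winners:
                rec_w[i][j] *= 1 + beta
        winners = new_winners
        history.append(winners)
    return history


if __name__ == "__main__":
    rng = random.Random(0)
    n, k, p, beta = 300, 20, 0.1, 0.1      # hypothetical, far smaller than real areas
    stim_w = random_weights(k, n, p, rng)  # k stimulus neurons projecting into the area
    rec_w = random_weights(n, n, p, rng)   # recurrent synapses within the area
    caps = project(stim_w, rec_w, k, beta, rounds=15)
    for t in range(1, len(caps)):
        print(t, len(caps[t] & caps[t - 1]))  # overlap of consecutive caps
```

The printed overlap between consecutive caps indicates whether the winners are settling into a fixed set. With sufficiently strong plasticity one would expect it to approach k, the signature of a stabilized assembly, but the exact trajectory depends on the seed and the parameters.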

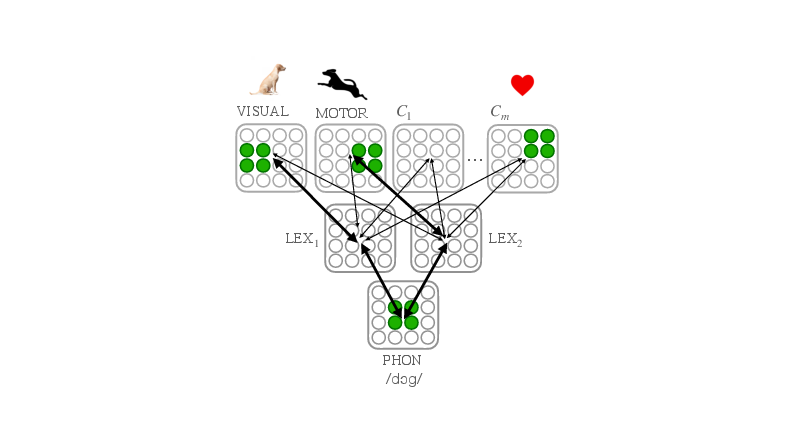

Figure 2: The system for learning concrete nouns and verbs. Noun assemblies form in Lex_1, which is densely connected to the Visual area, and verb assemblies form in Lex_2, which is densely connected to the Motor area.
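Why differential connectivity matters comes down to simple arithmetic: if k neurons of a source area fire and each synapse to a given target neuron exists with probability p, that target neuron receives k * p synaptic inputs on average. The sketch below uses a hypothetical table `P` of connection probabilities (illustrative values, not the paper's parameters) and hypothetical helpers `expected_input` and `favored_area` to show why a firing Visual assembly drives Lex_1 harder than Lex_2, and a Motor assembly the reverse.

```python
# Hypothetical connection probabilities between areas (illustrative values only).
P = {
    ("Visual", "Lex_1"): 0.10,  # dense: nouns are grounded in visual input
    ("Visual", "Lex_2"): 0.01,
    ("Motor", "Lex_1"): 0.01,
    ("Motor", "Lex_2"): 0.10,   # dense: verbs are grounded in motor input
}


def expected_input(source, target, k):
    """Mean number of synapses a target neuron receives from k firing source neurons."""
    return k * P[(source, target)]


def favored_area(source, k, lexical_areas=("Lex_1", "Lex_2")):
    """Lexical area whose neurons receive the most expected input from `source`."""
    return max(lexical_areas, key=lambda area: expected_input(source, area, k))


if __name__ == "__main__":
    k = 1000  # hypothetical assembly size
    for source in ("Visual", "Motor"):
        inputs = {area: expected_input(source, area, k) for area in ("Lex_1", "Lex_2")}
        print(source, inputs, favored_area(source, k))
```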

Word Learning Experiment

The language system comprises input areas initialized with fixed sensory representations, lexical areas (Lex_1 and Lex_2) in which nouns and verbs are learned, and semantic areas (such as Visual and Motor) that represent the sensory context. When exposed to sentences like "the dog runs", the system develops stable assemblies in the lexical areas, separates verbs from nouns through the interaction between lexical and semantic areas, and establishes robust mappings from sensory representations to lexical assemblies. After training, the system distinguishes word categories by the stability of firing: a word is recognized as a noun or a verb according to the lexical area in which it produces a stable assembly.
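One way to read out this category distinction is to fire a word's input repeatedly and compare how stable the resulting caps are in Lex_1 and in Lex_2. The functions `stability` and `classify_word`, the 0.9 threshold, and the representation of each cap as a Python set of neuron indices are assumptions made for illustration; this is a hypothetical readout, not necessarily the exact criterion used in the paper.

```python
def stability(caps):
    """Fraction of the latest cap shared with the previous cap (1.0 = fully stable)."""
    if len(caps) < 2 or not caps[-1]:
        return 0.0
    return len(caps[-1] & caps[-2]) / len(caps[-1])


def classify_word(lex1_caps, lex2_caps, threshold=0.9):
    """Hypothetical readout: noun if firing in Lex_1 is stable, verb if Lex_2 is."""
    s1, s2 = stability(lex1_caps), stability(lex2_caps)
    if s1 >= threshold and s1 >= s2:
        return "noun"
    if s2 >= threshold:
        return "verb"
    return "unclassified"


if __name__ == "__main__":
    stable = [{1, 2, 3, 4}, {1, 2, 3, 4}]    # same neurons fire twice in a row
    drifting = [{1, 2, 3, 4}, {5, 6, 7, 8}]  # no overlap between consecutive caps
    print(classify_word(stable, drifting))    # noun
    print(classify_word(drifting, stable))    # verb
    print(classify_word(drifting, drifting))  # unclassified
```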

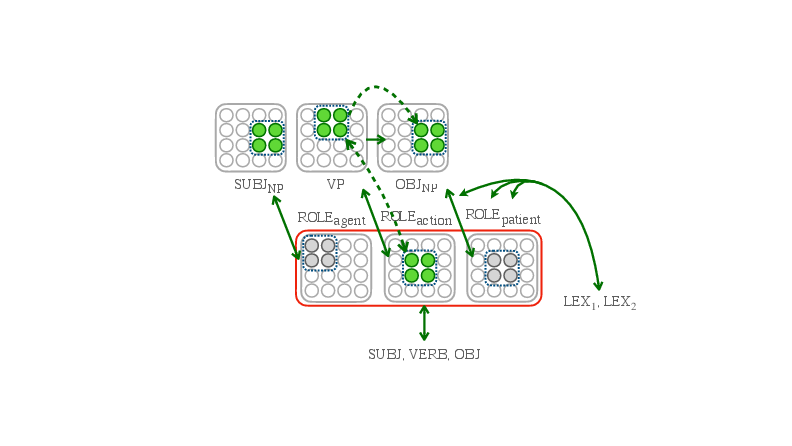

Learning Syntax and Generation

For syntax, the model learns the word order of a language (SVO, SOV, or another order) from exposure to sentences in that language. It adds role areas that represent thematic roles (agent, action, patient). As Hebbian learning strengthens the synapses linking semantic representations to these role areas, the model learns to respect the correct constituent order and to generate sentences in that order. The same mechanism extends to different linguistic moods, which are represented by dedicated area states, giving the model additional linguistic flexibility.
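Word-order learning can be illustrated with a deliberately simplified, symbolic stand-in for the role areas: each thematic role is a single node, the connection between two roles is a single number, and every observed transition multiplies that number by 1 + beta, loosely mimicking Hebbian strengthening. Generation then follows the strongest outgoing link. The names `train_order` and `generate_order`, the start and end markers, and `beta` are hypothetical; the paper's model operates on neural assemblies, not on a lookup table like this one.

```python
ROLES = ("agent", "action", "patient")
START, END = "<start>", "<end>"


def train_order(sentences, beta=0.5):
    """Multiply the weight of every observed role-to-role transition by (1 + beta)."""
    sources = (START,) + ROLES
    targets = ROLES + (END,)
    weights = {(a, b): 1.0 for a in sources for b in targets if a != b}
    for roles in sentences:
        sequence = [START, *roles, END]
        for a, b in zip(sequence, sequence[1:]):
            weights[(a, b)] *= 1 + beta
    return weights


def generate_order(weights):
    """Starting from START, repeatedly follow the strongest link to an unused role."""
    order, current = [], START
    while len(order) < len(ROLES):
        remaining = [r for r in ROLES if r not in order]
        current = max(remaining, key=lambda r: weights[(current, r)])
        order.append(current)
    return order


if __name__ == "__main__":
    sov = [("agent", "patient", "action")] * 5
    print(generate_order(train_order(sov)))  # ['agent', 'patient', 'action']
    mostly_svo = [("agent", "action", "patient")] * 8 + [("agent", "patient", "action")] * 2
    print(generate_order(train_order(mostly_svo)))  # ['agent', 'action', 'patient']
```

Because repeated transitions compound multiplicatively, the order seen most often dominates: trained mostly on SVO sentences with a few SOV ones, the sketch still generates agent, action, patient.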

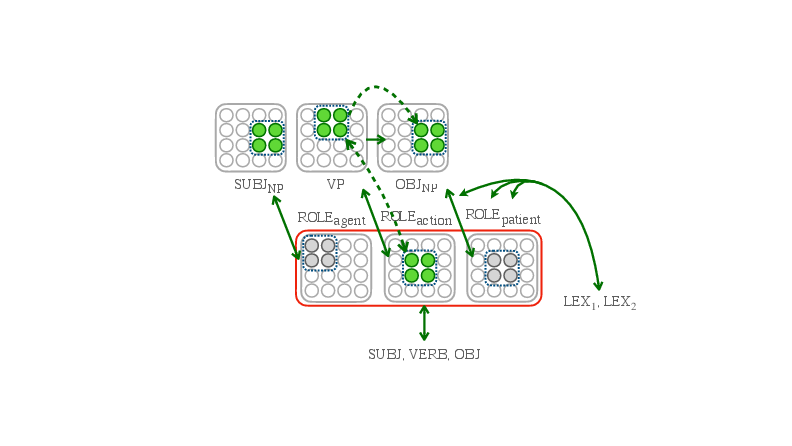

Figure 3: Additional areas that support hierarchical parsing and generation, enabling the construction and detection of nested syntactic structures.

Hierarchical Linguistic Features

Language is hierarchical, not merely sequential: sentences have an internal tree structure and can be recursively embedded within one another. The model addresses this by linking areas analogous to Broca's and Wernicke's areas through additional syntactic areas (Subj, VP, Sent). With these areas, the system performs basic parsing and sentence generation through recursive activation and can represent sentences embedded in other sentences, although more complex embeddings would require further refinement.
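The recursive structure handled by the Subj, VP, and Sent areas can be illustrated symbolically. In this sketch, which is an analogy under stated assumptions rather than the neural mechanism, each node is labeled with the area that would represent it, and generation activates a node's children left to right, recursing into any nested node, so a Sent inside a VP yields an embedded clause in place. The `Node` class and the `generate` and `depth` helpers are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class Node:
    area: str  # hypothetical label: "Sent", "Subj", or "VP"
    children: List[Union["Node", str]] = field(default_factory=list)


def generate(node: Node) -> List[str]:
    """Emit words by activating children left to right, recursing into nested nodes."""
    words: List[str] = []
    for child in node.children:
        if isinstance(child, Node):
            words.extend(generate(child))
        else:
            words.append(child)
    return words


def depth(node: Node) -> int:
    """Number of Sent nodes on the deepest path (1 means no embedded clause)."""
    own = 1 if node.area == "Sent" else 0
    return own + max((depth(c) for c in node.children if isinstance(c, Node)), default=0)


# "the dog knows the cat runs": a Sent embedded as the complement inside the VP.
embedded = Node("Sent", [Node("Subj", ["the", "cat"]), Node("VP", ["runs"])])
sentence = Node("Sent", [Node("Subj", ["the", "dog"]), Node("VP", ["knows", embedded])])

if __name__ == "__main__":
    print(" ".join(generate(sentence)))  # the dog knows the cat runs
    print(depth(sentence))               # 2
```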

Conclusion

This biologically inspired model offers a theoretical account of how neural operations can give rise to elementary language acquisition. Covering word learning, syntax learning, and hierarchical structure, it suggests points of convergence between adaptable neural assemblies and human cognitive faculties. Future work includes learning abstract words, modeling the social aspects of language use, and relating the model to advanced AI models, with the aim of extending the current boundaries of biologically plausible cognition.