This paper explores how well large language models (LLMs) align with the human language network in the brain. Comparing how these models process language with how the brain does shows where the two are similar and where they differ, which can teach us more about both artificial and human intelligence.

Background and Motivation

The human brain has a specific network, called the language network (LN), that is responsible for language processing. Scientists have noticed that LLMs, which are trained on huge amounts of text to predict the next word in a sequence, show similarities to the brain's language network. The paper investigates what drives this alignment and how it changes over the course of training.

Key Questions

The paper addresses four main questions:

- What makes untrained LLMs align with the brain even before they start learning?

- Is this brain alignment more related to understanding the rules of language (formal linguistic competence) or using language to interact with the world (functional linguistic competence)?

- Does the size of the model or its ability to predict the next word better explain brain alignment?

- Can current LLMs fully capture how the human brain processes language?

Methods

To answer these questions, the researchers used a "brain-scoring framework" to compare LLMs with human brain activity. Here's how they did it:

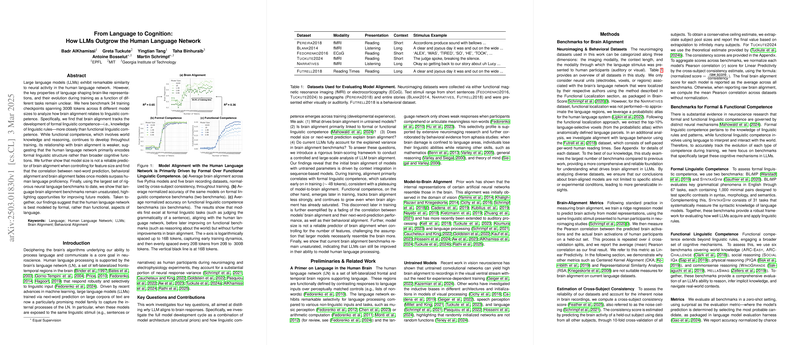

- Brain Activity Data: They used several datasets of brain activity recorded while people were reading or listening to language. These datasets measured brain activity using methods like functional magnetic resonance imaging (fMRI) and electrocorticography (ECoG).

- LLMs: They used the Pythia model suite, a set of eight LLMs ranging in size from 14 million to 6.9 billion parameters. These models were trained on about 300 billion tokens of text, and the researchers analyzed them at various stages of training.

- Measuring Brain Alignment: To see how well the models aligned with the brain, they used a technique called linear predictivity, in which a linear mapping is fit from the model's representations of language to the recorded human brain activity. The more accurately the mapping predicts brain activity, the higher the brain alignment score.

- Evaluating Linguistic Competence: The researchers tested the models on benchmarks that measure both formal and functional linguistic competence. Formal competence was assessed using tasks that test knowledge of grammar and syntax. Functional competence was assessed using tasks that test world knowledge, reasoning, and common sense.
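
The linear predictivity step above can be sketched in a few lines. This is a minimal illustration, not the paper's pipeline: it assumes ridge regression as the linear map, Pearson correlation on held-out stimuli as the score, and cross-validation over contiguous blocks. The function names, the `alpha` value, and the fold count are all hypothetical choices made for the example.

```python
import numpy as np

def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y)

def pearson_per_column(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    denom = np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    return (A * B).sum(axis=0) / denom

def linear_predictivity(features, brain, n_folds=5, alpha=1.0):
    """Mean held-out Pearson r, averaged over recording sites and folds.

    features: (n_stimuli, n_features) model representations (assumed input).
    brain:    (n_stimuli, n_sites) brain responses (assumed input).
    """
    features = np.asarray(features, dtype=float)
    brain = np.asarray(brain, dtype=float)
    n = features.shape[0]
    scores = []
    # Each test fold is one contiguous block of stimuli.
    for test_idx in np.array_split(np.arange(n), n_folds):
        train = np.ones(n, dtype=bool)
        train[test_idx] = False
        # Centre with training statistics only, so no test data leaks in.
        mu_x = features[train].mean(axis=0)
        mu_y = brain[train].mean(axis=0)
        W = ridge_fit(features[train] - mu_x, brain[train] - mu_y, alpha)
        pred = (features[test_idx] - mu_x) @ W + mu_y
        scores.append(pearson_per_column(pred, brain[test_idx]).mean())
    return float(np.mean(scores))
```

Calling `linear_predictivity(features, brain)` returns a single number: values near 1 mean the model's representations linearly predict the recorded responses well, and values near 0 mean they carry little linearly decodable information about them.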

Key Findings

Here's what the researchers found:

- Untrained Models and Context: Even before training, models that could integrate context (like Transformers, LSTMs, and GRUs) showed better brain alignment. This suggests that the ability to process sequences of words is important for aligning with the brain's language network.

- Formal vs. Functional Competence: Brain alignment was more closely linked to formal linguistic competence than to functional linguistic competence. As the models trained, their grasp of language rules improved first, and brain alignment rose along with it. Functional competence improved later, but this didn't increase brain alignment as much.

- Model Size Isn't Everything: The size of the model wasn't a reliable predictor of brain alignment. What mattered more was the model's architecture and how it was trained.

- Superhuman Models Diverge: As models became better than humans at predicting the next word, their alignment with human reading behavior decreased. This suggests that these models might be using different strategies than humans to process language.

- Benchmarks Aren't Saturated: Current LLMs don't yet account for all of the explainable variance in brain alignment benchmarks, meaning there's still room for improvement.

Rigorous Brain-Scoring

The researchers emphasized the importance of evaluating brain alignment carefully. They argued that a good brain-aligned model should:

- Respond to Meaningful Stimuli: Alignment should be higher for real language than for random sequences of words.

- Generalize to New Contexts: Alignment should carry over to language in new contexts rather than depend on memorized examples.

To ensure these criteria were met, they used linear predictivity as their main metric and carefully designed their experiments to test generalization.
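
One common way to test generalization is to hold out unbroken blocks of stimuli, so that test items are never interleaved with the training items around them. The summary above doesn't say exactly how the splits were built, so the sketch below is an assumption about one reasonable design, and `contiguous_folds` is a hypothetical helper name.

```python
import numpy as np

def contiguous_folds(n_samples, n_folds):
    """Yield (train_idx, test_idx) pairs where each test fold is one unbroken block."""
    blocks = np.array_split(np.arange(n_samples), n_folds)
    for i, test_idx in enumerate(blocks):
        # Training indices are every block except the held-out one.
        train_idx = np.concatenate([b for j, b in enumerate(blocks) if j != i])
        yield train_idx, test_idx
```

Compared with a shuffled split, this keeps neighbouring sentences from the same passage on the same side of the train/test boundary, which makes it harder for a mapping to score well by exploiting similarities between adjacent stimuli.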

Digging Deeper into Untrained Models

The paper found that even untrained models showed some degree of brain alignment. To understand why, they looked at different model architectures and found that:

- Sequence-Based Models Matter: Models that process sequences of words (like Transformers) aligned better than models that only look at the last word.

- Positional Encoding Helps: How the model encodes the position of words in a sentence also affects brain alignment.

- Weight Initialization Matters: The way the model's initial weights are set can influence brain alignment, even before training.

How Alignment Changes with Training

The researchers tracked how brain alignment changed as the models were trained. They found that:

- Alignment Peaks Early: Brain alignment increased rapidly in the early stages of training (around 2-8 billion tokens) and then plateaued.

- Formal Competence Drives Alignment: The increase in brain alignment closely followed the development of formal linguistic competence.

The Downside of Being Superhuman

The paper also found that as models become "superhuman" in their language abilities, they start to diverge from human language processing. Specifically:

- Behavioral Alignment Declines: The models' alignment with human reading times decreased as they got better at predicting the next word.
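
To make "alignment with reading times" concrete, here is a simplified proxy that is common in psycholinguistics: correlate each word's surprisal under the model with how long people took to read it. This is not necessarily how the paper computed behavioral alignment. The function names and inputs (`next_word_probs`, `reading_times_ms`) are hypothetical, and the probabilities are assumed to come from whatever model is being evaluated.

```python
import numpy as np

def surprisal_bits(next_word_probs):
    """Surprisal of each word in bits: -log2 p(word | context)."""
    p = np.asarray(next_word_probs, dtype=float)
    return -np.log2(p)

def behavioral_alignment(next_word_probs, reading_times_ms):
    """Pearson correlation between per-word surprisal and human reading times."""
    s = surprisal_bits(next_word_probs)
    rt = np.asarray(reading_times_ms, dtype=float)
    return float(np.corrcoef(s, rt)[0, 1])
```

Under this proxy, a model that predicts the next word far better than people do assigns low surprisal to words that humans still find slow to read, which is one way the correlation could drop as prediction improves.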

Model Size vs. Architecture

The researchers challenged the common belief that bigger models are always better. They found that:

- Size Doesn't Guarantee Alignment: When controlling for the number of features, larger models didn't necessarily show better brain alignment. This suggests that the architecture of the model and how it's trained are more important than just increasing its size.
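
Larger models have wider hidden layers, so a linear map fit on their representations has more features to work with, and that alone can raise the score. One way to control for this is to reduce every model's representations to the same width before fitting. The sketch below uses a Gaussian random projection as an assumed stand-in, since the summary doesn't describe the paper's exact procedure. `project_to_fixed_width` is a hypothetical helper.

```python
import numpy as np

def project_to_fixed_width(features, n_components, seed=0):
    """Map (n_stimuli, n_features) to (n_stimuli, n_components) with a random projection."""
    features = np.asarray(features, dtype=float)
    rng = np.random.default_rng(seed)
    # Scaling by 1/sqrt(n_components) roughly preserves vector norms.
    proj = rng.standard_normal((features.shape[1], n_components)) / np.sqrt(n_components)
    return features @ proj
```

With this in place, a model with 128-dimensional representations and one with 4096-dimensional representations both feed the same number of features into the regression, so any remaining difference in alignment can't be attributed to feature count.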

Conclusion

The paper concludes that brain alignment in LLMs is primarily driven by formal linguistic competence and that model size alone is not a reliable predictor of it. The authors propose that the human language network might be more focused on syntax and structure, while other cognitive functions rely on different brain regions. They also highlight that current LLMs can still be improved in their ability to model human language processing. Future work could explore how LLMs align with other brain regions involved in reasoning and world knowledge, and whether the way LLMs learn language mirrors how humans do it.