- The paper presents a systematic framework for assessing claims and evidence in safety cases for Automated Driving Systems.

- It outlines detailed scoring criteria and evaluation processes that ensure consistency and transparency in both internal and external assessments.

- The methodology supports continual improvements and fosters a resilient safety culture critical for the responsible deployment of ADS.

Evaluation of Safety Case Credibility in Automated Driving Systems

Introduction

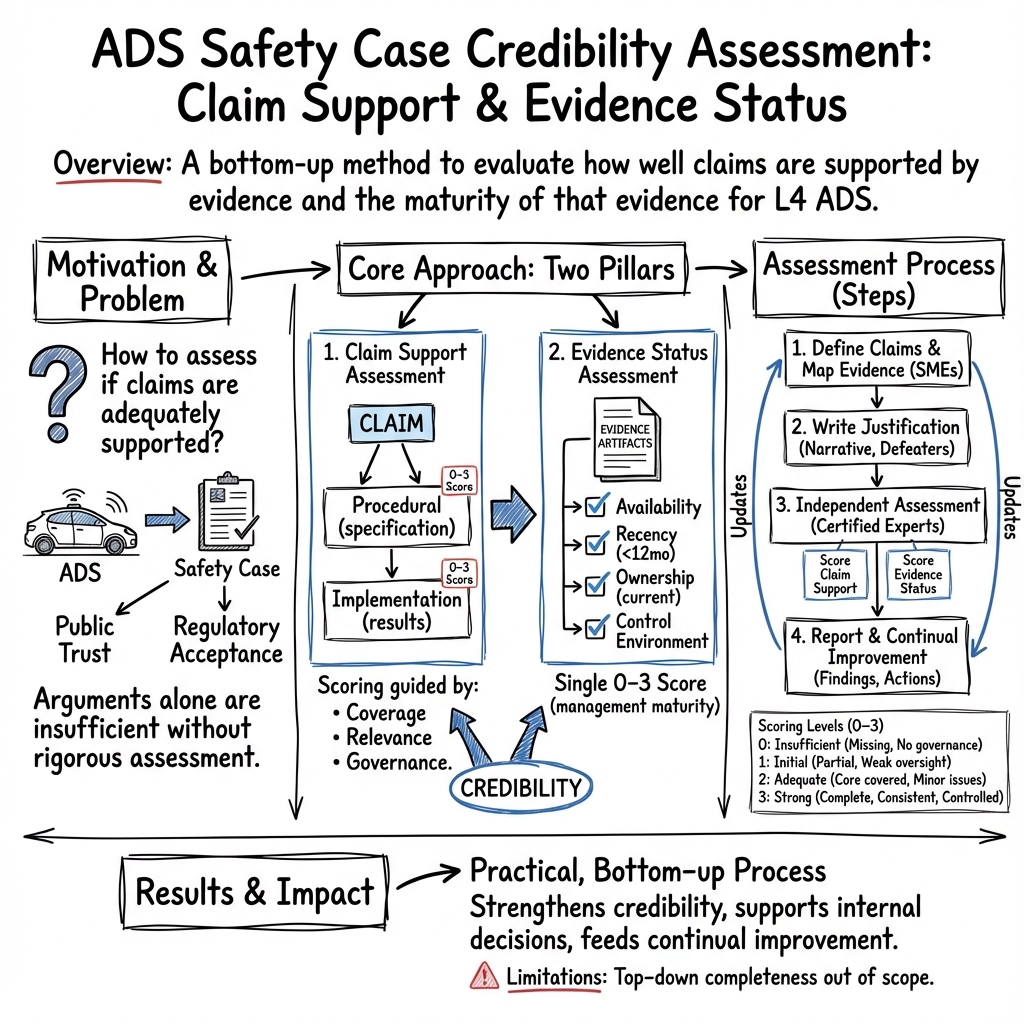

The paper "Assessing a Safety Case: Bottom-up Guidance for Claims and Evidence Evaluation" (2506.09929) presents a systematic framework for evaluating the credibility of safety cases in Automated Driving Systems (ADS). As ADS technology progresses towards Level 4 capabilities, demonstrating safety becomes paramount and calls for robust assurance frameworks. The work focuses on assessing the claims and supporting evidence within a safety case, that is, the arguments and artifacts used to demonstrate that a system is safe. It contributes to the discourse on safety assurance by turning guidelines for evaluating the technical support of claims and the validity of evidence into an operational procedure, with the aim of facilitating the responsible integration of ADS into society.

Framework for Evaluating Claims and Evidence

Safety cases are defined as structured arguments, supported by evidence, that aim to demonstrate a system's safety for its intended application and environment. The paper distinguishes two aspects of support to assess for each claim: procedural support, which concerns whether the relevant processes are formalized, and implementation support, which concerns whether those processes are actually applied. Evidence is categorized accordingly into procedural documentation and implementation artifacts, both of which are needed to substantiate the claims in a safety case.

For each claim, assessors are given detailed scoring criteria, ranging from insufficient to strong support, to rate the credibility of its procedural and implementation support. The paper proposes these guidelines as a way to keep safety case evaluations consistent, and it distinguishes internal assessments conducted by ADS developers from external assessments performed by regulatory bodies. Because claims and evidence are assessed independently of the people who produced them, the process relies on the neutrality and objectivity of the assessor.
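To make the two scoring dimensions concrete, here is a minimal sketch of how one assessed claim could be recorded. It is an illustration only: the names `Support` and `ClaimAssessment`, the claim identifier, and the two intermediate levels are assumptions, since the text above names only the endpoints of the scale (insufficient and strong).

```python
from dataclasses import dataclass
from enum import IntEnum


class Support(IntEnum):
    """Ordinal support levels. Only the two endpoints come from the text."""
    INSUFFICIENT = 0
    PARTIAL = 1       # hypothetical intermediate level
    ADEQUATE = 2      # hypothetical intermediate level
    STRONG = 3


@dataclass(frozen=True)
class ClaimAssessment:
    claim_id: str
    procedural: Support       # are the relevant processes formalized?
    implementation: Support   # are those processes actually applied?
    assessor: str             # "internal" (developer) or "external" (regulator)

    def weakest(self) -> Support:
        """Return the lower of the two scores as a conservative summary."""
        return min(self.procedural, self.implementation)


# Hypothetical example: a well-documented process that is only partly applied.
assessment = ClaimAssessment(
    claim_id="C1.2",
    procedural=Support.STRONG,
    implementation=Support.PARTIAL,
    assessor="internal",
)
print(assessment.weakest().name)
```

Keeping the two scores separate, rather than storing one combined number, preserves the distinction between a process that exists on paper and one that is demonstrably followed.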

Anatomy of a Safety Case

The paper explains the composition of a safety case, which includes claims, supporting evidence, and optional format-dependent entries. Claims, formulated as falsifiable statements, are decomposed hierarchically into sub-claims. This decomposition facilitates systematic evaluation and aggregation of results, helping developers and regulators assess the robustness of the safety determination. Evidence is attached to claims, providing artifacts that substantiate their validity through procedural documentation and implementation results.
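The hierarchical decomposition described above maps naturally onto a tree. The following sketch assumes a hypothetical `Claim` class with integer scores on atomic claims and a weakest-link rule (the minimum over sub-claims) for aggregation. That rule is an assumption chosen for illustration, not necessarily the aggregation the paper uses, and the example claims and evidence names are invented.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Claim:
    statement: str
    score: Optional[int] = None                  # set on atomic (leaf) claims only
    evidence: List[str] = field(default_factory=list)
    sub_claims: List["Claim"] = field(default_factory=list)

    def aggregate(self) -> int:
        """Roll scores up the tree: a compound claim is as strong as its weakest sub-claim."""
        if not self.sub_claims:
            if self.score is None:
                raise ValueError(f"unscored atomic claim: {self.statement!r}")
            return self.score
        return min(child.aggregate() for child in self.sub_claims)


# Hypothetical safety case fragment with scores on a 0-3 scale.
root = Claim(
    "The ADS is acceptably safe within its intended deployment",
    sub_claims=[
        Claim("Hazards are identified", score=3, evidence=["hazard-analysis-procedure"]),
        Claim(
            "Hazards are mitigated",
            sub_claims=[
                Claim("Mitigations are specified", score=2, evidence=["requirements-spec"]),
                Claim("Mitigations are verified", score=1, evidence=["test-report"]),
            ],
        ),
    ],
)
print(root.aggregate())  # prints 1
```

A weakest-link rule is deliberately pessimistic: one poorly supported leaf caps the score of every claim above it, which points reviewers at the branch that most needs work.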

The argument within a safety case is constructed through atomic and compound claims, supported by evidence. The paper emphasizes the need for clarity, contextualization, and logical soundness in formulating claims, ensuring that the overall argument is compelling, comprehensible, and valid. Optional elements, such as counter-arguments, limitations, and justification narratives, strengthen the argument, demonstrating due diligence and addressing potential weaknesses.

Assessment Process and Scoring

The assessment process for a safety case includes stages for creating claims, collecting evidence, independent evaluation, and continual improvement. Each stage involves distinct responsibilities and roles for stakeholders, ensuring effective management and governance. The scoring strategies for claims and evidence are developed from maturity assessment models, with disambiguation criteria based on coverage, relevance, and governance. These scores enable assessors to evaluate the procedural and implementation support of claims, guiding internal decision-making on continual improvement efforts.
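As a rough illustration of how the three disambiguation criteria could separate adjacent score levels, the sketch below counts how many criteria a piece of evidence meets and maps the count to a level. This is not the paper's rubric: the `EvidenceReview` type, the one-line readings of each criterion in the comments, the counting rule, and the two middle labels are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EvidenceReview:
    coverage: bool     # assumed reading: evidence spans the full scope of the claim
    relevance: bool    # assumed reading: evidence bears directly on what is claimed
    governance: bool   # assumed reading: evidence is owned, versioned, and kept current


# Only "insufficient" and "strong" appear in the text; the middle labels are hypothetical.
LEVELS = ["insufficient", "weak", "moderate", "strong"]


def support_level(review: EvidenceReview) -> str:
    """Map the number of criteria met (0 to 3) to a support level."""
    met = sum([review.coverage, review.relevance, review.governance])
    return LEVELS[met]


print(support_level(EvidenceReview(coverage=True, relevance=True, governance=False)))
```

A real rubric would likely weight the criteria differently or treat some as prerequisites; the point here is only that explicit criteria make the choice between two neighboring levels repeatable across assessors.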

Reporting of assessment results is crucial for tracking trends and prioritizing safety performance improvements. The paper suggests visualization techniques for aggregating scores, providing stakeholders with intuitive summaries for strategic guidance.
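One simple way to aggregate scores for reporting is a per-branch histogram with the minimum score called out. The sketch below prints a text version of such a summary. The function name `summarize`, the 0-3 scale, and the branch names are assumptions for illustration, and each branch is assumed to have at least one score.

```python
from collections import Counter
from typing import Dict, List


def summarize(scores_by_branch: Dict[str, List[int]], max_score: int = 3) -> List[str]:
    """Return one text line per branch: a histogram of scores plus the minimum."""
    lines = []
    for branch, scores in scores_by_branch.items():
        counts = Counter(scores)
        # One bucket per score level; each '#' is one claim at that level.
        bar = " ".join(f"{level}:{'#' * counts[level]}" for level in range(max_score + 1))
        lines.append(f"{branch:<12} {bar}  (min={min(scores)})")
    return lines


# Hypothetical scores for the claims under three top-level branches.
report = summarize({
    "Perception": [3, 3, 1],
    "Planning": [2, 2, 2, 3],
    "Operations": [0, 1],
})
for line in report:
    print(line)
```

Comparing such summaries across successive assessments gives the trend view the text describes, with the lowest-scoring branches as natural priorities for improvement.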

Timing and Continual Improvement

Timing considerations for creating and assessing safety cases hinge on the maturity of the ADS technology and supporting methodologies. The paper argues that safety cases should be initiated when internal practices are sufficiently stable to support a credible argument. Frequent updates and reassessments ensure the safety case remains valid, aligning with continual improvements in technology and processes.

Event-based and time-based triggers for updating safety cases are explored, highlighting the importance of collaboration between safety, engineering, and product teams. The paper emphasizes the need for internal alignment on priority improvements to maintain coherence between engineering practices and safety cases.
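The two kinds of trigger can be combined in a single check. The sketch below is a minimal example under stated assumptions: the event names in `REASSESSMENT_EVENTS` and the 180-day default interval are hypothetical placeholders, and a real program would define its own list and cadence.

```python
from datetime import date, timedelta
from typing import Iterable, List

# Hypothetical event names; not taken from the paper.
REASSESSMENT_EVENTS = {"software_release", "odd_expansion", "safety_incident"}


def reassessment_reasons(
    last_assessed: date,
    today: date,
    events: Iterable[str],
    max_age: timedelta = timedelta(days=180),   # assumed review interval
) -> List[str]:
    """Return every reason the safety case should be reassessed (empty list if none)."""
    reasons = []
    # Time-based trigger: the periodic review interval has elapsed.
    if today - last_assessed >= max_age:
        reasons.append("time: review interval elapsed")
    # Event-based triggers: only events on the agreed list count.
    for event in events:
        if event in REASSESSMENT_EVENTS:
            reasons.append(f"event: {event}")
    return reasons


print(reassessment_reasons(date(2025, 1, 1), date(2025, 3, 1), ["odd_expansion", "ui_tweak"]))
```

Returning the list of reasons, rather than a single boolean, records why a reassessment was opened, which helps safety, engineering, and product teams agree on its scope.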

Conclusion

The paper presents a systematic approach to assessing the credibility of safety cases for ADS, addressing gaps in current auditing practice. It offers bottom-up guidance on evaluating claims and evidence, contributing to a comprehensive Case Credibility Assessment (CCA) framework. The guidance is relevant to ADS developers and regulators who need safety assurance to remain transparent, rigorous, and credible as ADS technology evolves. Its emphasis on consistency and continual improvement supports a resilient safety culture within the broader Safety Management System (SMS) and underscores the role of safety cases in the responsible deployment of ADS technologies.