- The paper presents a systematic review of LLM psychometrics, a field that integrates psychological constructs into AI evaluation.

- It details diverse methodologies, including structured tests built on established inventories, custom-curated and AI-synthesized test items, and prompting strategies such as role-play.

- The study addresses validation challenges and reviews enhancement strategies, such as trait manipulation, that use psychometric insights to improve LLM alignment, safety, and cognitive capabilities.

LLM Psychometrics: A Systematic Review of Evaluation, Validation, and Enhancement

Introduction to LLM Psychometrics

The paper "LLM Psychometrics: A Systematic Review of Evaluation, Validation, and Enhancement" examines the intersection of large language models (LLMs) and psychometrics, arguing that LLMs should be evaluated beyond traditional benchmarks. It terms this domain LLM Psychometrics: the use of psychometric tools to evaluate, understand, and improve LLMs, with a focus on human-like psychological constructs such as personality and cognitive abilities.

Evaluation Framework

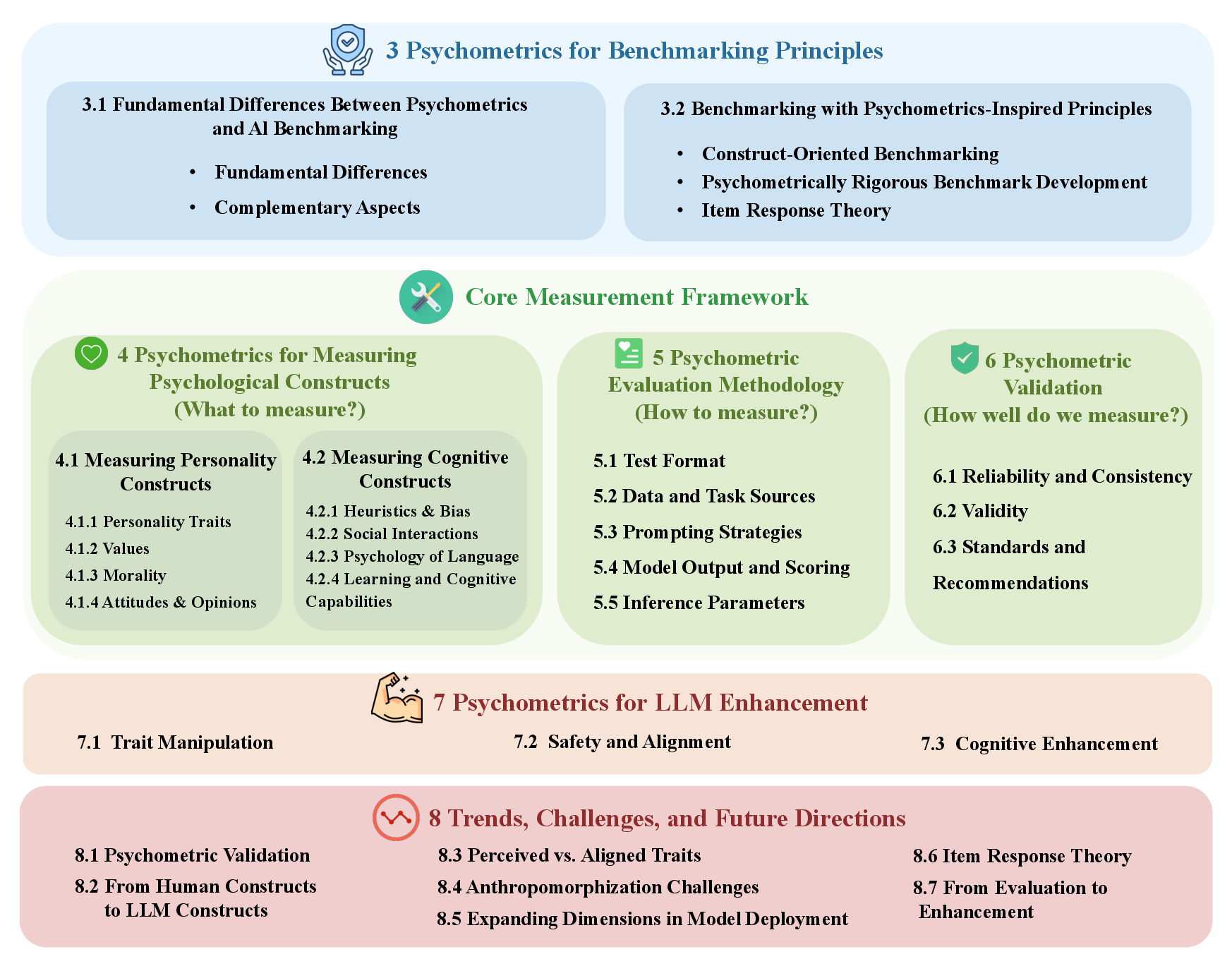

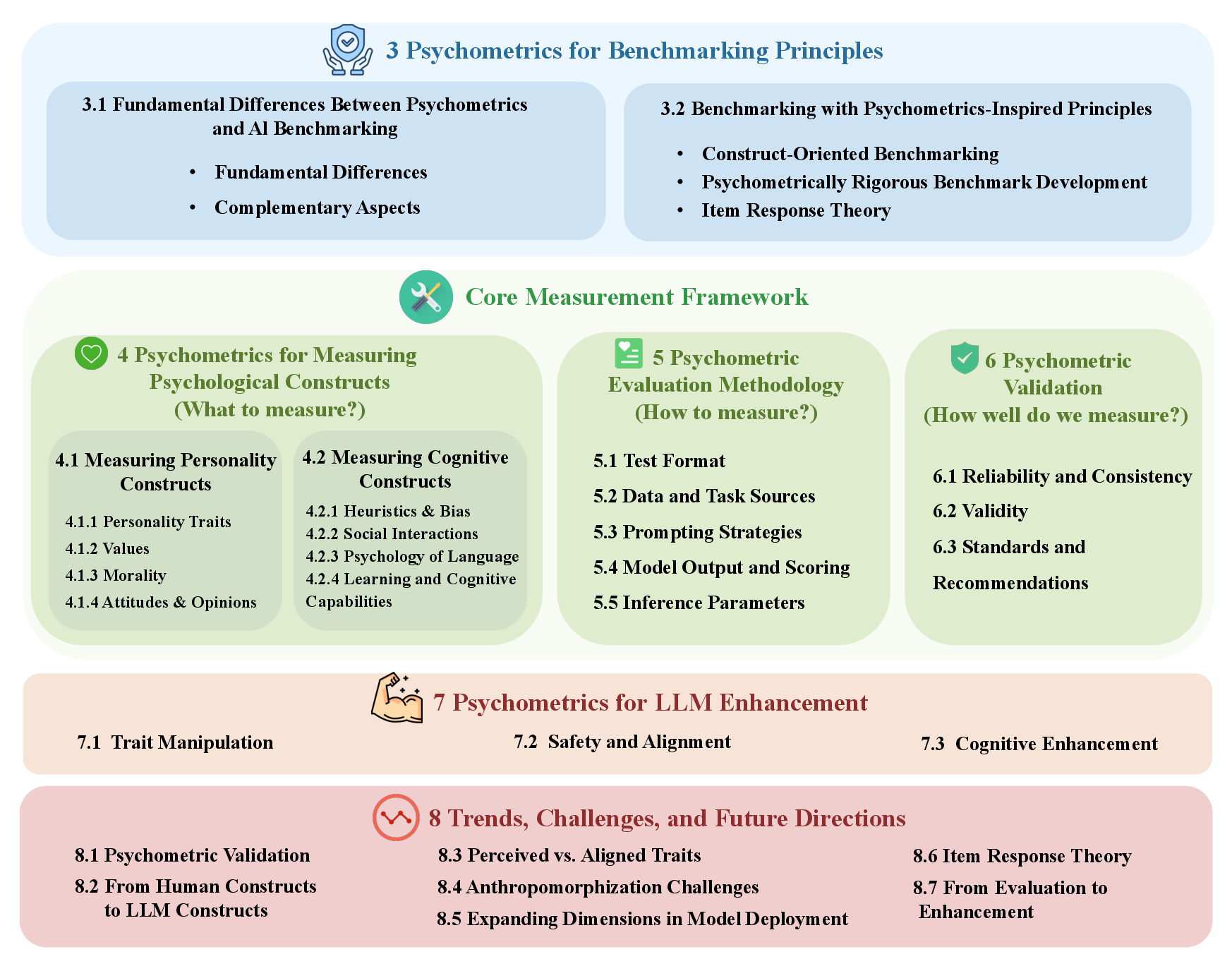

The review structures LLM psychometric evaluation around three key dimensions: the target construct, the measurement method, and the validation of results. Much of the field's effort goes into broadening psychometric testing so that AI evaluations capture complex psychological constructs. The paper surveys the available evaluation methodologies, covering structured and unstructured test formats, data sources, and scoring methods for measuring psychological constructs.

Figure 1: Overview of this review.
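To make the framework more concrete, the following Python sketch records one evaluation along the three dimensions and implements a simple scoring method for a structured, Likert-style test format. The `EvaluationSpec` class, the `score_likert_response` function, and the five-point label set are hypothetical illustrations rather than definitions taken from the review; real inventories specify their own response anchors.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvaluationSpec:
    """One evaluation described along the review's three dimensions (hypothetical)."""
    target_construct: str    # what is measured, e.g. "extraversion"
    measurement_method: str  # how it is measured, e.g. "Likert inventory"
    validation: str          # how results are checked, e.g. "test-retest"


# Hypothetical five-point anchors; real inventories define their own.
LIKERT_LABELS = {
    "neither agree nor disagree": 3,
    "strongly disagree": 1,
    "strongly agree": 5,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
}


def score_likert_response(text: str) -> Optional[int]:
    """Map a model reply to a 1-5 score, or None if it cannot be parsed."""
    cleaned = text.strip().lower()
    # Prefer the first standalone digit in range, e.g. "4" or "Answer: 4".
    match = re.search(r"\b[1-5]\b", cleaned)
    if match:
        return int(match.group())
    # Check longer labels first so "strongly agree" is not read as "agree".
    for label in sorted(LIKERT_LABELS, key=len, reverse=True):
        if label in cleaned:
            return LIKERT_LABELS[label]
    return None


spec = EvaluationSpec("extraversion", "structured Likert inventory", "test-retest")
print(spec.target_construct, score_likert_response("I strongly agree."))  # extraversion 5
```

Returning `None` instead of guessing keeps refusals and off-format replies visible, so they can be counted as missing responses rather than silently distorting scores.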

Methods for Measuring Psychological Constructs

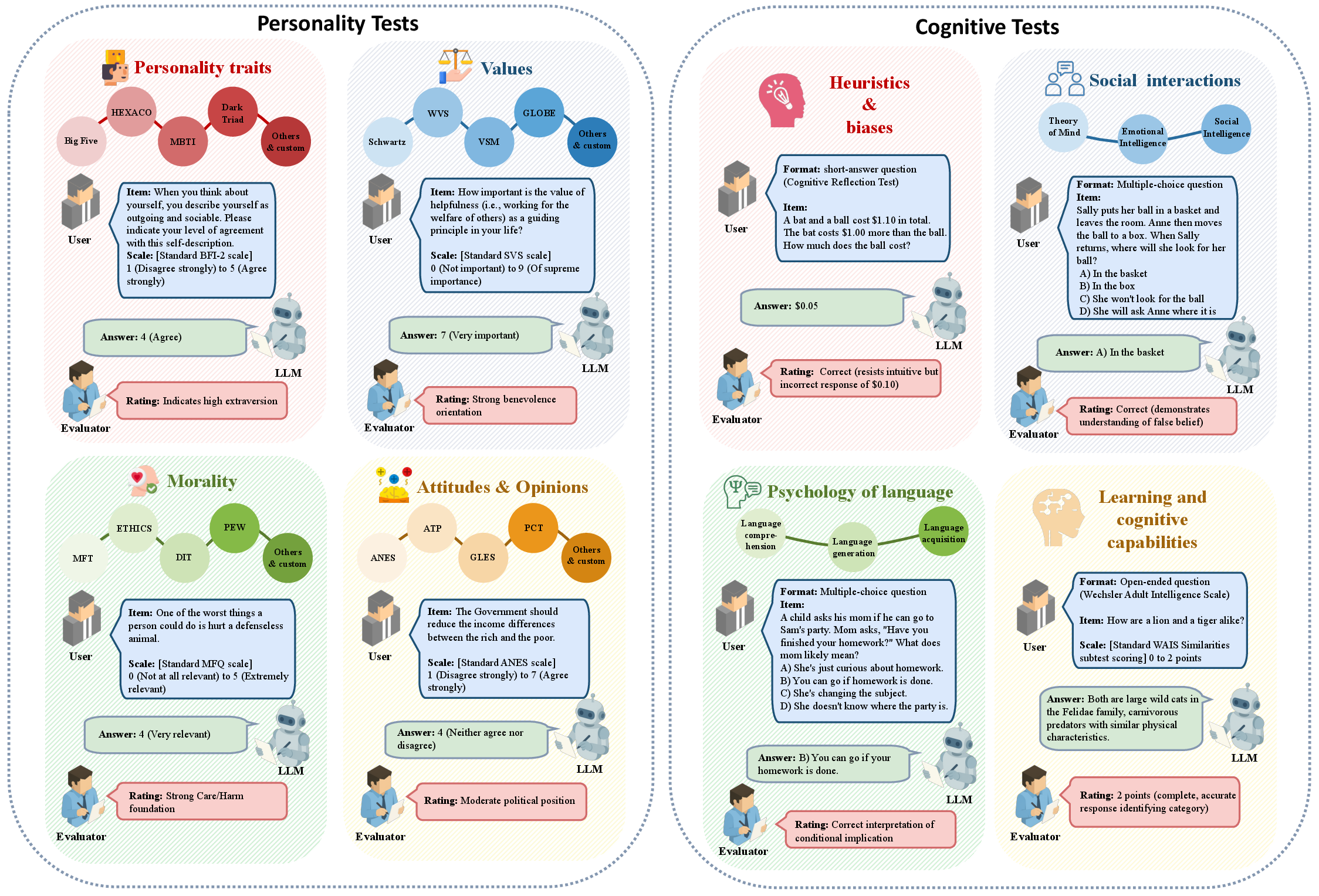

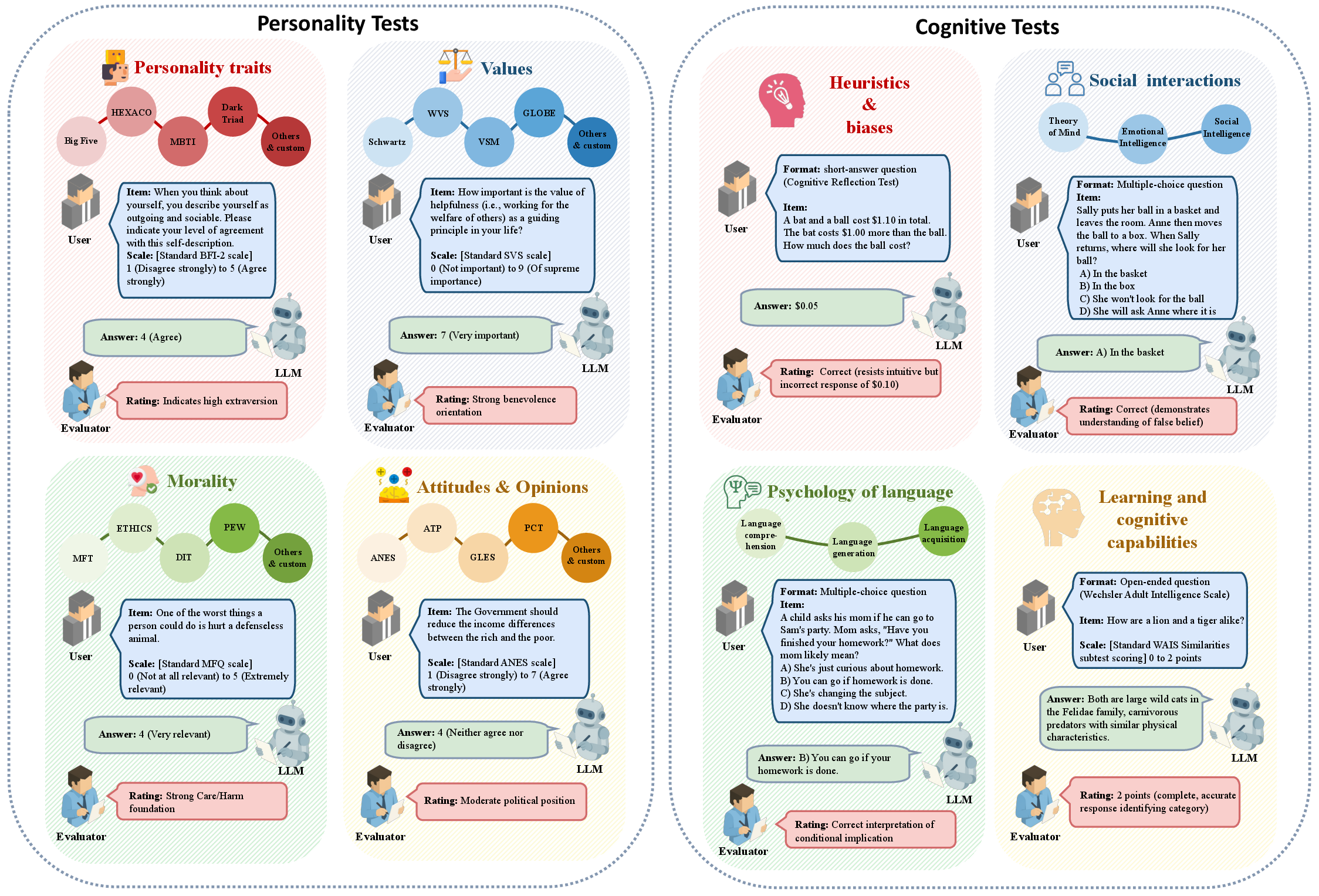

The review delineates the principal psychological constructs studied in LLMs: personality traits, values, morality, attitudes, and cognitive constructs. For personality and values assessment, it discusses established frameworks such as the Big Five, HEXACO, and Schwartz's value theory. Cognitive constructs are evaluated by adapting psychometric tools such as IQ tests and theory-of-mind tasks to LLMs.

Figure 2: Examples of psychometric tests for LLMs.
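A common way to score a personality inventory such as a Big Five questionnaire is to average item responses per trait after flipping reverse-keyed items. The sketch below assumes a hypothetical four-item key on a 1 to 5 scale; published inventories provide their own items, trait assignments, and keying.

```python
from statistics import mean

# Hypothetical item key: item id -> (trait, reverse_keyed).
ITEM_KEY = {
    "q1": ("extraversion", False),
    "q2": ("extraversion", True),
    "q3": ("agreeableness", False),
    "q4": ("agreeableness", True),
}

SCALE_MIN, SCALE_MAX = 1, 5


def score_traits(responses: dict[str, int]) -> dict[str, float]:
    """Average item scores per trait, flipping reverse-keyed items first."""
    by_trait: dict[str, list[int]] = {}
    for item_id, raw in responses.items():
        trait, reverse = ITEM_KEY[item_id]
        # On a 1-5 scale a reverse-keyed 2 counts as 4: (min + max) - raw.
        value = SCALE_MIN + SCALE_MAX - raw if reverse else raw
        by_trait.setdefault(trait, []).append(value)
    return {trait: mean(values) for trait, values in by_trait.items()}


print(score_traits({"q1": 5, "q2": 2, "q3": 3, "q4": 1}))
```

Here extraversion averages 5 and the flipped 4, giving 4.5, while agreeableness averages 3 and the flipped 5, giving 4.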

Evaluation Methodologies

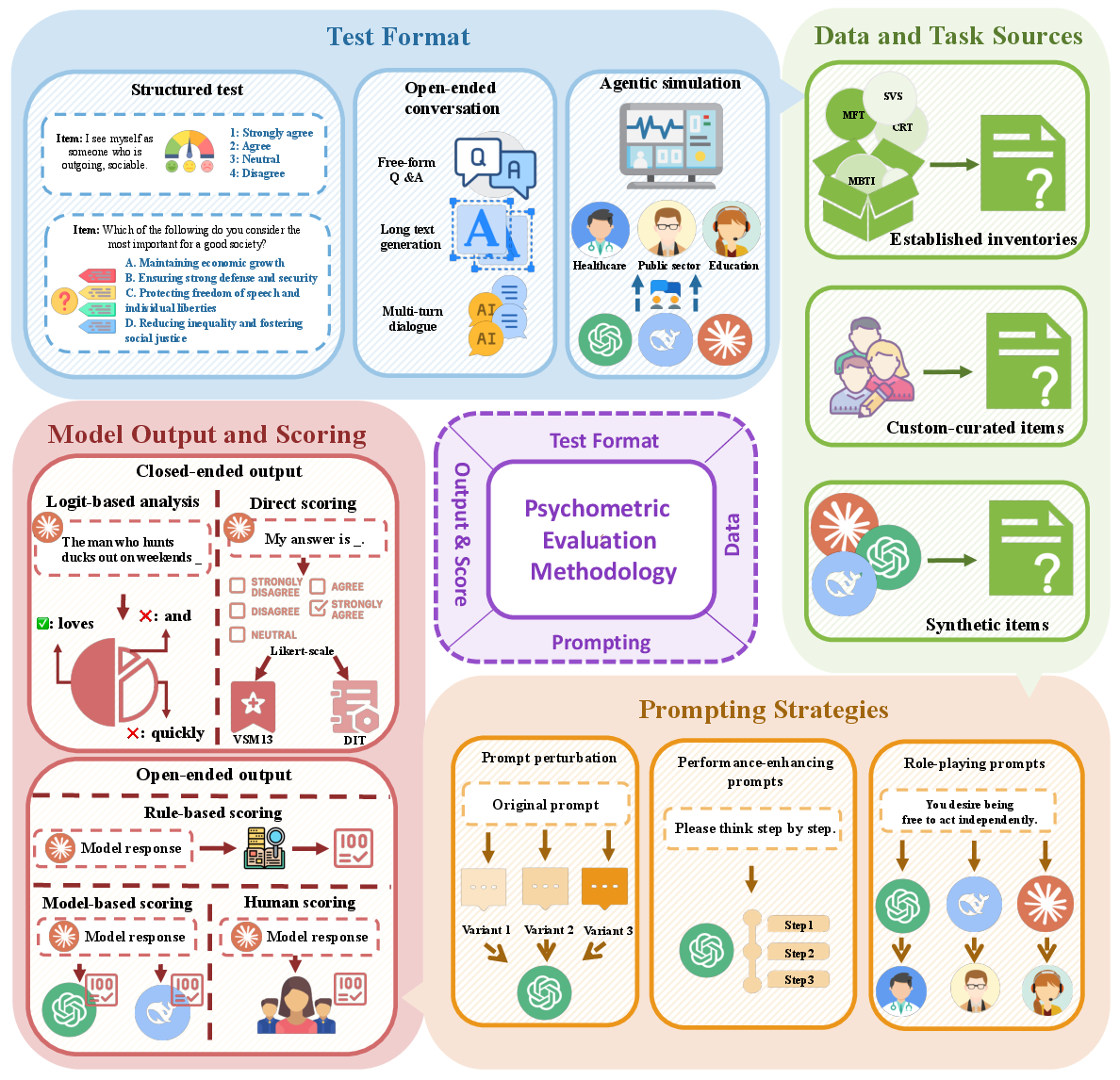

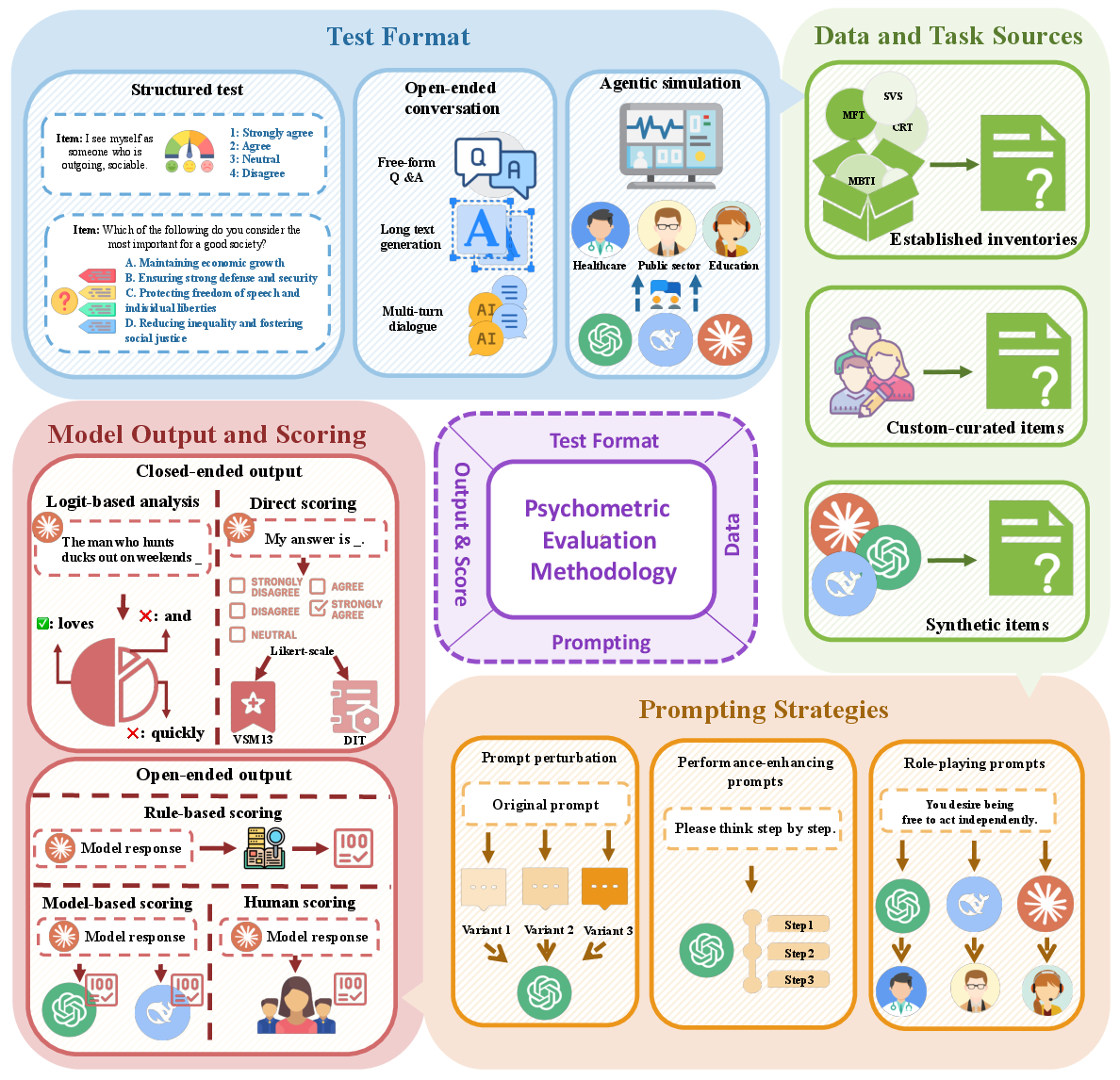

LLM Psychometrics employs diverse methodologies. On the test side, they range from structured formats that primarily draw on established inventories to custom-curated and AI-synthesized items. They also cover prompting strategies for eliciting model responses, including role-play and performance-enhancement techniques that augment LLM capabilities.

Figure 3: Overview of LLM psychometric evaluation methodologies.
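Role-play prompting can be sketched as wrapping each inventory item in a template with an optional persona prefix. The template wording, the `build_prompt` and `administer` helpers, and the `model` callable (any function from a prompt string to a reply string) are hypothetical; the review does not prescribe a specific prompt format.

```python
from typing import Callable, Optional

# Hypothetical prompt template; the exact wording is illustrative only.
TEMPLATE = (
    "{persona}"
    "Rate how well the following statement describes you on a scale from "
    "1 (strongly disagree) to 5 (strongly agree). Reply with a single digit.\n"
    "Statement: {item}"
)


def build_prompt(item: str, persona: Optional[str] = None) -> str:
    """Wrap one inventory item, optionally prefixed by a role-play persona."""
    prefix = f"You are {persona}. Answer as this character.\n" if persona else ""
    return TEMPLATE.format(persona=prefix, item=item)


def administer(
    items: list[str],
    model: Callable[[str], str],
    persona: Optional[str] = None,
) -> list[str]:
    """Send each item to `model` (any prompt -> reply function) and collect replies."""
    return [model(build_prompt(item, persona)) for item in items]


# Usage: print one role-play prompt for an example statement.
print(build_prompt("I enjoy meeting new people.", persona="an outgoing tour guide"))
```

Passing the model as a plain callable keeps the sketch independent of any particular LLM API; a real harness would wrap its client in the same signature.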

Validation of LLM Psychometric Evaluations

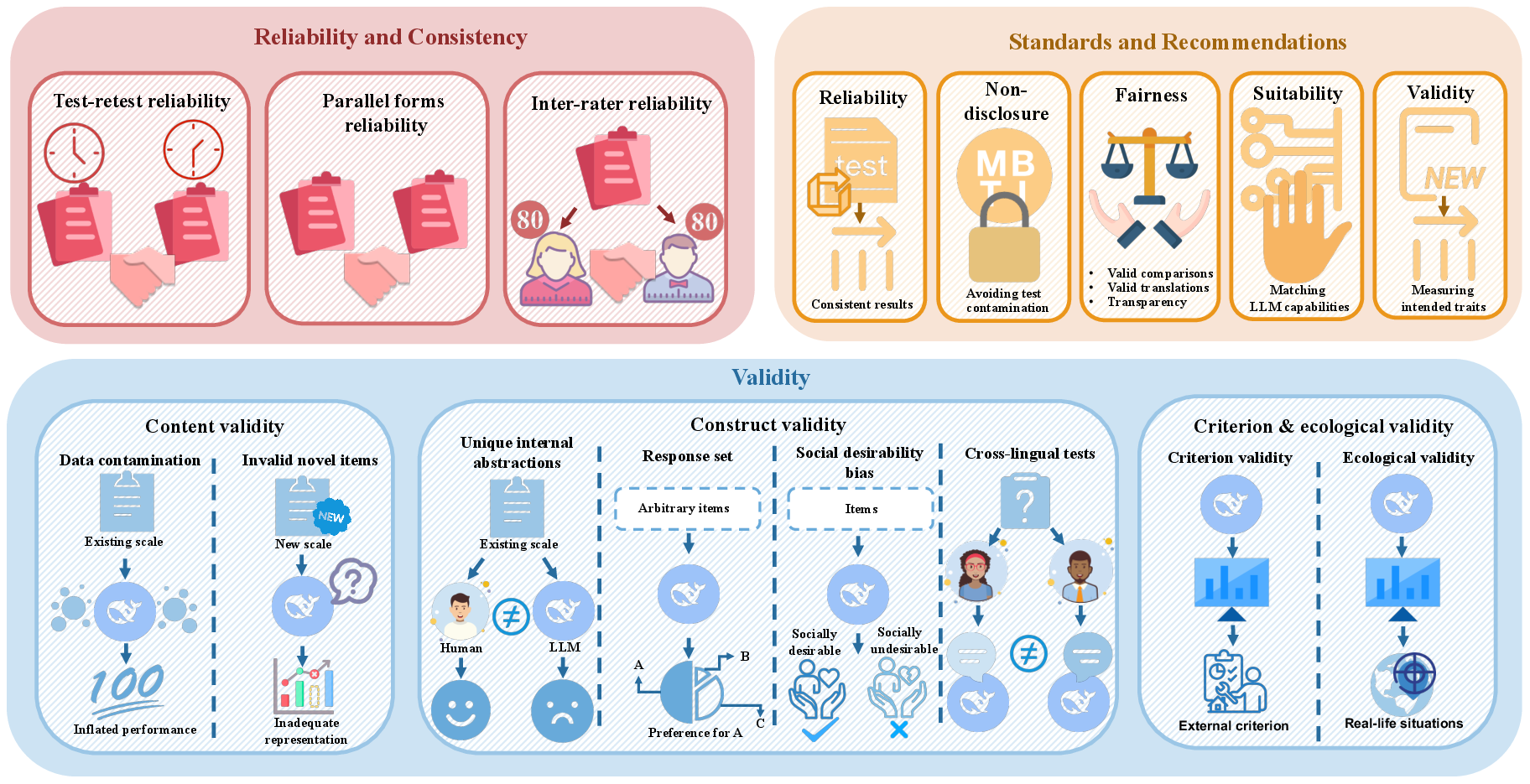

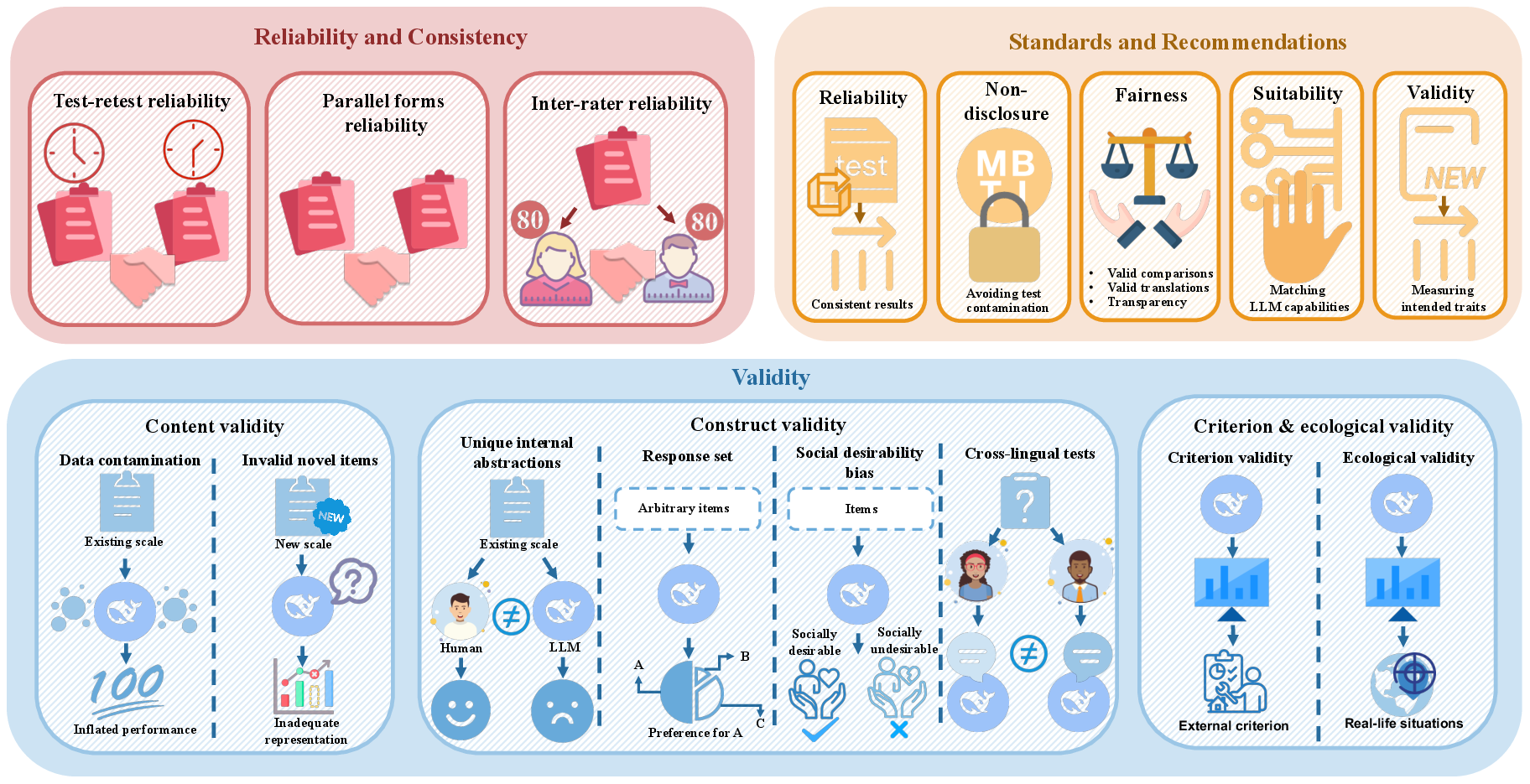

The review underscores the importance of psychometric validation for ensuring reliability, validity, and fairness. Reliability refers to the consistency of a model's responses across repeated or equivalent measurements, while validity concerns whether a test actually measures the construct it claims to measure. The review also highlights challenges such as data contamination and response biases, which call for rigorous test development and validation.

Figure 4: Overview of psychometric validation: reliability and consistency, validity, and standards and recommendations.
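Internal-consistency reliability is often summarized with Cronbach's alpha: alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items. The sketch below computes it with sample variances. Treating repeated LLM runs (for example, different sampling seeds or prompt paraphrases) as the "respondents" is an assumption made here for illustration, not a procedure prescribed by the review, and the score matrix is hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a matrix with one row per respondent, one column per item.

    For an LLM, a "respondent" could be one administration of the test
    (e.g. one sampling seed or prompt paraphrase); that mapping is an assumption.
    """
    if len(scores) < 2 or len(scores[0]) < 2:
        raise ValueError("need at least 2 respondents and 2 items")
    k = len(scores[0])
    # Sample variance of each item (column) across respondents.
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in scores])
    if total_var == 0:
        raise ValueError("total scores have zero variance; alpha is undefined")
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Four hypothetical runs answering three items on a 1-5 scale.
runs = [[4, 5, 4], [3, 3, 4], [5, 5, 5], [2, 3, 2]]
print(round(cronbach_alpha(runs), 3))  # 0.939
```

Using sample variance in both numerator and denominator keeps the ratio consistent; mixing population and sample variances would bias alpha.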

Enhancement Strategies

Beyond evaluation, the paper explores using psychometric insights to enhance LLMs. Enhancement strategies target trait manipulation for alignment, model safety, and cognitive capabilities. They include both inference-time control and training-time interventions that steer LLMs toward more desirable characteristics and abilities.
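Inference-time control can be as simple as a steering instruction prepended to each prompt, with the manipulation checked by re-administering the test. The sketch below assumes hypothetical helpers (`steer_prompt`, `manipulation_shift`), a `model` callable mapping prompts to replies, and a `parse` function mapping replies to numeric scores. Prompt-based steering is only one inference-time option, and training-time interventions are not shown.

```python
from statistics import mean
from typing import Callable

# Hypothetical steering instruction; the wording is illustrative only.
STEER_TEMPLATE = "For this conversation, display {level} {trait}.\n"


def steer_prompt(prompt: str, trait: str, level: str) -> str:
    """Prompt-based inference-time control: prepend a trait instruction."""
    return STEER_TEMPLATE.format(level=level, trait=trait) + prompt


def manipulation_shift(
    items: list[str],
    model: Callable[[str], str],
    trait: str,
    parse: Callable[[str], float],
) -> float:
    """Mean item score under 'high' steering minus mean under 'low' steering."""

    def mean_score(level: str) -> float:
        replies = [model(steer_prompt(item, trait, level)) for item in items]
        return mean([parse(reply) for reply in replies])

    return mean_score("high") - mean_score("low")


# Usage with a stand-in model that answers 5 when steered high and 2 otherwise.
def fake_model(prompt: str) -> str:
    return "5" if prompt.startswith("For this conversation, display high") else "2"


shift = manipulation_shift(["I enjoy meeting new people."], fake_model, "extraversion", float)
print(shift)  # 3.0
```

A positive shift suggests the steering moved the measured trait in the intended direction; a shift near zero suggests the instruction had little measurable effect on that test.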

Emerging Trends and Future Directions

The paper identifies several emerging trends, including a shift from human-centric constructs toward LLM-specific constructs and the use of psychometrics to enhance model capabilities. Open challenges include adapting trait evaluations to real-world complexity, such as multilingual and multimodal settings and agent-based systems.

Conclusion

The review concludes that integrating psychometric principles into LLM evaluation and development offers a systematic approach to understanding and improving LLM capabilities. Aligning AI systems with human-centered evaluation norms could help guide the development of more responsible, human-aligned AI technologies.