An Insightful Overview of "Machine Psychology"

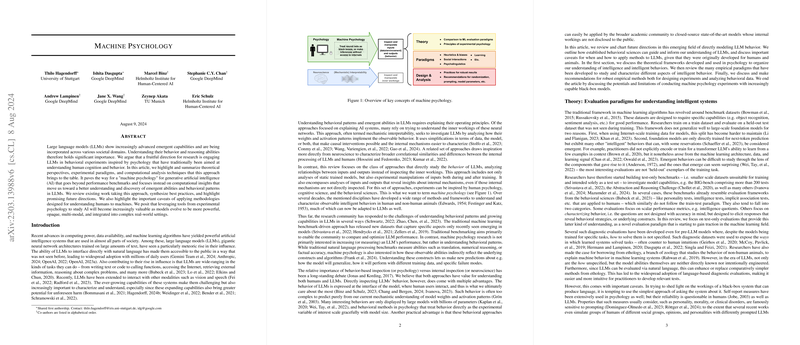

The paper "Machine Psychology" by Hagendorff et al. presents a comprehensive framework for studying large language models (LLMs) through methodologies drawn from the psychological, cognitive, and behavioral sciences. This interdisciplinary approach aims to move beyond traditional mechanistic interpretability, which focuses on the internal workings of neural networks, and instead analyzes the observable behavioral patterns and emergent capabilities of LLMs.

Key Concepts and Methodologies

The paper begins by introducing "machine psychology," a paradigm that adapts experimental techniques from human cognitive and behavioral studies to LLMs. This includes both static analysis of trained models and dynamic manipulations of input data during and after training, with the goal of inferring the mechanisms behind a model's behavior from how it responds. The approach shifts the focus from merely improving LLM performance to understanding the underlying constructs and algorithms driving their behavior.

Evaluation Paradigms

The authors discuss the limitations of traditional benchmarking methods, which are primarily designed to measure specific capabilities like object recognition or sentiment analysis. These methods fall short when applied to LLMs, which exhibit emergent behaviors not directly encoded in their training objectives. To address this, the paper advocates for the development of test-only benchmarks and diagnostic evaluations inspired by psychological testing, such as intelligence and personality tests, that do not follow the conventional train-test paradigm. These benchmarks aim to characterize behavioral strategies and underlying constructs, rather than just measuring performance.

Empirical Paradigms in Machine Psychology

The paper explores various aspects of intelligent behavior, each studied by different sub-fields of behavioral sciences:

- Heuristics and Biases: The authors explore the application of the heuristics and biases framework to examine the decision-making processes of LLMs. They report findings that earlier LLMs, like GPT-3, exhibit some cognitive biases similar to humans, but these biases have largely disappeared in the latest generation of LLMs.

- Social Interactions: This section applies developmental psychology paradigms to LLMs to assess their social intelligence and capabilities in modeling human communicators. For instance, theory of mind tests, which measure the ability to infer unobservable mental states, reveal that newer LLMs show improved performance over earlier models.

- Psychology of Language: The paper reviews studies that compare LLMs' language processing to human psycholinguistics, using techniques like surprisal measures, priming, and garden path sentences. These studies help assess how well LLMs capture the nuances of human language understanding.

- Learning: The focus here is on understanding the emergent in-context learning abilities of LLMs. Researchers employ cognitive science methods to compare LLM outputs with hypothesized learning algorithms, aiming to uncover the implicit learning algorithms driving LLM behavior.
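
The surprisal measure mentioned under Psychology of Language can be made concrete with a small sketch. Surprisal is the negative log probability a model assigns to a token given its context, so a garden path sentence is expected to show a spike at the disambiguating word. The sketch below is only an illustration: the function names are hypothetical, and the per-token probabilities are placeholder values invented for the example, not outputs of any real model or results from the paper.

```python
import math


def surprisal_bits(probability: float) -> float:
    """Surprisal, in bits, of a token with the given conditional probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)


def sentence_surprisals(token_probs):
    """Map each (token, probability) pair to a (token, surprisal) pair."""
    return [(token, surprisal_bits(p)) for token, p in token_probs]


# Placeholder probabilities, invented purely for illustration.
# In a real study these would come from the model under test.
garden_path = [
    ("The", 0.25),
    ("horse", 0.125),
    ("raced", 0.0625),
    ("past", 0.5),
    ("the", 0.5),
    ("barn", 0.25),
    ("fell", 0.001953125),  # hypothetical low probability at the disambiguating word
]

# The token with the highest surprisal marks where the model is most "surprised".
peak = max(sentence_surprisals(garden_path), key=lambda pair: pair[1])
```

In a real experiment, the per-token surprisal profile would then be compared with human measures such as reading times at the same positions.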

Designing Robust Experiments

The paper emphasizes the importance of rigor in experimental design to avoid pitfalls like training data contamination and sampling biases. It provides guidelines for constructing prompts that LLMs have not encountered during training and suggests using multiple prompt variations to check that results are reliable and generalize beyond a single wording. The authors also discuss techniques such as chain-of-thought prompting and few-shot prompting, which can improve LLM reasoning performance.
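
The advice to use multiple prompt variations can be sketched as a small evaluation loop. This is a minimal sketch under stated assumptions, not the authors' procedure: `ask_model` is a hypothetical callable that sends a prompt to whatever LLM is being studied and returns its text reply, and `stub_model` is a toy stand-in so the example runs without any API. The templates and test items are likewise made up for illustration.

```python
from statistics import mean, pstdev
from typing import Callable, Dict, List, Tuple


def evaluate_prompt_variants(
    ask_model: Callable[[str], str],
    templates: List[str],
    items: List[Tuple[str, str]],
) -> Dict[str, float]:
    """Return the accuracy obtained with each prompt template."""
    scores = {}
    for template in templates:
        correct = 0
        for question, expected in items:
            answer = ask_model(template.format(question=question))
            correct += int(answer.strip().lower() == expected.lower())
        scores[template] = correct / len(items)
    return scores


def summarize(scores: Dict[str, float]) -> Tuple[float, float]:
    """Mean accuracy and its spread (population std. dev.) across templates."""
    values = list(scores.values())
    return mean(values), pstdev(values)


def stub_model(prompt: str) -> str:
    # Toy stand-in for a real LLM call (assumption): it only "knows" 2 + 2.
    return "4" if "2 + 2" in prompt else "unknown"


templates = [
    "What is {question}?",
    "Compute {question} and reply with the number only.",
    "Q: {question}\nA:",
]
items = [("2 + 2", "4"), ("3 + 3", "6")]

scores = evaluate_prompt_variants(stub_model, templates, items)
average, spread = summarize(scores)
```

A large spread across templates would suggest that a result depends on the particular wording rather than on a stable property of the model.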

Implications and Future Directions

The research outlined in "Machine Psychology" has significant implications for both the practical deployment and theoretical understanding of LLMs. By adopting behavioral sciences methodologies, researchers can uncover new abilities and behavioral patterns in LLMs, contributing to the fields of AI safety and alignment. The paper suggests that future work in machine psychology will be crucial as LLMs continue to evolve and integrate into complex real-world settings. Longitudinal studies, multimodal models, and augmented LLMs interacting with diverse data types will further enrich this nascent field.

In conclusion, the paper by Hagendorff et al. represents a pioneering effort to merge psychology and AI research, offering a framework for understanding the complex behaviors of LLMs. This interdisciplinary approach provides robust tools for exploring the emergent capabilities of these models, paving the way for more nuanced and holistic analysis of AI systems.