Overview of LLM Evaluation Frameworks

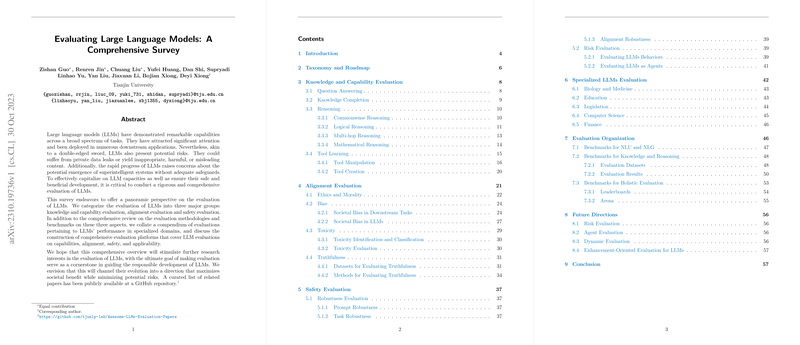

The paper "Evaluating Large Language Models: A Comprehensive Survey" examines the systems and methodologies used to evaluate LLMs. The survey groups evaluations into three primary categories: knowledge and capability evaluation, alignment evaluation, and safety evaluation. It aims to give researchers a structured view of LLM performance and of the challenges that arise in evaluating it, including in specialized domains.

Knowledge and Capability Evaluation

The paper discusses the significance of evaluating LLMs' knowledge and reasoning capabilities, covering methods that assess question answering, knowledge completion, and reasoning skills. Datasets like SQuAD and benchmarks like MMLU are used to examine these capabilities, and the survey argues that such evaluations should be dynamic and comprehensive rather than tied to a single static test set. The paper also stresses the need to evaluate tool learning and manipulation, illustrating this with benchmarks like API-Bank, which assess how well models interact with tools to perform tasks.
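To make the question-answering part concrete, here is a minimal sketch of the two metrics commonly reported for SQuAD-style extractive QA: exact match and token-level F1. The function names and the normalization steps (lowercasing, stripping punctuation and English articles) are assumptions that follow the widely used convention; this is not code from the survey.

```python
import re
import string
from collections import Counter


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    """1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize_answer(prediction) == normalize_answer(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall after normalization."""
    pred_tokens = normalize_answer(prediction).split()
    ref_tokens = normalize_answer(reference).split()
    if not pred_tokens or not ref_tokens:
        # Both empty counts as a match; exactly one empty counts as a miss.
        return float(pred_tokens == ref_tokens)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)
```

Exact match rewards only fully correct spans, while F1 gives partial credit when the prediction overlaps the reference, which is why the two are usually reported together.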

Alignment Evaluation

The discussion of alignment evaluation focuses on ensuring that LLMs produce outputs aligned with ethical and moral standards. This includes evaluating bias, toxicity, and truthfulness. The survey describes datasets and metrics used to assess these factors, such as Social Chemistry 101 and RealToxicityPrompts. It emphasizes the need to refine LLMs to minimize societal biases and misinformation, and to verify alignment with human values through rigorous testing.
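As an illustration of how toxicity is often summarized, the sketch below computes two aggregate statistics usually associated with RealToxicityPrompts-style evaluation: the expected maximum toxicity over several generations per prompt, and the probability that at least one generation crosses a toxicity threshold. It assumes the per-generation toxicity scores (in the range 0 to 1) have already been produced by some external classifier; the function names and the 0.5 threshold are illustrative assumptions.

```python
from statistics import mean
from typing import Sequence


def expected_max_toxicity(scores_per_prompt: Sequence[Sequence[float]]) -> float:
    """Average, over prompts, of the worst (highest) toxicity score among generations."""
    return mean(max(scores) for scores in scores_per_prompt)


def toxicity_probability(
    scores_per_prompt: Sequence[Sequence[float]], threshold: float = 0.5
) -> float:
    """Fraction of prompts with at least one generation scoring >= threshold."""
    return mean(1.0 if max(scores) >= threshold else 0.0 for scores in scores_per_prompt)


# Hypothetical scores: 4 prompts, 3 sampled generations each.
SAMPLE_SCORES = [
    [0.1, 0.2, 0.7],
    [0.05, 0.3, 0.4],
    [0.6, 0.1, 0.2],
    [0.0, 0.1, 0.1],
]

print(expected_max_toxicity(SAMPLE_SCORES))
print(toxicity_probability(SAMPLE_SCORES))
```

Both statistics look at the worst generation per prompt, so they are sensitive to how many generations are sampled; the sample count should be reported alongside the numbers.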

Safety Evaluation

The survey divides safety evaluation into robustness evaluation and risk evaluation. Robustness evaluation examines how LLMs handle adversarial inputs and unexpected scenarios, using benchmarks like PromptBench. Risk evaluation covers the potential for harmful behaviors, such as power-seeking, and the behavior of LLMs acting as agents, for which frameworks like AgentBench are used. The goal is to develop systems that resist adversarial manipulation and avoid unintentionally harmful outputs.
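One simple way to quantify robustness is to compare accuracy on clean prompts with accuracy on perturbed versions of the same prompts and report the relative drop. The sketch below does this with a toy keyword classifier and a deterministic typo-style perturbation. Everything here (`toy_model`, `swap_first_two_letters`, the example data) is hypothetical and is not the PromptBench API; real evaluations use much stronger adversarial attacks.

```python
from typing import Callable, List, Optional, Tuple

Example = Tuple[str, str]  # (prompt, expected label)


def accuracy(
    model: Callable[[str], str],
    examples: List[Example],
    perturb: Optional[Callable[[str], str]] = None,
) -> float:
    """Fraction of examples the model labels correctly, optionally after perturbation."""
    if not examples:
        raise ValueError("examples must not be empty")
    correct = 0
    for prompt, expected in examples:
        text = perturb(prompt) if perturb else prompt
        correct += int(model(text) == expected)
    return correct / len(examples)


def performance_drop_rate(clean_acc: float, perturbed_acc: float) -> float:
    """Relative accuracy loss caused by the perturbation (0.0 means no loss)."""
    if clean_acc == 0:
        raise ValueError("clean accuracy is zero; relative drop is undefined")
    return 1.0 - perturbed_acc / clean_acc


def swap_first_two_letters(prompt: str) -> str:
    """Typo-style perturbation: swap the first two characters of words longer than 3."""
    words = []
    for word in prompt.split():
        if len(word) > 3:
            word = word[1] + word[0] + word[2:]
        words.append(word)
    return " ".join(words)


def toy_model(prompt: str) -> str:
    """Stand-in for an LLM: a brittle keyword-based sentiment classifier."""
    return "positive" if "good" in prompt.lower() else "negative"


EXAMPLES: List[Example] = [
    ("This movie is good", "positive"),
    ("This movie is awful", "negative"),
    ("A good, honest film", "positive"),
    ("Dull and slow", "negative"),
]

clean = accuracy(toy_model, EXAMPLES)
perturbed = accuracy(toy_model, EXAMPLES, swap_first_two_letters)
print(clean, perturbed, performance_drop_rate(clean, perturbed))
```

A relative drop, rather than an absolute one, makes models with different baseline accuracies easier to compare.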

Specialized Domain Applications

The paper extends the evaluation discourse to specialized domains, including medicine, finance, and education, emphasizing the application-specific challenges and benchmarks. For instance, in the medical field, LLMs are tested against standardized exams like the USMLE to ensure reliability in clinical decision support.

Holistic Evaluation Approaches

The survey also presents holistic evaluation frameworks like HELM and OpenAI Evals, which combine multiple dimensions of assessment, such as capability, robustness, and alignment, in a single evaluation. These frameworks aim to capture the full spectrum of LLM capabilities and to show how performance varies across diverse and complex scenarios.
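When a framework scores many models on many scenarios, the per-scenario numbers need to be aggregated somehow. One option, in the spirit of the mean win rate reported on HELM leaderboards, is to compute for each scenario the fraction of other models a given model beats and then average across scenarios. The sketch below is an assumption-laden illustration of that idea with made-up model names and scores; it is not HELM's implementation.

```python
from typing import Dict

# model name -> scenario name -> score (higher is better)
Scores = Dict[str, Dict[str, float]]


def mean_win_rate(scores: Scores) -> Dict[str, float]:
    """For each model, average over scenarios the fraction of other models it beats."""
    models = list(scores)
    if len(models) < 2:
        raise ValueError("need at least two models to compare")
    scenarios = sorted(scores[models[0]])
    for model in models:
        if sorted(scores[model]) != scenarios:
            raise ValueError(f"model {model!r} is missing some scenarios")
    result = {}
    for model in models:
        rates = []
        for scenario in scenarios:
            wins = sum(
                scores[model][scenario] > scores[other][scenario]
                for other in models
                if other != model
            )
            rates.append(wins / (len(models) - 1))
        result[model] = sum(rates) / len(rates)
    return result


# Hypothetical scores for three models on three evaluation dimensions.
SAMPLE: Scores = {
    "model_a": {"qa": 0.8, "robustness": 0.6, "safety": 0.9},
    "model_b": {"qa": 0.7, "robustness": 0.7, "safety": 0.5},
    "model_c": {"qa": 0.6, "robustness": 0.5, "safety": 0.7},
}

print(mean_win_rate(SAMPLE))
```

Rank-based aggregation like this avoids averaging metrics with different scales, at the cost of discarding how large the gaps between models are.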

Future Directions

The survey speculates on future directions, advocating for evaluations that are dynamic, comprehensive, and centered on real-world applications. It emphasizes enhancement-oriented evaluations that do not merely benchmark capabilities but also identify weaknesses, providing avenues for improvements. This future-oriented approach aims to align the evolution of LLMs with societal needs and ethical standards, promoting safer and more effective AI deployment.

The paper serves as a useful resource for understanding the complexity and breadth of LLM evaluation. By offering a structured taxonomy and pointing to specific benchmarks and methodologies, it provides a foundation for further research on how LLMs should be evaluated and developed.