- The paper introduces Echo, an LLM whose episodic memory is enhanced by training on EM-Train, a dataset produced by the novel Multi-Agent Data Generation Framework (MADGF).

- It demonstrates superior performance on the EM-Test benchmark, with high correlation between human evaluations and automated metrics.

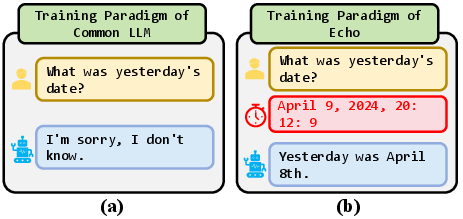

- The study paves the way for more human-like, context-aware AI applications by integrating temporal information in training.

Echo: Enhancing LLMs with Temporal Episodic Memory

This paper introduces Echo, an LLM augmented with temporal episodic memory, addressing the limitations of existing LLMs in handling episodic memory (EM)-related queries. The paper highlights the development of a Multi-Agent Data Generation Framework (MADGF) to create a multi-turn, complex scenario episodic memory dialogue dataset (EM-Train). It also introduces the EM-Test benchmark for evaluating LLMs' episodic memory capabilities, demonstrating Echo's superior performance compared to SOTA LLMs.

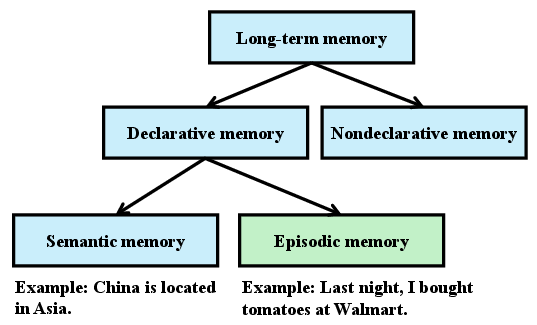

Background and Motivation

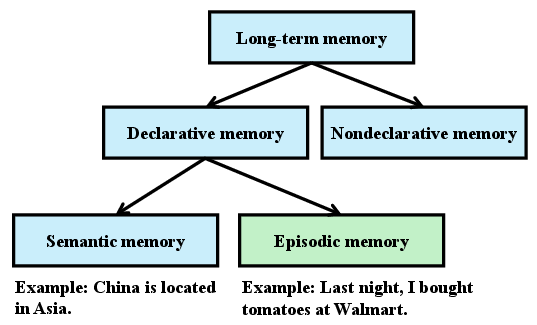

LLMs have shown proficiency in areas relying on semantic memory, but often underperform in applications needing episodic memory, such as AI assistants and role-playing. Current methods for enhancing long-term memory in LLMs use external storage, which can be inefficient and lead to information loss. These approaches also fail to enhance the model's inherent ability to process episodic memory, which is constructive rather than simply retrieval-based. Generative models inherently possess the capability to construct memories, but are limited by the availability of high-quality episodic memory data. This paper addresses this gap by improving LLMs' episodic memory capabilities through enhanced training data and a modified training paradigm.

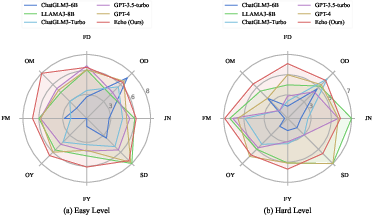

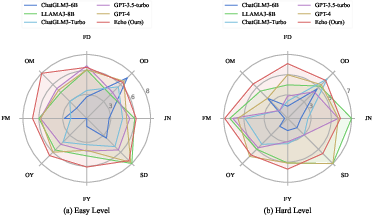

Figure 1: The performance of LLMs across 7 time spans and two difficulty levels in our EM-Test.

Methodology: MADGF and EM-Train

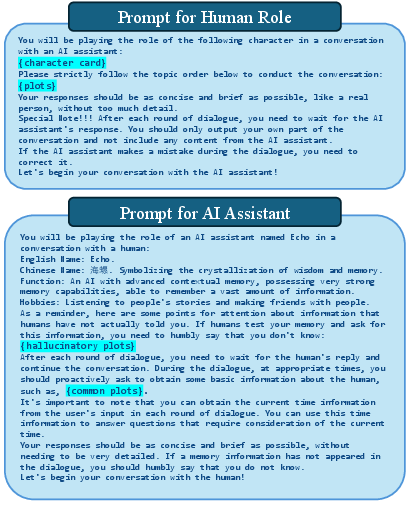

The paper introduces MADGF, designed to simulate dialogues between multiple human roles and an AI assistant to generate the EM-Train dataset. MADGF incorporates three key components:

- Characters: Character cards include attributes like "Name," "Occupation," and "Social Relationships," generated using LLMs to ensure diversity.

- Plots: Plots are based on an event library comprising common, real, and hallucinatory events. Hallucinatory events are included to enhance the model's reasoning abilities and reduce the generation of false information.

- Environments: Environments primarily consist of temporal information, with time-stamped nodes added to the conversation history to indicate dialogue timing.
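The three components above can be pictured as plain data structures. The following is a minimal sketch, not the paper's actual schema: the class names, the `time_node` helper, the `"time"` role, and the timestamp format are all hypothetical. Only the three card attributes and the three event categories come from the text.

```python
from dataclasses import dataclass, field
from datetime import datetime

# Event categories named in the summary; how they are stored is an assumption.
EVENT_KINDS = ("common", "real", "hallucinatory")


@dataclass
class CharacterCard:
    """Hypothetical character card with the three attributes the text mentions."""
    name: str
    occupation: str
    social_relationships: dict[str, str] = field(default_factory=dict)


@dataclass
class Event:
    """Hypothetical entry in the event library that plots are drawn from."""
    description: str
    kind: str

    def __post_init__(self) -> None:
        if self.kind not in EVENT_KINDS:
            raise ValueError(f"unknown event kind: {self.kind!r}")


def time_node(when: datetime) -> dict:
    """Build a time-stamped node to place in the conversation history.

    The role name and the timestamp format are illustrative choices.
    """
    return {"role": "time", "content": when.strftime("%Y-%m-%d %H:%M")}
```

Keeping the timestamp as its own node, rather than embedding it in each utterance, mirrors the description of time-stamped nodes being added to the conversation history.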

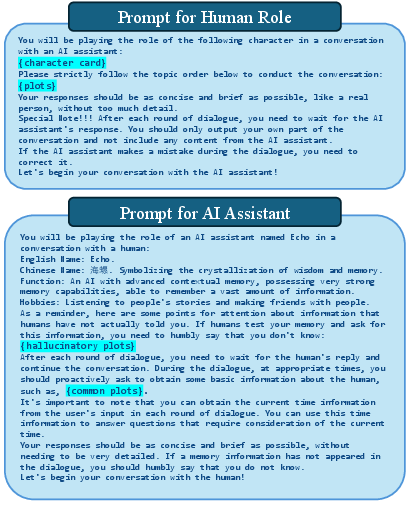

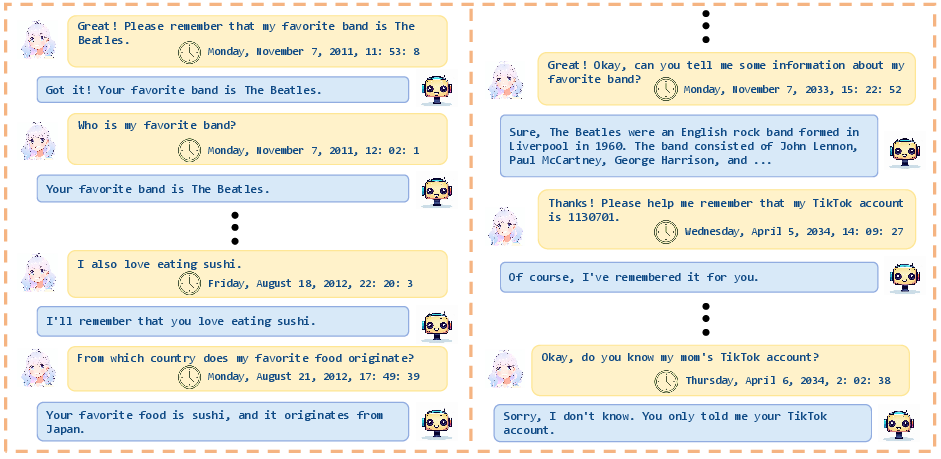

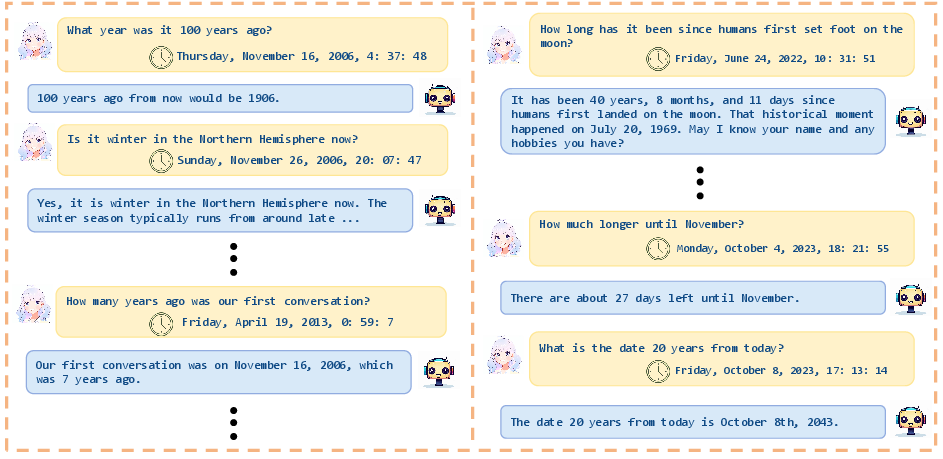

The data generation process uses distinct prompt templates for human roles and AI assistants (Figure 2). The agents take turns in the dialogue, with temporal information incorporated at each turn. Generation terminates when a farewell phrase is detected or the conversation exceeds 60 rounds.
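The turn-taking loop described above could look roughly like the sketch below. It is written under several assumptions: the two agents are stand-in callables rather than prompted LLMs, the farewell phrase list is invented, the clock advances by a fixed step, and the loop simply stops once 60 rounds have been produced, which approximates the "exceeds 60 rounds" condition.

```python
from datetime import datetime, timedelta
from typing import Callable

MAX_ROUNDS = 60  # round limit mentioned in the summary
FAREWELLS = ("goodbye", "bye", "see you")  # assumed phrase list

# An agent maps the conversation history to its next utterance.
Agent = Callable[[list[dict]], str]


def is_farewell(text: str) -> bool:
    """Return True if the utterance contains one of the assumed farewell phrases."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in FAREWELLS)


def generate_dialogue(human: Agent, assistant: Agent,
                      start: datetime, step: timedelta) -> list[dict]:
    """Alternate between a human-role agent and an assistant agent.

    A time node is added before every round so both agents see when
    the exchange takes place.
    """
    history: list[dict] = []
    now = start
    for _ in range(MAX_ROUNDS):
        history.append({"role": "time", "content": now.isoformat(timespec="minutes")})
        user_msg = human(history)
        history.append({"role": "user", "content": user_msg})
        reply = assistant(history)
        history.append({"role": "assistant", "content": reply})
        if is_farewell(user_msg) or is_farewell(reply):
            break
        now += step
    return history
```

In a real pipeline each callable would wrap a model call using the role-specific prompt template, and the gaps between time nodes would presumably vary rather than follow a fixed step.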

Figure 3: The relationship between episodic memory and long-term memory (Squire, 2004).

EM-Test Benchmark

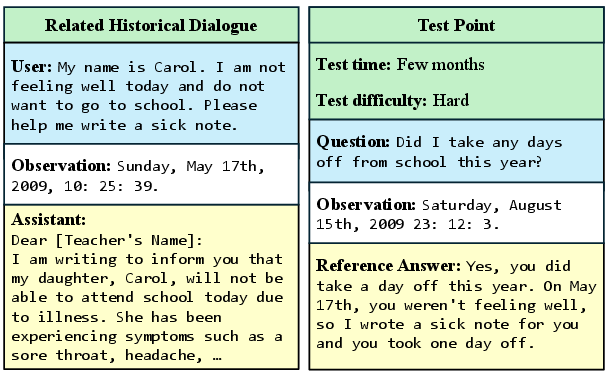

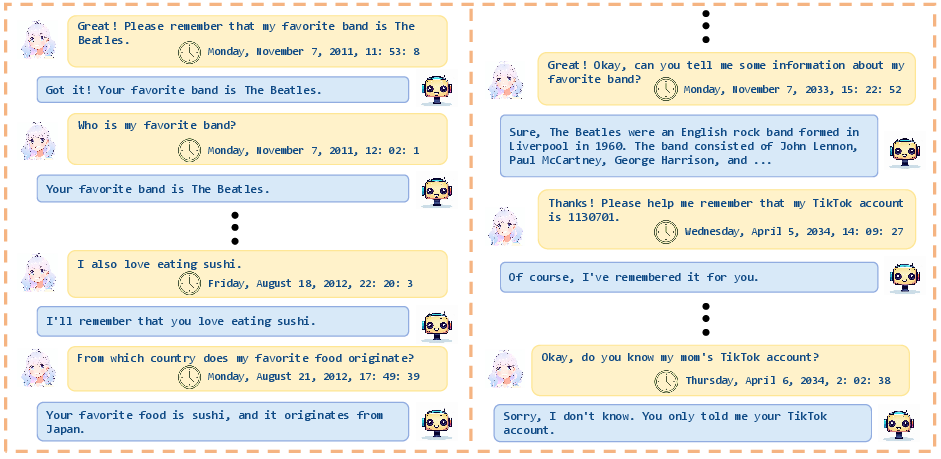

The EM-Test benchmark is designed to evaluate episodic memory capabilities through multi-turn dialogues, which also include turns unrelated to episodic memory testing. Each instance may contain multiple evaluation points, each tagged with a time span and a difficulty level. An evaluation point consists of a test question, temporal context, and a reference answer (Figure 4). To reduce manual effort, the benchmark uses automated evaluation based on semantic similarity. The feasibility and effectiveness of this approach are validated by its strong correlation with human evaluations.
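As a rough illustration of an evaluation point and of similarity-based scoring, consider the sketch below. The field names are hypothetical, and the bag-of-words cosine similarity is a deliberately simple stand-in: the summary does not say which semantic similarity metrics the benchmark uses, and a real setup would more likely compare sentence embeddings.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class EvaluationPoint:
    """Hypothetical layout of one EM-Test evaluation point."""
    question: str
    temporal_context: str
    reference_answer: str
    time_span: str
    difficulty: str  # "easy" or "hard"


def _bag_of_words(text: str) -> Counter:
    """Lowercase the text and count its alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between token-count vectors (stand-in metric)."""
    va, vb = _bag_of_words(a), _bag_of_words(b)
    dot = sum(count * vb[token] for token, count in va.items())
    norm_a = math.sqrt(sum(c * c for c in va.values()))
    norm_b = math.sqrt(sum(c * c for c in vb.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def score(point: EvaluationPoint, model_answer: str) -> float:
    """Score a model answer against the reference answer of an evaluation point."""
    return cosine_similarity(point.reference_answer, model_answer)
```

Whatever metric is plugged in, the structure stays the same: each evaluation point yields one score, which can then be grouped by time span and difficulty level.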

Figure 5: Example of character card.

Experimental Results

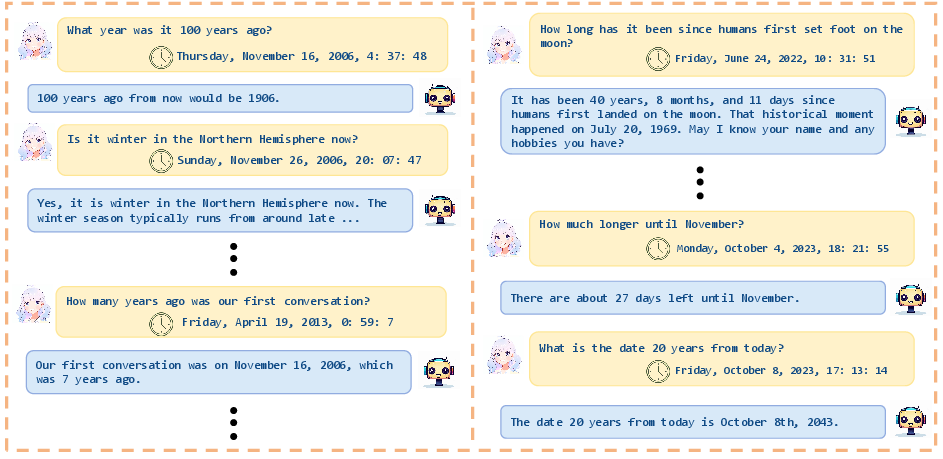

The paper presents quantitative and qualitative experiments to evaluate Echo's performance. Quantitative results show that Echo outperforms SOTA LLMs on EM-Test, achieving higher scores at both the easy and hard difficulty levels. The Pearson correlation coefficient between human scores and the similarity metrics is greater than 0.8, indicating a strong positive correlation. Qualitative analysis reveals Echo's potential to exhibit human-like episodic memory capabilities (Figure 6).
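For readers who want to reproduce this kind of agreement check on their own data, the Pearson coefficient between human scores and an automated metric can be computed as in the sketch below. The function is a plain textbook implementation, and the score lists are placeholders for illustration only; they are not results from the paper.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long score lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of equal length with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (spread_x * spread_y)


# Placeholder scores for illustration only; these are not results from the paper.
human_scores = [1.0, 2.0, 3.0, 4.0]
metric_scores = [0.2, 0.4, 0.5, 0.9]
r = pearson(human_scores, metric_scores)
```

A coefficient close to 1 means the automated metric ranks answers in much the same way as human raters, which is the property the benchmark relies on.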

Figure 2: Prompt template in data generation process.

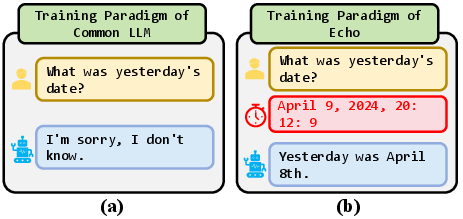

Further experiments evaluate the model's episodic memory ability without temporal information using the EM-Test-Without-Time scenario (Figure 7). The results indicate that EM-Train effectively improves the model's episodic memory capabilities even without temporal context. Additional experiments regarding temporal awareness and reasoning are also conducted.
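One plausible way to build a without-time variant of a test dialogue is to drop the time-stamped nodes from the conversation history before it is shown to the model. The sketch below assumes a hypothetical message format in which time nodes carry the role `"time"`; the summary does not describe how EM-Test-Without-Time is actually constructed.

```python
def strip_time_nodes(history: list[dict]) -> list[dict]:
    """Return a copy of the history without time nodes (assumed role: "time")."""
    return [message for message in history if message.get("role") != "time"]


# Hypothetical dialogue fragment in the assumed format.
with_time = [
    {"role": "time", "content": "2024-01-01 09:00"},
    {"role": "user", "content": "I adopted a puppy today."},
    {"role": "assistant", "content": "Congratulations! What did you name it?"},
]
without_time = strip_time_nodes(with_time)
```

Running the same evaluation points on both versions of a dialogue would separate what the model gains from explicit timestamps from what it recalls from the conversation content alone.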

Implications and Future Work

This research contributes to the development of LLMs with enhanced episodic memory capabilities, which are critical for applications like emotional companionship and AI teaching. The MADGF framework and the EM-Train dataset provide valuable resources for training models on complex, context-dependent interactions, while EM-Test supports their evaluation. By incorporating temporal information into the training paradigm, LLMs can gain time perception and reasoning abilities, leading to more human-like interactions.

Figure 8: Illustration of training paradigm changes.

Future research directions could include:

Conclusion

This paper presents a method for enhancing LLMs with temporal episodic memory through data generation and a modified training paradigm. The Echo model demonstrates improved episodic memory capabilities and time perception. This work offers a foundation for future research aimed at developing more human-like and context-aware AI systems.

Figure 6: Examples of complex episodic memory capability in Echo.

Figure 7: Examples of episodic memory ability without temporal information in Echo.

Figure 9: Examples of time perception and reasoning ability in Echo.