Evaluating Long-Term Interactive Memory in Chat Assistants: A Detailed Examination

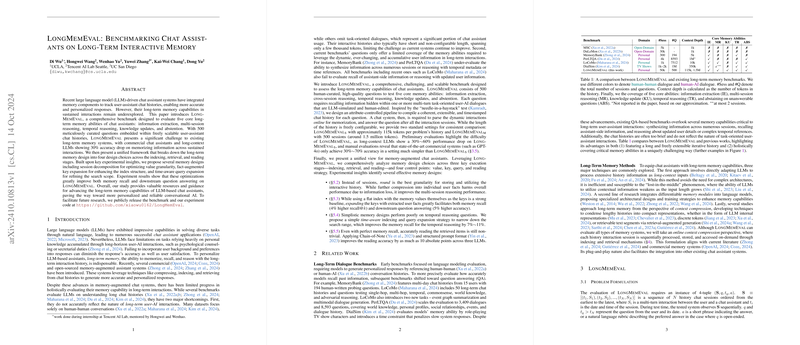

The paper introduces LongMemEval, a benchmark designed to evaluate the long-term memory capabilities of chat assistants. The benchmark assesses five abilities: information extraction, cross-session reasoning, temporal reasoning, knowledge updates, and abstention. Together these represent the core components of the long-term memory desired in conversational AI.

Key Components of the Benchmark

LongMemEval consists of 500 high-quality questions organized around five core memory abilities. The questions are embedded in simulated user-assistant chat histories designed with extensible context lengths. The benchmark provides different configurations, with contexts reaching up to 1.5 million tokens. Preliminary results show a significant performance challenge for current memory systems, with long-context LLMs experiencing as much as a 60% accuracy drop depending on the configuration used.

Notable Findings and Memory System Analysis

Using LongMemEval, the paper identifies significant performance gaps in existing memory-augmented chat assistant systems. Commercial solutions and state-of-the-art LLMs exhibit noticeable deficiencies, especially on tasks that require synthesizing information across multiple sessions or integrating temporal and updated knowledge into the reasoning process.

The evaluation results indicate that the major obstacle for current systems is the unreliable retrieval and integration of long-term information, which is crucial for a personalized user experience. Existing systems often struggle when information changes over time and fail to accurately track and incorporate evolving user knowledge.

Proposed Optimizations for Memory-Augmented Systems

The paper proposes a unified framework for memory-augmented chat assistants, organized into three stages (indexing, retrieval, and reading). Key innovations include:

- Session Decomposition: Storing interactions as rounds rather than sessions to improve granularity and retrieval efficiency.

- Fact-Augmented Key Expansion: Leveraging extracted user facts to enhance indexing, aiding in a more targeted retrieval of memory.

- Time-Aware Query and Retrieval: Introducing a mechanism to use temporal metadata to narrow down the retrieval scope for temporal reasoning questions.

- Advanced Reading Strategies: Using techniques such as Chain-of-Note, which processes the retrieved information step by step, together with structured prompt formats to improve the extraction and reasoning stages.
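
The first two ideas in the list above can be illustrated with a minimal sketch. It is not the paper's implementation: it assumes a round is a user turn plus the assistant replies that follow it, it uses token overlap as a stand-in for a real retriever, and the names `MemoryItem`, `decompose_session`, `build_index`, `retrieve`, and the `extract_facts` callback are hypothetical. In a real system the fact extractor would typically be an LLM call.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Set, Tuple

Turn = Tuple[str, str]  # (role, text); role is "user" or "assistant"


@dataclass
class MemoryItem:
    key: str         # text matched against the query at retrieval time
    value: str       # text handed to the reader model
    session_id: str  # session the round came from


def decompose_session(turns: List[Turn]) -> List[str]:
    """Split a session into rounds: a user turn plus the replies after it."""
    rounds: List[List[str]] = []
    for role, text in turns:
        if role == "user" or not rounds:
            rounds.append([])  # every user turn opens a new round
        rounds[-1].append(f"{role}: {text}")
    return ["\n".join(r) for r in rounds]


def build_index(
    sessions: Dict[str, List[Turn]],
    extract_facts: Callable[[str], List[str]],
) -> List[MemoryItem]:
    """Index each round; the key is the round text expanded with extracted facts."""
    index: List[MemoryItem] = []
    for session_id, turns in sessions.items():
        for round_text in decompose_session(turns):
            facts = extract_facts(round_text)  # assumed: LLM-based in practice
            key = "\n".join([round_text, *facts])
            index.append(MemoryItem(key=key, value=round_text, session_id=session_id))
    return index


def tokens(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(index: List[MemoryItem], query: str, k: int = 1) -> List[MemoryItem]:
    """Rank items by token overlap between the query and the expanded key."""
    q = tokens(query)
    ranked = sorted(index, key=lambda item: len(q & tokens(item.key)), reverse=True)
    return ranked[:k]


# Toy usage with a hand-written fact extractor (purely illustrative).
sessions = {
    "s1": [
        ("user", "I adopted a dog named Biscuit."),
        ("assistant", "Congratulations!"),
        ("user", "Suggest a pasta recipe."),
        ("assistant", "Try carbonara."),
    ]
}


def toy_extract_facts(round_text: str) -> List[str]:
    return ["The user owns a pet."] if "dog" in round_text else []


index = build_index(sessions, toy_extract_facts)
top = retrieve(index, "pet", k=1)
print(top[0].value)
```

Keeping the key separate from the value means the extracted facts influence only which round is matched, while the reader still receives the original round text.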

These developments aim to improve both the effectiveness of long-term memory retrieval and downstream task performance. In the reported experiments, these strategies increase recall by 4% and improve accuracy by up to 11% on temporal reasoning tasks.
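
The time-aware retrieval idea can be sketched in a similar spirit. The example below is a simplified illustration, not the paper's method: it assumes each memory carries a timestamp and that an upstream step (hypothetical here, for instance an LLM reading the question) has already produced a date range for the query. The names `TimedMemory` and `filter_by_time` are illustrative.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional, Tuple


@dataclass
class TimedMemory:
    text: str
    timestamp: date  # when the round or session took place


def filter_by_time(
    memories: List[TimedMemory],
    time_range: Optional[Tuple[date, date]],
) -> List[TimedMemory]:
    """Keep only memories inside the inclusive range; no range means no filtering."""
    if time_range is None:
        return list(memories)
    start, end = time_range
    return [m for m in memories if start <= m.timestamp <= end]


memories = [
    TimedMemory("Booked flights to Lisbon", date(2024, 3, 12)),
    TimedMemory("Started a new job", date(2023, 11, 2)),
    TimedMemory("Renewed passport", date(2024, 4, 1)),
]

# Assumed to come from query analysis, e.g. for "What did I plan in March 2024?"
march_2024 = (date(2024, 3, 1), date(2024, 3, 31))
candidates = filter_by_time(memories, march_2024)
print([m.text for m in candidates])
```

Filtering before ranking shrinks the candidate pool for temporal questions, and passing `None` leaves non-temporal questions unaffected.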

Implications and Future Directions

The research presents a comprehensive benchmark that serves as a tool for evaluating and training AI systems and marks a significant step towards understanding the complex requirements of long-term interactions in conversational applications. By providing holistic coverage of memory capabilities, LongMemEval facilitates the development and testing of more advanced AI systems equipped to handle personalized conversation over extended periods.

The findings and innovations in this paper underline the need for continued work on efficient memory mechanisms that can maintain user context over long periods, and they motivate new lines of research in scalable memory architectures and integration strategies. The long-term goal is highly personalized, context-aware, and memory-efficient conversational agents that operate reliably in dynamic real-world scenarios. The public release of the benchmark should foster further progress towards conversational AI with robust long-term memory.