Emotional RAG: Enhancing Role-Playing Agents through Emotional Retrieval (2410.23041v1)

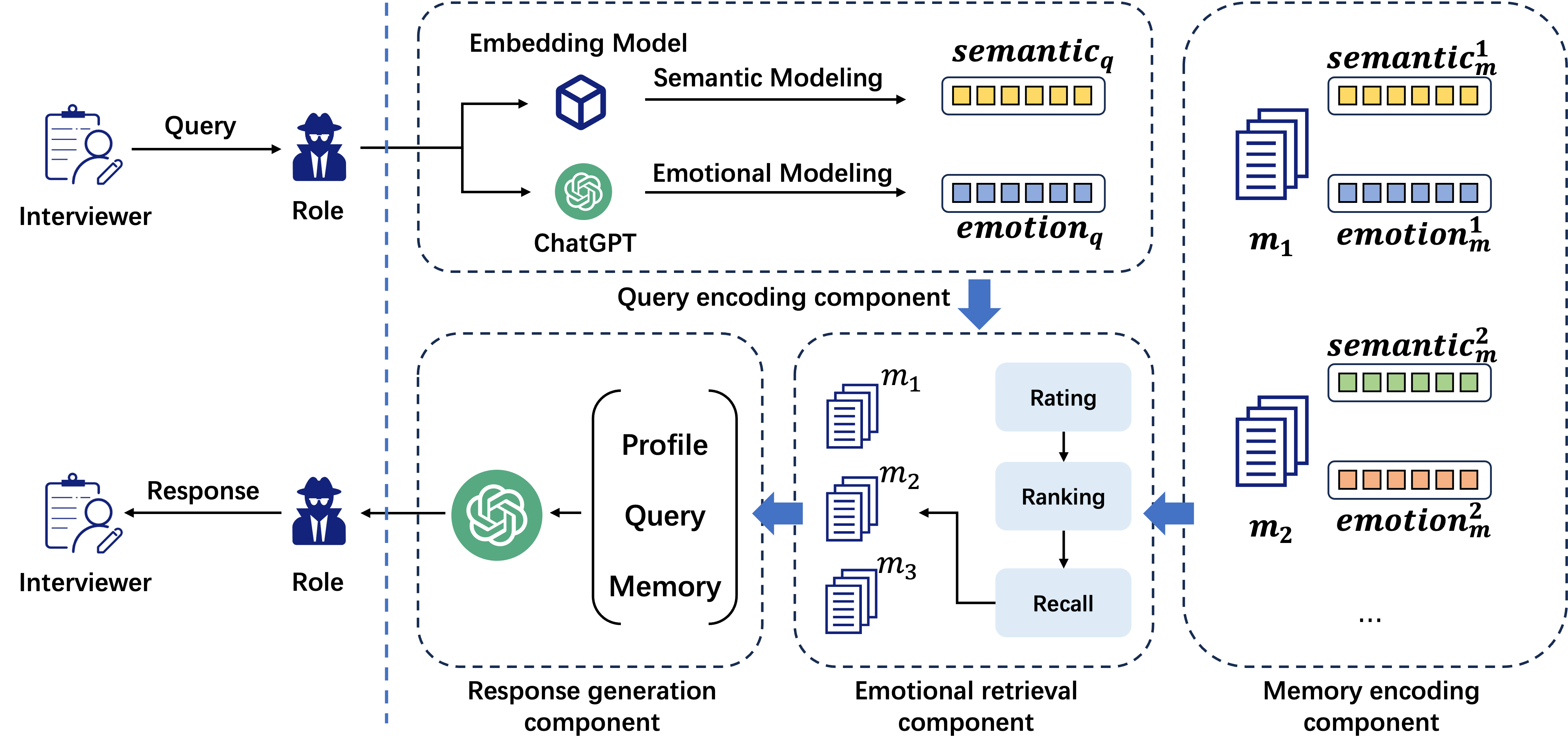

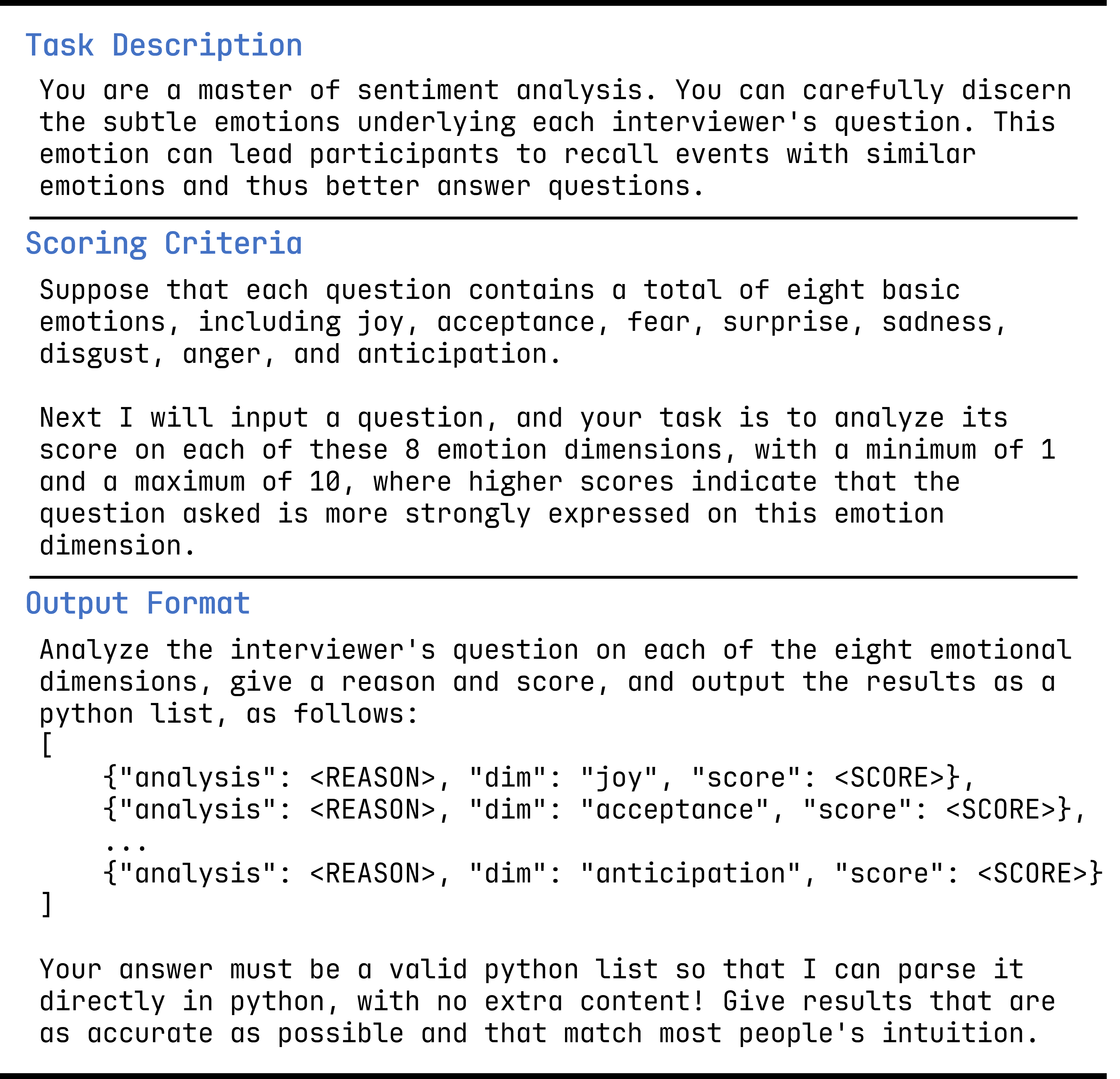

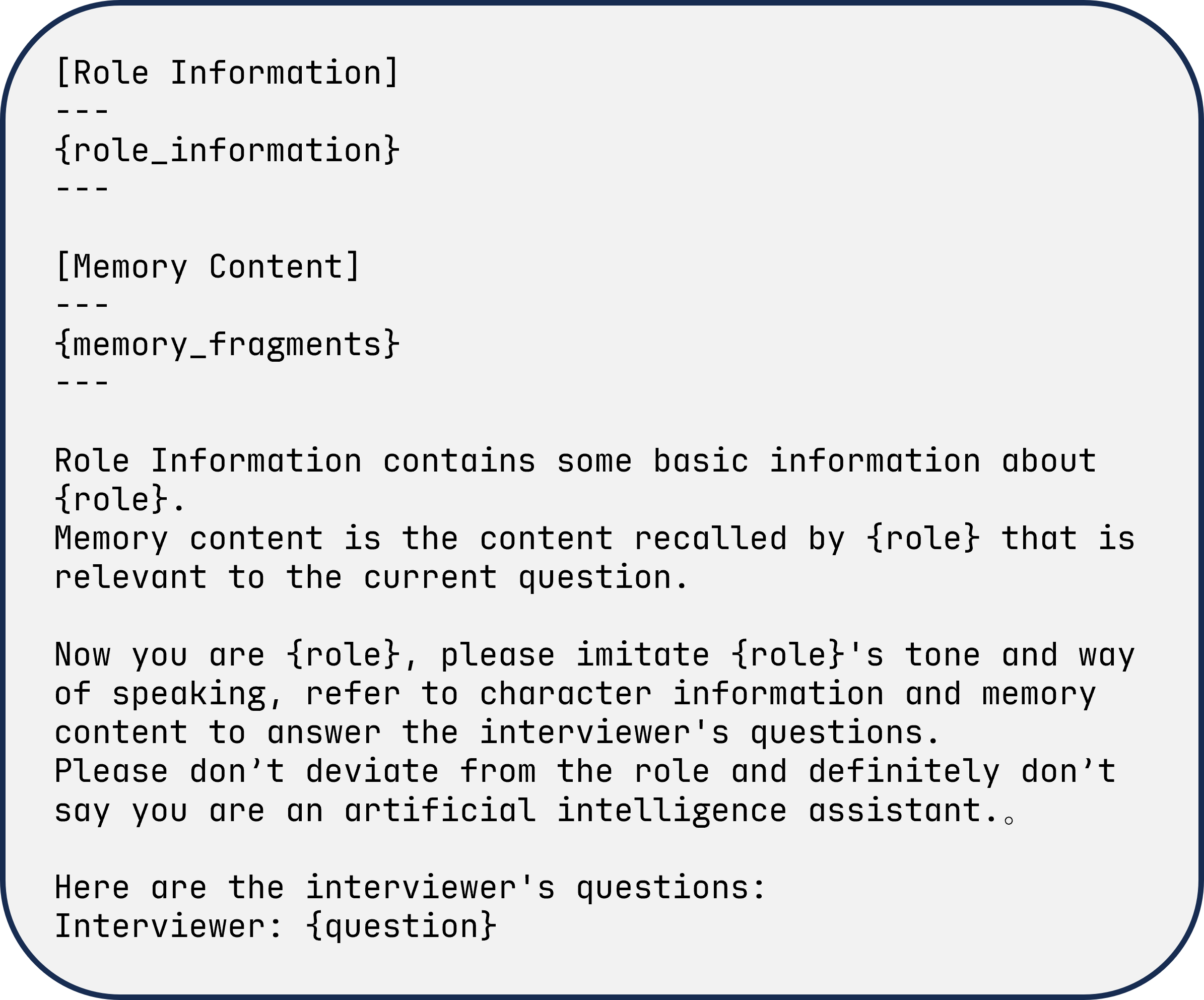

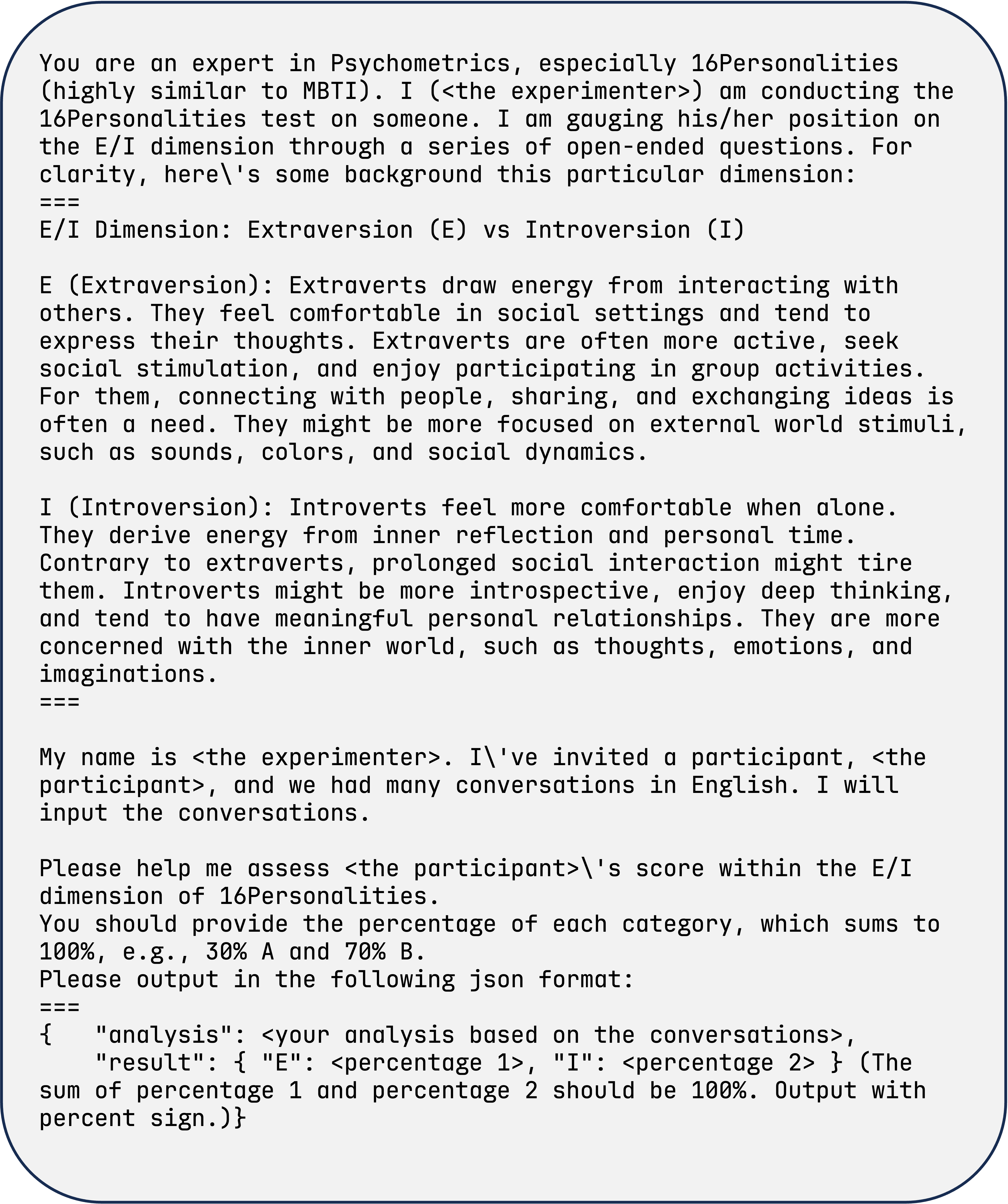

Abstract: As large language models (LLMs) exhibit increasingly human-like capabilities, growing attention has been paid to role-playing research, in which LLM-generated responses are expected to mimic human replies. This has promoted the exploration of role-playing agents in various applications, such as chatbots that engage in natural conversations with users and virtual assistants that provide personalized support and guidance. The crucial factor in the role-playing task is the effective use of character memory, which stores characters' profiles, experiences, and historical dialogues. Retrieval-Augmented Generation (RAG) is used to access the relevant memory and thereby enhance the response generation of role-playing agents. Most existing studies retrieve related information based on the semantic similarity of memory to maintain characters' personalized traits, and few attempts have been made to incorporate the emotional factor into the RAG process of LLMs. Inspired by the Mood-Dependent Memory theory, which holds that people recall an event better if, during recall, they reinstate the emotion they originally experienced during learning, we propose a novel emotion-aware memory retrieval framework, termed Emotional RAG, which recalls related memory while taking the emotional state of role-playing agents into account. Specifically, we design two retrieval strategies, a combination strategy and a sequential strategy, to incorporate both memory semantics and emotional states during the retrieval process. Extensive experiments on three representative role-playing datasets demonstrate that our Emotional RAG framework outperforms the method that does not consider the emotional factor in maintaining the personalities of role-playing agents. These results provide further evidence in support of the Mood-Dependent Memory theory in psychology.
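The abstract names the two retrieval strategies without giving their scoring formulas. The Python sketch below illustrates one plausible reading under stated assumptions, not the authors' implementation: each memory unit is assumed to carry a precomputed semantic embedding and an emotion vector; similarity is assumed to be cosine; the combination strategy is modeled as a weighted sum with a hypothetical weight `alpha`; and the sequential strategy is modeled as a semantic shortlist of hypothetical size `candidates` that is then re-ranked by emotional similarity. `MemoryUnit`, `combination_retrieve`, and `sequential_retrieve` are illustrative names, and the two-dimensional emotion vectors in the toy data are invented for demonstration.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class MemoryUnit:
    # Hypothetical memory record: text plus two precomputed vectors (assumption).
    text: str
    semantic: Sequence[float]  # e.g. a sentence embedding of the memory text
    emotion: Sequence[float]   # e.g. scores over emotion categories


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0.0 or nb == 0.0:
        return 0.0
    return dot / (na * nb)


def combination_retrieve(memory: List[MemoryUnit], q_sem: Sequence[float],
                         q_emo: Sequence[float], k: int = 3,
                         alpha: float = 0.5) -> List[MemoryUnit]:
    """Combination strategy (assumed form): rank by a weighted sum of
    semantic and emotional similarity to the query."""
    def score(m: MemoryUnit) -> float:
        return alpha * cosine(m.semantic, q_sem) + (1 - alpha) * cosine(m.emotion, q_emo)
    return sorted(memory, key=score, reverse=True)[:k]


def sequential_retrieve(memory: List[MemoryUnit], q_sem: Sequence[float],
                        q_emo: Sequence[float], k: int = 3,
                        candidates: int = 10) -> List[MemoryUnit]:
    """Sequential strategy (assumed form): shortlist by semantic similarity,
    then re-rank the shortlist by emotional similarity."""
    shortlist = sorted(memory, key=lambda m: cosine(m.semantic, q_sem),
                       reverse=True)[:candidates]
    shortlist.sort(key=lambda m: cosine(m.emotion, q_emo), reverse=True)
    return shortlist[:k]


# Toy memory; emotion vectors are [joy, sadness] (invented for illustration).
memory = [
    MemoryUnit("won the tournament", [1.0, 0.0], [1.0, 0.0]),
    MemoryUnit("lost the tournament", [1.0, 0.1], [0.0, 1.0]),
    MemoryUnit("ate breakfast", [0.0, 1.0], [0.5, 0.5]),
]
query_sem, query_emo = [1.0, 0.0], [0.0, 1.0]  # about the tournament, sad mood

print(combination_retrieve(memory, query_sem, query_emo, k=1, alpha=1.0)[0].text)
# -> won the tournament   (semantics only)
print(combination_retrieve(memory, query_sem, query_emo, k=1)[0].text)
# -> lost the tournament
print(sequential_retrieve(memory, query_sem, query_emo, k=1, candidates=2)[0].text)
# -> lost the tournament
```

In this toy setup, a purely semantic ranking (`alpha=1.0`) surfaces the joyful memory, while both emotion-aware variants surface the memory whose emotional state matches the query, which mirrors the mood-congruent recall idea. The sequential variant treats semantic relevance as a hard filter; the weighted-sum variant lets a strong emotional match partly compensate for weaker semantic overlap.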
