- The paper finds that AI-enhanced coding tools reduced developer task time by 21% in a rigorously controlled enterprise trial.

- It employs a randomized controlled trial with 96 participants and evaluates tools like code completion, smart paste, and natural language to code.

- The evidence suggests that experienced developers benefit most, highlighting AI’s potential to accelerate software development.

How Much Does AI Impact Development Speed? An Enterprise-Based Randomized Controlled Trial

This paper presents a rigorously conducted randomized controlled trial (RCT) evaluating the impact of AI-enhanced tools on the development speed of software engineers at Google. Conducted with 96 participants, the study aims to quantify how AI features like code completion influence time on task in enterprise-grade software development.

Introduction and Motivation

AI-assisted software development tools continue to gain traction, with products such as GitHub Copilot™ leading adoption. Yet empirical evidence on how much they actually improve productivity remains limited, especially in enterprise environments. Prior studies have reported improvements in task completion speed of up to 56% with tools like Copilot, but results vary with developer experience and task context. This paper addresses these gaps with a randomized controlled trial of Google's proprietary AI features in an enterprise setting.

Research Design

The study used an RCT to minimize bias: participants were randomly assigned either to use specific AI features (experimental group) or to work without them (control group), so that observed differences in time on task can be attributed to AI usage rather than to pre-existing differences between the groups.
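Random assignment is what licenses the causal reading. A minimal sketch of a simple two-arm randomization follows, assuming an even split of the 96 participants; the helper `assign_conditions` and the fixed seed are illustrative, and the study's actual procedure (for example, any stratification) is not described here.

```python
import random

def assign_conditions(participant_ids, seed=0):
    """Randomly split participants into two equal-sized arms (hypothetical helper)."""
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # First half of the shuffled order gets the AI features; the rest is control.
    return {pid: ("ai" if i < half else "control") for i, pid in enumerate(shuffled)}

assignment = assign_conditions(range(96))
n_ai = sum(1 for arm in assignment.values() if arm == "ai")
print(n_ai, len(assignment) - n_ai)  # 48 48
```

Because every participant has the same chance of landing in either arm, traits such as seniority are balanced in expectation rather than by construction.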

Figure 1: Study design: Activities and randomization.

Participants engaged in a coding task designed to mirror typical enterprise-grade development work, including code writing, editing, and testing. The task involved implementing a service to log messages on Google's internal infrastructure, a task requiring both comprehension and coding expertise.

The AI tools tested included:

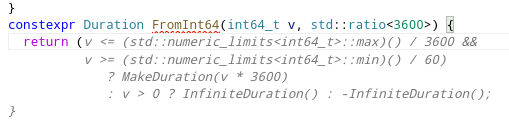

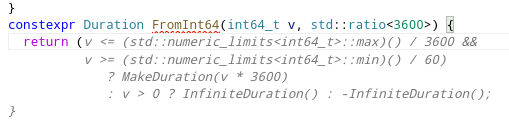

- AI Code Completion: Suggests contextual, multi-line code completions as the developer types.

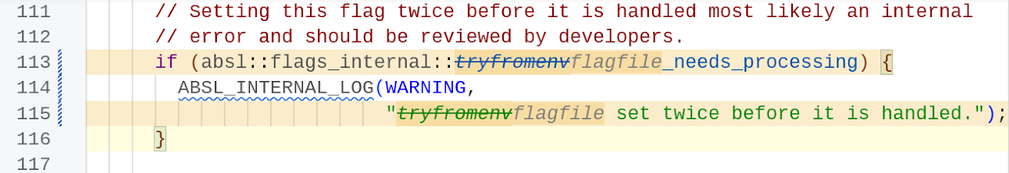

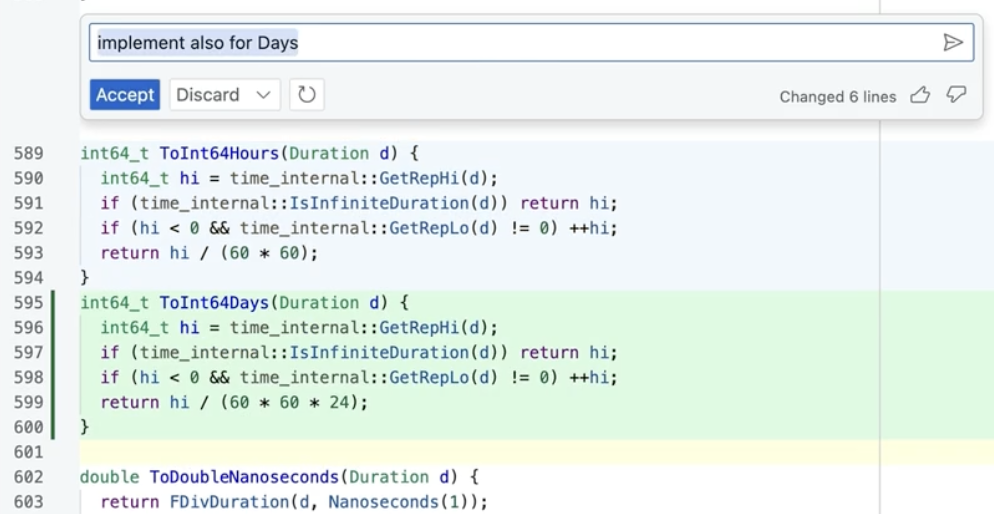

Figure 2: AI Code Completion in Cider V. When a user starts typing code, the feature auto-completes based on context.

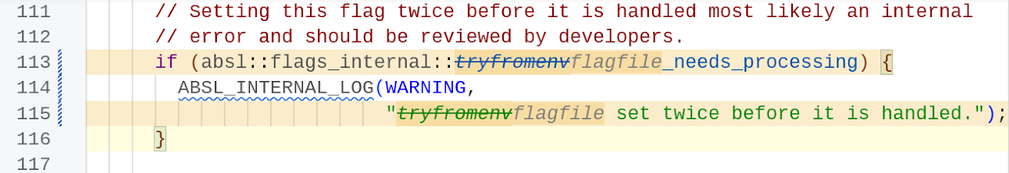

- Smart Paste: Adapts pasted code to its surrounding context, improving consistency with existing code and reducing the manual adjustments needed after pasting.

Figure 3: Smart Paste feature in Cider V showing automatic code adjustments upon pasting.

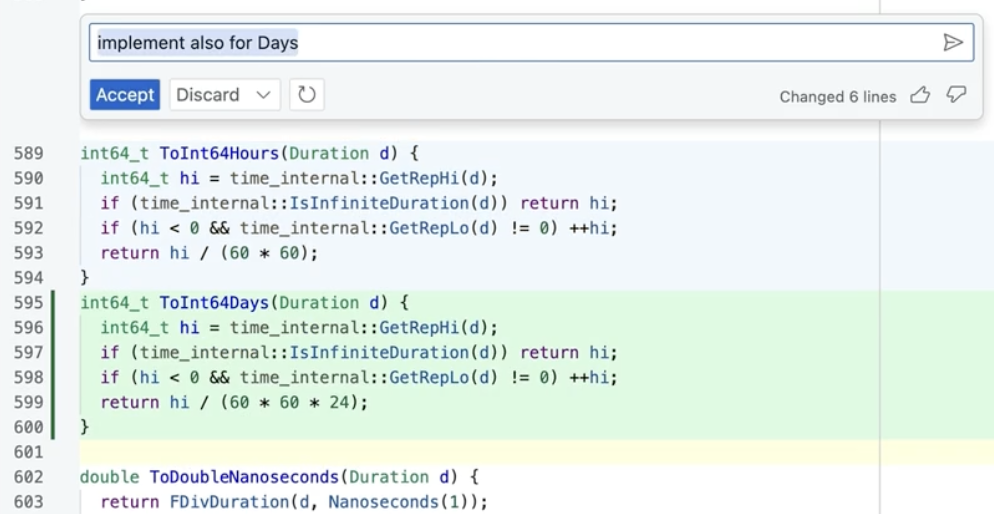

- Natural Language to Code: Converts natural language queries into code suggestions, helping developers write new code from a description.

Figure 4: Natural language to code feature in Cider V translating user queries into code suggestions.

These tools were integrated into Google's Cider V, a customized IDE based on Microsoft's VS Code.

Results and Analysis

The primary hypothesis (H1) posited that AI tools would reduce time on task. Results indicated a 21% reduction in task completion time for developers using AI. This effect is smaller than the 56% reduction reported by Peng et al., but closer to the 26% productivity increase that Cui et al. reported for AI use in enterprise settings.
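The headline figure is a relative reduction in mean time on task. The sketch below shows that arithmetic on made-up times (chosen so the result lands on 21%; they are not study data), together with the equivalent throughput gain, since a time reduction and a productivity increase sit on different scales: taking 21% less time per task means completing about 1/0.79 ≈ 1.27 times as many tasks in the same time. The function names are hypothetical, and the paper's own estimate may come from a regression model rather than raw means.

```python
from statistics import mean

def percent_time_reduction(control_minutes, treatment_minutes):
    """Relative reduction in mean time on task, as a percentage of the control mean."""
    c, t = mean(control_minutes), mean(treatment_minutes)
    return 100 * (c - t) / c

def equivalent_throughput_gain(control_minutes, treatment_minutes):
    """Percentage increase in tasks completed per unit time implied by the mean times."""
    c, t = mean(control_minutes), mean(treatment_minutes)
    return 100 * (c / t - 1)

# Hypothetical times in minutes, NOT data from the study.
control = [90, 110, 100, 95, 105]  # mean 100
with_ai = [70, 88, 79, 75, 83]     # mean 79

print(round(percent_time_reduction(control, with_ai), 1))      # 21.0
print(round(equivalent_throughput_gain(control, with_ai), 1))  # 26.6
```

On the throughput scale, a 21% time reduction corresponds to roughly a 27% gain, though Cui et al. measured a different outcome, so the comparison is only indicative.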

Figure 5: Box and whisker plot of time spent on task by experimental condition.

Senior developers and those who spend more hours per day coding appeared to benefit more from AI, suggesting that experience may amplify the tools' utility. No significant task-level interaction effects were found, suggesting that the tools' benefit did not depend strongly on task-specific domain expertise.
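An interaction effect asks whether the AI benefit differs between subgroups. For a 2x2 split (condition by seniority), the simplest point estimate is a difference in differences of group means, sketched below on hypothetical times. The "senior"/"junior" labels and the helper are illustrative assumptions; a real analysis would typically fit a regression model with an interaction term (treating hours coding per day as a continuous moderator) and test its significance, which this sketch does not do.

```python
from statistics import mean

def interaction_contrast(times):
    """Difference in differences of mean time on task for a 2x2 design.

    `times` maps (condition, group) -> list of minutes, where condition is
    "ai" or "control" and group is "senior" or "junior" (hypothetical labels).
    A negative result means the AI time saving is larger for seniors.
    """
    def effect(group):
        # AI mean minus control mean within one group (negative = time saved).
        return mean(times[("ai", group)]) - mean(times[("control", group)])
    return effect("senior") - effect("junior")

# Hypothetical times in minutes, NOT data from the study.
times = {
    ("control", "senior"): [100, 90],
    ("ai", "senior"): [70, 74],
    ("control", "junior"): [110, 100],
    ("ai", "junior"): [95, 93],
}
print(interaction_contrast(times))  # -12
```

Here AI saves 23 minutes for seniors and 11 for juniors, so the contrast of -12 minutes indicates a larger benefit for the senior group; whether such a gap is statistically significant requires a proper model.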

Implications

The findings suggest that AI tools can shorten time on task, especially for experienced developers, and that enterprises adopting such tools may see meaningful productivity gains. However, because the technology evolves quickly, continued iteration and longitudinal studies are warranted to track further improvements and to address equity concerns.

Conclusion

While AI-enhanced tools measurably accelerated development in this trial, the interplay of user expertise, task complexity, and tool sophistication calls for ongoing research. Future work should explore personalization to meet individual users' needs and to ensure equitable benefits across skill levels. Continued investment in empirically validated AI tools could yield sustained gains in software engineering productivity.