- The paper finds that experienced developers take 19% longer to complete tasks when they are allowed to use AI tools, contradicting forecasts of a speedup.

- It employs an RCT with 16 experienced developers on 246 tasks using tools like Cursor Pro and Claude, with data from screen recordings and forecasts.

- The study highlights that factors such as AI latency, high repository complexity, and over-optimism contribute to the productivity gap.

AI Slowdown in Open-Source Development

This essay synthesizes the findings of "Measuring the Impact of Early-2025 AI on Experienced Open-Source Developer Productivity" (2507.09089), which uses a randomized controlled trial (RCT) to measure how AI tools affect the productivity of experienced open-source developers. Contrary to expectations, the RCT finds that developers work more slowly when AI tools are allowed.

Research Methodology

The RCT involved 16 experienced developers completing 246 tasks on open-source repositories with which they had significant prior experience. The tasks were randomly assigned to either an AI-allowed or AI-disallowed condition. The developers primarily used Cursor Pro, a code editor with integrated AI features, and Claude 3.5/3.7 Sonnet. The paper collected data through screen recordings, developer surveys, and expert forecasts.
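The assignment step described above can be sketched as follows. This is a minimal illustration, not the study's actual procedure: it assumes an independent 50/50 draw per issue, and the `Issue` class, `assign_conditions` function, issue identifiers, and forecast values are all hypothetical. The one property it tries to capture is that an issue is defined and forecast before it receives a condition.

```python
import random
from dataclasses import dataclass
from typing import List, Optional

CONDITIONS = ("ai_allowed", "ai_disallowed")


@dataclass
class Issue:
    """A task that is defined, with forecasts recorded, before assignment."""
    issue_id: str
    forecast_minutes_with_ai: float
    forecast_minutes_without_ai: float
    condition: Optional[str] = None  # filled in only by assign_conditions


def assign_conditions(issues: List[Issue], seed: int) -> List[Issue]:
    """Assign each issue to a condition with an independent fair coin flip.

    Assumption: a simple 50/50 draw per issue; the study's exact
    randomization procedure may differ.
    """
    rng = random.Random(seed)  # seeded so the assignment is reproducible
    for issue in issues:
        if issue.condition is not None:
            raise ValueError(f"{issue.issue_id} already has a condition")
        issue.condition = rng.choice(CONDITIONS)
    return issues


# Hypothetical issues; identifiers and minutes are made up for illustration.
issues = assign_conditions(
    [
        Issue("issue-1", forecast_minutes_with_ai=45, forecast_minutes_without_ai=60),
        Issue("issue-2", forecast_minutes_with_ai=90, forecast_minutes_without_ai=120),
        Issue("issue-3", forecast_minutes_with_ai=30, forecast_minutes_without_ai=30),
    ],
    seed=2025,
)
for issue in issues:
    print(issue.issue_id, issue.condition)
```

Because the forecasts are stored on the issue before `condition` is set, they cannot be influenced by which condition the issue ends up in.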

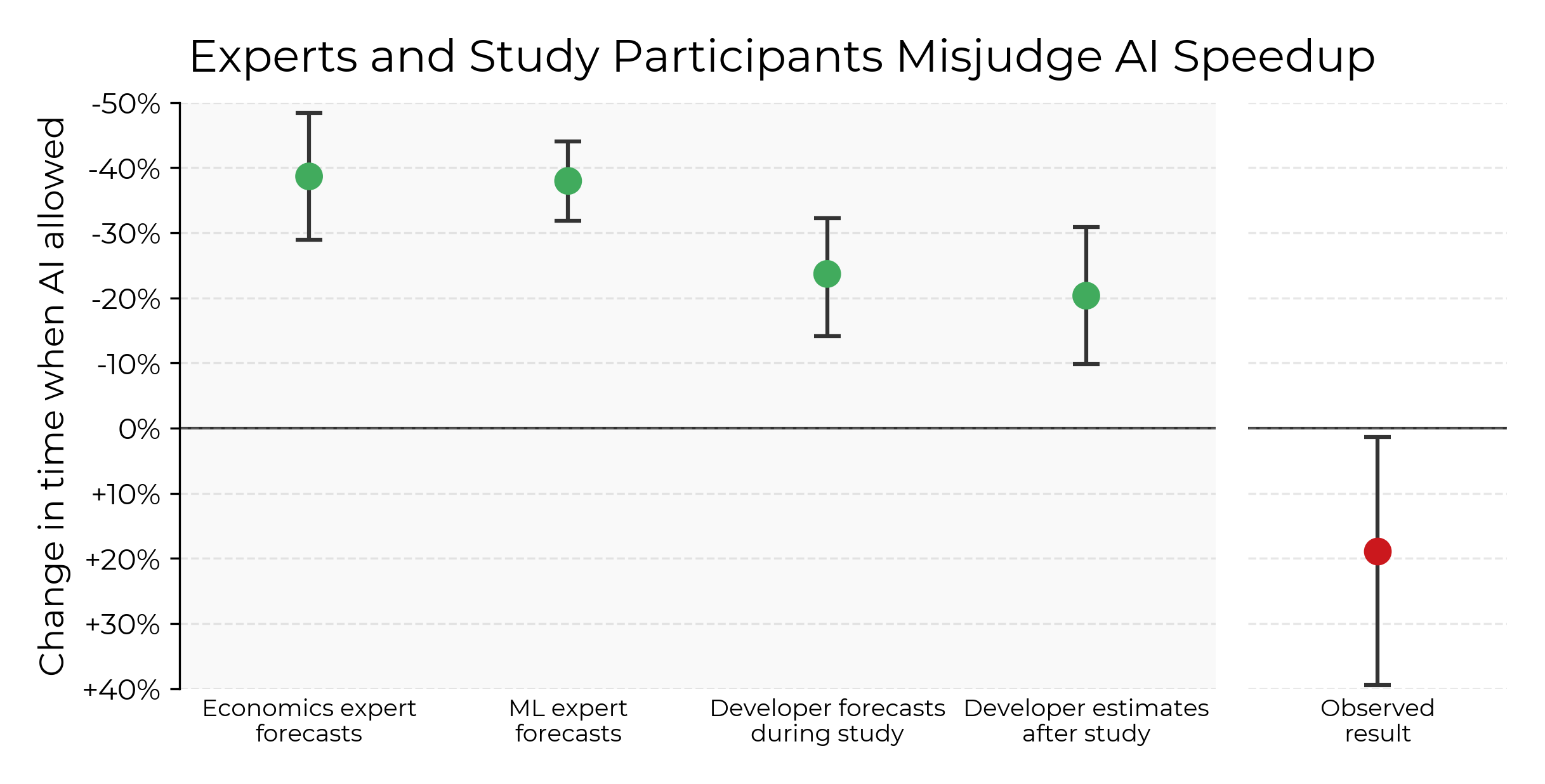

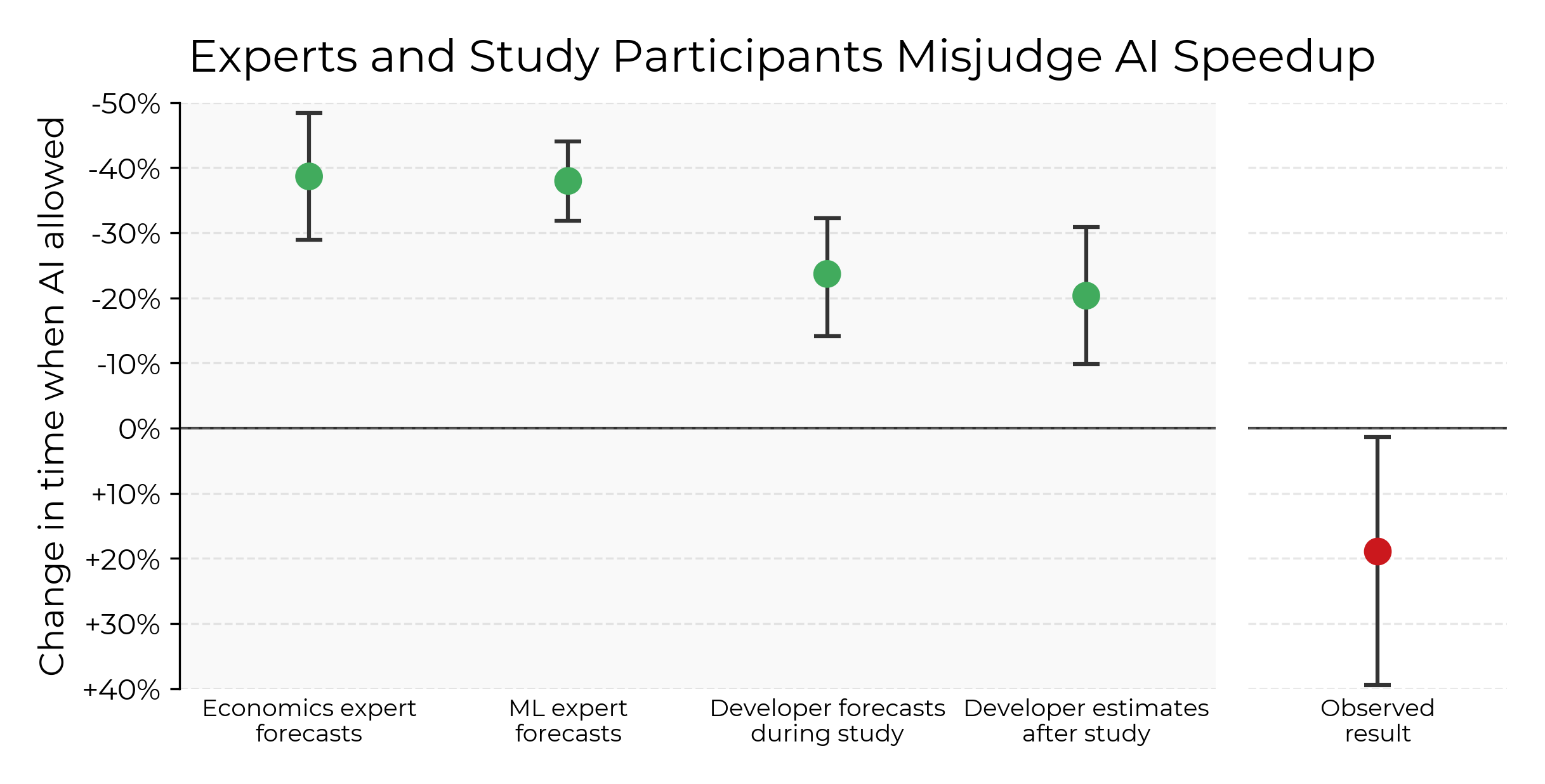

Figure 1: Experts and study participants (experienced open-source contributors) substantially overestimate how much AI assistance will speed up developers: tasks take 19% more time when study participants can use AI tools like Cursor Pro.

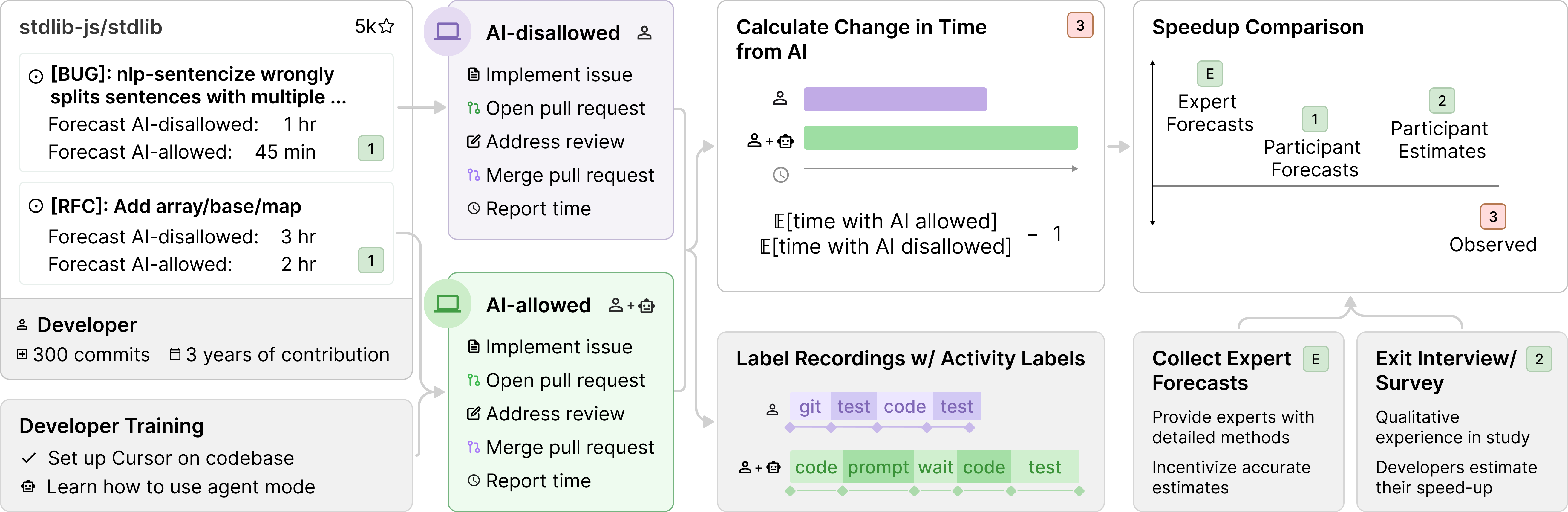

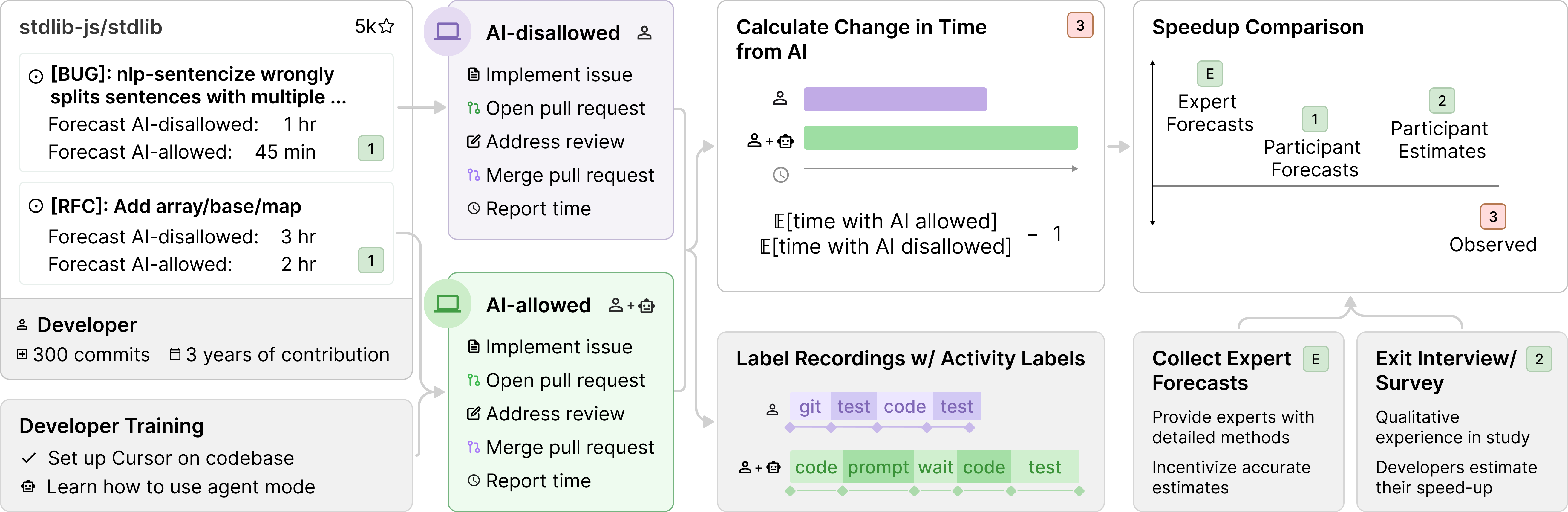

The experimental design (Figure 2) ensured that tasks were defined before treatment assignment, and screen recordings were used to verify compliance and gather data for analysis. Developers forecast how long each task would take with and without AI, and experts in economics and machine learning forecast the effect of AI, providing a basis for comparing expectations against observed results.

Figure 2: Our experimental design. Tasks (referred to as issues) are defined before treatment assignment, screen recordings let us verify compliance (and provide a rich source of data for analysis), and forecasts from experts and developers help us measure the gap between expectations and observed results.

Key Findings

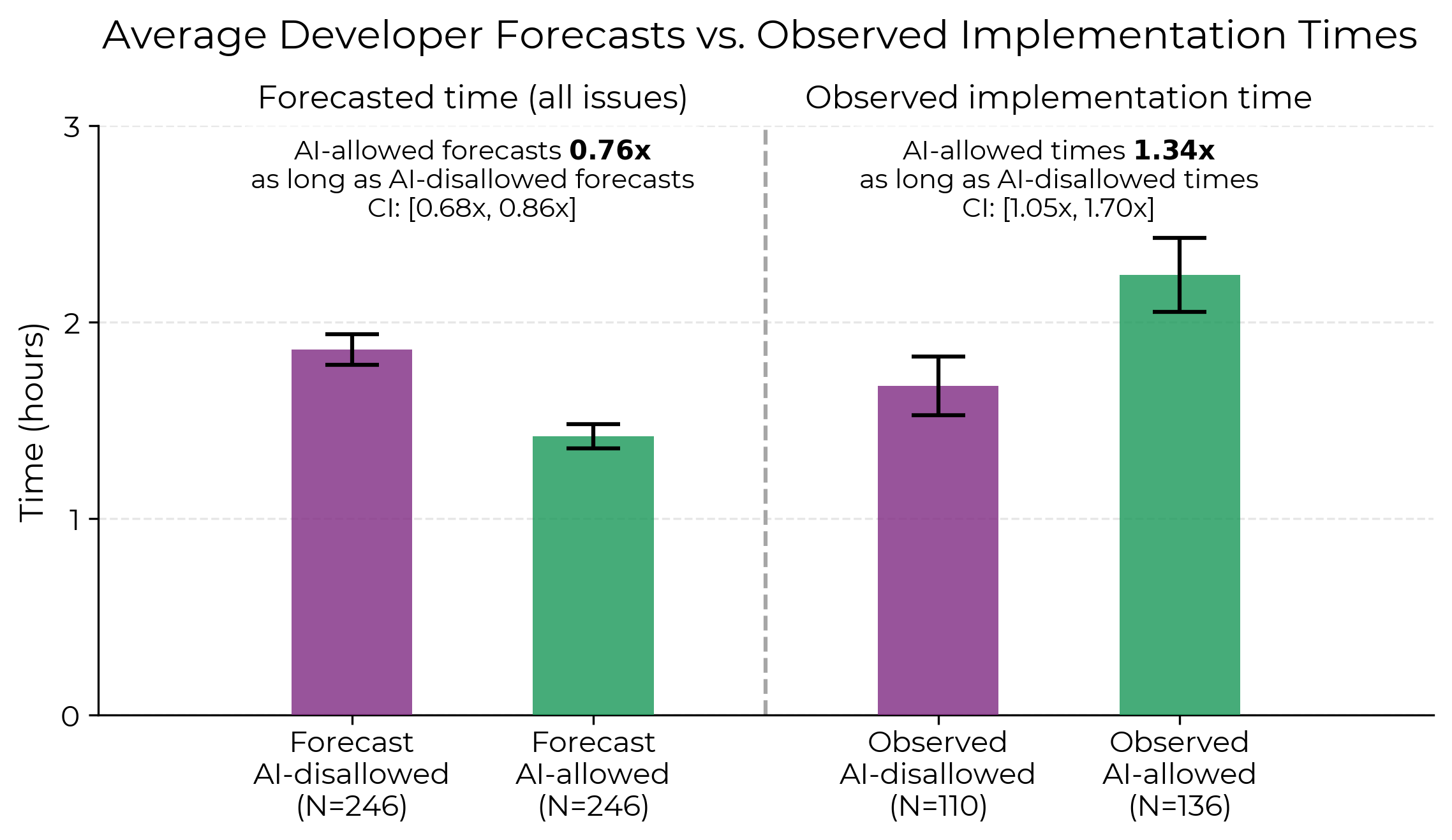

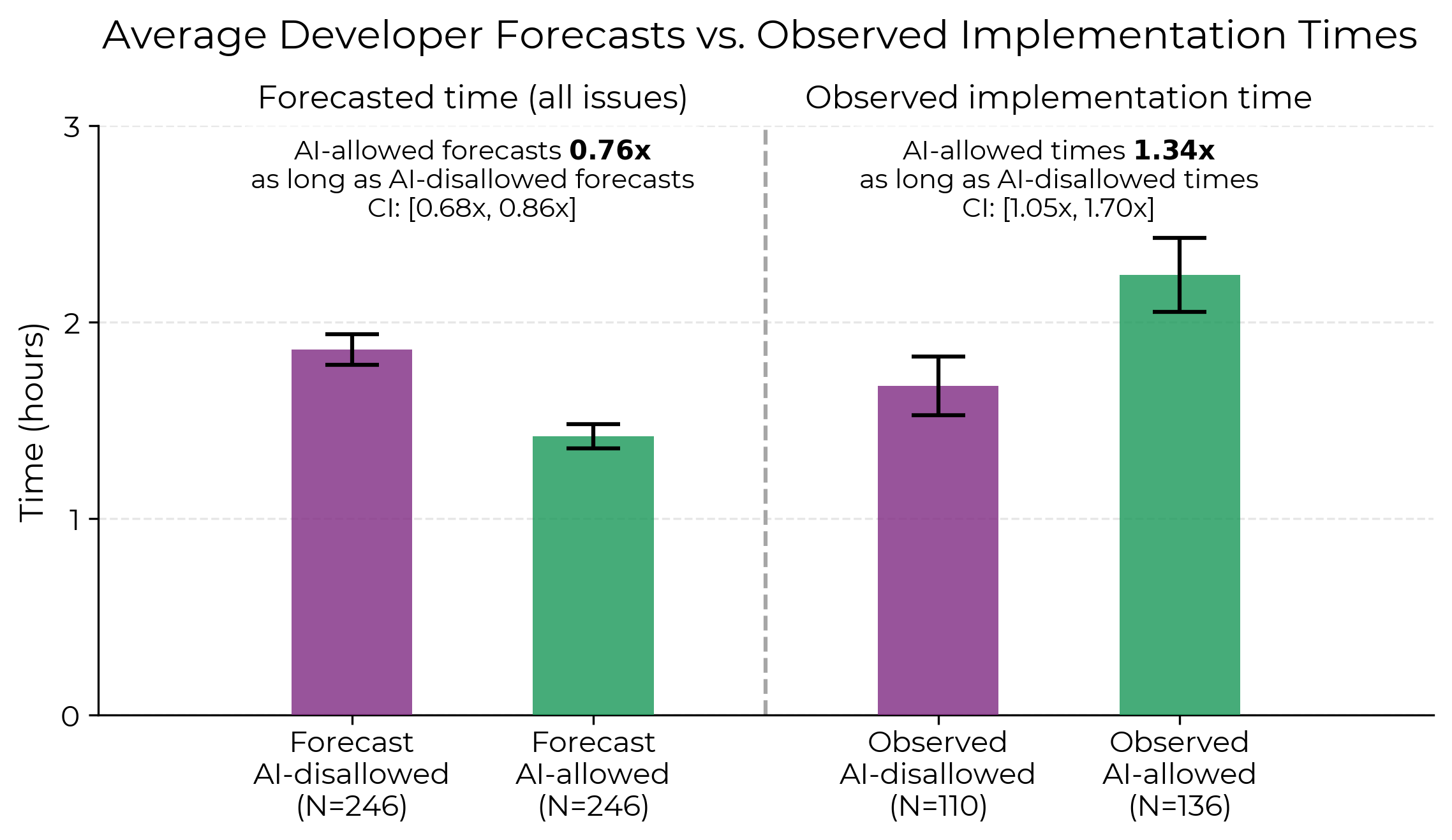

Contrary to expectations, the paper found that allowing AI increased task completion time by 19%. This slowdown contradicted both developer and expert forecasts, which predicted significant speedups. Before starting, developers forecast a 24% reduction in completion time with AI, while experts predicted reductions of 39% (economics) and 38% (ML). Even after completing the study, developers estimated that AI had reduced their completion time by 20%.

Figure 3: Left: Raw average forecasted implementation times. Right: Raw average observed implementation times. The ratio of observed implementation times gives a more extreme slowdown estimate than the paper's regression-based estimates because AI-allowed issues are forecasted (importantly, before treatment assignment) by developers to take slightly longer, which the regression corrects for. Both: the paper separately describes confidence intervals around ratios of average times.
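To see why a raw ratio of observed times can overstate the slowdown, consider the sketch below. It is not the paper's regression. It swaps in a much simpler ratio-of-ratios adjustment that divides the raw ratio by the ratio of pre-assignment forecasts. All numbers and function names are hypothetical.

```python
from statistics import mean

# Hypothetical per-issue data in minutes; not taken from the paper.
# Each tuple is (forecast made before assignment, observed time).
ai_allowed = [(60, 80), (120, 150), (90, 100)]
ai_disallowed = [(50, 55), (100, 110), (90, 95)]


def raw_ratio(treated, control):
    """Ratio of average observed times: AI-allowed over AI-disallowed."""
    return mean(obs for _, obs in treated) / mean(obs for _, obs in control)


def forecast_ratio(treated, control):
    """Ratio of average forecasted times, measuring pre-existing imbalance."""
    return mean(fc for fc, _ in treated) / mean(fc for fc, _ in control)


def adjusted_ratio(treated, control):
    """Raw ratio divided by the forecast ratio.

    Assumption: a simplified stand-in for a regression adjustment,
    used here only to show the direction of the correction.
    """
    return raw_ratio(treated, control) / forecast_ratio(treated, control)


print(f"raw ratio:      {raw_ratio(ai_allowed, ai_disallowed):.3f}")
print(f"forecast ratio: {forecast_ratio(ai_allowed, ai_disallowed):.3f}")
print(f"adjusted ratio: {adjusted_ratio(ai_allowed, ai_disallowed):.3f}")
```

In this made-up data the AI-allowed issues were forecast to take longer even before assignment, so part of the raw gap reflects task difficulty rather than the effect of AI. Dividing out the forecast ratio shrinks the estimated slowdown.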

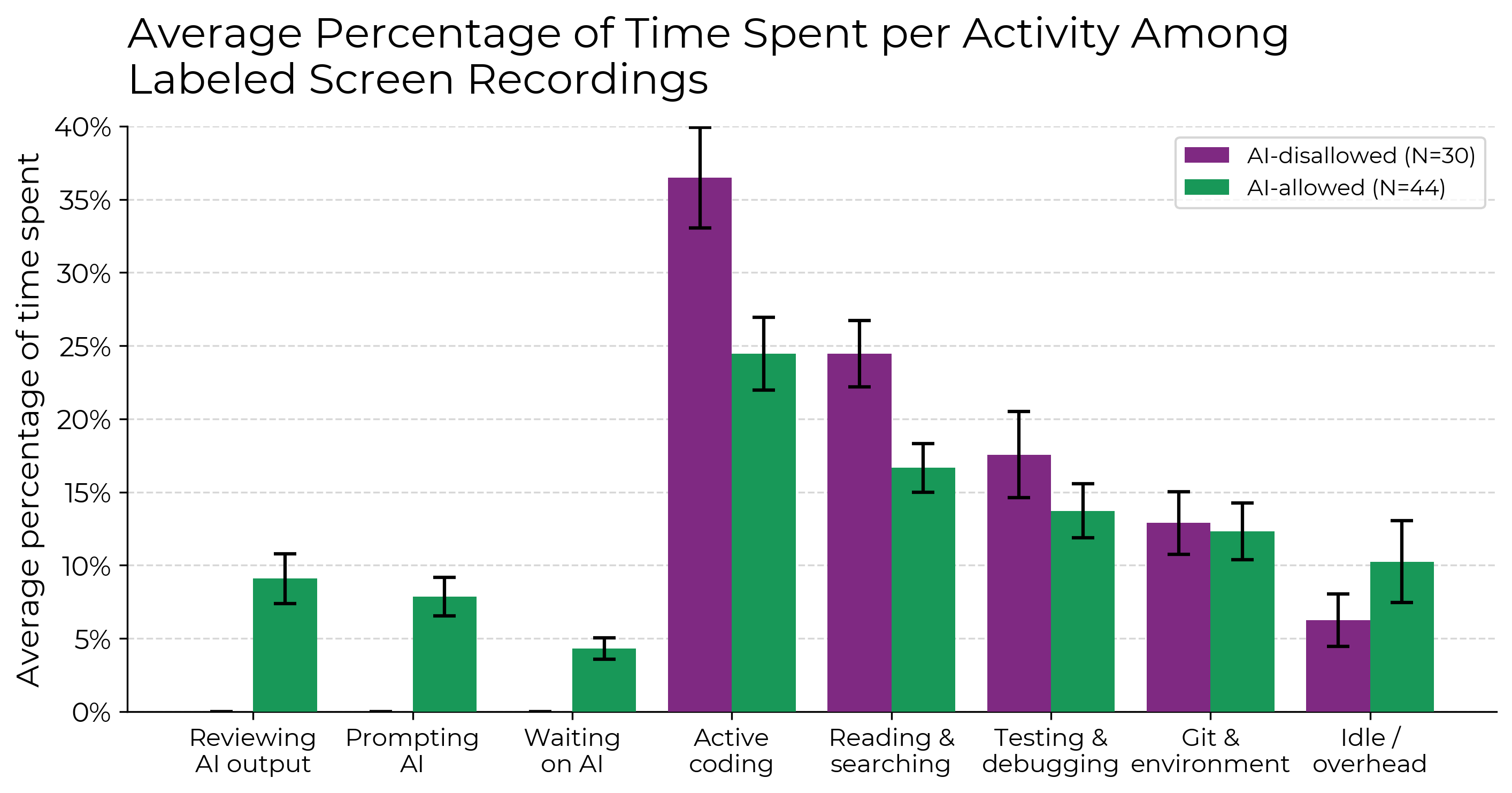

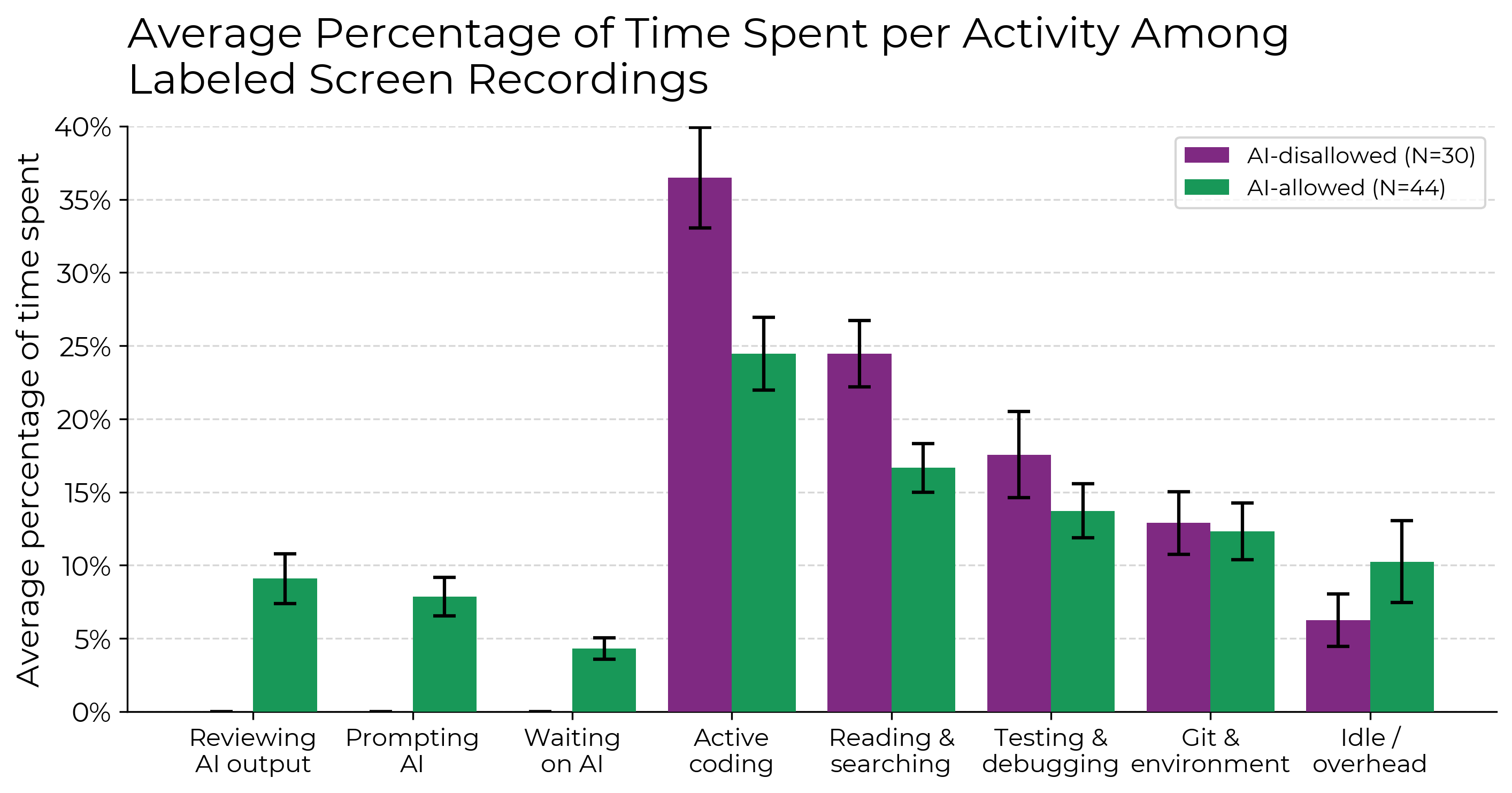

Analysis of screen recordings (Figure 4) revealed that developers spent less time actively coding and searching for information when using AI. Instead, they allocated more time to prompting AI, waiting for AI outputs, and reviewing AI-generated code.

Figure 4: On the subset of labeled screen recordings, when AI is allowed, developers spend less time actively coding and searching for/reading information, and instead spend time prompting AI, waiting on and reviewing AI outputs, and idle. The paper also reports the absolute (average) minutes spent in each category and presents these results broken down into 27 fine-grained categories.
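The percentage view in Figure 4 can be reproduced in miniature as follows. This sketch assumes each labeled recording is a list of (category, minutes) segments. The category names, minutes, and the `time_share` function are hypothetical and do not reflect the paper's labeling scheme.

```python
from collections import defaultdict


def time_share(segments):
    """Return the percentage of total labeled time spent in each category."""
    totals = defaultdict(float)
    for category, minutes in segments:
        totals[category] += minutes
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("no labeled time to normalize")
    return {category: 100.0 * m / grand_total for category, m in totals.items()}


# Hypothetical labeled segments for one AI-allowed recording, in minutes.
ai_allowed_segments = [
    ("active coding", 20),
    ("prompting AI", 5),
    ("waiting on AI", 5),
    ("reviewing AI output", 10),
    ("active coding", 10),
]

for category, pct in time_share(ai_allowed_segments).items():
    print(f"{category}: {pct:.0f}%")
```

Normalizing to percentages makes the two conditions comparable even when total time differs, which is why the absolute minutes are worth reporting separately.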

Further investigation identified several factors contributing to the slowdown, including over-optimism about AI usefulness, high developer familiarity with the repositories, the complexity of the repositories, low AI reliability, and implicit repository context.

Implications and Discussion

The paper's findings challenge the prevailing narrative of AI-driven productivity gains in software development. The observed slowdown highlights the gap between perceived and actual AI impact, even among experienced developers. The results suggest that AI benchmarks may not accurately reflect real-world performance, and field experiments with robust outcome measures are essential for evaluating AI capabilities.

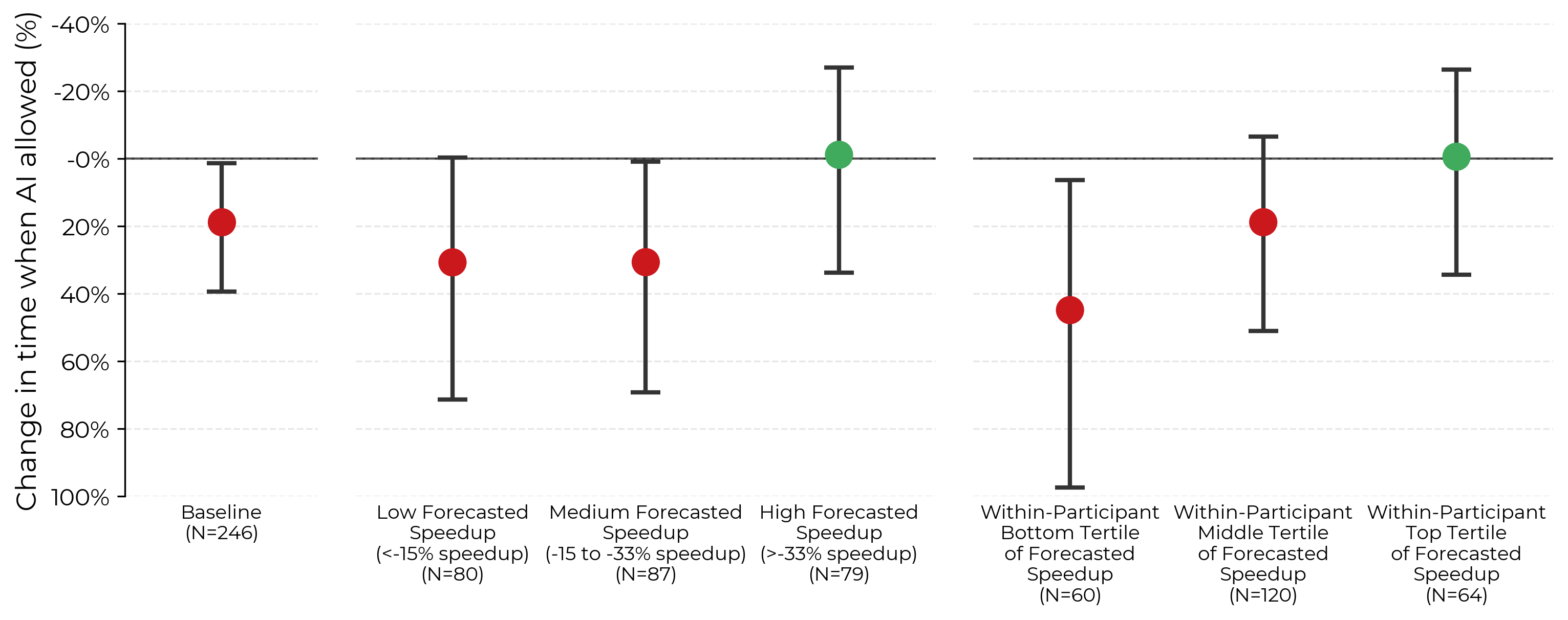

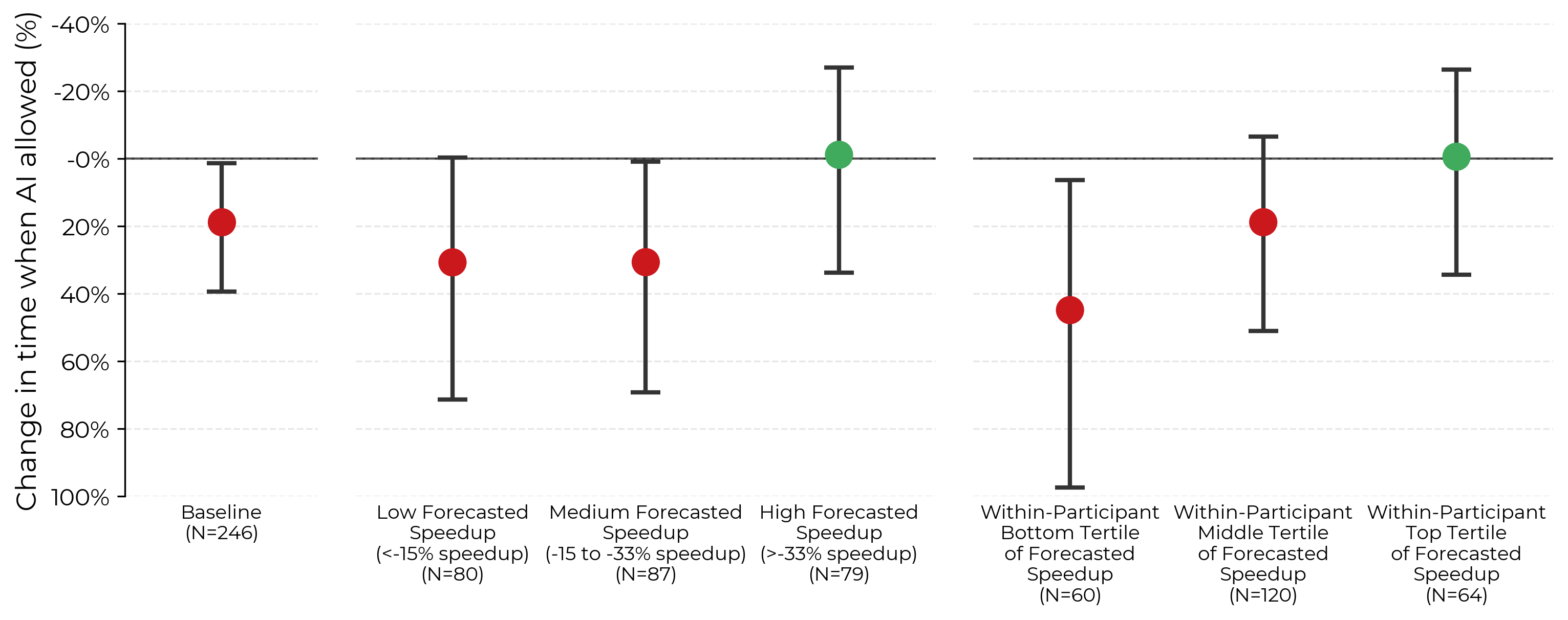

Figure 5: Speedup broken down by forecasted speedup between and within developers (developers forecast how long they expect each issue to take with and without AI). Speedup cutoffs are chosen to make bins approximately similarly sized. Tertiles are imbalanced because forecasted speedup contains duplicates that we assign to a single bin. Developers experience less slowdown on issues that they forecast high speedup. The paper gives details on how heterogeneous treatment effects are estimated.
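The imbalance noted in the caption follows from binning by value rather than by rank. Here is a minimal sketch of one such rule. It assumes cutoffs taken from the sorted values at the one-third and two-thirds positions. The paper's exact cutoff rule is not stated here, and the forecasted speedups below are hypothetical.

```python
from collections import Counter


def tertile_bins(values):
    """Assign each value to bin 0, 1, or 2 using value-based cutoffs.

    Equal values always land in the same bin, so bins can be unequal
    in size when the data contains duplicates.
    """
    ordered = sorted(values)
    n = len(ordered)
    if n < 3:
        raise ValueError("need at least three values to form tertiles")
    low_cut = ordered[n // 3]          # first value of the middle third
    high_cut = ordered[(2 * n) // 3]   # first value of the top third

    def bin_of(value):
        if value < low_cut:
            return 0
        if value < high_cut:
            return 1
        return 2

    return [bin_of(v) for v in values]


# Hypothetical forecasted speedups (forecast time without AI / with AI).
forecasted_speedups = [1.0, 1.2, 1.2, 1.2, 1.2, 1.5, 1.5, 2.0, 3.0]
bins = tertile_bins(forecasted_speedups)
print(Counter(bins))
```

With nine values a rank-based split would give three bins of three. Here the four tied forecasts of 1.2 all move to the middle bin, leaving a single issue in the lowest bin.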

The paper identifies several setting-specific factors that may contribute to the slowdown. High developer familiarity with the repositories and the size and maturity of the repositories may limit the effectiveness of current AI tools. Low reliability of AI-generated code and the time spent waiting on AI outputs may also contribute to productivity losses.

Future research should focus on improving AI reliability, reducing latency, and developing better elicitation strategies, that is, better ways of prompting and scaffolding AI systems to draw out their capabilities. Domain-specific fine-tuning and increased inference compute/tokens may also improve AI performance in software development tasks. The paper suggests that future AI systems could potentially speed up developers by addressing these limitations.

Conclusion

The paper provides empirical evidence that current AI tools can slow down experienced open-source developers. This finding underscores the importance of rigorous evaluation methodologies and highlights the need for further research to improve AI capabilities and address the factors limiting AI performance in real-world software development settings. The observed disconnect between perceived and actual AI impact calls for a more nuanced understanding of AI's role in enhancing developer productivity.