- The paper introduces a novel multi-scale, multi-modal fusion approach that uses Restricted Boltzmann Machines (RBMs) for modality completion and task-targeted gates for multi-task learning.

- The methodology uses multi-grained encoders and a dynamic fusion module to integrate data sampled at different resolutions and to reduce feature redundancy.

- Empirical results show lower MAPE and RMSE, and higher classification accuracy and F1 scores, than baseline models such as LSTM, GRU, and Transformer.

MSMF: Multi-Scale Multi-Modal Fusion for Enhanced Stock Market Prediction

Introduction

The paper "MSMF: Multi-Scale Multi-Modal Fusion for Enhanced Stock Market Prediction" proposes a stock market forecasting approach that fuses multiple data modalities. Traditional stock prediction models struggle with data that comes from heterogeneous sources sampled at different frequencies. MSMF addresses this by integrating multi-scale and multi-modal feature extraction, with an emphasis on balancing complementary information against redundancy. The framework combines modality completion, multi-scale alignment, and progressive fusion to improve prediction accuracy.

Methodology

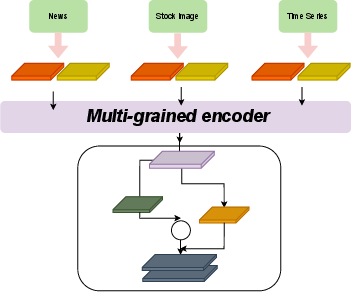

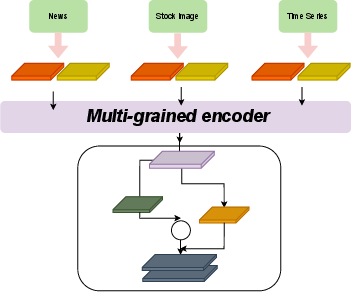

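The MSMF architecture combines data from several modalities. It is built around components that each target a specific challenge in stock prediction:

- modality completion for missing or unevenly sampled data;
- multi-scale encoding for features at different granularities;
- multi-modal fusion that limits redundancy;
- multi-task learning across related prediction tasks.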

Modality Completion

The approach uses Restricted Boltzmann Machines (RBMs) to complete missing modality data, which makes it possible to integrate heterogeneous inputs. By modeling the joint distribution of the modalities, it compensates for discrepancies in sampling times and fills data gaps, so the predictor receives more complete and reliable inputs.
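The summary does not specify which RBM variant MSMF uses. What follows is a minimal sketch of RBM-based completion under several assumptions:

- binary (Bernoulli) units;
- one joint visible vector formed by concatenating two toy modalities;
- training with one-step contrastive divergence (CD-1);
- completion by mean-field updates of the missing units, with the observed units clamped.

The class name BernoulliRBM, the toy data, and all hyperparameters are hypothetical. Continuous price data would more likely call for Gaussian visible units.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


class BernoulliRBM:
    """Hypothetical binary RBM over a joint visible vector [modality A | modality B]."""

    def __init__(self, n_visible, n_hidden, seed=0):
        self.rng = random.Random(seed)
        self.n_visible = n_visible
        self.n_hidden = n_hidden
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b_v = [0.0] * n_visible  # visible biases
        self.b_h = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v):
        return [sigmoid(self.b_h[j] + sum(v[i] * self.W[i][j] for i in range(self.n_visible)))
                for j in range(self.n_hidden)]

    def visible_probs(self, h):
        return [sigmoid(self.b_v[i] + sum(h[j] * self.W[i][j] for j in range(self.n_hidden)))
                for i in range(self.n_visible)]

    def sample(self, probs):
        return [1.0 if self.rng.random() < p else 0.0 for p in probs]

    def train_cd1(self, data, epochs=300, lr=0.1):
        """Contrastive divergence with a single Gibbs step (CD-1)."""
        for _ in range(epochs):
            for v0 in data:
                h0 = self.hidden_probs(v0)
                v1 = self.visible_probs(self.sample(h0))  # reconstruction
                h1 = self.hidden_probs(v1)
                for i in range(self.n_visible):
                    for j in range(self.n_hidden):
                        self.W[i][j] += lr * (v0[i] * h0[j] - v1[i] * h1[j])
                    self.b_v[i] += lr * (v0[i] - v1[i])
                for j in range(self.n_hidden):
                    self.b_h[j] += lr * (h0[j] - h1[j])

    def complete(self, observed, missing_idx, steps=20):
        """Mean-field fill-in of missing visible units; observed units stay clamped."""
        v = list(observed)
        for i in missing_idx:
            v[i] = 0.5  # uninformative starting value for each missing unit
        for _ in range(steps):
            v_new = self.visible_probs(self.hidden_probs(v))
            for i in missing_idx:
                v[i] = v_new[i]  # only the missing units are updated
        return [v[i] for i in missing_idx]


# Toy data: 4 bits of a "price" modality followed by 4 bits of a "news" modality.
data = [[1, 1, 0, 0, 1, 1, 0, 0],
        [0, 0, 1, 1, 0, 0, 1, 1]]
rbm = BernoulliRBM(n_visible=8, n_hidden=4)
rbm.train_cd1(data)
# The news modality (indices 4-7) is missing; its values are inferred from price.
filled = rbm.complete([1, 1, 0, 0, 0, 0, 0, 0], missing_idx=[4, 5, 6, 7])
```

The returned values are probabilities for the missing units. They could be thresholded, or passed on as soft inputs to the downstream encoders.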

Multi-Scale Encoding

The architecture employs multi-scale encoders that extract features at different granularities, so the model can capture both local variations and global trends in the data. The fine- and coarse-grained features are concatenated, which lets the two views contribute complementary information to the fusion stage.
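The actual MSMF encoders are learned networks. As a simplified stand-in, the sketch below uses non-overlapping average pooling at several window sizes to produce fine and coarse views of one series, then concatenates them. The function names and the window sizes (1, 2, 4) are illustrative assumptions.

```python
def avg_pool(series, window):
    """Non-overlapping average pooling; a trailing partial window is dropped."""
    return [sum(series[i:i + window]) / window
            for i in range(0, len(series) - window + 1, window)]


def multi_scale_features(series, windows=(1, 2, 4)):
    """Concatenate fine (small window) and coarse (large window) views of a series."""
    features = []
    for w in windows:
        features.extend(avg_pool(series, w))
    return features


prices = [10.0, 12.0, 11.0, 13.0]
print(multi_scale_features(prices))  # prints [10.0, 12.0, 11.0, 13.0, 11.0, 12.0, 11.5]
```

Small windows preserve local fluctuations, and the largest window summarizes the overall level. This mirrors, in a very reduced form, the local/global split described above.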

Multi-Modal Fusion

The Multi-Scale Alignment and Blank Learning (MSA-BL) module is central to fusing information across modalities. It reduces conflicts and redundancy during feature integration, and it uses task-specific gating mechanisms to dynamically adjust the importance of each modality and granularity.
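The summary gives no equations for MSA-BL. The sketch below shows only the generic idea of gating, dynamically weighting modalities when fusing them. Softmax-normalized gate scores scale each modality's feature vector before summation. The function name gated_fusion and the fixed gate scores are hypothetical, and no alignment or "blank learning" step is modeled. In a trained model, the gate scores would be computed from the inputs, for example by a small learned layer.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def gated_fusion(modality_features, gate_scores):
    """Weighted sum of equal-length modality vectors with weights softmax(gate_scores)."""
    weights = softmax(gate_scores)
    dim = len(modality_features[0])
    fused = [0.0] * dim
    for w, feats in zip(weights, modality_features):
        for k in range(dim):
            fused[k] += w * feats[k]
    return fused, weights


price_feat = [1.0, 0.0]
news_feat = [0.0, 1.0]
fused, weights = gated_fusion([price_feat, news_feat], [0.0, 0.0])
print(fused)  # prints [0.5, 0.5]: equal scores weight both modalities equally
```

Raising one modality's score shifts the fused vector toward that modality. This is the behavior a gate uses to down-weight redundant or less informative inputs.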

Multi-Task Learning

The paper introduces Task-targeted Gates (TTG) to make predictions context-sensitive across multiple tasks: each task assigns its own weights to local and global features. An adaptive Task-targeted Prediction Layer (TTPL) then refines each task's prediction according to that task's requirements.
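To make the per-task weighting concrete, here is a hypothetical sketch. Each task owns a sigmoid gate that mixes local (fine) and global (coarse) features, followed by its own output head, which stands in for the TTPL. The task names, the TaskGate class, the fixed weights, and the linear and sigmoid heads are all illustrative assumptions, not the paper's formulation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


class TaskGate:
    """Hypothetical per-task gate: g * local + (1 - g) * global."""

    def __init__(self, weights, bias=0.0):
        self.weights = weights  # length = len(local) + len(global)
        self.bias = bias

    def __call__(self, local, global_):
        g = sigmoid(dot(self.weights, local + global_) + self.bias)
        mixed = [g * loc + (1.0 - g) * glo for loc, glo in zip(local, global_)]
        return mixed, g


# One gate and one head per task, so each task sets its own local/global balance.
gates = {
    "price_regression": TaskGate([0.0] * 4),                      # g = 0.5
    "movement_classification": TaskGate([0.0] * 4, bias=2.0),     # favors local
}
heads = {
    "price_regression": lambda z: dot([1.0, 1.0], z),                    # linear output
    "movement_classification": lambda z: sigmoid(dot([1.0, -1.0], z)),   # P(up)
}

local, global_ = [1.0, 0.0], [0.0, 1.0]
outputs = {}
for task, gate in gates.items():
    mixed, g = gate(local, global_)
    outputs[task] = (heads[task](mixed), g)
```

Because the gates are separate, the classification task here leans on local features while the regression task mixes both views evenly. Training would learn these balances instead of fixing them by hand.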

Figure 1: Overview of the MSMF architecture.

Results and Analysis

The experimental results support the effectiveness of MSMF across several stock market prediction tasks. Compared with existing models such as LSTM, GRU, and Transformer architectures, MSMF achieved lower Mean Absolute Percentage Error (MAPE) and Root Mean Square Error (RMSE), as well as higher classification accuracy and F1 scores for stock movement prediction.
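For reference, the four reported metrics can be computed as below. These are the standard textbook definitions. The summary does not state which variants the paper used (for example binary versus macro-averaged F1), so the binary F1 here is an assumption.

```python
import math


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error in percent; assumes no zero targets."""
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root Mean Square Error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1(y_true, y_pred, positive=1):
    """Binary F1: harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Movement labels: 1 = up, 0 = down.
print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))  # prints 0.75
```

Lower is better for MAPE and RMSE, and higher is better for accuracy and F1. This is why the results are described as reductions in the first two and gains in the last two.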

Ablation Studies

- Multi-Grained Encoder: Multi-scale encoders yielded higher accuracy and F1 scores than single-scale encoders.

- Modality Completion: The introduction of modality completion showed a significant reduction in prediction errors compared to traditional imputation methods.

- Multi-Modal Fusion: In ablations against simple concatenation, fusion through MSA-BL blended modality features more effectively.

- Multi-Task Learning: Training with auxiliary tasks in a multi-task framework improved model generalization, with clear gains attributed to the Multi-Granularity Gates.
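A common way to train a main task together with an auxiliary one is to minimize a weighted sum of their losses. The summary does not give the paper's objective. In the sketch below, treating price regression (MSE) as the main task, movement classification (binary cross-entropy) as the auxiliary task, and the weight aux_weight=0.5 are all assumptions.

```python
import math


def mse(y_true, y_pred):
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(labels, probs, eps=1e-12):
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


def multi_task_loss(price_true, price_pred, move_true, move_prob, aux_weight=0.5):
    """Main regression loss plus a weighted auxiliary classification loss."""
    return mse(price_true, price_pred) + aux_weight * binary_cross_entropy(move_true, move_prob)
```

Setting aux_weight to 0 recovers single-task training, which is roughly the comparison a multi-task ablation makes.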

Discussion

MSMF's architecture offers useful strategies for multi-modal data analysis. It handles varying sampling times, reduces feature redundancy, and optimizes feature interactions across scales and modalities, which makes it a robust tool for stock market prediction. Design choices such as modality completion and multi-task integration show how the model copes with the complex, real-world data challenges inherent in financial forecasting.

Conclusion

The MSMF architecture is a significant advance in multi-modal stock market prediction. It addresses key limitations of existing models through modality completion, dynamic feature gating, and multi-scale fusion, and achieves superior predictive performance. The approach lays a foundation for further work on multimodal analysis and could influence future AI research on complex data integration and dynamic prediction tasks. The robustness and predictive performance MSMF demonstrates suggest potential applications across diverse financial analytics settings.