X-posing Free Speech: Examining the Impact of Moderation Relaxation on Online Social Networks (2404.11465v2)

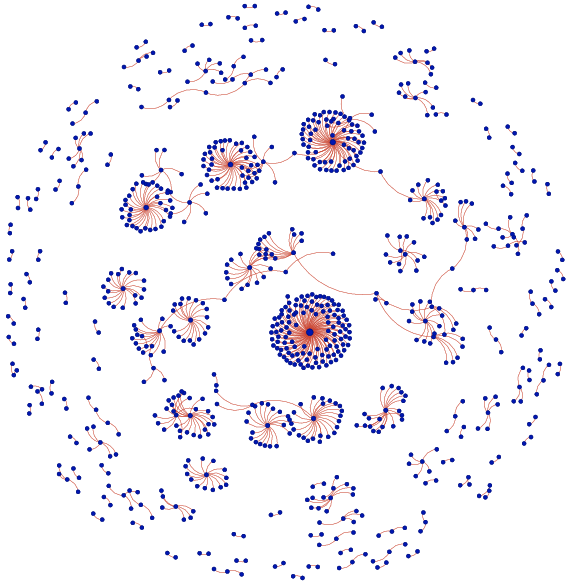

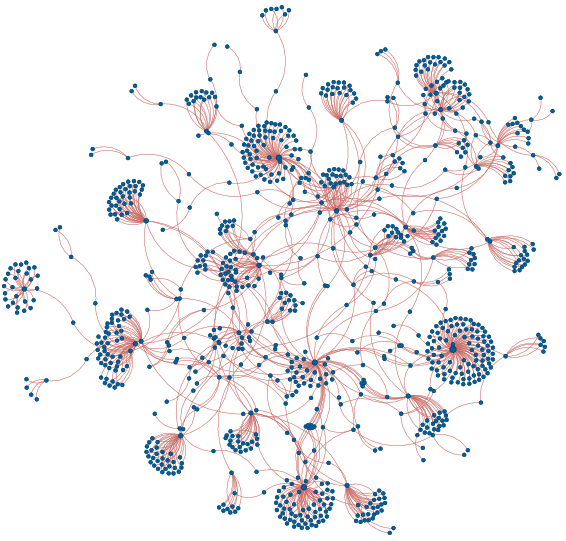

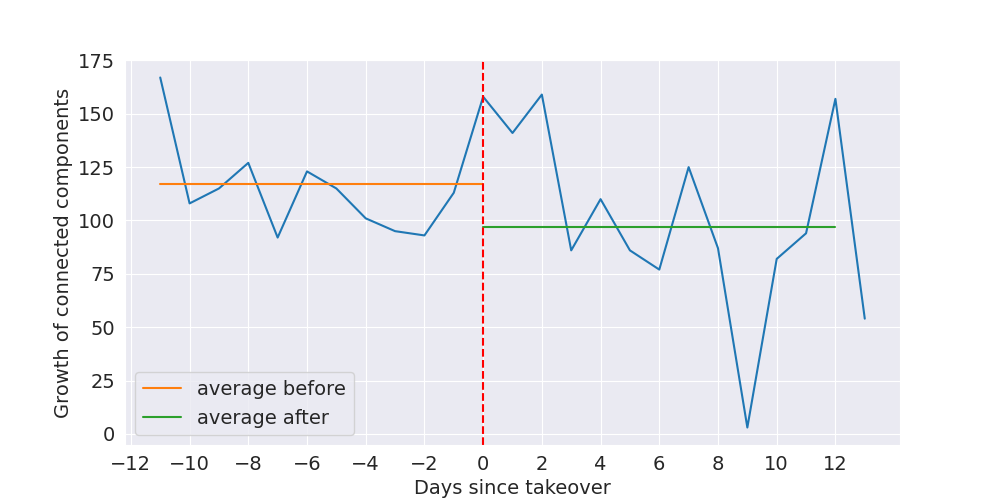

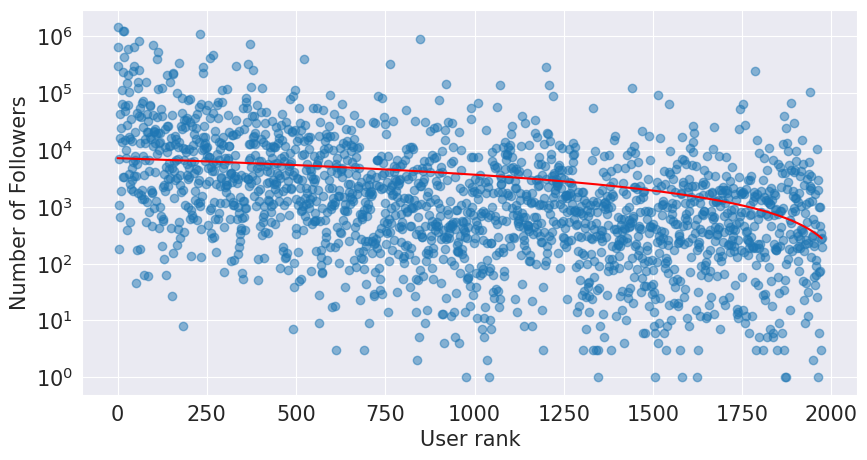

Abstract: We investigate the impact of free speech and the relaxation of moderation on online social media platforms using Elon Musk's takeover of Twitter as a case study. By curating a dataset of over 10 million tweets, our study employs a novel framework combining content and network analysis. Our findings reveal a significant increase in the distribution of certain forms of hate content, particularly targeting the LGBTQ+ community and liberals. Network analysis reveals the formation of cohesive hate communities facilitated by influential bridge users, with substantial growth in interactions hinting at increased hate production and diffusion. By tracking the temporal evolution of PageRank, we identify key influencers, primarily self-identified far-right supporters disseminating hate against liberals and woke culture. Ironically, embracing free speech principles appears to have enabled hate speech against the very concept of freedom of expression and free speech itself. Our findings underscore the delicate balance platforms must strike between open expression and robust moderation to curb the proliferation of hate online.
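The abstract mentions tracking the temporal evolution of PageRank to identify key influencers. As a rough illustration only, the sketch below computes PageRank separately for each time window of an interaction graph and reports the top-ranked account per window. The edge direction (the interacting user points to the account they amplify), the monthly window labels, the damping factor of 0.85, and the toy user names are all assumptions made for this example; the abstract does not specify how the authors built their graph or configured PageRank.

```python
from collections import defaultdict


def pagerank(edges, damping=0.85, iterations=100, tol=1e-10):
    """Power-iteration PageRank on a directed graph given as (source, target) pairs."""
    nodes = sorted({node for edge in edges for node in edge})
    if not nodes:
        return {}
    out_links = {node: [] for node in nodes}
    for src, dst in edges:
        out_links[src].append(dst)

    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        # Rank held by nodes without outgoing edges is spread uniformly.
        dangling = sum(rank[node] for node in nodes if not out_links[node])
        new_rank = {
            node: (1.0 - damping) / n + damping * dangling / n for node in nodes
        }
        for src, targets in out_links.items():
            if not targets:
                continue
            share = damping * rank[src] / len(targets)
            for dst in targets:
                new_rank[dst] += share
        delta = sum(abs(new_rank[node] - rank[node]) for node in nodes)
        rank = new_rank
        if delta < tol:
            break
    return rank


def pagerank_over_time(interactions, window_key):
    """Group (timestamp, source, target) records into windows and rank each window."""
    windows = defaultdict(list)
    for timestamp, src, dst in interactions:
        windows[window_key(timestamp)].append((src, dst))
    return {window: pagerank(edges) for window, edges in sorted(windows.items())}


# Hypothetical toy data: (window label, interacting user, amplified user).
interactions = [
    ("2022-09", "u1", "a"), ("2022-09", "u2", "a"), ("2022-09", "u3", "b"),
    ("2022-11", "u1", "b"), ("2022-11", "u2", "b"),
    ("2022-11", "u3", "b"), ("2022-11", "a", "b"),
]

timeline = pagerank_over_time(interactions, window_key=lambda ts: ts)
top_by_window = {window: max(r, key=r.get) for window, r in timeline.items()}
print(top_by_window)
```

Comparing each account's score across consecutive windows shows who gains or loses influence over time. On real data one would likely use a graph library and weight edges by interaction counts, which this sketch omits.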

