Exploring Prompt Engineering Practices in the Enterprise

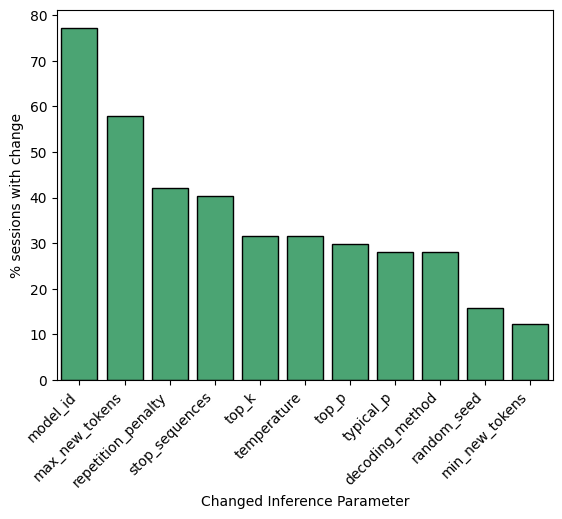

Abstract: Interaction with LLMs is primarily carried out via prompting. A prompt is a natural language instruction designed to elicit certain behaviour or output from a model. In theory, natural language prompts enable non-experts to interact with and leverage LLMs. However, for complex tasks and tasks with specific requirements, prompt design is not trivial. Creating effective prompts requires skill and knowledge, as well as significant iteration in order to determine model behavior, and guide the model to accomplish a particular goal. We hypothesize that the way in which users iterate on their prompts can provide insight into how they think prompting and models work, as well as the kinds of support needed for more efficient prompt engineering. To better understand prompt engineering practices, we analyzed sessions of prompt editing behavior, categorizing the parts of prompts users iterated on and the types of changes they made. We discuss design implications and future directions based on these prompt engineering practices.

- Chainforge: An open-source visual programming environment for prompt engineering. In Adjunct Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology, pages 1–3, 2023.

- Promptsource: An integrated development environment and repository for natural language prompts. arXiv preprint arXiv:2202.01279, 2022.

- Beyond accuracy: The role of mental models in human-ai team performance. In Proceedings of the AAAI conference on human computation and crowdsourcing, volume 7, pages 2–11, 2019.

- User intent recognition and satisfaction with large language models: A user study with chatgpt. arXiv preprint arXiv:2402.02136, 2024.

- The foundation model transparency index. arXiv preprint arXiv:2310.12941, 2023.

- Can (a) i have a word with you? a taxonomy on the design dimensions of ai prompts. 2024.

- Language models are few-shot learners. Advances in neural information processing systems, 33:1877–1901, 2020.

- Early llm-based tools for enterprise information workers likely provide meaningful boosts to productivity. 2023.

- How to prompt? opportunities and challenges of zero-and few-shot learning for human-ai interaction in creative applications of generative models. arXiv preprint arXiv:2209.01390, 2022.

- Mental models of ai agents in a cooperative game setting. In Proceedings of the 2020 chi conference on human factors in computing systems, pages 1–12, 2020.

- Discovering the syntax and strategies of natural language programming with generative language models. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, pages 1–19, 2022.

- Understanding users’ dissatisfaction with chatgpt responses: Types, resolving tactics, and the effect of knowledge level. arXiv preprint arXiv:2311.07434, 2023.

- Guiding large language models via directional stimulus prompting. Advances in Neural Information Processing Systems, 36, 2024.

- Ai transparency in the age of llms: A human-centered research roadmap. arXiv preprint arXiv:2306.01941, 2023.

- Zhicheng Lin. Ten simple rules for crafting effective prompts for large language models. Available at SSRN 4565553, 2023.

- “what it wants me to say”: Bridging the abstraction gap between end-user programmers and code-generating large language models. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, pages 1–31, 2023.

- Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Computing Surveys, 55(9):1–35, 2023.

- Propane: Prompt design as an inverse problem. arXiv preprint arXiv:2311.07064, 2023.

- Promptaid: Prompt exploration, perturbation, testing and iteration using visual analytics for large language models. arXiv preprint arXiv:2304.01964, 2023.

- Python Software Foundation. difflib: Helpers for Computing String Similarities and Differences. Python Software Foundation, 2024.

- Transforming boundaries: how does chatgpt change knowledge work? Journal of Business Strategy, 2023.

- Cataloging prompt patterns to enhance the discipline of prompt engineering. URL: https://www. dre. vanderbilt. edu/~ schmidt/PDF/ADA_Europe_Position_Paper. pdf [accessed 2023-09-25], 2023.

- Interactive and visual prompt engineering for ad-hoc task adaptation with large language models. IEEE transactions on visualization and computer graphics, 29(1):1146–1156, 2022.

- Investigating explainability of generative ai for code through scenario-based design. In 27th International Conference on Intelligent User Interfaces, pages 212–228, 2022.

- Promptagent: Strategic planning with language models enables expert-level prompt optimization. arXiv preprint arXiv:2310.16427, 2023.

- Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35:24824–24837, 2022.

- A prompt pattern catalog to enhance prompt engineering with chatgpt. arXiv preprint arXiv:2302.11382, 2023.

- Promptchainer: Chaining large language model prompts through visual programming. In CHI Conference on Human Factors in Computing Systems Extended Abstracts, pages 1–10, 2022.

- Why johnny can’t prompt: how non-ai experts try (and fail) to design llm prompts. In Proceedings of the 2023 CHI Conference on Human Factors in Computing Systems, pages 1–21, 2023.

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.