The paper "Efficient Prompting Methods for LLMs: A Survey" presents a comprehensive overview of methods designed to improve the efficiency of prompting LLMs. Prompting has become a mainstream paradigm for adapting LLMs to specific Natural Language Processing (NLP) tasks, enabling in-context learning. However, the use of lengthy and complex prompts increases computational costs and necessitates manual design efforts. Efficient prompting methods aim to address these challenges by reducing computational burden and optimizing prompt design.

The paper categorizes efficient prompting methods into two main approaches:

- Prompting with efficient computation

- Prompting with efficient design

The paper reviews advances in efficient prompting and highlights potential future research directions.

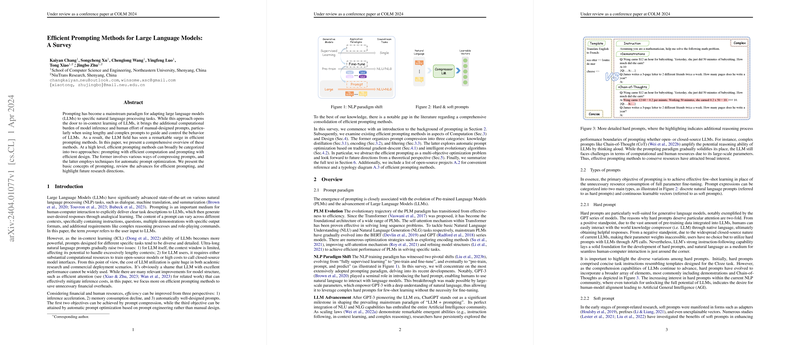

The paper begins with an introduction to the background of prompting. It highlights the evolution of Pre-trained Language Models (PLMs) and the shift in NLP paradigms from fully supervised learning to "pre-train and fine-tune," and eventually to "pre-train, prompt, and predict." The development of Generative Pre-trained Transformer 3 (GPT-3) and ChatGPT solidified the "LLM + prompting" paradigm. This has led to growing interest in effective prompting methods that conserve resources.

Prompt expressions are categorized into hard prompts (discrete natural language prompts) and soft prompts (continuous learnable vectors). Hard prompts suit generative LLMs such as the GPT series. Soft prompts, such as adapters and prefixes, make training efficient by placing learnable vectors at different embedding positions. Hard prompts face two challenges: lengthy prompt content and the difficulty of prompt design. Lengthy prompts can strain the limited context window of LLMs and increase computational costs. Because natural language is discrete, manual prompt design is challenging and relies heavily on empirical knowledge and human subjectivity.
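The hard/soft distinction can be made concrete with a small pure-Python sketch. It makes several assumptions. A toy embedding table stands in for the LLM's input layer. Only one soft-prompt placement is shown: prepending vectors to the input embeddings, as in prompt tuning. Adapters and prefixes insert their vectors elsewhere in the model. All names (`make_embedding_table`, `with_hard_prompt`, `with_soft_prompt`) are hypothetical, and soft-prompt training is omitted. The sketch also illustrates the context-window cost: every hard-prompt token occupies a position, while the soft prompt occupies a fixed number k.

```python
import random

DIM = 4  # toy embedding width; real LLMs use thousands of dimensions

def make_embedding_table(vocab, rng):
    """Toy stand-in for an LLM's frozen input-embedding layer."""
    return {tok: [rng.uniform(-1, 1) for _ in range(DIM)] for tok in sorted(vocab)}

def embed(tokens, table):
    """Hard-prompt path: each discrete token is looked up in the embedding table."""
    return [table[t] for t in tokens]

def with_hard_prompt(prompt_tokens, input_tokens, table):
    """The natural-language prompt is embedded exactly like the user input."""
    return embed(prompt_tokens + input_tokens, table)

def with_soft_prompt(soft_prompt, input_tokens, table):
    """Soft-prompt path: k continuous vectors are prepended in embedding space.
    They need not match any vocabulary token; in practice they are trained
    by gradient descent while the LLM stays frozen (training omitted here)."""
    return soft_prompt + embed(input_tokens, table)

rng = random.Random(0)
hard_prompt = "You are a helpful assistant . Classify the sentiment of the review".split()
user_input = "great movie".split()
table = make_embedding_table(set(hard_prompt + user_input), rng)
soft_prompt = [[rng.gauss(0.0, 0.02) for _ in range(DIM)] for _ in range(2)]  # k = 2

hard_seq = with_hard_prompt(hard_prompt, user_input, table)
soft_seq = with_soft_prompt(soft_prompt, user_input, table)
print(len(hard_seq), len(soft_seq))  # context positions used: 14 4
```

Here the 12-token instruction shrinks to k = 2 positions. The trade-off is that the soft vectors are not human-readable and are typically learned per task.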

The paper then discusses "Prompting with Efficient Computation." This line of work aims to alleviate the economic burden that lengthy prompts impose on both open-source and closed-source LLMs. It relies on prompt compression, which extracts the essential information from the original prompt while maintaining comparable performance. Compression techniques are categorized into text-to-vector level and text-to-text level approaches.

- Knowledge Distillation (KD): This classic compression method trains a lightweight student model to mimic a better-performing teacher model. KD methods use soft prompt tuning to compress the natural language information of a hard prompt into the LLM. The loss function is typically the Kullback-Leibler (KL) divergence between the teacher and student distributions:

$\mathcal{L}_{\text{KD}} = D_{\text{KL}}\left( p_{T}(y \mid P, x) \,\|\, p_{S}(y \mid x) \right)$

where:

- $\mathcal{L}_{\text{KD}}$ is the loss function for the student model

- $p_{S}(y \mid x)$ is the probability distribution of the student model, whose tuned soft prompt stands in for the hard prompt

- $P$ is the hard prompt

- $p_{T}(y \mid P, x)$ is the probability distribution of the teacher model given the hard prompt

- $y$ is the output

- $x$ is the input
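Here is a minimal sketch of the KD objective. It assumes toy output distributions over a three-word vocabulary. The teacher distribution stands for the LLM conditioned on the full hard prompt. For brevity, the sketch optimizes the student's logits directly. The surveyed methods instead reach the student distribution by tuning a soft prompt. The helper names are hypothetical. The update uses a standard identity: the gradient of KL(teacher ‖ softmax(z)) with respect to the logits z is softmax(z) minus the teacher distribution.

```python
import math

def softmax(logits):
    """Numerically stable softmax."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q):
    """D_KL(p || q) for discrete distributions over the same outputs (q must be > 0)."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def distill(teacher_probs, student_logits, lr=0.5, steps=300):
    """Gradient descent on D_KL(teacher || softmax(logits)).
    Its gradient w.r.t. the logits is softmax(logits) - teacher_probs."""
    z = list(student_logits)
    for _ in range(steps):
        q = softmax(z)
        z = [zi - lr * (qi - pi) for zi, qi, pi in zip(z, q, teacher_probs)]
    return z

# Hypothetical output distributions over a 3-word vocabulary.
teacher = softmax([2.0, 0.5, -1.0])  # teacher: LLM conditioned on the full hard prompt
student0 = [0.0, 0.0, 0.0]           # student: starts from a uniform distribution
before = kl_divergence(teacher, softmax(student0))
after = kl_divergence(teacher, softmax(distill(teacher, student0)))
print(f"KL before: {before:.4f}  after: {after:.6f}")
```

The KL term is zero only when the student reproduces the teacher's distribution. Driving it down therefore transfers the behavior the hard prompt induced, without the student having to read that prompt at inference time.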

- Encoding: This text-to-vector level compression fine-tunes LMs with a cross-entropy objective. The extensive information in hard prompts is thereby compressed into concise vectors that the model can access. The paper also notes that semantic information from all modalities in the context is valuable for prompting LLMs.

- Filtering: This text-to-text level compression uses a lightweight LM to evaluate the information entropy of different lexical units in the prompt. It then filters out redundant information to simplify user prompts. The amount of information is quantified by "self-information," $I(x) = -\log P(x)$: the less probable a unit is under the LM, the more information it carries.
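Self-information is I(t) = -log p(t), so rare tokens carry more information than common ones. The sketch below is a simplified filter that rests on two assumptions. First, a hypothetical add-one-smoothed unigram model built from a tiny corpus replaces the lightweight LM. A real filter would instead condition each token on its preceding context with a causal LM. Second, the sketch ranks single whitespace tokens rather than larger lexical units. `filter_prompt` and `keep_ratio` are illustrative names.

```python
import math
from collections import Counter

def self_information(tokens, counts, total, vocab_size):
    """I(t) = -log2 p(t), using add-one-smoothed unigram probabilities."""
    return [-math.log2((counts[t] + 1) / (total + vocab_size)) for t in tokens]

def filter_prompt(prompt, corpus, keep_ratio=0.5):
    """Keep the most informative tokens (highest self-information), in original order."""
    counts = Counter(corpus.split())
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # one extra slot shared by all unseen tokens
    tokens = prompt.split()
    info = self_information(tokens, counts, total, vocab_size)
    k = max(1, round(len(tokens) * keep_ratio))
    # sorted() is stable even with reverse=True, so ties keep their left-to-right order
    ranked = sorted(range(len(tokens)), key=lambda i: info[i], reverse=True)
    keep = sorted(ranked[:k])  # restore the original token order
    return " ".join(tokens[i] for i in keep)

corpus = "the the the the a a on on cat mat"
print(filter_prompt("the cat sat on the mat", corpus))  # → cat sat mat
```

Frequent function words such as "the" and "on" receive the lowest scores and are dropped first. The unseen word "sat" receives the highest score.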

"Prompting with Efficient Design" addresses the growing complexity of prompt content by automating prompt engineering. The goal is to find the natural language prompt within a given search space that maximizes task accuracy. The paper examines this problem from two perspectives, traditional mathematical optimization and intelligent algorithmic optimization. Accordingly, it divides this section into gradient-based and evolution-based approaches.

- Gradient-based methods: These methods rely on gradient descent, the standard algorithm for updating neural network parameters. Because hard prompts are discrete, researchers have developed separate gradient-based optimization frameworks for open-source and closed-source models. For open-source models, fine-tuning can use the real gradient. For closed-source models, whose gradients are inaccessible, the gradient can only be imitated.

- Evolution-based methods: These methods simulate the biological principle of "survival of the fittest." They perform random search by sampling candidates and scoring them with the objective function. This approach exploits the diversity of samples in the search space and discovers the optimization direction through iteration.
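The evolutionary loop can be sketched in a few lines. Everything here is hypothetical. `POOL` is a made-up set of instruction fragments. `fitness` is a toy scorer standing in for the task accuracy that a real method would measure by querying the LLM on a development set. Each generation keeps the fitter half of the population (survival of the fittest) and fills the rest with children produced by crossover and point mutation.

```python
import random

# Hypothetical building blocks for candidate prompts.
POOL = ["Think step by step.", "Answer briefly.", "You are an expert.",
        "Use the examples.", "Ignore the examples.", "Be creative.",
        "Check your answer.", "Respond in JSON."]

# Toy stand-in for dev-set accuracy; a real method would query the LLM here.
HELPFUL = {"Think step by step.", "Check your answer.", "Use the examples."}

def fitness(prompt):
    """Count distinct helpful fragments, minus a small length penalty."""
    hits = sum(1 for frag in set(prompt) if frag in HELPFUL)
    return hits - 0.1 * len(prompt)

def mutate(prompt, rng):
    """Point mutation: replace one randomly chosen fragment."""
    child = list(prompt)
    child[rng.randrange(len(child))] = rng.choice(POOL)
    return child

def crossover(a, b, rng):
    """One-point crossover: a prefix of one parent plus the remaining suffix of the other."""
    cut = rng.randrange(1, min(len(a), len(b)) + 1)
    return a[:cut] + b[cut:]

def evolve(pop_size=8, length=3, generations=20, seed=0):
    rng = random.Random(seed)
    population = [[rng.choice(POOL) for _ in range(length)] for _ in range(pop_size)]
    history = []  # best fitness at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        survivors = population[: pop_size // 2]  # survival of the fittest
        children = []
        while len(survivors) + len(children) < pop_size:
            a, b = rng.sample(survivors, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = survivors + children
    return max(population, key=fitness), history

best, history = evolve()
print(best, round(fitness(best), 2))
```

The survivors are carried over unchanged, so the best fitness never decreases from one generation to the next. The random choice of parents and mutations supplies the diversity that lets the search explore new regions of the prompt space.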

Finally, the paper abstracts the efficient prompting paradigm into a multi-objective optimization problem: compress prompts to reduce computational complexity while optimizing LLM task accuracy. The overall optimization objective is defined as:

$\mathcal{F}_{\text{total}} = \lambda_1 \cdot \mathcal{F}_{\text{compression}}(\widetilde{X}) + \lambda_2 \cdot \mathcal{F}_{\text{accuracy}}(\Theta)$

where:

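- $\mathcal{F}_{\text{total}}$ is the total optimization objective

- $\mathcal{F}_{\text{compression}}$ is the objective for prompt compression

- $\mathcal{F}_{\text{accuracy}}$ is the objective for task accuracy

- $\widetilde{X}$ denotes the compressed prompts

- $\Theta$ denotes the accessible parameters

- $\lambda_1$ and $\lambda_2$ are weighting factors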

$\mathcal{F}_{\text{compression}}(\widetilde{X}) = \min \mathcal{D}\left( Y\left(\widetilde{X} \mid \arg\max I(\widetilde{X})\right),\ Y(X) \right)$

where:

- $\mathcal{D}$ denotes the discrepancy between the outputs before and after prompt compression

- $I(\cdot)$ denotes the information entropy metric that quantifies the amount of information

- $Y(\cdot)$ is the output

- $X$ is the input (the original, uncompressed prompt)
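The compression term can be read as a two-step recipe. First, choose the compressed prompt that retains the most information under a length budget. Then measure how far the model's output on that prompt drifts from its output on the original prompt. The sketch below makes several assumptions. It uses a hypothetical per-token probability table for self-information (`TOKEN_PROB`) and a toy sentiment scorer in place of the LLM (`model_output`). It takes total variation distance as the discrepancy. It evaluates a single budget and leaves the outer minimization over budgets or compression strategies to the caller. This summary does not specify the accuracy term, so `total_objective` simply combines whatever value is passed in with the two weights. Treating that value as something to minimize, such as task error, is an assumption.

```python
import math
from itertools import combinations

# Hypothetical per-token probabilities from a small LM (used for self-information).
TOKEN_PROB = {"please": 0.2, "kindly": 0.05, "summarize": 0.01, "the": 0.3,
              "following": 0.1, "review": 0.02, "movie": 0.03, "was": 0.2,
              "terrible": 0.005}

# Hypothetical toy "LLM": a linear sentiment scorer over tokens.
WEIGHTS = {"terrible": -3.0, "movie": 0.2, "review": 0.1}

def model_output(tokens):
    """Y(.): returns [p(positive), p(negative)] for a token list."""
    score = sum(WEIGHTS.get(t, 0.0) for t in tokens)
    p_pos = 1.0 / (1.0 + math.exp(-score))
    return [p_pos, 1.0 - p_pos]

def information(tokens):
    """I(.): total self-information, the sum of -log2 p(t) over the tokens."""
    return sum(-math.log2(TOKEN_PROB[t]) for t in tokens)

def discrepancy(p, q):
    """D(.,.): total variation distance between two output distributions."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

def compression_objective(prompt, budget):
    """Keep the budget-sized, order-preserving sub-prompt with maximal information,
    then return it with its output discrepancy from the full prompt."""
    tokens = prompt.split()
    subsets = combinations(range(len(tokens)), budget)  # brute force: fine for toy sizes
    best = max(subsets, key=lambda idx: information([tokens[i] for i in idx]))
    compressed = [tokens[i] for i in best]
    return compressed, discrepancy(model_output(compressed), model_output(tokens))

def total_objective(f_compression, f_accuracy, lam1=0.5, lam2=0.5):
    """Weighted sum lam1 * F_compression + lam2 * F_accuracy (default weights are arbitrary)."""
    return lam1 * f_compression + lam2 * f_accuracy

PROMPT = "please kindly summarize the following review the movie was terrible"
for budget in (4, 2):
    compressed, d = compression_objective(PROMPT, budget)
    print(budget, compressed, round(d, 4))
```

With a budget of four tokens, the filler words are dropped and the toy output is unchanged (discrepancy 0.0). A budget of two also drops "review" and "movie", which carry small weights in the toy scorer, so the discrepancy becomes positive.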

The paper concludes by summarizing efficient prompting methods for LLMs, highlighting their connections and abstracting these approaches from a theoretical perspective. It also provides a list of open-source projects and a typology diagram to overview the efficient prompting field.